Flink SQL Client Fails to Start: A Java Version Problem

Posted by 宝哥大数据


Starting the Flink SQL Client fails with the following error:

[chb@test1 flink-1.12.1]$ ./bin/sql-client.sh embedded

Setting HBASE_CONF_DIR=/etc/hbase/conf because no HBASE_CONF_DIR was set.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/flink-1.12.1/lib/log4j-slf4j-impl-2.12.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

No default environment specified.

Searching for '/opt/flink-1.12.1/conf/sql-client-defaults.yaml'...found.

Reading default environment from: file:/opt/flink-1.12.1/conf/sql-client-defaults.yaml

No session environment specified.

2021-08-09 15:02:09,324 INFO org.apache.hadoop.hive.conf.HiveConf [] - Found configuration file null

2021-08-09 15:02:16,169 ERROR org.apache.hadoop.hive.metastore.utils.MetaStoreUtils [] - Got exception: java.lang.ClassCastException class [Ljava.lang.Object; cannot be cast to class [Ljava.net.URI; ([Ljava.lang.Object; and [Ljava.net.URI; are in module java.base of loader 'bootstrap')

java.lang.ClassCastException: class [Ljava.lang.Object; cannot be cast to class [Ljava.net.URI; ([Ljava.lang.Object; and [Ljava.net.URI; are in module java.base of loader 'bootstrap')

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.resolveUris(HiveMetaStoreClient.java:263) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:183) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at jdk.internal.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:?]

at jdk.internal.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:?]

at jdk.internal.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:?]

at java.lang.reflect.Constructor.newInstance(Constructor.java:490) ~[?:?]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:?]

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:?]

at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:?]

at java.lang.reflect.Method.invoke(Method.java:566) ~[?:?]

at org.apache.flink.table.catalog.hive.client.HiveShimV310.getHiveMetastoreClient(HiveShimV310.java:112) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.createMetastoreClient(HiveMetastoreClientWrapper.java:274) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.<init>(HiveMetastoreClientWrapper.java:80) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientFactory.create(HiveMetastoreClientFactory.java:32) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.catalog.hive.HiveCatalog.open(HiveCatalog.java:265) ~[flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:186) ~[flink-table_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.registerCatalog(TableEnvironmentImpl.java:351) ~[flink-table_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$null$5(ExecutionContext.java:685) ~[flink-sql-client_2.11-1.12.1.jar:1.12.1]

at java.util.HashMap.forEach(HashMap.java:1336) ~[?:?]

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$initializeCatalogs$6(ExecutionContext.java:681) ~[flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.gateway.local.ExecutionContext.wrapClassLoader(ExecutionContext.java:265) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.gateway.local.ExecutionContext.initializeCatalogs(ExecutionContext.java:677) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.gateway.local.ExecutionContext.initializeTableEnvironment(ExecutionContext.java:565) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.gateway.local.ExecutionContext.<init>(ExecutionContext.java:187) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.gateway.local.ExecutionContext.<init>(ExecutionContext.java:138) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.gateway.local.ExecutionContext$Builder.build(ExecutionContext.java:961) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.gateway.local.LocalExecutor.openSession(LocalExecutor.java:225) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:108) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:201) [flink-sql-client_2.11-1.12.1.jar:1.12.1]

2021-08-09 15:02:16,175 ERROR org.apache.hadoop.hive.metastore.utils.MetaStoreUtils [] - Converting exception to MetaException

Exception in thread "main" org.apache.flink.table.client.SqlClientException: Unexpected exception. This is a bug. Please consider filing an issue.

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:215)

Caused by: org.apache.flink.table.client.gateway.SqlExecutionException: Could not create execution context.

at org.apache.flink.table.client.gateway.local.ExecutionContext$Builder.build(ExecutionContext.java:972)

at org.apache.flink.table.client.gateway.local.LocalExecutor.openSession(LocalExecutor.java:225)

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:108)

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:201)

Caused by: org.apache.flink.table.catalog.exceptions.CatalogException: Failed to create Hive Metastore client

at org.apache.flink.table.catalog.hive.client.HiveShimV310.getHiveMetastoreClient(HiveShimV310.java:114)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.createMetastoreClient(HiveMetastoreClientWrapper.java:274)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.<init>(HiveMetastoreClientWrapper.java:80)

at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientFactory.create(HiveMetastoreClientFactory.java:32)

at org.apache.flink.table.catalog.hive.HiveCatalog.open(HiveCatalog.java:265)

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:186)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.registerCatalog(TableEnvironmentImpl.java:351)

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$null$5(ExecutionContext.java:685)

at java.base/java.util.HashMap.forEach(HashMap.java:1336)

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$initializeCatalogs$6(ExecutionContext.java:681)

at org.apache.flink.table.client.gateway.local.ExecutionContext.wrapClassLoader(ExecutionContext.java:265)

at org.apache.flink.table.client.gateway.local.ExecutionContext.initializeCatalogs(ExecutionContext.java:677)

at org.apache.flink.table.client.gateway.local.ExecutionContext.initializeTableEnvironment(ExecutionContext.java:565)

at org.apache.flink.table.client.gateway.local.ExecutionContext.<init>(ExecutionContext.java:187)

at org.apache.flink.table.client.gateway.local.ExecutionContext.<init>(ExecutionContext.java:138)

at org.apache.flink.table.client.gateway.local.ExecutionContext$Builder.build(ExecutionContext.java:961)

... 3 more

Caused by: java.lang.reflect.InvocationTargetException

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.apache.flink.table.catalog.hive.client.HiveShimV310.getHiveMetastoreClient(HiveShimV310.java:112)

... 18 more

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:86)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)

... 23 more

Caused by: java.lang.reflect.InvocationTargetException

at java.base/jdk.internal.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at java.base/jdk.internal.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.base/java.lang.reflect.Constructor.newInstance(Constructor.java:490)

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84)

... 26 more

Caused by: MetaException(message:Got exception: java.lang.ClassCastException class [Ljava.lang.Object; cannot be cast to class [Ljava.net.URI; ([Ljava.lang.Object; and [Ljava.net.URI; are in module java.base of loader 'bootstrap'))

at org.apache.hadoop.hive.metastore.utils.MetaStoreUtils.logAndThrowMetaException(MetaStoreUtils.java:171)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.resolveUris(HiveMetaStoreClient.java:268)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:183)

... 31 more

Solution

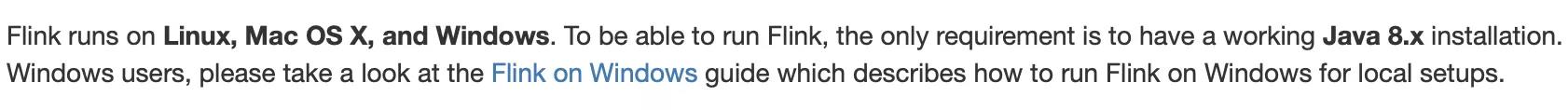

The tutorial on the official website contains the following note:

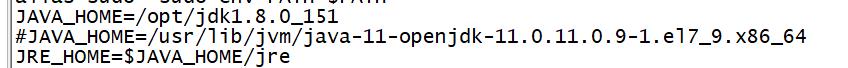

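The key is the phrase "have a working Java8.x installation". It contains no wording such as "or higher", so I inferred that this Flink setup only works on Java 8, whereas my JDK was Java 11. On a hunch I downgraded the JDK to Java 8 and started Flink again, and it worked. Note that the stack trace above fails inside the bundled Hive metastore client (HiveMetaStoreClient.resolveUris, loaded from flink-sql-connector-hive-3.1.2_2.11-1.12.1.jar), so the incompatibility may lie with the Hive connector rather than with Flink itself. If you run into a similar problem, check whether your JDK version matches.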
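To check which Java version a JVM actually reports, and to see one behavior that differs between Java 8 and later releases, a small standalone program can help. The sketch below is only an illustration: the class name JavaVersionCheck and the thrift://metastore-a:9083 style URIs are hypothetical, and the link to this particular failure is an assumption based on the ClassCastException message ([Ljava.lang.Object; cannot be cast to [Ljava.net.URI;), not something the tutorial or the Hive source quoted here confirms. What is certain is the JDK behavior itself: on Java 8, toArray() on a list created by Arrays.asList returns an array with the original component type, while on Java 9 and later it returns a plain Object[].

```java
import java.net.URI;
import java.util.Arrays;
import java.util.List;

public class JavaVersionCheck {

    // Returns the Java specification version of the running JVM,
    // for example "1.8" on Java 8 or "11" on Java 11.
    static String specVersion() {
        return System.getProperty("java.specification.version");
    }

    // Reports whether toArray() on an Arrays.asList view still has the
    // runtime type URI[]. This is true on Java 8 and false on Java 9+,
    // where the result is a plain Object[] and a cast to URI[] would fail.
    static boolean toArrayKeepsUriType() {
        // Hypothetical metastore URIs, used only for illustration.
        URI[] uris = {
            URI.create("thrift://metastore-a:9083"),
            URI.create("thrift://metastore-b:9083")
        };
        List<URI> list = Arrays.asList(uris);
        Object[] copy = list.toArray();
        return copy instanceof URI[];
    }

    public static void main(String[] args) {
        System.out.println("java.specification.version = " + specVersion());
        System.out.println("toArray() keeps URI[] type = " + toArrayKeepsUriType());
    }
}
```

Compile and run it with the same java binary that bin/sql-client.sh picks up. If the second line reports false, code that casts such a toArray() result to URI[] would throw a ClassCastException like the one in the log.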

