Monitoring host resources with metrics-server

Posted by 123坤

Using metrics-server to monitor host resources

Getting the metrics-server manifest

Download the manifest directly with wget:

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml

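Modifying the metrics-server manifest

No certificates were created for this environment, so metrics-server is configured to skip verification of the kubelet's TLS certificate by adding --kubelet-insecure-tls to the container arguments.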

metadata:
  labels:
    k8s-app: metrics-server
spec:
  containers:
  - args:
    - --cert-dir=/tmp
    - --secure-port=4443
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --kubelet-use-node-status-port
    - --metric-resolution=15s
    - --kubelet-insecure-tls   # added line
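The manual edit above can also be scripted. The following is a minimal sketch, not the author's method: the function name add_insecure_tls is hypothetical, and it assumes GNU sed and a manifest that contains the "- --metric-resolution=..." argument line shown above.

```shell
# Sketch: add --kubelet-insecure-tls to the metrics-server container args.
# Assumes GNU sed (for -i and for \n in the replacement text).
add_insecure_tls() {
  manifest="$1"
  # Do nothing if the flag is already present, so re-running is harmless.
  if grep -q -- '--kubelet-insecure-tls' "$manifest"; then
    return 0
  fi
  # Insert the new list item right after the --metric-resolution line,
  # reusing that line's indentation (captured as \1).
  sed -i 's/^\( *\)- --metric-resolution=.*$/&\n\1- --kubelet-insecure-tls/' "$manifest"
}

# Usage: add_insecure_tls components.yaml
```

Anchoring on an existing argument keeps the indentation correct without hard-coding a line number, which may differ between releases of the manifest.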

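Deploying the metrics-server manifest

Until metrics-server is deployed, kubectl top cannot be used, because the Metrics API is not available.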

[root@k8s-master01 kube-dashboard]# kubectl top nodes

W1225 14:15:31.293319 91003 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

error: Metrics API not available

[root@k8s-master01 kube-dashboard]# kubectl top pods

W1225 14:16:15.435419 91600 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

error: Metrics API not available

Deploy:

[root@k8s-master01 kube-dashboard]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

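After the manifest is applied, the pod fails to start: the image comes from a registry hosted abroad (k8s.gcr.io), and pulling it from this cluster times out.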

[root@k8s-master01 kube-dashboard]# kubectl describe pod -n kube-system metrics-server-8bb87844c-q558z

Name: metrics-server-8bb87844c-q558z

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Node: k8s-master03/192.168.10.103

Start Time: Sun, 25 Dec 2022 14:16:41 +0800

Labels: k8s-app=metrics-server

pod-template-hash=8bb87844c

Annotations: cni.projectcalico.org/containerID: 6245ec07a432de1a32d6a254d38396cc13415b9b83ebc71b5e00d6c818cb64d5

cni.projectcalico.org/podIP: 10.244.195.3/32

cni.projectcalico.org/podIPs: 10.244.195.3/32

Status: Pending

IP: 10.244.195.3

IPs:

IP: 10.244.195.3

Controlled By: ReplicaSet/metrics-server-8bb87844c

Containers:

metrics-server:

Container ID:

Image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1

Image ID:

Port: 4443/TCP

Host Port: 0/TCP

Args:

--cert-dir=/tmp

--secure-port=4443

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

--kubelet-use-node-status-port

--metric-resolution=15s

--kubelet-insecure-tls

State: Waiting

Reason: ImagePullBackOff

Ready: False

Restart Count: 0

Requests:

cpu: 100m

memory: 200Mi

Liveness: http-get https://:https/livez delay=0s timeout=1s period=10s #success=1 #failure=3

Readiness: http-get https://:https/readyz delay=20s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/tmp from tmp-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-5xc29 (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

tmp-dir:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

kube-api-access-5xc29:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 32m default-scheduler Successfully assigned kube-system/metrics-server-8bb87844c-q558z to k8s-master03

Normal SandboxChanged 32m kubelet Pod sandbox changed, it will be killed and re-created.

Warning Failed 31m (x3 over 32m) kubelet Failed to pull image "k8s.gcr.io/metrics-server/metrics-server:v0.6.1": rpc error: code = Unknown desc = Error response from daemon: Get "https://k8s.gcr.io/v2/": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

Warning Failed 31m (x3 over 32m) kubelet Error: ErrImagePull

Warning Failed 30m (x7 over 32m) kubelet Error: ImagePullBackOff

Normal Pulling 30m (x4 over 32m) kubelet Pulling image "k8s.gcr.io/metrics-server/metrics-server:v0.6.1"

Normal BackOff 2m26s (x119 over 32m) kubelet Back-off pulling image "k8s.gcr.io/metrics-server/metrics-server:v0.6.1"
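The full describe output is long; when only the failure reason matters, it can be read directly from the pod status. This is a small sketch under assumptions: the function names are hypothetical, and it relies on the k8s-app=metrics-server label and the kube-system namespace shown in the output above.

```shell
# Print "<pod name> <waiting reason>" for each metrics-server pod.
# The reason field is empty when the first container is not in the Waiting state.
pod_waiting_reasons() {
  kubectl get pods -n kube-system -l k8s-app=metrics-server \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.containerStatuses[0].state.waiting.reason}{"\n"}{end}'
}

# Print only the pods that are stuck on an image pull.
image_pull_failures() {
  pod_waiting_reasons |
    awk '$2 == "ErrImagePull" || $2 == "ImagePullBackOff" { print $1 }'
}

# Usage: image_pull_failures
```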

One workaround is to pull the image from an Alibaba Cloud mirror and retag it under the name the manifest expects.

[root@k8s-master01 kube-dashboard]# docker pull registry.cn-hangzhou.aliyuncs.com/zailushang/metrics-server:v0.6.0

v0.6.0: Pulling from zailushang/metrics-server

2df365faf0e3: Pull complete

aae7d2b1eda3: Pull complete

Digest: sha256:60a353297a2b74ef6eea928fe2d44efc8d8e6298441bf1184b2e3bc25ca1b3e2

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/zailushang/metrics-server:v0.6.0

registry.cn-hangzhou.aliyuncs.com/zailushang/metrics-server:v0.6.0

[root@k8s-master01 kube-dashboard]# docker tag registry.cn-hangzhou.aliyuncs.com/zailushang/metrics-server:v0.6.0 k8s.gcr.io/metrics-server/metrics-server:v0.6.0

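Then edit the Deployment so the change takes effect. Note that the mirror image pulled above is v0.6.0 while the manifest references v0.6.1, so the image tag in the Deployment has to match the locally tagged image; the different pod-template hash in the later output (5794ccf74d instead of 8bb87844c) is consistent with the pod template having been changed.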

[root@k8s-master01 kube-dashboard]# kubectl get deployment -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

calico-kube-controllers 1/1 1 1 14h

coredns 1/1 1 1 14h

metrics-server 0/1 1 0 47m

[root@k8s-master01 kube-dashboard]# kubectl scale deployment metrics-server -n kube-system --replicas=0

deployment.apps/metrics-server scaled

[root@k8s-master01 kube-dashboard]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-7cc8dd57d9-bm5vz 1/1 Running 0 14h

calico-node-9s8bl 1/1 Running 0 14h

calico-node-9wdj6 1/1 Running 0 14h

calico-node-d5qw7 1/1 Running 0 14h

calico-node-pbt77 1/1 Running 0 14h

coredns-675db8b7cc-49nh9 1/1 Running 0 14h

[root@k8s-master01 kube-dashboard]# kubectl scale deployment metrics-server -n kube-system --replicas=1

deployment.apps/metrics-server scaled

[root@k8s-master01 kube-dashboard]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-7cc8dd57d9-bm5vz 1/1 Running 0 14h

calico-node-9s8bl 1/1 Running 0 14h

calico-node-9wdj6 1/1 Running 0 14h

calico-node-d5qw7 1/1 Running 0 14h

calico-node-pbt77 1/1 Running 0 14h

coredns-675db8b7cc-49nh9 1/1 Running 0 14h

metrics-server-8bb87844c-4zcwn 0/1 ContainerCreating 0 2s

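The pull may still fail at this point: if the pod is scheduled onto a node that does not have the image locally, the kubelet again tries the default registry. The image therefore has to be pulled from the Alibaba Cloud mirror and retagged on every node.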
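Repeating the pull and retag on each node by hand is tedious, so it can be looped over SSH. The sketch below is an illustration only: it assumes passwordless SSH as root to every node and Docker as the container runtime, and the function name retag_on_nodes is hypothetical. The image names are the ones used in the commands above.

```shell
MIRROR_IMAGE="registry.cn-hangzhou.aliyuncs.com/zailushang/metrics-server:v0.6.0"
# Must match the image referenced by the metrics-server Deployment.
TARGET_IMAGE="k8s.gcr.io/metrics-server/metrics-server:v0.6.0"

# Pull the mirror image and retag it on every node given as an argument.
retag_on_nodes() {
  for node in "$@"; do
    # Assumption: root login over SSH works without a password prompt.
    ssh "root@${node}" "docker pull ${MIRROR_IMAGE} && docker tag ${MIRROR_IMAGE} ${TARGET_IMAGE}" \
      || echo "pull/retag failed on ${node}" >&2
  done
}

# Usage: retag_on_nodes k8s-master01 k8s-master02 k8s-master03 k8s-worker02
```

A failure on one node is reported on stderr and the loop continues, so the remaining nodes still get the image.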

Verification and authorization

[root@k8s-master01 kube-dashboard]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-7cc8dd57d9-bm5vz 1/1 Running 0 15h 10.244.195.1 k8s-master03 <none> <none>

calico-node-9s8bl 1/1 Running 0 15h 192.168.10.103 k8s-master03 <none> <none>

calico-node-9wdj6 1/1 Running 0 15h 192.168.10.104 k8s-worker02 <none> <none>

calico-node-d5qw7 1/1 Running 0 15h 192.168.10.101 k8s-master01 <none> <none>

calico-node-pbt77 1/1 Running 0 15h 192.168.10.102 k8s-master02 <none> <none>

coredns-675db8b7cc-49nh9 1/1 Running 0 15h 10.244.69.193 k8s-worker02 <none> <none>

metrics-server-5794ccf74d-2hjwh 1/1 Running 0 112s 10.244.69.195 k8s-worker02 <none> <none>

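kubectl top still returns an error at this point, because the apiserver cannot read the metrics. There are two ways around this: bind a system user to the cluster-administrator role, or generate a file, modify the apiserver configuration accordingly, and restart it.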

[root@k8s-master01 kube-dashboard]# kubectl top nodes

W1225 15:15:23.901393 12100 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

Error from server (ServiceUnavailable): the server is currently unable to handle the request (get nodes.metrics.k8s.io)

[root@k8s-master01 kube-dashboard]# kubectl top pods

W1225 15:17:54.176328 14249 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

Error from server (ServiceUnavailable): the server is currently unable to handle the request (get pods.metrics.k8s.io)
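The ServiceUnavailable error is returned by the aggregated API, and the APIService object created by the manifest (v1beta1.metrics.k8s.io) records whether the apiserver can currently reach metrics-server. A minimal sketch for reading that condition follows; the function names are hypothetical.

```shell
# Print the status and message of the "Available" condition of the
# metrics APIService, for example "True <message>" or "False <message>".
metrics_api_status() {
  kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}{" "}{.status.conditions[?(@.type=="Available")].message}{"\n"}'
}

# Succeed only when the condition status is True.
metrics_api_ready() {
  case "$(metrics_api_status)" in
    True*) return 0 ;;
    *)     return 1 ;;
  esac
}

# Usage: metrics_api_ready || metrics_api_status
```

When the condition is False, the message usually names the failing step, which helps decide which of the two fixes applies.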

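The first approach is used here. Note that this binding grants cluster-admin to every unauthenticated request, so it is only appropriate on an isolated test cluster: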

[root@k8s-master01 kube-dashboard]# kubectl create clusterrolebinding system:anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/system:anonymous created

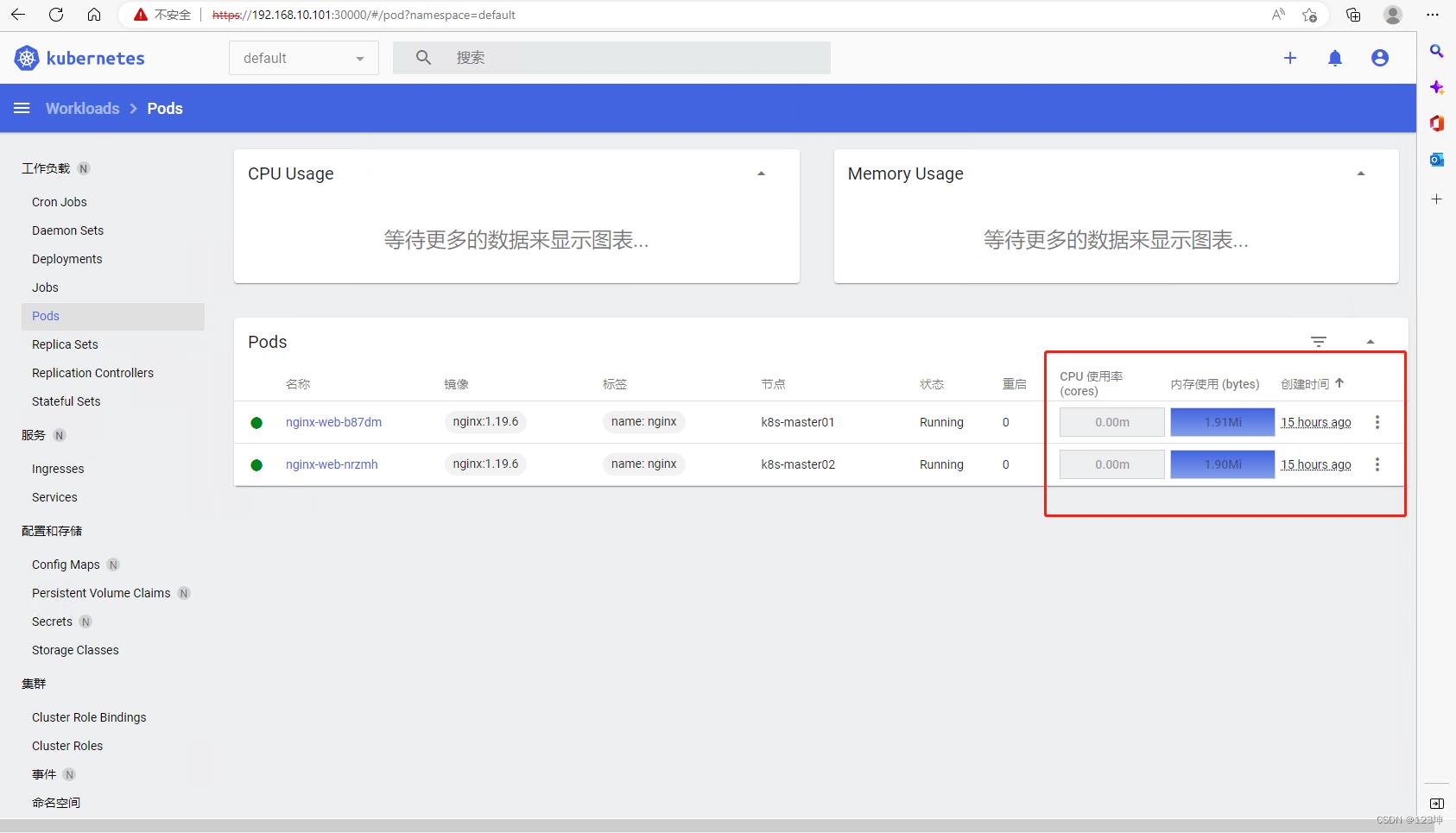

[root@k8s-master01 kube-dashboard]# kubectl top pods

W1225 15:21:31.503987 17374 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

NAME CPU(cores) MEMORY(bytes)

nginx-web-b87dm 0m 1Mi

nginx-web-nrzmh 0m 1Mi

[root@k8s-master01 kube-dashboard]# kubectl top nodes

W1225 15:21:35.710547 17411 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01 147m 7% 2342Mi 61%

k8s-master02 135m 6% 1703Mi 44%

k8s-master03 133m 6% 1666Mi 43%

k8s-worker02 73m 3% 861Mi 46%
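Once kubectl top returns data, its output is easy to post-process. As an illustration, the sketch below lists nodes whose memory usage exceeds a threshold. The function name nodes_over_memory is hypothetical, and it assumes the five-column layout shown above (NAME, CPU(cores), CPU%, MEMORY(bytes), MEMORY%).

```shell
# Read `kubectl top nodes --no-headers` output on stdin and print the names
# of nodes whose MEMORY% (5th column) is above the given threshold.
nodes_over_memory() {
  threshold="$1"
  awk -v t="$threshold" '{
    pct = $5
    sub(/%/, "", pct)            # strip the trailing percent sign
    if (pct + 0 > t + 0) print $1
  }'
}

# Usage: kubectl top nodes --no-headers | nodes_over_memory 50
```

With the sample output above and a threshold of 50, only k8s-master01 (61%) would be reported.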

Get the token and log in to the dashboard again to check

[root@k8s-master01 kube-dashboard]# kubectl get secret -n kubenetes-dashboard

No resources found in kubenetes-dashboard namespace.

[root@k8s-master01 kube-dashboard]# kubectl get secret -n kubernetes-dashboard

NAME TYPE DATA AGE

default-token-w2s7j kubernetes.io/service-account-token 3 120m

kubernetes-dashboard-certs Opaque 0 120m

kubernetes-dashboard-csrf Opaque 1 120m

kubernetes-dashboard-key-holder Opaque 2 120m

kubernetes-dashboard-token-t4qdp kubernetes.io/service-account-token 3 120m

[root@k8s-master01 kube-dashboard]# kubectl describe secret kubernetes-dashboard-token-t4qdp -n kubernetes-dashboard

Name: kubernetes-dashboard-token-t4qdp

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: e204650e-df62-46c8-810d-47d8fd98a4c1

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1367 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InpaRW5OczVSZ19RYTIzcUVOb2c5V25fVkY2YW1aT2NOWlFzVFRCbUkzUlEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi10NHFkcCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImUyMDQ2NTBlLWRmNjItNDZjOC04MTBkLTQ3ZDhmZDk4YTRjMSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.QocuobfL-pUwFJgqIVOIvq9rkGQsJ5f4undVJmqi6mZRYTCSSMpp0QC-Po05fH1Hp1_2llEUcwC0rUSALw7aX5Y3qwdGxxc0oV1C1lm2K-I67NMYLU4IcLrjhRRV9x0cnc3pk8i_k1fA5FwYhtP1_U6c0Q0e1nSOKJFy-SQFDvCV4OcFxGA2bua4ul-IUG91fZEDYNNp64uDuhrnGC8DSAyE0-N52t9mOr3Azng_1r15_b2mfA36B4lLUSUMuG9AkIThE4ggqe1fc2PqQyeoDUzRysvF3PMgRiq-B-IkEMJvVU7umkySDV2jm9wdF-UPs-M9zwd49HQKH8flBH8fxA
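Instead of copying the token out of the describe output, it can be read from the secret and decoded in one step. This is a sketch under assumptions: the function name dashboard_token is hypothetical, and `base64 -d` is the GNU coreutils spelling of the decode flag.

```shell
# Print the decoded service-account token stored in the given secret
# of the kubernetes-dashboard namespace.
dashboard_token() {
  secret="$1"
  # .data.token is base64-encoded in the Secret object, so decode it.
  kubectl -n kubernetes-dashboard get secret "$secret" \
    -o jsonpath='{.data.token}' | base64 -d
}

# Usage: dashboard_token kubernetes-dashboard-token-t4qdp
```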
