Deploying an LVS Load-Balancing Cluster in DR Mode with Keepalived

Posted by handsomeboy-东


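Packet flow in LVS-DR mode

Assume all servers are on the same LAN.

- The client sends a request to the VIP, and the load balancer (director) receives it.

- The director picks a real server according to its scheduling algorithm. It neither modifies nor encapsulates the IP packet; it only rewrites the destination MAC address to that of the chosen real server and sends the frame out on the LAN.

- The real server receives the frame, decapsulates it, and finds that the destination IP (the VIP) matches an address configured locally in advance, so it accepts and processes the packet.

- The real server then builds the response with the VIP as the source address, passes it from the lo interface to the physical NIC, and sends it straight to the client without going back through the director.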

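ARP problems in LVS-DR mode

Problem 1: In an LVS-DR cluster, the director and every real server are configured with the same VIP. Duplicate IP addresses on one LAN disrupt ARP between the servers. Because the director and the real servers are on the same network, all of them receive an ARP broadcast for the VIP. Only the front-end director should answer it; the real servers must stay silent.

Solution: configure the real servers so that they do not answer ARP requests for the VIP.

- Put the VIP on the virtual interface lo:0.

- Set the kernel parameter arp_ignore=1, so the system answers an ARP request only when the target IP is configured on the interface that received the request.

Problem 2: A response from a real server (source IP = VIP) is forwarded by the router, so before sending it the real server must first learn the router's MAC address. When it sends that ARP request, Linux by default uses the source IP of the outgoing packet (the VIP) as the sender IP in the ARP request rather than the IP of the sending interface, such as ens33. On receiving the request, the router updates its ARP table: the entry that mapped the VIP to the director's MAC address is overwritten with the real server's MAC address. The router then forwards new requests straight to that real server, and the VIP on the director stops working.

Solution: on the real servers, set the kernel parameter arp_announce=2, so the system does not use the packet's source IP as the sender address of an ARP request and uses the address of the sending interface instead.

Settings that fix both ARP problems

Add the following to /etc/sysctl.conf: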

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2
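
The same four settings can also be applied to the running kernel without editing the file by hand. The following is a minimal sketch, assuming a real server that carries the VIP on lo:0; the function names arp_settings and apply_arp_settings and the DRY_RUN variable are illustrative and not part of any tool.

```shell
#!/bin/bash
# Print the ARP-related kernel settings a DR-mode real server needs.
arp_settings() {
    local iface
    for iface in lo all; do
        echo "net.ipv4.conf.${iface}.arp_ignore = 1"
        echo "net.ipv4.conf.${iface}.arp_announce = 2"
    done
}

# Apply each setting with `sysctl -w` (needs root).
# With DRY_RUN=1 (a variable made up for this sketch) only print the commands.
apply_arp_settings() {
    local line key value
    arp_settings | while IFS= read -r line; do
        key=${line%% = *}      # text before " = "
        value=${line##* = }    # text after " = "
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "sysctl -w ${key}=${value}"
        else
            sysctl -w "${key}=${value}"
        fi
    done
}
```

Running `DRY_RUN=1 apply_arp_settings` shows the four `sysctl -w` commands without changing anything; settings applied this way last only until reboot, so the entries in /etc/sysctl.conf are still needed.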

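Characteristics of LVS-DR mode

- The director and the real servers are on the same LAN.

- The director is the entry point of the cluster but not its gateway; no reply packets pass through it.

- The VIP is configured on the lo interface of every real server.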

Deploying LVS-DR + Keepalived

Hosts used:

- Master/backup directors: master (192.168.118.100), backup (192.168.118.200)

- Real servers: server1 (ens33: 192.168.118.50, VIP: 192.168.118.66), server2 (ens33: 192.168.118.55, VIP: 192.168.118.66)

- Client: 192.168.118.99

Configuring the LVS directors (master and backup)

LVS-01:

[root@lvs-01 ~]# yum install -y ipvsadm keepalived

[root@lvs-01 ~]# modprobe ip_vs # load the ip_vs kernel module

[root@lvs-01 ~]# cat /proc/net/ip_vs

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

## Configure the VIP

[root@lvs-01 ~]# cd /etc/sysconfig/network-scripts/

[root@lvs-01 network-scripts]# cp -p ifcfg-ens33 ifcfg-ens33:0

[root@lvs-01 network-scripts]# vim ifcfg-ens33:0 # delete the copied contents and add the following

DEVICE=ens33:0

ONBOOT=yes

IPADDR=192.168.118.66

NETMASK=255.255.255.255

[root@lvs-01 network-scripts]# ifup ens33:0

[root@lvs-01 network-scripts]# ifconfig ens33:0

ens33:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.118.66 netmask 255.255.255.0 broadcast 192.168.118.255

ether 00:0c:29:4e:ad:4a txqueuelen 1000 (Ethernet)

## Set kernel parameters: disable IP forwarding and ICMP redirects

[root@lvs-01 network-scripts]# vim /etc/sysctl.conf # append the following

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

[root@lvs-01 network-scripts]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

## Start the ipvsadm service

[root@lvs-01 network-scripts]# ipvsadm-save > /etc/sysconfig/ipvsadm

[root@lvs-01 network-scripts]# systemctl start ipvsadm

[root@lvs-01 network-scripts]# systemctl status ipvsadm

● ipvsadm.service - Initialise the Linux Virtual Server

Loaded: loaded (/usr/lib/systemd/system/ipvsadm.service; disabled; vendor preset: disabled)

Active: active (exited) since 星期一 2021-07-26 15:36:20 CST; 9s ago

## Create the ipvsadm rules with a script

[root@lvs-01 network-scripts]# vim /opt/gz.sh

#!/bin/bash

ipvsadm -C

ipvsadm -A -t 192.168.118.66:80 -s rr ## round-robin scheduling

ipvsadm -a -t 192.168.118.66:80 -r 192.168.118.50:80 -g

ipvsadm -a -t 192.168.118.66:80 -r 192.168.118.55:80 -g

ipvsadm

[root@lvs-01 network-scripts]# chmod +x /opt/gz.sh

[root@lvs-01 network-scripts]# /opt/gz.sh # load the rules (run this after the web servers are configured)

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP lvs-01:http rr

-> 192.168.118.50:http Route 1 0 0

-> 192.168.118.55:http Route 1 0 0
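
Because both directors need the same rule set, the script above can be turned into a small generator. This is a minimal sketch, not the author's script: the function name build_ipvs_rules is made up for illustration, and it only prints the ipvsadm commands so that they can be reviewed before being run.

```shell
#!/bin/bash
# Emit the ipvsadm commands for one virtual service in DR mode.
# Usage: build_ipvs_rules VIP:PORT SCHEDULER REAL:PORT [REAL:PORT ...]
build_ipvs_rules() {
    local vip=$1 scheduler=$2 real
    shift 2
    echo "ipvsadm -C"                              # clear the existing table
    echo "ipvsadm -A -t ${vip} -s ${scheduler}"    # add the virtual service
    for real in "$@"; do
        echo "ipvsadm -a -t ${vip} -r ${real} -g"  # -g selects direct routing
    done
}
```

For the addresses used here, `build_ipvs_rules 192.168.118.66:80 rr 192.168.118.50:80 192.168.118.55:80` prints the same four commands as /opt/gz.sh; piping the output to `bash` as root would apply them.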

LVS-02:

[root@lvs-02 ~]# yum -y install ipvsadm keepalived

[root@lvs-02 ~]# modprobe ip_vs

[root@lvs-02 ~]# cat /proc/net/ip_vs

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@lvs-02 ~]# cd /etc/sysconfig/network-scripts/

[root@lvs-02 network-scripts]# cp -p ifcfg-ens33 ifcfg-ens33:0

[root@lvs-02 network-scripts]# vim ifcfg-ens33:0

DEVICE=ens33:0

ONBOOT=yes

IPADDR=192.168.118.88

NETMASK=255.255.255.255

[root@lvs-02 network-scripts]# vim /etc/sysctl.conf

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

[root@lvs-02 network-scripts]# ipvsadm-save > /etc/sysconfig/ipvsadm

[root@lvs-02 network-scripts]# systemctl start ipvsadm

[root@lvs-02 network-scripts]# vim /opt/gz.sh

#!/bin/bash

ipvsadm -C

ipvsadm -A -t 192.168.118.66:80 -s rr

ipvsadm -a -t 192.168.118.66:80 -r 192.168.118.50:80 -g

ipvsadm -a -t 192.168.118.66:80 -r 192.168.118.55:80 -g

ipvsadm

[root@lvs-02 network-scripts]# chmod +x /opt/gz.sh

[root@lvs-02 network-scripts]# /opt/gz.sh

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP lvs-02:http rr

-> 192.168.118.50:http Route 1 0 0

-> 192.168.118.55:http Route 1 0 0

Configuring the web servers

server1:

[root@server1 ~]# cd /etc/sysconfig/network-scripts/

[root@server1 network-scripts]# cp -p ifcfg-lo ifcfg-lo:0

[root@server1 network-scripts]# vim ifcfg-lo:0

DEVICE=lo:0

ONBOOT=yes

IPADDR=192.168.118.66

NETMASK=255.255.255.255

[root@server1 network-scripts]# ifup lo:0

[root@server1 network-scripts]# ifconfig lo:0

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.118.66 netmask 255.255.255.255

loop txqueuelen 1000 (Local Loopback)

## Pin a host route for the VIP to lo:0

[root@server1 network-scripts]# route add -host 192.168.118.66 dev lo:0

[root@server1 network-scripts]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.118.2 0.0.0.0 UG 100 0 0 ens33

192.168.118.0 0.0.0.0 255.255.255.0 U 100 0 0 ens33

192.168.118.66 0.0.0.0 255.255.255.255 UH 0 0 0 lo

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

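### The route can also be added at boot (on CentOS 7, /etc/rc.d/rc.local must be executable for this to run)

[root@server1 network-scripts]# vim /etc/rc.local ## append the following line

/sbin/route add -host 192.168.118.66 dev lo:0

## Install httpd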

[root@server1 network-scripts]# yum install -y httpd

[root@server1 network-scripts]# vim /etc/sysctl.conf # tune the ARP kernel parameters so the VIP's MAC mapping is not overwritten and conflicts are avoided

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

[root@server1 network-scripts]# sysctl -p

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

[root@server1 network-scripts]# systemctl start httpd # start Apache

[root@server1 network-scripts]# vim /var/www/html/index.html # test page for server1

<html>

<body>

<meta http-equiv="Content-Type" content="text/html;charset=utf-8">

<h1>this is server1</h1>

</body>

</html>

server2:

[root@server2 ~]# cd /etc/sysconfig/network-scripts/

[root@server2 network-scripts]# cp -p ifcfg-lo ifcfg-lo:0

[root@server2 network-scripts]# vim ifcfg-lo:0

DEVICE=lo:0

ONBOOT=yes

IPADDR=192.168.118.66

NETMASK=255.255.255.255

[root@server2 network-scripts]# ifup lo:0

[root@server2 network-scripts]# ifconfig lo:0

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.118.66 netmask 255.255.255.255

loop txqueuelen 1000 (Local Loopback)

[root@server2 network-scripts]# route add -host 192.168.118.66 dev lo:0

[root@server2 network-scripts]# vim /etc/rc.local

/sbin/route add -host 192.168.118.66 dev lo:0

[root@server2 network-scripts]# yum install -y httpd

[root@server2 network-scripts]# vim /etc/sysctl.conf # add the same four ARP settings as on server1

[root@server2 network-scripts]# sysctl -p

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

[root@server2 network-scripts]# systemctl start httpd

[root@server2 network-scripts]# vim /var/www/html/index.html

<html>

<body>

<meta http-equiv="Content-Type" content="text/html;charset=utf-8">

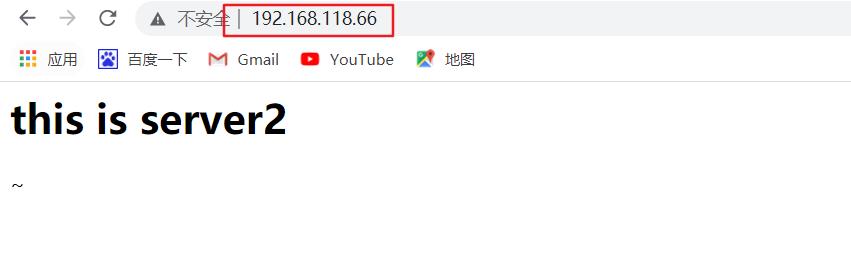

<h1>this is server2</h1>

</body>

</html>

~

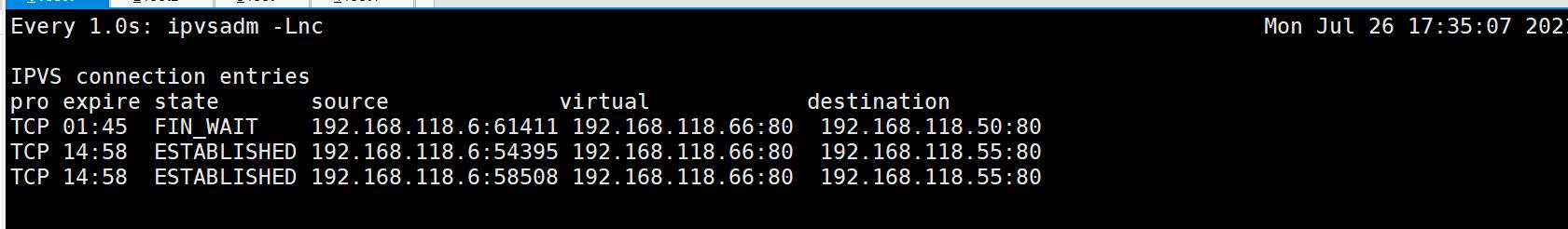

- The page can now be opened in a browser (keep refreshing) while the connections are watched live on the director:

[root@lvs-01 network-scripts]# watch -n 1 ipvsadm -Lnc
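
Refreshing a browser can be misleading because browsers reuse connections, so a command-line check is a useful complement. The following is a rough sketch, assuming curl is installed on the client and the test pages contain the "this is serverN" headings shown above; the function names probe_vip and tally_backends are illustrative.

```shell
#!/bin/bash
# Tally how many responses each backend served.
# Reads one response marker per line on stdin, prints "<count> <marker>" lines.
tally_backends() {
    sort | uniq -c | awk '{ count = $1; $1 = ""; sub(/^ /, ""); print count, $0 }'
}

# Fetch the page N times through the VIP, one new connection per request,
# and print the "this is serverN" marker found in each response.
probe_vip() {
    local url=$1 n=$2 i=0
    while [ "$i" -lt "$n" ]; do
        curl -s "$url" | grep -o 'this is server[0-9]*'
        i=$((i + 1))
    done
}
```

On the client, `probe_vip http://192.168.118.66/ 10 | tally_backends` should report both servers; with the rr scheduler and no persistence the counts would be expected to be close to equal.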

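Deploying Keepalived

Keepalived overview

- Supports automatic failover.

- Supports health checks of the nodes.

- Monitors the availability of the LVS directors and the real servers. When the master fails, service switches to the backup node promptly so that traffic keeps flowing; when the failed master recovers, it rejoins the cluster and, because of its higher priority, takes the service back.

How Keepalived works

- Keepalived uses the VRRP hot-standby protocol to provide multi-host hot standby for Linux servers.

- VRRP (Virtual Router Redundancy Protocol) is a backup scheme designed for routers. Several routers form a hot-standby group and serve clients through a shared virtual IP address. At any moment only one master router in the group provides service while the others stand by. If the active router fails, another router takes over the virtual IP address according to the configured priorities and continues the service.

- SNMP is a protocol for managing servers, switches, routers, and other devices over the network. In Keepalived, SNMP exposes health-check status, and monitoring systems can also use SNMP to collect data from the monitored servers.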
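
The priority rule described for VRRP can be illustrated with a toy election. This is only a sketch of the idea, not of the protocol itself: it assumes each node is described by a name, a priority, and an up/down flag, ignores advertisement timers, and does not model tie-breaking between equal priorities; the function name elect_master is made up.

```shell
#!/bin/bash
# Pick the node that would hold the VIP: the live node with the highest priority.
# Input on stdin: "<name> <priority> <up|down>", one node per line.
elect_master() {
    awk '$3 == "up" && (name == "" || $2 + 0 > best) { best = $2 + 0; name = $1 }
         END { if (name != "") print name }'
}
```

With the priorities used in this deployment, `printf 'lvs-01 110 up\nlvs-02 100 up\n' | elect_master` selects lvs-01, and marking lvs-01 as down selects lvs-02, which mirrors the failover and fail-back behaviour described above.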

Installing and configuring Keepalived

LVS-01:

[root@lvs-01 ~]# vim /etc/keepalived/keepalived.conf

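global_defs { # global parameters

router_id lvs_01 # device name; must differ between members of the hot-standby group

}

vrrp_instance vi_1 { # VRRP hot-standby instance

state MASTER # initial state: MASTER on the master, BACKUP on the backup

interface ens33 # physical interface that carries the VIP

virtual_router_id 51 # virtual router ID; identical on all members of the group

priority 110 # priority; the higher value wins

advert_int 1 # interval between VRRP advertisements, in seconds

authentication {

auth_type PASS

auth_pass 6666

}

virtual_ipaddress { # cluster VIP

192.168.118.66

}

}

# virtual server: VIP and port, scheduling parameters, and the web server pool

virtual_server 192.168.118.66 80 {

lb_algo rr # scheduling algorithm: round robin (rr)

lb_kind DR # forwarding mode: direct routing (DR)

persistence_timeout 6 # session persistence: requests from one client stick to the same real server for 6 seconds

protocol TCP # the service uses TCP

# address and port of the first web node

real_server 192.168.118.50 80 {

weight 1 # node weight

TCP_CHECK {

connect_port 80 # port to check

connect_timeout 3 # connection timeout, in seconds

nb_get_retry 3 # number of retries

delay_before_retry 3 # delay between retries, in seconds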

}

}

# address and port of the second web node

real_server 192.168.118.55 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
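
The TCP_CHECK parameters above describe a connect attempt with a timeout, repeated a few times before the node is declared down. The following sketch approximates that behaviour in shell to make the parameters concrete; it is not how Keepalived implements the check. It assumes bash (for /dev/tcp) and the coreutils timeout command, and the function names tcp_probe and check_with_retry are made up.

```shell
#!/bin/bash
# Probe a TCP port once: succeeds if the connection opens within the timeout.
# Usage: tcp_probe HOST PORT TIMEOUT_SECONDS
tcp_probe() {
    timeout "$3" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Run a probe command; on failure retry up to RETRIES more times, sleeping
# DELAY seconds between attempts. Returns 0 as soon as one attempt succeeds.
# Usage: check_with_retry RETRIES DELAY COMMAND [ARGS ...]
check_with_retry() {
    local retries=$1 delay=$2 attempt=0
    shift 2
    while :; do
        "$@" && return 0
        [ "$attempt" -ge "$retries" ] && return 1
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}
```

Using the values from the configuration, `check_with_retry 3 3 tcp_probe 192.168.118.50 80 3` corresponds loosely to connect_timeout 3, nb_get_retry 3, and delay_before_retry 3 for server1.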

[root@lvs-01 ~]# systemctl start keepalived.service # start Keepalived

[root@lvs-01 ~]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since 星期一 2021-07-26 21:45:42 CST; 7s ago

Process: 83249 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

LVS-02:

[root@lvs-02 network-scripts]# vim /etc/keepalived/keepalived.conf

global_defs {

router_id lvs_02 # use a different name from the master

}

vrrp_instance vi_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 100 # lower priority than the master (110)

advert_int 1

authentication {

auth_type PASS

auth_pass 6666

}

virtual_ipaddress {

192.168.118.66

}

}

virtual_server 192.168.118.66 80 {

lb_algo rr

lb_kind DR

persistence_timeout 6

protocol TCP

real_server 192.168.118.50 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.55 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@lvs-02 network-scripts]# systemctl start keepalived.service

[root@lvs-02 network-scripts]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since 一 2021-07-26 21:46:28 CST; 11s ago

Process: 79523 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

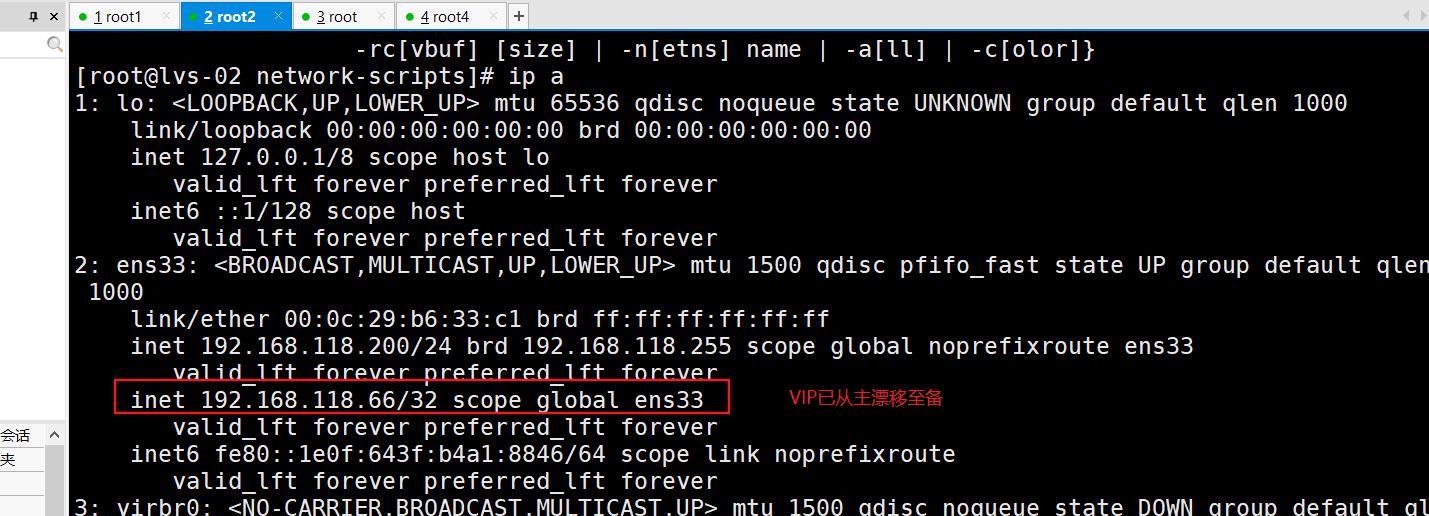

- At this point "ip a" on LVS-02 does not show the VIP. Simulate a failure of LVS-01 by stopping Keepalived there, then check the IP addresses on LVS-02 again:

[root@lvs-01 ~]# systemctl stop keepalived.service
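
Whether the VIP has moved can also be checked from a script instead of reading the "ip a" output by eye. This is a small sketch under the assumption that the one-line format of `ip -o -4 addr show` is available on the director; the function name has_vip is illustrative.

```shell
#!/bin/bash
# Succeeds if the given IPv4 address appears in `ip -o -4 addr show` output
# supplied on stdin (field 3 is "inet", field 4 is ADDRESS/PREFIX).
has_vip() {
    awk -v vip="$1" '$3 == "inet" { split($4, a, "/"); if (a[1] == vip) found = 1 }
                     END { exit (found ? 0 : 1) }'
}
```

On LVS-02, `ip -o -4 addr show dev ens33 | has_vip 192.168.118.66 && echo "VIP is on this node"` should print the message only after Keepalived on LVS-01 has been stopped, and stop printing it once LVS-01 is started again and reclaims the address.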
