linux12 k8s --> 03 Binary Installation

Posted FikL-09-19


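Table of contents

- K8S binary installation and deployment

- I. Node planning

- II. System optimization

- III. Install Docker

- IV. Generate and issue cluster certificates (run on master01)

- 1. Prepare the certificate generation tools

- V. Deploy the etcd cluster

- 2. Create the etcd cluster certificate

- VI. Deploy the master nodes

- I. Create certificates

- VII. Download the packages and write the configuration files

- VIII. Deploy the components

- VIII. Deploy the worker nodes

- IX. Install the cluster dashboard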

K8S binary installation and deployment

kubernetes

How do k8s and Docker relate?

Docker is a container engine; k8s is a platform for managing containers.

Cluster roles:

master node: manages the cluster

worker node: mainly used to run the deployed applications

Components deployed on the master nodes:

kube-apiserver: # central manager; schedules and manages the cluster

kube-controller-manager: # controller: manages and monitors containers

kube-scheduler: # scheduler: schedules containers

flannel: # provides the cluster-wide network

etcd: # database

kubelet: # deploys and monitors containers (only on its own machine)

kube-proxy: # provides networking between containers

Components deployed on the worker nodes:

kubelet: # deploys and monitors containers (only on its own machine)

kube-proxy: # provides networking between containers

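Optimize the nodes and install Docker

- Run all of the following on every node

- Prepare the machines according to the node plan

- Add the entries below to the hosts file on every node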

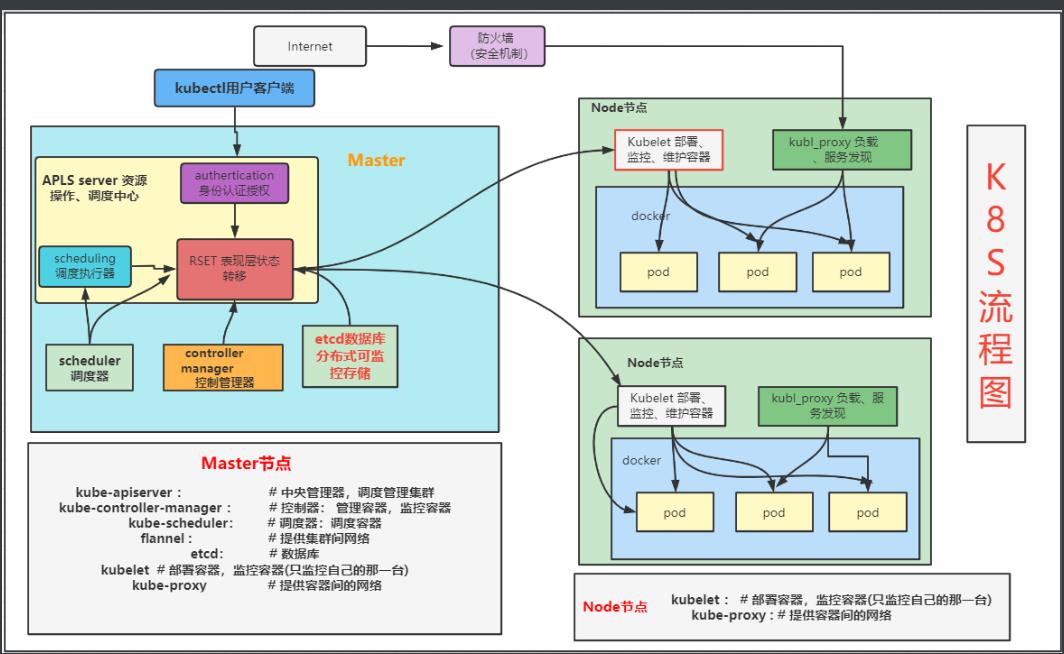

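k8s architecture diagram

The architecture diagram divides services into those that run on the worker nodes and those that make up the cluster-level control plane.

Kubernetes consists mainly of the following core components:

1. etcd stores the state of the entire cluster

2. apiserver is the single entry point for resource operations and provides authentication, authorization, access control, API registration and discovery

3. controller manager maintains the cluster state, for example failure detection, auto scaling and rolling updates

4. scheduler handles resource scheduling, placing Pods on machines according to the configured scheduling policy

5. kubelet maintains the container lifecycle and also manages volumes (CSI) and networking (CNI)

6. Container runtime manages images and actually runs Pods and containers (CRI)

7. kube-proxy provides in-cluster service discovery and load balancing for Services

Besides the core components, some add-ons are recommended:

8. kube-dns provides DNS for the whole cluster

9. Ingress Controller provides an external entry point for services

10. Heapster provides resource monitoring

11. Dashboard provides a GUI

12. Federation provides clusters that span availability zones

13. Fluentd-elasticsearch provides cluster log collection, storage and querying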

I. Node planning

| Hostname | IP | Alias |
|---|---|---|
| k8s-m-01 | 192.168.15.51 | m1 |
| k8s-m-02 | 192.168.15.52 | m2 |
| k8s-m-03 | 192.168.15.53 | m3 |
| k8s-n-01 | 192.168.15.54 | n1 |
| k8s-n-02 | 192.168.15.55 | n2 |
| k8s-m-vip | 192.168.15.56 | vip |

1. Component plan (for reference)

# Master node plan

kube-apiserver

kube-controller-manager

kube-scheduler

flannel

etcd

kubelet

kube-proxy

# Worker node plan

kubelet

kube-proxy

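2. Environment preparation

# 1. Run on every machine; $1 is the hostname and $2 replaces the placeholder 111 in the NIC configuration

[root@k8s-m-01 ~]# cat base.sh

#!/bin/bash

# 1. Set the hostname and update the NIC configuration

hostnamectl set-hostname $1 &&\

sed -i "s#111#$2#g" /etc/sysconfig/network-scripts/ifcfg-eth[01] &&\

systemctl restart network &&\

# 2. Append the cluster entries to the local hosts file

cat >>/etc/hosts <<EOF
172.16.1.51 k8s-m-01 m1
172.16.1.52 k8s-m-02 m2
172.16.1.53 k8s-m-03 m3
172.16.1.54 k8s-n-01 n1
172.16.1.55 k8s-n-02 n2
# Virtual IP (VIP)
172.16.1.56 k8s-m-vip vip
EOF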
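Because the script appends to /etc/hosts with `>>`, running it twice duplicates every entry. The following is a minimal sketch of an idempotent alternative. The helper name `add_host_entry` and the `HOSTS_FILE` variable are illustrative and not part of the original script; the sketch writes to a scratch file by default so it can be tried without touching /etc/hosts.

```shell
#!/bin/bash
# Hypothetical helper (not in the original script): append a hosts entry
# only when the exact line is not already present in the target file.
# HOSTS_FILE defaults to a scratch file; point it at /etc/hosts for real use.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
    local entry="$1"
    touch "$HOSTS_FILE"
    # -x matches the whole line, -F treats the entry as a fixed string
    grep -qxF "$entry" "$HOSTS_FILE" || echo "$entry" >> "$HOSTS_FILE"
}

add_host_entry "172.16.1.51 k8s-m-01 m1"
add_host_entry "172.16.1.51 k8s-m-01 m1"   # the second call changes nothing
```

With `HOSTS_FILE=/etc/hosts` exported beforehand, the same calls could replace the heredoc for each of the six entries.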

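II. System optimization

- Run all of the following on every node (skip the optimization if the system is already up to date)

Part 1: basic optimization

[root@k8s-m-01 ~]# cat base.sh

#!/bin/bash

# 1. Set the hostname and update the NIC configuration

# hostnamectl set-hostname $1 &&\

# sed -i "s#111#$2#g" /etc/sysconfig/network-scripts/ifcfg-eth[01] &&\

# systemctl restart network &&\

# 2. Disable SELinux and the firewall, and turn off DNS lookups for SSH connections

setenforce 0 &&\

sed -i 's#enforcing#disabled#g' /etc/selinux/config &&\

systemctl disable --now firewalld &&\

sed -i 's/#UseDNS yes/UseDNS no/g' /etc/ssh/sshd_config &&\

systemctl restart sshd &&\

# 3. Turn off swap

# Once the system starts swapping, performance drops sharply, so K8S normally requires swap to be disabled

# cat /etc/fstab

# Comment out the swap line (the last line); skip this if no swap is configured

swapoff -a &&\

# Tell kubelet to ignore swap

echo 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > /etc/sysconfig/kubelet &&\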

# 4. Update the local hosts file

# cat >>/etc/hosts <<EOF

# 172.16.1.51 k8s-m-01 m1

# 172.16.1.52 k8s-m-02 m2

# 172.16.1.53 k8s-m-03 m3

# 172.16.1.54 k8s-n-01 n1

# 172.16.1.55 k8s-n-02 n2

# Virtual IP (VIP)

# 172.16.1.56 k8s-m-vip vip

# EOF

# 5. Configure the package mirrors (mirrors inside China)

# By default CentOS uses the official yum repositories, which are usually very slow from inside China, so we switch to a mature domestic mirror such as the Tsinghua University or NetEase mirrors (the commands below use the Aliyun mirror)

rm -rf /etc/yum.repos.d/* &&\

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo &&\

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo &&\

yum clean all &&\

yum makecache &&\

# 6. Update the system

# Check the kernel version first; if it is newer than 4.0 the --exclude option can be dropped

yum update -y --exclude=kernel* &&\

# Docker relies on newer kernel features such as ipvs, so a 4.0+ kernel is generally needed and 4.18+ is the stated requirement; CentOS 8 does not need a kernel update

# 7. Install commonly used base packages for day-to-day convenience

yum install wget expect vim net-tools ntp bash-completion ipvsadm ipset jq iptables conntrack sysstat libseccomp ntpdate -y &&\

# 8. Update the kernel

# Not needed on CentOS 8

cd /opt/ &&\

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.127-1.el7.elrepo.x86_64.rpm &&\

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.127-1.el7.elrepo.x86_64.rpm &&\

# Package index: https://elrepo.org/linux/kernel/el7/x86_64/RPMS/

# 9. Install the kernel packages

yum localinstall /opt/kernel-lt* -y &&\

# 10. Make the new kernel the default boot entry

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg &&\

# 11. Show the current default kernel

grubby --default-kernel &&\

reboot

# After the reboot the system runs the 5.4 kernel
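After the reboot it is worth confirming that the running kernel really is new enough before moving on. A minimal sketch of such a check follows. The function name `version_ge` is illustrative, the 4.18 threshold is the figure quoted in the script comments, and the comparison assumes GNU `sort -V` is available.

```shell
#!/bin/bash
# version_ge A B: succeeds when version A is greater than or equal to B.
# Relies on GNU sort -V (version sort); B must sort first or be equal to A.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# Strip the release suffix, e.g. 5.4.127-1.el7.elrepo.x86_64 becomes 5.4.127
current="$(uname -r | cut -d- -f1)"

if version_ge "$current" "4.18"; then
    echo "kernel $current is new enough"
else
    echo "kernel $current is older than 4.18; upgrade before continuing"
fi
```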

=============================================================================================

Part 2: passwordless SSH

# 1. Passwordless login

[root@k8s-master-01 ~]# ssh-keygen -t rsa

[root@k8s-master-01 ~]# for i in m1 m2 m3;do ssh-copy-id -i ~/.ssh/id_rsa.pub root@$i;done

# 2. Cluster time synchronization (add with crontab -e)

# Sync once every minute

*/1 * * * * /usr/sbin/ntpdate ntp.aliyun.com &> /dev/null

==============================================================================================

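Part 3: install IPVS and tune the kernel

# 1. Install IPVS and load the IPVS modules (all nodes)

ipvs is a module in the system kernel with very high network forwarding performance, so it is generally our first choice.

[root@k8s-n-01 ~]# cat /etc/sysconfig/modules/ipvs.modules

#!/bin/bash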

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in ${ipvs_modules}; do

/sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1

if [ $? -eq 0 ]; then

/sbin/modprobe ${kernel_module}

fi

done

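# 2. Make the script executable and run it (all nodes)

[root@k8s-n-01 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

# 3. Kernel parameter tuning (all nodes)

Load the IPVS modules and apply the configuration

The main purpose of tuning the kernel parameters is to make the system better suited to running kubernetes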

[root@k8s-n-01 ~]# cat /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

# The next two can be changed directly

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

# Apply immediately

sysctl --system
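A misspelled key in a sysctl.d file is easy to miss, because `sysctl --system` only prints a warning for it. Every sysctl key maps to a file under /proc/sys, so one way to catch typos is to check that the file exists. The sketch below does this; the function names are illustrative, and keys such as `net.bridge.*` only appear once the corresponding kernel module (for example br_netfilter) is loaded, so a report is a hint rather than proof of a typo.

```shell
#!/bin/bash
# sysctl_key_path KEY: print the /proc/sys path that backs a sysctl key.
sysctl_key_path() {
    echo "/proc/sys/$(echo "$1" | tr . /)"
}

# check_sysctl_file FILE: print every key in FILE that the running kernel
# does not expose under /proc/sys.
check_sysctl_file() {
    local key
    # Drop comments, keep the text before '=', remove all whitespace,
    # then skip the lines that ended up empty.
    sed -e 's/#.*//' -e 's/=.*//' -e 's/[[:space:]]//g' "$1" | grep -v '^$' |
    while read -r key; do
        [ -e "$(sysctl_key_path "$key")" ] || echo "unknown key: $key"
    done
}

# Usage, with the path from the step above:
# check_sysctl_file /etc/sysctl.d/k8s.conf
```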

III. Install Docker

- Run all of the following on every node

1. Install Docker manually

# 1. If Docker was installed before, remove it first, then install the dependencies:

sudo yum remove -y docker docker-common docker-selinux docker-engine &&\

sudo yum install -y yum-utils device-mapper-persistent-data lvm2 &&\

# 2. Add the Docker repository

wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo &&\

# 3. Point the repository at the mirror

sudo sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo &&\

# 4. Rebuild the repository cache

yum clean all &&\

yum makecache &&\

# 5. Install Docker

sudo yum makecache fast &&\

sudo yum install docker-ce -y &&\

# 6. Enable Docker at boot and start it now

systemctl enable --now docker.service

2. Install Docker with a script

[root@k8s-m-01 ~]# vim docker.sh

# 1. Remove any previously installed Docker

sudo yum remove -y docker docker-common docker-selinux docker-engine &&\

sudo yum install -y yum-utils device-mapper-persistent-data lvm2 &&\

# 2. Add the Docker repository

wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo &&\

# 3. Point the repository at the mirror

sudo sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo &&\

# 4. Rebuild the repository cache

yum clean all &&\

yum makecache &&\

# 5. Install Docker

sudo yum makecache fast &&\

sudo yum install docker-ce -y &&\

# 6. Enable Docker at boot and start it now

systemctl enable --now docker.service

# 7. Create the Docker configuration directory and configure a registry mirror (all nodes); run this step separately to speed up image pulls

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://k7eoap03.mirror.aliyuncs.com"]
}
EOF
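Docker only reads daemon.json when the daemon starts, so the mirror setting takes effect after a restart. The sketch below wraps the file creation in a function and lists the follow-up commands as comments. The function name `write_daemon_json` is illustrative; the mirror URL is the one used above.

```shell
#!/bin/bash
# write_daemon_json DIR MIRROR: write a daemon.json with one registry mirror.
# Hypothetical helper; DIR is /etc/docker on a real node.
write_daemon_json() {
    local dir="$1" mirror="$2"
    mkdir -p "$dir"
    cat > "$dir/daemon.json" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}

# On a node (as root):
# write_daemon_json /etc/docker "https://k7eoap03.mirror.aliyuncs.com"
# systemctl daemon-reload && systemctl restart docker
# docker info | grep -A1 'Registry Mirrors'
```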

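IV. Generate and issue cluster certificates (run on master01)

- The following commands only need to be run on master01

Kubernetes has many components, and they talk to each other over HTTP/gRPC to deploy and manage applications in the cluster. The certificates created in this part secure that communication.

1. Prepare the certificate generation tools

# cfssl lets you declare the certificate authority, validity period and similar settings up front in JSON files, which makes issuing certificates more efficient and easier to automate.

# cfssl is written in Go and is an open-source certificate management tool; cfssljson takes the JSON output of cfssl and writes the certificate, key, CSR and bundle to files.

# 1. Download the tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 &&\

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

# 2. Make them executable

chmod +x cfssljson_linux-amd64 &&\

chmod +x cfssl_linux-amd64

or

chmod +x cfssl*

# 3. Move them to /usr/local/bin

mv cfssljson_linux-amd64 cfssljson &&\

mv cfssl_linux-amd64 cfssl &&\

mv cfssljson cfssl /usr/local/bin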

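2. Generate the root certificate (signing configuration)

# Root certificate: the basis of trust between a CA and its users. A user's digital certificate is only valid if it chains to a trusted root certificate.

# A certificate has three parts: the subject's information, the subject's public key and the certificate signature. The CA handles approval, issuance, archiving and revocation of digital certificates. Every certificate it issues carries the CA's digital signature, so nobody other than the CA can alter it undetected.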

mkdir -p /opt/cert/ca

cat > /opt/cert/ca/ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF

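# Field notes:

default: the default policy; certificates are valid for 1 year (8760h) by default

profiles: define usage scenarios, each with its own parameters such as expiry and usages

signing: the certificate can be used to sign other certificates, as with the generated ca.pem

server auth: a client can use this CA to verify the certificate presented by a server

client auth: a server can use this CA to verify the certificate presented by a client

3. Create the root certificate signing request file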
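The `expiry` values in these configuration files are written in hours, which is hard to read at a glance. A small sketch of the arithmetic, with an illustrative function name:

```shell
#!/bin/bash
# hours_to_days VALUE: convert an expiry such as "8760h" (or "8760") to days.
hours_to_days() {
    local hours="${1%h}"     # drop a trailing "h" if present
    echo $(( hours / 24 ))
}

hours_to_days 8760h    # prints 365  (one year)
hours_to_days 87600h   # prints 3650 (about ten years)
```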

cat > /opt/cert/ca/ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names":[{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing"

}]

}

EOF

Certificate fields

| Field | Meaning |
|---|---|
| C | Country |
| ST | State or province |
| L | City |
| O | Organization |
| OU | Organizational unit |

4. Generate the root certificate

[root@k8s-m-01 ca]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2021/06/29 08:49:16 [INFO] generating a new CA key and certificate from CSR

2021/06/29 08:49:16 [INFO] generate received request

2021/06/29 08:49:16 [INFO] received CSR

2021/06/29 08:49:16 [INFO] generating key: rsa-2048

2021/06/29 08:49:17 [INFO] encoded CSR

2021/06/29 08:49:17 [INFO] signed certificate with serial number 137666249701104309463931206360792420984700751682

[root@k8s-m-01 ca]# ll

total 20

-rw-r--r-- 1 root root 285 Jun 29 08:48 ca-config.json

-rw-r--r-- 1 root root 960 Jun 29 08:49 ca.csr

-rw-r--r-- 1 root root 153 Jun 29 08:48 ca-csr.json

-rw------- 1 root root 1679 Jun 29 08:49 ca-key.pem

-rw-r--r-- 1 root root 1281 Jun 29 08:49 ca.pem

Parameter reference

| Parameter | Description |
|---|---|
| gencert | Generate a new key and a signed certificate |
| -initca | Initialize a new CA certificate |
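As the directory listing shows, `cfssljson -bare ca` leaves three generated files behind: ca.pem, ca-key.pem and ca.csr. A minimal sketch of a check that later steps could run before relying on them follows; the function name is illustrative.

```shell
#!/bin/bash
# check_cert_files DIR NAME: verify that NAME.pem, NAME-key.pem and NAME.csr
# exist in DIR and are not empty. Returns non-zero if any file is missing.
check_cert_files() {
    local dir="$1" name="$2" f rc=0
    for f in "$name.pem" "$name-key.pem" "$name.csr"; do
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $dir/$f"
            rc=1
        fi
    done
    return $rc
}

# Usage, with the directory from the step above:
# check_cert_files /opt/cert/ca ca && echo "CA files look complete"
```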

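V. Deploy the etcd cluster

etcd is a distributed key-value store built on Raft, commonly used for service discovery, shared configuration and concurrency control (leader election, distributed locks and so on). Kubernetes stores its state and data in etcd.

- etcd must be deployed for high availability

- Run the commands on a single master node

1. Node planning

| IP | Hostname | Alias |
|---|---|---|
| 172.16.1.51 | k8s-m-01 | m1 |
| 172.16.1.52 | k8s-m-02 | m2 |
| 172.16.1.53 | k8s-m-03 | m3 |

2. Create the etcd cluster certificate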

[root@k8s-m-01 ca]# cat /etc/hosts

172.16.1.51 k8s-m-01 m1 etcd-1

172.16.1.52 k8s-m-02 m2 etcd-2

172.16.1.53 k8s-m-03 m3 etcd-3

172.16.1.54 k8s-n-01 n1

172.16.1.55 k8s-n-02 n2

# Virtual IP (VIP)

172.16.1.56 k8s-m-vip vip

# Note: the etcd-N aliases are optional, because etcd runs on the master nodes themselves

mkdir -p /opt/cert/etcd

cd /opt/cert/etcd

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"172.16.1.51",

"172.16.1.52",

"172.16.1.53",

"172.16.1.54",

"172.16.1.55",

"172.16.1.56"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing"

}

]

}

EOF

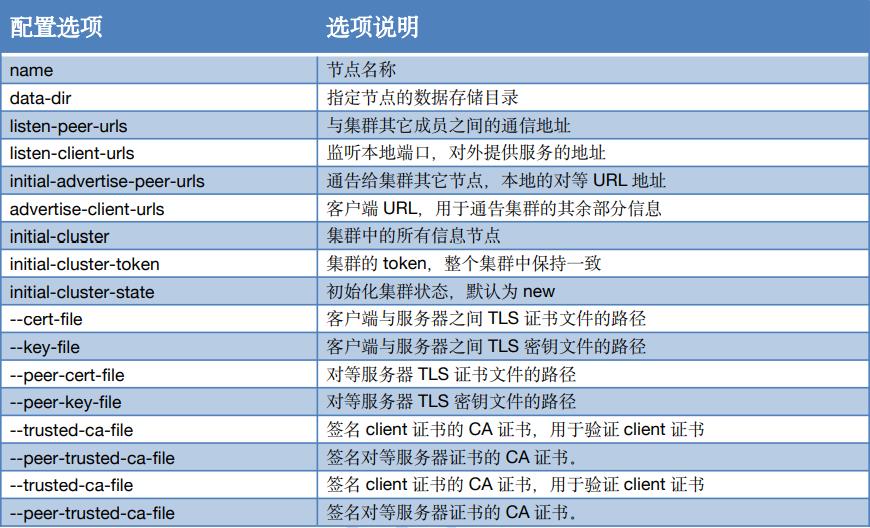

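Configuration options

| Option | Description |
|---|---|
| name | Node name |
| data-dir | Data directory of the node |
| listen-peer-urls | Address used for communication with the other cluster members |
| listen-client-urls | Address on which the node listens and serves client requests |
| initial-advertise-peer-urls | Peer URL of this node, advertised to the other members |
| advertise-client-urls | Client URL of this node, advertised to the rest of the cluster |
| initial-cluster | All members of the cluster |
| initial-cluster-token | Cluster token; must be identical across the cluster |
| initial-cluster-state | Initial cluster state; defaults to new |
| --cert-file | Path to the TLS certificate for client-to-server traffic |
| --key-file | Path to the TLS key for client-to-server traffic |
| --peer-cert-file | Path to the peer TLS certificate |
| --peer-key-file | Path to the peer TLS key |
| --trusted-ca-file | CA certificate that signed the client certificates; used to verify them |
| --peer-trusted-ca-file | CA certificate that signed the peer certificates |

3. Generate the etcd certificate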

[root@k8s-m-01 etcd]# cfssl gencert -ca=../ca/ca.pem -ca-key=../ca/ca-key.pem -config=../ca/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2021/06/29 08:59:41 [INFO] generate received request

2021/06/29 08:59:41 [INFO] received CSR

2021/06/29 08:59:41 [INFO] generating key: rsa-2048

2021/06/29 08:59:42 [INFO] encoded CSR

2021/06/29 08:59:42 [INFO] signed certificate with serial number 495333324552725195895077036503159161152536226206

2021/06/29 08:59:42 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 etcd]# ll

total 16

-rw-r--r-- 1 root root 1050 Jun 29 08:59 etcd.csr

-rw-r--r-- 1 root root 382 Jun 29 08:58 etcd-csr.json

-rw------- 1 root root 1675 Jun 29 08:59 etcd-key.pem

-rw-r--r-- 1 root root 1379 Jun 29 08:59 etcd.pem

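Parameter reference

| Parameter | Description |
|---|---|
| gencert | Generate a new key and a signed certificate |
| -initca | Initialize a new CA |
| -ca | The CA certificate |
| -ca-key | The CA private key file |
| -config | The JSON configuration file used when signing the requested certificate |
| -profile | Matches a profile in the -config file; the certificate is generated from that profile section |

4. Distribute the etcd certificates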

[root@k8s-m-01 /opt/cert/etcd]# for ip in m{1..3};do

ssh root@${ip} "mkdir -pv /etc/etcd/ssl"

scp ../ca/ca*.pem root@${ip}:/etc/etcd/ssl

scp ./etcd*.pem root@${ip}:/etc/etcd/ssl

done

mkdir: created directory ‘/etc/etcd’

mkdir: created directory ‘/etc/etcd/ssl’

ca-key.pem 100% 1675 299.2KB/s 00:00

ca.pem 100% 1281 232.3KB/s 00:00

etcd-key.pem 100% 1675 1.4MB/s 00:00

etcd.pem 100% 1379 991.0KB/s 00:00

mkdir: created directory ‘/etc/etcd’

mkdir: created directory ‘/etc/etcd/ssl’

ca-key.pem 100% 1675 1.1MB/s 00:00

ca.pem 100% 1281 650.8KB/s 00:00

etcd-key.pem 100% 1675 507.7KB/s 00:00

etcd.pem 100% 1379 166.7KB/s 00:00

mkdir: created directory ‘/etc/etcd’

mkdir: created directory ‘/etc/etcd/ssl’

ca-key.pem 100% 1675 109.1KB/s 00:00

ca.pem 100% 1281 252.9KB/s 00:00

etcd-key.pem 100% 1675 121.0KB/s 00:00

etcd.pem 100% 1379 180.4KB/s 00:00

# Check the distributed certificates

[root@k8s-m-01 cert]# cd /etc/etcd/ssl/

[root@k8s-m-01 ssl]# ll

total 16

-rw------- 1 root root 1679 Jun 29 09:13 ca-key.pem

-rw-r--r-- 1 root root 1281 Jun 29 09:13 ca.pem

-rw------- 1 root root 1675 Jun 29 09:13 etcd-key.pem

-rw-r--r-- 1 root root 1379 Jun 29 09:13 etcd.pem

5. Deploy etcd

# Download the etcd package

[root@k8s-m-01 ssl]# cd /opt/

[root@k8s-m-01 opt]# wget https://mirrors.huaweicloud.com/etcd/v3.3.24/etcd-v3.3.24-linux-amd64.tar.gz

# Unpack it

[root@k8s-m-01 opt]# tar xf etcd-v3.3.24-linux-amd64.tar.gz

# Distribute the binaries to the master nodes

for i in m1 m2 m3;do scp ./etcd-v3.3.24-linux-amd64/etcd* root@$i:/usr/local/bin/;done

# On each of the three nodes, check the etcd version to confirm the copy succeeded

[root@k8s-m-01 opt]# etcd --version

etcd Version: 3.3.24

Git SHA: bdd57848d

Go Version: go1.12.17

Go OS/Arch: linux/amd64

6. Register the etcd service (run on all three master nodes)

- Run on all three master nodes

- The script reads the hostname and IP into variables, so the same file registers the service on each master node

[root@k8s-m-01 ~]# cat etcd.sh

# 1. Create the configuration directory

mkdir -pv /etc/kubernetes/conf/etcd &&\

# 2. Define the variables

ETCD_NAME=`hostname`

INTERNAL_IP=`hostname -i`

INITIAL_CLUSTER=k8s-m-01=https://172.16.1.51:2380,k8s-m-02=https://172.16.1.52:2380,k8s-m-03=https://172.16.1.53:2380

# 3. Write the systemd unit file (inside the unquoted heredoc, \\ produces a single line-continuation backslash in the file)

cat << EOF | sudo tee /usr/lib/systemd/system/etcd.service
[Unit]
Description=etcd
Documentation=https://github.com/coreos

[Service]
ExecStart=/usr/local/bin/etcd \\
  --name ${ETCD_NAME} \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth \\
  --initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls https://${INTERNAL_IP}:2379 \\
  --initial-cluster-token etcd-cluster \\
  --initial-cluster ${INITIAL_CLUSTER} \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

# 4. Start the etcd service

systemctl enable --now etcd.service

# 5. Verify the etcd service

systemctl status etcd.service

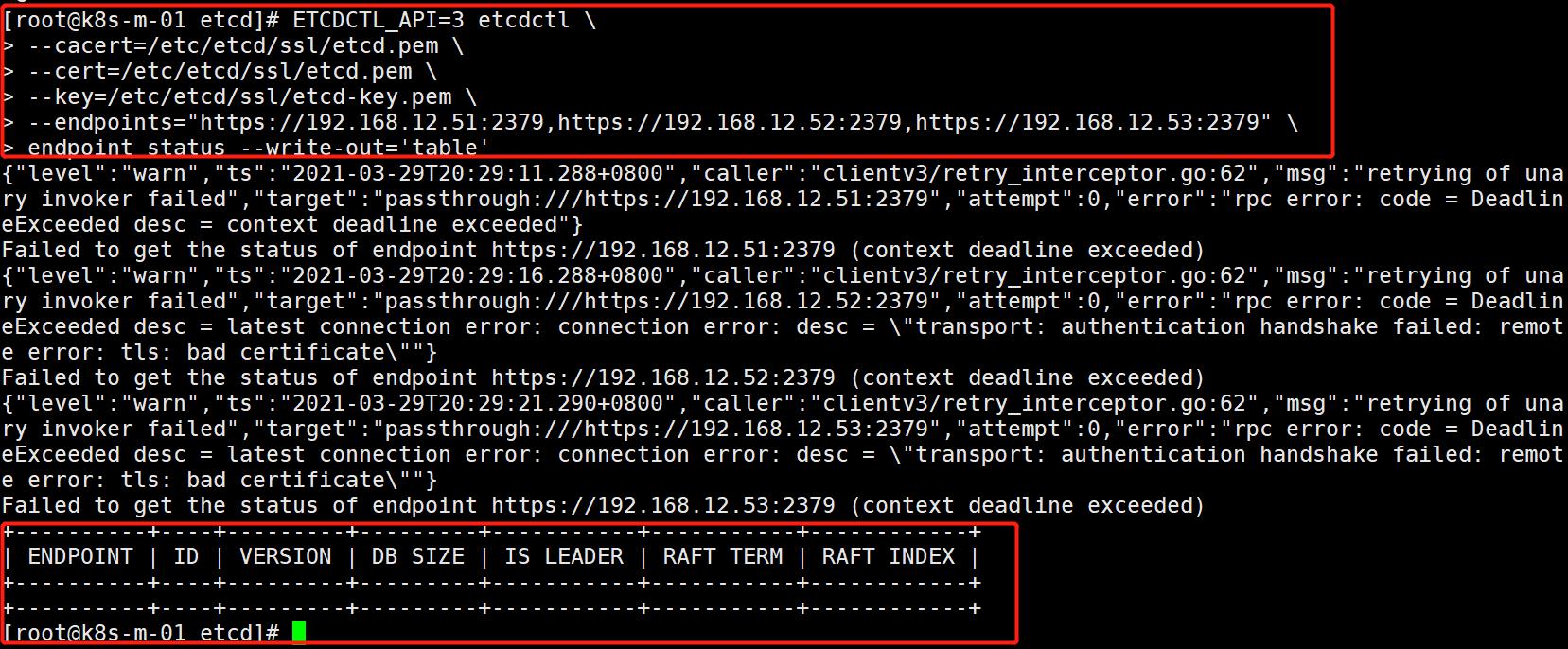
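The INITIAL_CLUSTER value in etcd.sh is typed out by hand, which is easy to get wrong when members are added or renamed. A minimal sketch that derives the same string from name=IP pairs follows. The function name is illustrative; the peer port 2380 and the https scheme are taken from the script.

```shell
#!/bin/bash
# build_initial_cluster NAME=IP...: join the members into the comma-separated
# "name=https://ip:2380" list that etcd expects for --initial-cluster.
build_initial_cluster() {
    local out="" pair name ip
    for pair in "$@"; do
        name="${pair%%=*}"   # text before the first '='
        ip="${pair#*=}"      # text after the first '='
        out="${out:+$out,}${name}=https://${ip}:2380"
    done
    echo "$out"
}

build_initial_cluster k8s-m-01=172.16.1.51 k8s-m-02=172.16.1.52 k8s-m-03=172.16.1.53
```

In the script this could be used as `INITIAL_CLUSTER=$(build_initial_cluster ...)`.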

7. Test the etcd service

- Run on a single master node (e.g. master01)

# Method 1

ETCDCTL_API=3 etcdctl \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints="https://172.16.1.51:2379,https://172.16.1.52:2379,https://172.16.1.53:2379" \
endpoint status --write-out='table'

# Result

+--------------------------+------------------+---------+---------+-----------+-----------+------------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |

+--------------------------+------------------+---------+---------+-----------+-----------+------------+

| https://172.16.1.51:2379 | 2760f98de9dc762 | 3.3.24 | 20 kB | true | 55 | 9 |

| https://172.16.1.52:2379 | 18273711b3029818 | 3.3.24 | 20 kB | false | 55 | 9 |

| https://172.16.1.53:2379 | f42951486b449d48 | 3.3.24 | 20 kB | false | 55 | 9 |

+--------------------------+------------------+---------+---------+-----------+-----------+------------+

# Method 2

ETCDCTL_API=3 etcdctl \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
--endpoints="https://172.16.1.51:2379,https://172.16.1.52:2379,https://172.16.1.53:2379" \
member list --write-out='table'

# Result

+------------------+---------+----------+--------------------------+--------------------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS |

+------------------+---------+----------+--------------------------+--------------------------+

| 2760f98de9dc762 | started | k8s-m-01 | https://172.16.1.51:2380 | https://172.16.1.51:2379 |

| 18273711b3029818 | started | k8s-m-02 | https://172.16.1.52:2380 | https://172.16.1.52:2379 |

| f42951486b449d48 | started | k8s-m-03 | https://172.16.1.53:2380 | https://172.16.1.53:2379 |

+------------------+---------+----------+--------------------------+--------------------------+

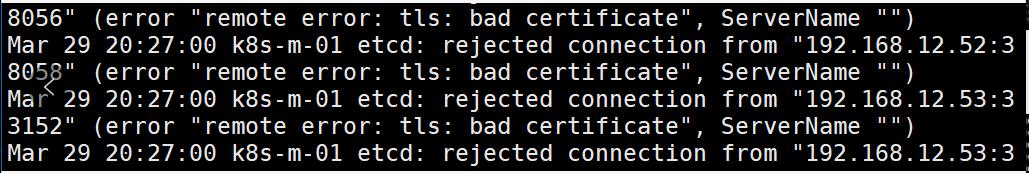
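A healthy cluster reports exactly one member with IS LEADER set to true in the `endpoint status` table. The sketch below counts the leaders from that table so the check can be scripted. The function name is illustrative, and it assumes the column layout shown in the sample output above, where IS LEADER is the fifth column.

```shell
#!/bin/bash
# count_leaders: read an "endpoint status --write-out=table" table on stdin
# and print how many rows have IS LEADER equal to true.
count_leaders() {
    awk -F'|' '
        { gsub(/[[:space:]]/, "", $6) }   # $6 is IS LEADER ($1 is empty)
        $6 == "true" { n++ }
        END { print n + 0 }
    '
}

# Usage: append "| count_leaders" to the endpoint status command shown
# in the first method above; a healthy cluster should print 1.
```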

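Note:

If the test fails with the error shown in the figure, clear the cached data and restart etcd. This deletes the member's stored data, so only do it on a freshly initialized cluster that holds nothing you need to keep.

[root@k8s-m-01 ~]# cd /var/lib/etcd/

[root@k8s-m-01 etcd]# ll

total 0

drwx------ 4 root root 29 Mar 29 20:37 member

[root@k8s-m-01 etcd]# rm -rf member/ # delete the cached data

[root@k8s-m-01 etcd]#

[root@k8s-m-01 etcd]# systemctl start etcd

[root@k8s-m-01 etcd]# systemctl status etcd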
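Deleting the member directory cannot be undone. A more cautious variant, sketched below, moves the directory aside with a timestamp instead of removing it. The function name is illustrative, and the default path is the --data-dir used in this guide.

```shell
#!/bin/bash
# reset_etcd_member [DATA_DIR]: move DATA_DIR/member to a timestamped backup
# instead of deleting it. Does nothing if there is no member directory.
reset_etcd_member() {
    local data_dir="${1:-/var/lib/etcd}"
    local backup="${data_dir}/member.bak.$(date +%Y%m%d%H%M%S)"
    if [ ! -d "$data_dir/member" ]; then
        echo "nothing to reset in $data_dir"
        return 0
    fi
    mv "$data_dir/member" "$backup" && echo "moved member/ to $backup"
}

# On a node (as root), with etcd stopped first:
# systemctl stop etcd && reset_etcd_member /var/lib/etcd && systemctl start etcd
```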

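VI. Deploy the master nodes

The goal of this part is to get every component on the master nodes deployed successfully.

kube-apiserver, controller manager, scheduler, flannel, etcd, kubelet, kube-proxy, DNS

1. Cluster planning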

172.16.1.51 k8s-m-01 m1

172.16.1.52 k8s-m-02 m2

172.16.1.53 k8s-m-03 m3

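# kube-apiserver, controller manager, scheduler, flannel, etcd, kubelet, kube-proxy, DNS

I. Create certificates

Run on any one master node (e.g. master01)

1. Create the cluster CA certificate

The master nodes are the most important part of the cluster; they run the most components and are the most complex to deploy.

All of the following certificates are generated under /opt/cert/k8s.

1) Create the cluster CA certificate

Create the certificates used between the cluster components

mkdir -p /opt/cert/k8s

cd /opt/cert/k8s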

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

2) Create the root certificate signing request

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

# 1. Check the files created so far

[root@k8s-m-01 k8s]# ll

total 8

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

3) Generate the root certificate

[root@k8s-m-01 k8s]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2021/07/16 19:17:09 [INFO] generating a new CA key and certificate from CSR # generation log

2021/07/16 19:17:09 [INFO] generate received request

2021/07/16 19:17:09 [INFO] received CSR

2021/07/16 19:17:09 [INFO] generating key: rsa-2048

2021/07/16 19:17:10 [INFO] encoded CSR

2021/07/16 19:17:10 [INFO] signed certificate with serial number 148240958746672103928071367692758832732603343709

[root@k8s-m-01 k8s]# ll # list the certificates

total 20

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

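# The CA certificate is used to sign the ordinary certificates

2. Create the ordinary cluster certificates

That is, the certificates used between the individual cluster components

1) Create the kube-apiserver certificate

1> Create the certificate signing request

mkdir -p /opt/cert/k8s

cd /opt/cert/k8s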

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"172.16.1.51",

"172.16.1.52",

"172.16.1.53",

"172.16.1.54",

"172.16.1.55",

"172.16.1.56",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

2> Generate the certificate

[root@k8s-m-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2021/07/16 19:19:37 [INFO] generate received request # generation log

2021/07/16 19:19:37 [INFO] received CSR

2021/07/16 19:19:37 [INFO] generating key: rsa-2048

2021/07/16 19:19:37 [INFO] encoded CSR

2021/07/16 19:19:37 [INFO] signed certificate with serial number 526999021546243182432347319587302894152755417201

2021/07/16 19:19:37 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 k8s]# ll # list the generated files

total 36

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem
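The `10.96.0.1` entry in the hosts list of server-csr.json is the cluster IP of the built-in `kubernetes` Service, which is always the first address of the service CIDR. The guide does not state the service CIDR up to this point, so the `10.96.0.0/12` used below is an assumption inferred from that address. The sketch derives the first service IP from a CIDR given as its network address; the function name is illustrative.

```shell
#!/bin/bash
# first_service_ip CIDR: print the first usable address of a service CIDR.
# Assumes CIDR is written with its network address, e.g. 10.96.0.0/12.
first_service_ip() {
    local net="${1%/*}"        # drop the prefix length
    local last="${net##*.}"    # last octet of the network address
    echo "${net%.*}.$(( last + 1 ))"
}

first_service_ip 10.96.0.0/12   # prints 10.96.0.1
```

If the apiserver is later started with a different service CIDR, the certificate's hosts list needs the first address of that range instead.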

2) Create the controller-manager certificate

1> Create the certificate signing request

[root@k8s-m-01 k8s]# pwd

/opt/cert/k8s

cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"172.16.1.51",

"172.16.1.52",

"172.16.1.53",

"172.16.1.54",

"172.16.1.55",

"172.16.1.56"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

EOF

2> Generate the certificate

# The CA paths must be correct, otherwise generation fails!

[root@k8s-m-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2021/07/16 19:24:21 [INFO] generate received request # generation log

2021/07/16 19:24:21 [INFO] received CSR

2021/07/16 19:24:21 [INFO] generating key: rsa-2048

2021/07/16 19:24:21 [INFO] encoded CSR

2021/07/16 19:24:21 [INFO] signed certificate with serial number 105819922871201435896062879045495573023643038916

2021/07/16 19:24:21 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 k8s]# ll # list the certificates

total 52

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1163 Jul 16 19:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 493 Jul 16 19:23 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Jul 16 19:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1497 Jul 16 19:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem

3) Create the kube-scheduler certificate

1> Create the certificate signing request

[root@k8s-m-01 k8s]# pwd

/opt/cert/k8s

cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"172.16.1.51",

"172.16.1.52",

"172.16.1.53",

"172.16.1.54",

"172.16.1.55",

"172.16.1.56"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

EOF

2> Generate the certificate

[root@k8s-m-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2021/07/16 19:29:45 [INFO] generate received request # generation log

2021/07/16 19:29:45 [INFO] received CSR

2021/07/16 19:29:45 [INFO] generating key: rsa-2048

2021/07/16 19:29:45 [INFO] encoded CSR

2021/07/16 19:29:45 [INFO] signed certificate with serial number 626840840439558052435621714466927492785323876680

2021/07/16 19:29:45 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 k8s]# ll # list the certificates

total 68

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1163 Jul 16 19:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 493 Jul 16 19:23 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Jul 16 19:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1497 Jul 16 19:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1135 Jul 16 19:29 kube-scheduler.csr

-rw-r--r-- 1 root root 473 Jul 16 19:29 kube-scheduler-csr.json

-rw------- 1 root root 1679 Jul 16 19:29 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1468 Jul 16 19:29 kube-scheduler.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem

4) Create the kube-proxy certificate

1> Create the certificate signing request

[root@k8s-m-01 k8s]# pwd

/opt/cert/k8s

cat > kube-proxy-csr.json << EOF

{

"CN":"system:kube-proxy",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:kube-proxy",

"OU":"System"

}

]

}

EOF

2> Generate the certificate

[root@k8s-m-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2021/07/16 19:31:42 [INFO] generate received request # generation log

2021/07/16 19:31:42 [INFO] received CSR

2021/07/16 19:31:42 [INFO] generating key: rsa-2048

2021/07/16 19:31:42 [INFO] encoded CSR

2021/07/16 19:31:42 [INFO] signed certificate with serial number 640459339169399647496256803266992860728910371233

2021/07/16 19:31:42 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 k8s]# ll # list the certificates

total 84

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1163 Jul 16 19:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 493 Jul 16 19:23 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Jul 16 19:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1497 Jul 16 19:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1029 Jul 16 19:31 kube-proxy.csr

-rw-r--r-- 1 root root 294 Jul 16 19:31 kube-proxy-csr.json

-rw------- 1 root root 1679 Jul 16 19:31 kube-proxy-key.pem

-rw-r--r-- 1 root root 1383 Jul 16 19:31 kube-proxy.pem

-rw-r--r-- 1 root root 1135 Jul 16 19:29 kube-scheduler.csr

-rw-r--r-- 1 root root 473 Jul 16 19:29 kube-scheduler-csr.json

-rw------- 1 root root 1679 Jul 16 19:29 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1468 Jul 16 19:29 kube-scheduler.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem

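5) Create the cluster administrator certificate

1> Create the certificate signing request

# So that the cluster client tools can access the cluster securely, create a certificate for the client that grants it full cluster privileges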

[root@k8s-m-01 k8s]# pwd

/opt/cert/k8s

cat > admin-csr.json << EOF

{

"CN":"admin",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

2> Generate the certificate

[root@k8s-m-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2021/07/16 19:33:28 [INFO] generate received request # generation log

2021/07/16 19:33:28 [INFO] received CSR

2021/07/16 19:33:28 [INFO] generating key: rsa-2048

2021/07/16 19:33:28 [INFO] encoded CSR

2021/07/16 19:33:28 [INFO] signed certificate with serial number 208208770401522948648550441130579134081563117219

2021/07/16 19:33:28 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 k8s]# ll # list the certificates

total 100

-rw-r--r-- 1 root root 1009 Jul 16 19:33 admin.csr

-rw-r--r-- 1 root root 260 Jul 16 19:33 admin-csr.json

-rw------- 1 root root 1679 Jul 16 19:33 admin-key.pem

-rw-r--r-- 1 root root 1363 Jul 16 19:33 admin.pem

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1163 Jul 16 19:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 493 Jul 16 19:23 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Jul 16 19:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1497 Jul 16 19:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1029 Jul 16 19:31 kube-proxy.csr

-rw-r--r-- 1 root root 294 Jul 16 19:31 kube-proxy-csr.json

-rw------- 1 root root 1679 Jul 16 19:31 kube-proxy-key.pem

-rw-r--r-- 1 root root 1383 Jul 16 19:31 kube-proxy.pem

-rw-r--r-- 1 root root 1135 Jul 16 19:29 kube-scheduler.csr

-rw-r--r-- 1 root root 473 Jul 16 19:29 kube-scheduler-csr.json

-rw------- 1 root root 1679 Jul 16 19:29 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1468 Jul 16 19:29 kube-scheduler.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem
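The five component certificates above are all generated with the same cfssl command, differing only in the name of the CSR file and the output prefix. The sketch below prints that command for each name so the pattern is visible in one place. The function name is illustrative, and the commands are only printed, not run.

```shell
#!/bin/bash
# gencert_cmd NAME: print the cfssl command that signs NAME-csr.json with the
# cluster CA and writes NAME.pem, NAME-key.pem and NAME.csr.
gencert_cmd() {
    local name="$1"
    echo "cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes ${name}-csr.json | cfssljson -bare ${name}"
}

# Names used in this guide, in the order they were generated
for n in server kube-controller-manager kube-scheduler kube-proxy admin; do
    gencert_cmd "$n"
done
```

Piping the loop's output to `bash` inside /opt/cert/k8s would run all five commands, assuming cfssl and cfssljson are on the PATH.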

6) Distribute the certificates

# Certificates required on the master nodes

1. ca, kube-apiserver

2. kube-controller-manager

3. kube-scheduler

4. User certificate

5. etcd certificate

1> Distribute the certificates to the other master nodes (master02, master03)

[root@k8s-m-01 /opt/cert/k8s]# mkdir -pv /etc/kubernetes/ssl

[root@k8s-m-01 /opt/cert/k8s]# cp -p ./{ca*pem,server*pem,kube-controller-manager*pem,kube-scheduler*.pem,kube-proxy*pem,admin*.pem} /etc/kubernetes/ssl # keep a copy under /etc/kubernetes/ssl

[root@k8s-m-01 /opt/cert/k8s]# for i in m1 m2 m3;do

ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

scp /etc/kubernetes/ssl/* root@$i:/etc/kubernetes/ssl

done
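After the copy it can help to confirm that every certificate and key actually arrived. The following is a minimal sketch, not part of the original procedure: `missing_certs` is a hypothetical helper name, and the file list is taken from the `cp` pattern above.

```shell
#!/usr/bin/env bash
# Print every expected certificate or key file that is absent (or empty)
# in the given directory. Defaults to /etc/kubernetes/ssl.
# Returns 0 when nothing is missing, 1 otherwise.
missing_certs() {
  local dir="${1:-/etc/kubernetes/ssl}"
  local name f missing=0
  for name in ca server kube-controller-manager kube-scheduler kube-proxy admin; do
    for f in "${name}.pem" "${name}-key.pem"; do
      if [ ! -s "${dir}/${f}" ]; then
        echo "${f}"
        missing=1
      fi
    done
  done
  return "${missing}"
}
```

Running `missing_certs` with no arguments on a master node prints nothing when all twelve files are present under /etc/kubernetes/ssl.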

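VII. Download the packages and write the configuration files

Run the following on one master node only (for example, master01).

1. Download the packages and distribute the components

1) Download the packages (on master01)

# 1. Create the download directory

mkdir -p /opt/data

cd /opt/data

# 2. Download the binary components

Option 1:

## 1) Download the server package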

[root@k8s-m-01 /opt/data]# wget https://dl.k8s.io/v1.18.8/kubernetes-server-linux-amd64.tar.gz

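Option 2:

## 2) Copy the packages out of a container image (use this if the download fails)

[root@k8s-m-01 /opt/data]# docker run -it registry.cn-hangzhou.aliyuncs.com/k8sos/k8s:v1.18.8.1

Option 3:

## 3) Download from the author's blog (use this if the download fails)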

[root@k8s-m-01 /opt/data]# wget http://www.mmin.xyz:81/package/k8s/kubernetes-server-linux-amd64.tar.gz

# 3. Distribute the components

[root@k8s-m-01 ~]# cd /opt/data/

[root@k8s-m-01 data]# ll

total 0

[root@k8s-m-01 data]# docker cp 4511c1146868:kubernetes-server-linux-amd64.tar.gz . # copy the remaining packages the same way

[root@k8s-m-01 data]# docker cp 4511c1146868:kubernetes-client-linux-amd64.tar.gz .

[root@k8s-m-01 data]# ll

total 487492

-rw-r--r-- 1 root root 14503878 Aug 18 2020 etcd-v3.3.24-linux-amd64.tar.gz

-rw-r--r-- 1 root root 9565743 Jan 29 2019 flannel-v0.11.0-linux-amd64.tar.gz

-rw-r--r-- 1 root root 13237066 Aug 14 2020 kubernetes-client-linux-amd64.tar.gz

-rw-r--r-- 1 root root 97933232 Aug 14 2020 kubernetes-node-linux-amd64.tar.gz

-rw-r--r-- 1 root root 363943527 Aug 14 2020 kubernetes-server-linux-amd64.tar.gz
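Because the archive may come from a mirror, a container image, or a personal site, it is worth checking its digest before unpacking. This is a sketch under assumptions: `verify_sha256` is a hypothetical helper, and the original gives no checksum, so the expected value has to come from a source you trust.

```shell
#!/usr/bin/env bash
# Compare a file's SHA-256 digest with an expected hex value.
# Usage: verify_sha256 FILE EXPECTED_HEX_DIGEST
# Returns 0 on a match, 1 on a mismatch (including an unreadable file).
verify_sha256() {
  local file="$1" expected="$2" actual
  actual=$(sha256sum "${file}" 2>/dev/null | cut -d' ' -f1)
  if [ -n "${actual}" ] && [ "${actual}" = "${expected}" ]; then
    echo "OK: ${file}"
  else
    echo "MISMATCH: ${file} (got '${actual}')" >&2
    return 1
  fi
}
```

If the publisher lists a different algorithm, for example SHA-512, swapping `sha256sum` for `sha512sum` gives the same check.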

[root@k8s-m-01 /opt/data]# tar -xf kubernetes-server-linux-amd64.tar.gz

[root@k8s-m-01 /opt/data]# cd kubernetes/server/bin

[root@k8s-m-01 bin]# for i in m1 m2 m3;do scp kube-apiserver kube-scheduler kubectl kube-controller-manager kubelet kube-proxy root@$i:/usr/local/bin/;done

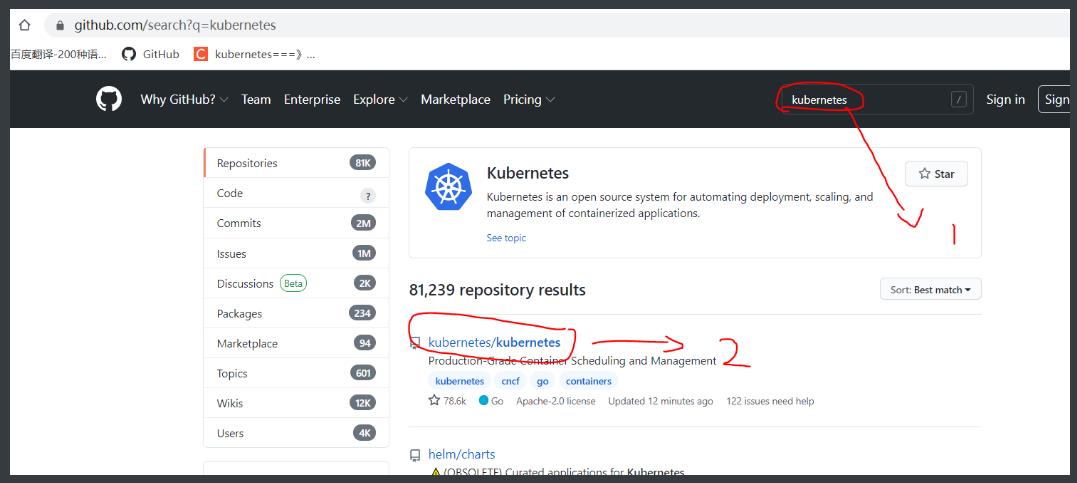

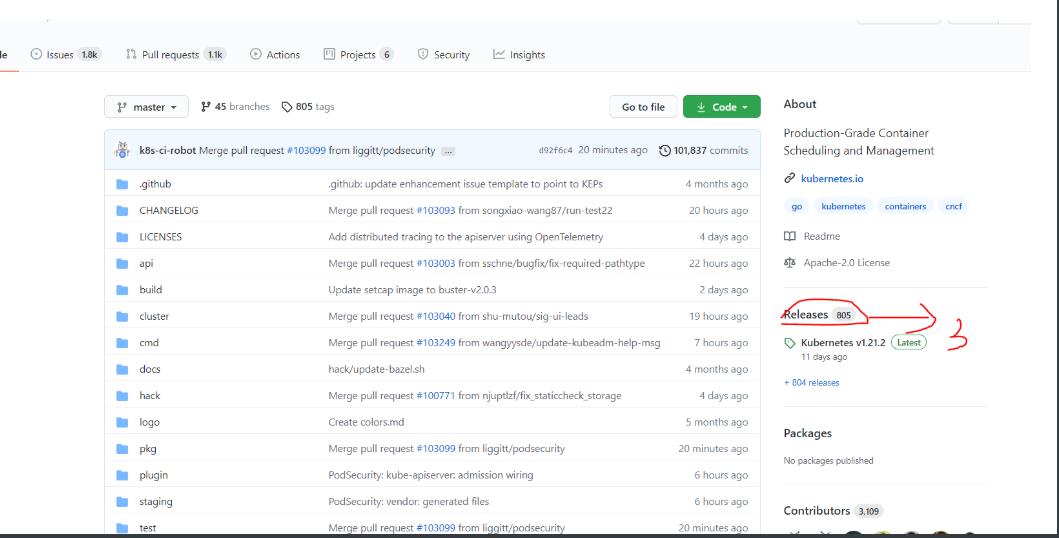

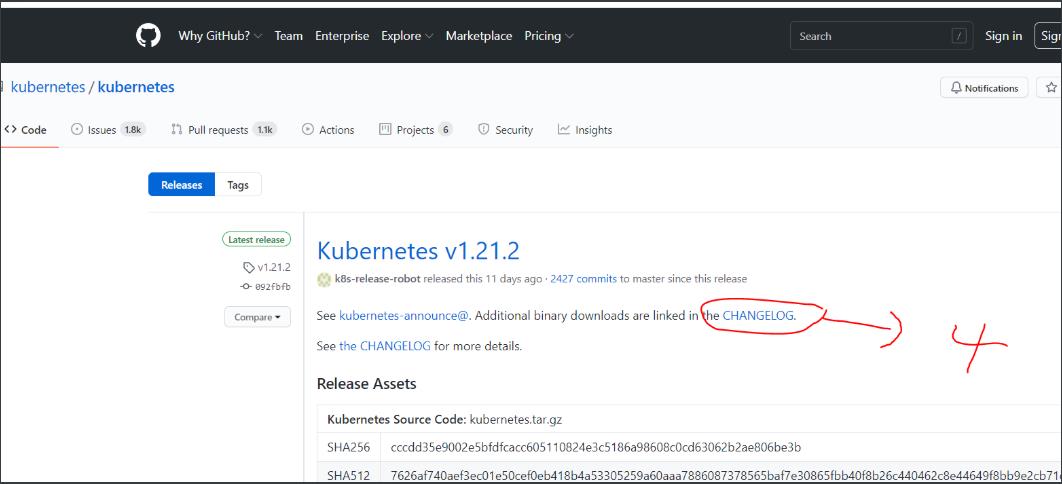

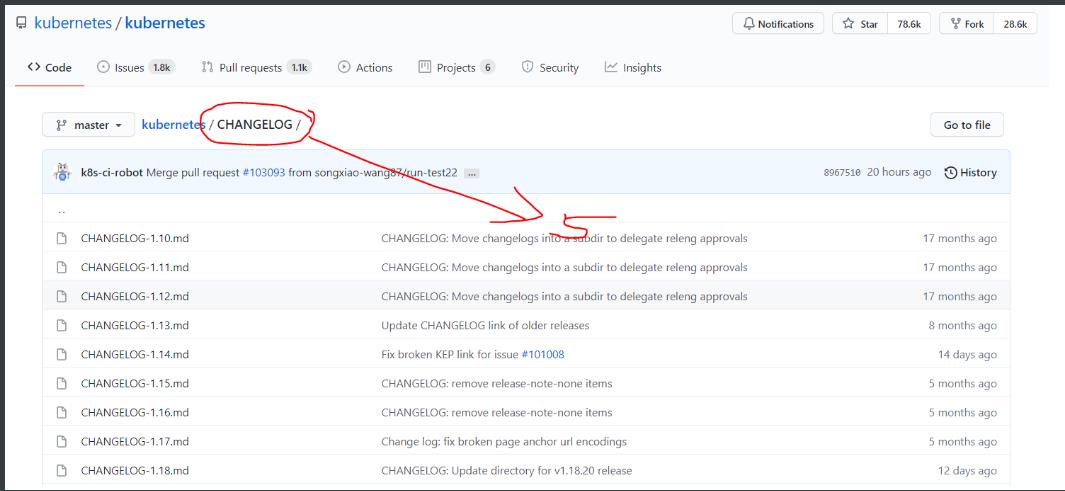

Installing kubernetes-server from github.com

2) Create the cluster configuration files

1> Create kube-controller-manager.kubeconfig (on master01)

# 1. Kubernetes components read a kubeconfig file that records the cluster, user, namespace, and authentication settings

cd /opt/cert/k8s

# 2. Create kube-controller-manager.kubeconfig

export KUBE_APISERVER="https://172.16.1.56:8443" # VIP address

# 3. Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-controller-manager.kubeconfig

# 4. Set the client credentials

kubectl config set-credentials "kube-controller-manager" \
  --client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem \
  --client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

# 5. Set the context parameters (the context ties the cluster parameters to the user parameters)

kubectl config set-context default \
  --cluster=kubernetes \
  --user="kube-controller-manager" \
  --kubeconfig=kube-controller-manager.kubeconfig

# 6. Set the default context

kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig
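A quick way to sanity-check the generated file is to look for the fields the commands above should have written. The sketch below assumes the usual kubeconfig YAML layout (`server:`, `current-context:`, and the `*-data` keys produced by `--embed-certs=true`); `kubeconfig_summary` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the API server URL and current context recorded in a kubeconfig,
# then report whether each piece of certificate data was embedded.
# Usage: kubeconfig_summary FILE
kubeconfig_summary() {
  local file="$1" key
  awk '$1 == "server:" || $1 == "current-context:" {print $1, $2}' "${file}"
  for key in certificate-authority-data client-certificate-data client-key-data; do
    if grep -q "${key}:" "${file}"; then
      echo "${key}: embedded"
    else
      echo "${key}: MISSING"
    fi
  done
}
```

For example, `kubeconfig_summary kube-controller-manager.kubeconfig` should show the VIP address as the server and `default` as the current context.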

2> Create kube-scheduler.kubeconfig (on master01)

# 1. Create kube-scheduler.kubeconfig

export KUBE_APISERVER="https://172.16.1.56:8443"

# 2. Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-scheduler.kubeconfig

# 3. Set the client credentials

kubectl config set-credentials "kube-scheduler" \
  --client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem \
  --client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig

# 4. Set the context parameters (the context ties the cluster parameters to the user parameters)

kubectl config set-context default \
  --cluster=kubernetes \
  --user="kube-scheduler" \
  --kubeconfig=kube-scheduler.kubeconfig

# 5. Set the default context

kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

3> Create the kube-proxy.kubeconfig cluster configuration file (on master01)

# 1. Create the kube-proxy.kubeconfig cluster configuration file

export KUBE_APISERVER="https://172.16.1.56:8443"

# 2. Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

# 3. Set the client credentials

kubectl config set-credentials "kube-proxy" \
  --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
  --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

# 4. Set the context parameters (the context ties the cluster parameters to the user parameters)

kubectl config set-context default \
  --cluster=kubernetes \
  --user="kube-proxy" \
  --kubeconfig=kube-proxy.kubeconfig

# 5. Set the default context

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
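The three kubeconfig files above are built with the same four commands and differ only in the component name, so the sequence can be wrapped in a function. This is a sketch rather than the author's method: `make_kubeconfig`, `KUBECTL`, and `SSL_DIR` are names introduced here for illustration, and setting `KUBECTL=echo` only prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Build NAME.kubeconfig from NAME.pem and NAME-key.pem using the same four
# kubectl commands as the manual steps. KUBECTL and SSL_DIR are hypothetical
# overrides added for this sketch; KUBE_APISERVER must already be exported.
make_kubeconfig() {
  local name="$1"
  local kubectl="${KUBECTL:-kubectl}"
  local ssl_dir="${SSL_DIR:-/etc/kubernetes/ssl}"
  local out="${name}.kubeconfig"

  if [ -z "${KUBE_APISERVER:-}" ]; then
    echo "KUBE_APISERVER is not set" >&2
    return 1
  fi

  "${kubectl}" config set-cluster kubernetes \
    --certificate-authority="${ssl_dir}/ca.pem" \
    --embed-certs=true \
    --server="${KUBE_APISERVER}" \
    --kubeconfig="${out}" &&
  "${kubectl}" config set-credentials "${name}" \
    --client-certificate="${ssl_dir}/${name}.pem" \
    --client-key="${ssl_dir}/${name}-key.pem" \
    --embed-certs=true \
    --kubeconfig="${out}" &&
  "${kubectl}" config set-context default \
    --cluster=kubernetes \
    --user="${name}" \
    --kubeconfig="${out}" &&
  "${kubectl}" config use-context default --kubeconfig="${out}"
}
```

With `KUBE_APISERVER` exported, `KUBECTL=echo make_kubeconfig kube-proxy` previews the four commands, and `make_kubeconfig kube-proxy` writes kube-proxy.kubeconfig in the current directory.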

4> Create the cluster configuration file for the super administrator (on master01)

# 1. Create the super administrator cluster configuration file

export KUBE_APISERVER="https://172.16.1.56:8443"

# 2. Set the cluster parameters

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=admin.kubeconfig

# 3. Set the client credentials

kubectl config set-credentials "admin" \
  --client-certifica