Managing the Streaming Log Collection, Processing, and Storage Pipeline with a Script

Posted by Mr.zhou_Zxy

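Using a script to manage the log collection process

The goal is to load data into hdfs through hudi and to create a hive table that stores the data.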

[hdfs,yarn,hive,openresty,frp,zookeeper,kafka,log2hudi]

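1.Flow overview:

First, frp tunnels the incoming data through to openresty.

Next, a lua script in openresty acts as the producer and writes the data to Kafka.

Then code reads the data from Kafka and saves it to hdfs using hudi and Spark Structured Streaming.

Finally, a table is created in hive to make later analysis easier.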
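
Every stage of this flow relies on a command-line tool being installed on the host, so a quick pre-flight check can save a failed start. The following is a minimal sketch, not part of the original script: the function name `missing_commands` is hypothetical, and the tool names in the usage line are the ones the control script calls.

```shell
#!/bin/bash
# Sketch (assumption, not from the original article):
# print every command name from the arguments that cannot be found on PATH.
missing_commands() {
  local name
  for name in "$@"; do
    # command -v succeeds only when the name resolves to something runnable
    command -v "${name}" >/dev/null 2>&1 || echo "${name}"
  done
}
```

For example, `missing_commands start-dfs.sh start-yarn.sh openresty yarn` prints nothing when all four tools are available and otherwise prints one missing name per line.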

2.Real_time_data_warehouse.sh

#!/bin/bash

# filename:Real_time_data_warehouse.sh

# author:zxy

# date:2021-07-19

# Use this script to start the hdfs and yarn daemons, the hive service, the frp tunnel, openresty data collection, and the Kafka services, and to run the log2hudi script

# 1.start-dfs.sh

# start-yarn.sh

# stop-yarn.sh

# stop-dfs.sh

# 2.sh /opt/apps/scripts/hiveScripts/start-supervisord-hive.sh start

# sh /opt/apps/scripts/hiveScripts/start-supervisord-hive.sh stop

# 3.sh /opt/apps/scripts/frpScripts/frp-agent.sh start

# sh /opt/apps/scripts/frpScripts/frp-agent.sh stop

# 4.openresty -p /opt/apps/collect-app/

# openresty -p /opt/apps/collect-app/ -s stop

# 5.sh /opt/apps/scripts/kafkaScripts/start-kafka.sh zookeeper

# sh /opt/apps/scripts/kafkaScripts/start-kafka.sh kafka

# sh /opt/apps/scripts/kafkaScripts/start-kafka.sh stopk

# sh /opt/apps/scripts/kafkaScripts/start-kafka.sh stopz

# 6.sh /opt/apps/scripts/log2hudi.sh start

# sh /opt/apps/scripts/log2hudi.sh stop

# JDK environment

JAVA_HOME=/data/apps/jdk1.8.0_261

# Hadoop environment

HADOOP_HOME=/data/apps/hadoop-2.8.1

# Hive environment

HIVE_HOME=/data/apps/hive-1.2.1

# Kafka environment

KAFKA_HOME=/data/apps/kafka_2.11-2.4.1

# Frp tunnel environment

FRP_HOME=/data/apps/frp

# Openresty environment

COLLECT_HOME=/data/apps/collect-app

# Script directory

SCRIPTS=/opt/apps/scripts

# Command-line arguments: service name and action (start/stop)

CMD=$1

DONE=$2

## Help function

usage() {

echo "usage:"

echo "Real_time_data_warehouse.sh hdfs start/stop"

echo "Real_time_data_warehouse.sh yarn start/stop"

echo "Real_time_data_warehouse.sh hive start/stop"

echo "Real_time_data_warehouse.sh frp start/stop"

echo "Real_time_data_warehouse.sh openresty start/stop"

echo "Real_time_data_warehouse.sh zookeeper start/stop"

echo "Real_time_data_warehouse.sh kafka start/stop"

echo "Real_time_data_warehouse.sh log2hudi start/stop"

echo "description:"

echo " hdfs:start/stop hdfsService"

echo " yarn:start/stop yarnService"

echo " hive:start/stop hiveService"

echo " frp:start/stop frpService"

echo " openresty:start/stop openrestyService"

echo " zookeeper:start/stop zookeeperService"

echo " kafka:start/stop kafkaService"

echo " log2hudi:start/stop log2hudiService"

exit 0

}

# 1.Manage the hdfs service

if [ "${CMD}" = "hdfs" ] && [ "${DONE}" = "start" ]; then

# Start hdfs

start-dfs.sh

echo " hdfs started successfully "

elif [ "${CMD}" = "hdfs" ] && [ "${DONE}" = "stop" ]; then

# Stop the hdfs service

stop-dfs.sh

echo " hdfs stopped successfully "

# 2.Manage the yarn service

elif [ "${CMD}" = "yarn" ] && [ "${DONE}" = "start" ]; then

# Start yarn

start-yarn.sh

echo " yarn started successfully "

elif [ "${CMD}" = "yarn" ] && [ "${DONE}" = "stop" ]; then

# Stop the yarn service

stop-yarn.sh

echo " yarn stopped successfully "

# 3.Manage the hive service

elif [ "${CMD}" = "hive" ] && [ "${DONE}" = "start" ]; then

# Start hive

sh ${SCRIPTS}/hiveScripts/start-supervisord-hive.sh start

echo " hive started successfully "

elif [ "${CMD}" = "hive" ] && [ "${DONE}" = "stop" ]; then

# Stop the hive service

sh ${SCRIPTS}/hiveScripts/start-supervisord-hive.sh stop

echo " hive stopped successfully "

# 4.Manage the frp service

elif [ "${CMD}" = "frp" ] && [ "${DONE}" = "start" ]; then

# Start frp

sh ${SCRIPTS}/frpScripts/frp-agent.sh start

echo " frp started successfully "

elif [ "${CMD}" = "frp" ] && [ "${DONE}" = "stop" ]; then

# Stop the frp service

sh ${SCRIPTS}/frpScripts/frp-agent.sh stop

echo " frp stopped successfully "

# 5.Manage the openresty service

elif [ "${CMD}" = "openresty" ] && [ "${DONE}" = "start" ]; then

# Start openresty

openresty -p ${COLLECT_HOME}

echo " openresty started successfully "

elif [ "${CMD}" = "openresty" ] && [ "${DONE}" = "stop" ]; then

# Stop the openresty service

openresty -p ${COLLECT_HOME} -s stop

echo " openresty stopped successfully "

# 6.Manage the zookeeper and kafka services

elif [ "${CMD}" = "zookeeper" ] && [ "${DONE}" = "start" ]; then

# Start zookeeper

sh ${SCRIPTS}/kafkaScripts/start-kafka.sh zookeeper

echo " zookeeper started successfully "

elif [ "${CMD}" = "zookeeper" ] && [ "${DONE}" = "stop" ]; then

# Stop the zookeeper service

sh ${SCRIPTS}/kafkaScripts/start-kafka.sh stopz

echo " zookeeper stopped successfully "

elif [ "${CMD}" = "kafka" ] && [ "${DONE}" = "start" ]; then

# Start the kafka service

sh ${SCRIPTS}/kafkaScripts/start-kafka.sh kafka

echo " Kafka started successfully "

elif [ "${CMD}" = "kafka" ] && [ "${DONE}" = "stop" ]; then

# Stop the kafka service

sh ${SCRIPTS}/kafkaScripts/start-kafka.sh stopk

echo " Kafka stopped successfully "

# 7.Manage the log2hudi service

elif [ "${CMD}" = "log2hudi" ] && [ "${DONE}" = "start" ]; then

# Start log2hudi

sh ${SCRIPTS}/log2hudi.sh start

echo " log2hudi started successfully "

elif [ "${CMD}" = "log2hudi" ] && [ "${DONE}" = "stop" ]; then

# Stop the log2hudi service

sh ${SCRIPTS}/log2hudi.sh stop

echo " log2hudi stopped successfully "

else

usage

fi
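
The if/elif chain above is a lookup table from a service/action pair to a command, and it prints its success message whether or not the command succeeded. As an alternative sketch (not the author's implementation), the same table can be written with `case`, with the message made conditional on the exit status. The function names `resolve_command` and `manage` are hypothetical; the paths and sub-commands are copied from the script, and `frp stop` is assumed to map to `frp-agent.sh stop` as listed in the script's header comments.

```shell
#!/bin/bash
# Sketch only: same service/action table as the if/elif chain, written with case.
SCRIPTS=/opt/apps/scripts
COLLECT_HOME=/data/apps/collect-app

# Print the command for "<service> <action>"; return 1 for an unknown pair.
resolve_command() {
  case "$1:$2" in
    hdfs:start)      echo "start-dfs.sh" ;;
    hdfs:stop)       echo "stop-dfs.sh" ;;
    yarn:start)      echo "start-yarn.sh" ;;
    yarn:stop)       echo "stop-yarn.sh" ;;
    hive:start|hive:stop)
                     echo "sh ${SCRIPTS}/hiveScripts/start-supervisord-hive.sh $2" ;;
    frp:start|frp:stop)
                     echo "sh ${SCRIPTS}/frpScripts/frp-agent.sh $2" ;;
    openresty:start) echo "openresty -p ${COLLECT_HOME}" ;;
    openresty:stop)  echo "openresty -p ${COLLECT_HOME} -s stop" ;;
    zookeeper:start) echo "sh ${SCRIPTS}/kafkaScripts/start-kafka.sh zookeeper" ;;
    zookeeper:stop)  echo "sh ${SCRIPTS}/kafkaScripts/start-kafka.sh stopz" ;;
    kafka:start)     echo "sh ${SCRIPTS}/kafkaScripts/start-kafka.sh kafka" ;;
    kafka:stop)      echo "sh ${SCRIPTS}/kafkaScripts/start-kafka.sh stopk" ;;
    log2hudi:start|log2hudi:stop)
                     echo "sh ${SCRIPTS}/log2hudi.sh $2" ;;
    *)               return 1 ;;
  esac
}

# Run the resolved command; report success only when it exits with status 0.
manage() {
  local cmd
  cmd=$(resolve_command "$1" "$2") || { echo "unknown: $1 $2" >&2; return 2; }
  # Unquoted on purpose: the command string is split into words (paths have no spaces).
  if ${cmd}; then
    echo "$1 $2 succeeded"
  else
    echo "$1 $2 failed" >&2
    return 1
  fi
}
```

Calling `manage hdfs start` runs `start-dfs.sh` and reports the result. Whether a failure is actually detected depends on the helper scripts returning a non-zero exit status, which the article does not show.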

3.Startup test run

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh hdfs start

Starting namenodes on [hadoop]

hadoop: starting namenode, logging to /data/apps/hadoop-2.8.1/logs/hadoop-root-namenode-hadoop.out

localhost: starting datanode, logging to /data/apps/hadoop-2.8.1/logs/hadoop-root-datanode-hadoop.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /data/apps/hadoop-2.8.1/logs/hadoop-root-secondarynamenode-hadoop.out

hdfs started successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh yarn start

starting yarn daemons

starting resourcemanager, logging to /data/apps/hadoop-2.8.1/logs/yarn-root-resourcemanager-hadoop.out

localhost: starting nodemanager, logging to /data/apps/hadoop-2.8.1/logs/yarn-root-nodemanager-hadoop.out

yarn started successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh hive start

● supervisord.service - Process Monitoring and Control Daemon

Loaded: loaded (/usr/lib/systemd/system/supervisord.service; disabled; vendor preset: disabled)

Active: active (running) since 一 2021-07-19 20:24:02 CST; 9ms ago

Process: 88087 ExecStart=/usr/bin/supervisord -c /etc/supervisord.conf (code=exited, status=0/SUCCESS)

Main PID: 88090 (supervisord)

CGroup: /system.slice/supervisord.service

└─88090 /usr/bin/python /usr/bin/supervisord -c /etc/supervisord.conf

7月 19 20:24:02 hadoop systemd[1]: Starting Process Monitoring and Control Daemon...

7月 19 20:24:02 hadoop systemd[1]: Started Process Monitoring and Control Daemon.

hive started successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh openresty start

openresty started successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh frp start

root 80039 0.0 0.0 113184 1460 pts/0 S+ 20:11 0:00 sh Real_time_data_warehouse.sh frp start

root 80040 0.0 0.0 113184 1432 pts/0 S+ 20:11 0:00 sh /opt/apps/scripts/frpScripts/frp-agent.sh start

root 80041 0.0 0.0 11644 4 pts/0 D+ 20:11 0:00 [frpc]

root 80043 0.0 0.0 112728 952 pts/0 S+ 20:11 0:00 grep frp

frp started successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh zookeeper start

zookeeper started successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh kafka start

Kafka started successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh log2hudi start

starting -------------->

log2hudi started successfully

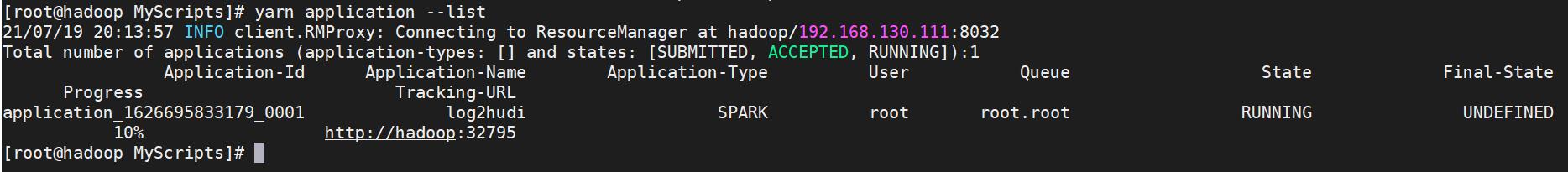

[root@hadoop MyScripts]# yarn application --list

21/07/19 20:13:57 INFO client.RMProxy: Connecting to ResourceManager at hadoop/192.168.130.111:8032

Total number of applications (application-types: [] and states: [SUBMITTED, ACCEPTED, RUNNING]):1

Application-Id Application-Name Application-Type User Queue State Final-State Progress Tracking-URL

application_1626695833179_0001 log2hudi SPARK root root.root RUNNING UNDEFINED 10% http://hadoop:32795

- yarn进程

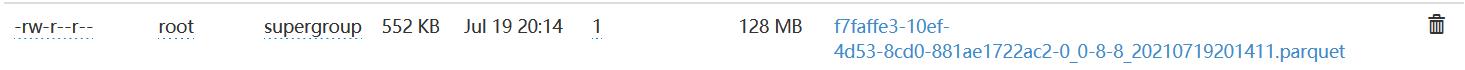

- 日志成功保存到hdfs文件
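
The `yarn application --list` output above can also be checked from a script instead of by eye. The sketch below is an illustration under assumptions: the function name `app_state` is hypothetical, and it relies on the column order shown in the listing (application name in column 2, state in column 6) and on the application name containing no spaces.

```shell
#!/bin/bash
# Sketch (assumption): read `yarn application --list` output on stdin and
# print the State column of the row whose Application-Name equals $1.
app_state() {
  awk -v name="$1" '$2 == name { print $6 }'
}
```

Usage would look like `yarn application --list 2>/dev/null | app_state log2hudi`, which prints the state of the log2hudi job or nothing when no such application is listed.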

4.Shutdown test run

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh log2hudi stop

21/07/19 20:17:50 INFO client.RMProxy: Connecting to ResourceManager at hadoop/192.168.130.111:8032

21/07/19 20:17:52 INFO client.RMProxy: Connecting to ResourceManager at hadoop/192.168.130.111:8032

Killing application application_1626695833179_0001

21/07/19 20:17:53 INFO impl.YarnClientImpl: Killed application application_1626695833179_0001

stop ok

log2hudi stopped successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh kafka stop

kafka stopped successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh zookeeper stop

No zookeeper server to stop

zookeeper stopped successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh openresty stop

openresty stopped successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh frp stop

root 80041 0.3 0.1 713160 12480 pts/0 Sl 20:11 0:01 frpc http --sd zxy -l 8802 -s frp.qfbigdata.com:7001 -u zxy

root 84665 0.0 0.0 113184 1460 pts/0 S+ 20:18 0:00 sh Real_time_data_warehouse.sh frp stop

root 84666 0.0 0.0 113184 1432 pts/0 S+ 20:18 0:00 sh /opt/apps/scripts/frpScripts/frp-agent.sh start

root 84667 0.0 0.0 714312 8124 pts/0 Sl+ 20:18 0:00 frpc http --sd zxy -l 8802 -s frp.qfbigdata.com:7001 -u zxy

root 84669 0.0 0.0 112728 952 pts/0 S+ 20:18 0:00 grep frp

frp stopped successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh hive stop

● supervisord.service - Process Monitoring and Control Daemon

Loaded: loaded (/usr/lib/systemd/system/supervisord.service; disabled; vendor preset: disabled)

Active: inactive (dead)

7月 19 17:33:23 hadoop systemd[1]: Stopping Process Monitoring and Control Daemon...

7月 19 17:33:34 hadoop systemd[1]: Stopped Process Monitoring and Control Daemon.

7月 19 17:33:43 hadoop systemd[1]: Starting Process Monitoring and Control Daemon...

7月 19 17:33:43 hadoop systemd[1]: Started Process Monitoring and Control Daemon.

7月 19 19:46:17 hadoop systemd[1]: Stopping Process Monitoring and Control Daemon...

7月 19 19:46:27 hadoop systemd[1]: Stopped Process Monitoring and Control Daemon.

7月 19 19:57:25 hadoop systemd[1]: Starting Process Monitoring and Control Daemon...

7月 19 19:57:25 hadoop systemd[1]: Started Process Monitoring and Control Daemon.

7月 19 20:19:03 hadoop systemd[1]: Stopping Process Monitoring and Control Daemon...

7月 19 20:19:14 hadoop systemd[1]: Stopped Process Monitoring and Control Daemon.

hive stopped successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh yarn stop

stopping yarn daemons

stopping resourcemanager

localhost: stopping nodemanager

localhost: nodemanager did not stop gracefully after 5 seconds: killing with kill -9

no proxyserver to stop

yarn stopped successfully

[root@hadoop MyScripts]# sh Real_time_data_warehouse.sh hdfs stop

Stopping namenodes on [hadoop]

hadoop: stopping namenode

localhost: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

hdfs stopped successfully

[root@hadoop MyScripts]# jps

85651 Jps
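
An empty `jps` listing only confirms that the JVM daemons are gone; frpc is not a Java process, and the `frp stop` output above still lists frpc processes. The following sketch covers both cases. The function names `remaining_jvm_daemons` and `is_running` are hypothetical, and `pgrep` is assumed to be installed on the host.

```shell
#!/bin/bash
# Sketch (assumption): read `jps` output on stdin and print the name of every
# JVM process other than jps itself.
remaining_jvm_daemons() {
  awk 'NF >= 2 && $2 != "Jps" { print $2 }'
}

# Sketch (assumption): succeed if a process with exactly this name is running.
# Useful for non-JVM tools such as frpc, which jps never lists.
is_running() {
  pgrep -x "$1" >/dev/null 2>&1
}
```

After a shutdown, `jps | remaining_jvm_daemons` should print nothing, and `is_running frpc && echo "frpc is still running"` reports a leftover tunnel client.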

5.Help

# Start the project

sh Real_time_data_warehouse.sh hdfs start

sh Real_time_data_warehouse.sh yarn start

sh Real_time_data_warehouse.sh hive start

sh Real_time_data_warehouse.sh openresty start

sh Real_time_data_warehouse.sh frp start

sh Real_time_data_warehouse.sh zookeeper start

sh Real_time_data_warehouse.sh kafka start

sh Real_time_data_warehouse.sh log2hudi start

# Stop the project

sh Real_time_data_warehouse.sh log2hudi stop

sh Real_time_data_warehouse.sh kafka stop

sh Real_time_data_warehouse.sh zookeeper stop

sh Real_time_data_warehouse.sh openresty stop

sh Real_time_data_warehouse.sh frp stop

sh Real_time_data_warehouse.sh hive stop

sh Real_time_data_warehouse.sh yarn stop

sh Real_time_data_warehouse.sh hdfs stop
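
The two command lists above can be wrapped so the whole project starts or stops with one call. This is a sketch under assumptions, not part of the original article: the names `run_sequence`, `start_all`, `stop_all`, `CONTROL_SCRIPT`, and `DRY_RUN` are hypothetical, and the service order is copied from the lists above.

```shell
#!/bin/bash
# Sketch (assumption): run the start/stop sequences from the help section in order.
CONTROL_SCRIPT=${CONTROL_SCRIPT:-./Real_time_data_warehouse.sh}
START_ORDER="hdfs yarn hive openresty frp zookeeper kafka log2hudi"
STOP_ORDER="log2hudi kafka zookeeper openresty frp hive yarn hdfs"

# run_sequence <action> <service>...
# With DRY_RUN=1 the commands are printed instead of executed.
run_sequence() {
  local action="$1" service
  shift
  for service in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "sh ${CONTROL_SCRIPT} ${service} ${action}"
    elif ! sh "${CONTROL_SCRIPT}" "${service}" "${action}"; then
      echo "failed: ${service} ${action}" >&2
      return 1
    fi
  done
}

# The order variables are left unquoted on purpose so they split into words.
start_all() { run_sequence start ${START_ORDER}; }
stop_all()  { run_sequence stop ${STOP_ORDER}; }
```

Running `DRY_RUN=1; start_all` prints the eight start commands without touching any service. Note that the control script as published ends each branch with `echo`, so it returns 0 even when a service fails; the failure branch here only helps once the script propagates exit codes.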

6.Scripts involved

- start-supervisord-hive.sh: see the hive script usage guide

- frp-agent.sh: depends on your own setup

- start-kafka.sh: see the Kafka script usage guide

- log2hudi.sh: see the log2hudi script usage guide
