Installing Hadoop with High Availability on 3 Virtual Machines

Posted by wyju


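Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers installing highly available Hadoop on three virtual machines, and we hope you find it a useful reference.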

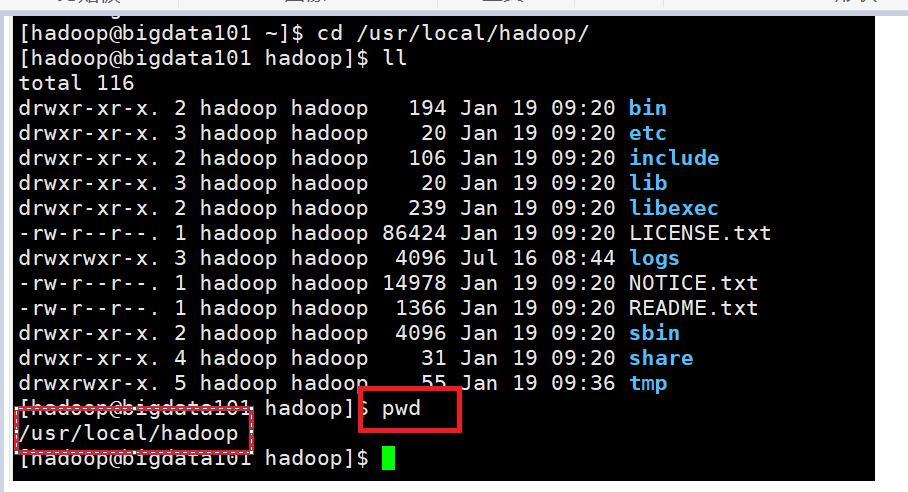

Extract the Hadoop package

1. Extract the Hadoop package into /usr/local

sudo tar -zxf hadoop-2.7.7.tar.gz -C /usr/local

2. Go to /usr/local and rename the directory to hadoop

cd /usr/local/

sudo mv ./hadoop-2.7.7/ ./hadoop

3. Make the current user, hadoop, the owner of the directory.

sudo chown -R hadoop ./hadoop

4. Check the installation path

pwd

5. Configure the environment variables

Open /etc/profile:

sudo vim /etc/profile

Add the following:

#HADOOP_HOME

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

Apply the changes:

source /etc/profile

Check that they took effect:

hadoop version
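If `hadoop version` reports "command not found", the usual cause is that the profile was not sourced in the current shell. The following is a minimal sketch that checks for this. It assumes the three /etc/profile lines above. `check_hadoop_env` is a hypothetical helper, not part of Hadoop.

```shell
#!/usr/bin/env bash
# Sketch: check that HADOOP_HOME is set and that both $HADOOP_HOME/bin and
# $HADOOP_HOME/sbin are on PATH (check_hadoop_env is a hypothetical helper).
check_hadoop_env() {
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set"
    return 1
  fi
  local dir
  for dir in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
    case ":$PATH:" in
      *":$dir:"*) ;;                                   # already on PATH
      *) echo "missing from PATH: $dir"; return 1 ;;
    esac
  done
  echo "ok: HADOOP_HOME=$HADOOP_HOME"
}
```

Usage: `source /etc/profile && check_hadoop_env && hadoop version`.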

Edit the configuration files. All of the files below are in /usr/local/hadoop/etc/hadoop (that is, $HADOOP_HOME/etc/hadoop).

6. Go to Hadoop's configuration directory

cd /usr/local/hadoop/etc/hadoop/

7. Edit the configuration files

7.1 Configure hadoop-env.sh

# export JAVA_HOME=

export JAVA_HOME=/usr/local/lib/jdk1.8.0_212

# Users the start scripts run each daemon as (read by the Hadoop 3.x scripts; Hadoop 2.7.x ignores them)

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export HDFS_JOURNALNODE_USER=root

export HDFS_ZKFC_USER=root
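You can make the same hadoop-env.sh edits without vim. The following is a minimal sketch that sets an `export NAME=VALUE` line and can be re-run safely. If a matching line already exists, even one commented out with `#`, the first match is replaced. Otherwise the line is appended. `set_export` is a hypothetical helper, not a Hadoop tool.

```shell
#!/usr/bin/env bash
# Sketch: set "export NAME=VALUE" in an env file such as hadoop-env.sh.
# Replaces the first existing (possibly commented-out) line, else appends.
# set_export is a hypothetical helper, not part of Hadoop.
set_export() {  # set_export FILE NAME VALUE
  local file=$1 name=$2 value=$3
  local line="export ${name}=${value}"
  if grep -Eq "^#? *export ${name}=" "$file"; then
    awk -v n="$name" -v l="$line" '
      !done && $0 ~ ("^#? *export " n "=") { print l; done = 1; next }
      { print }
    ' "$file" > "$file.tmp"
    # Copy back with cat so the original file keeps its permissions
    cat "$file.tmp" > "$file" && rm -f "$file.tmp"
  else
    printf '%s\n' "$line" >> "$file"
  fi
}
```

Usage: run `set_export hadoop-env.sh JAVA_HOME /usr/local/lib/jdk1.8.0_212` from the configuration directory. Then run `set_export hadoop-env.sh "$v" root` in a loop over the `*_USER` names above.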

7.2 Configure core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Default filesystem: the HDFS nameservice (the "board") named node,

so clients address the HA pair rather than a single NameNode

-->

<property>

<name>fs.defaultFS</name>

<value>hdfs://node</value>

</property>

<!-- Base directory for all Hadoop data -->

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/data/hadoop-${user.name}</value>

</property>

<!-- Tell Hadoop where ZooKeeper runs -->

<property>

<name>ha.zookeeper.quorum</name>

<value>bigdata101:2181,bigdata102:2181,bigdata103:2181</value>

</property>

</configuration>
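The same three hostnames appear in several places: `ha.zookeeper.quorum` here, `yarn.resourcemanager.zk-address`, and the `qjournal://` URI in hdfs-site.xml. Building these strings from a single host list is one way to keep them consistent. The following is a small sketch. `host_list` is a hypothetical helper, and 2181 and 8485 are the ports this post uses.

```shell
#!/usr/bin/env bash
# Sketch: build "host:port" lists from one set of hostnames.
# host_list is a hypothetical helper. Usage: host_list SEPARATOR PORT HOST...
host_list() {
  local sep=$1 port=$2 out="" h
  shift 2
  for h in "$@"; do
    out+="${out:+$sep}${h}:${port}"   # add the separator before every entry but the first
  done
  printf '%s\n' "$out"
}

HOSTS=(bigdata101 bigdata102 bigdata103)
# Value for ha.zookeeper.quorum and yarn.resourcemanager.zk-address
host_list , 2181 "${HOSTS[@]}"
# Value for dfs.namenode.shared.edits.dir (JournalNodes on port 8485)
echo "qjournal://$(host_list ';' 8485 "${HOSTS[@]}")/node"
```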

7.3 Configure yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- Configure YARN -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

<!-- Enable ResourceManager HA -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Name of the YARN "board" (the cluster ID) -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>ns-yarn</value>

</property>

<!-- Board members: the ResourceManager IDs -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- First ResourceManager: hostname and web UI address -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>bigdata101</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>bigdata101:8088</value>

</property>

<!-- Second ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>bigdata102</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>bigdata102:8088</value>

</property>

<!-- zookeeper -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>bigdata101:2181,bigdata102:2181,bigdata103:2181</value>

</property>

</configuration>
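Every per-ResourceManager key carries one of the IDs listed in `yarn.resourcemanager.ha.rm-ids` as a suffix, for example `yarn.resourcemanager.hostname.rm1`. The following is a minimal sketch that prints those per-RM blocks from ID/host pairs, so that the IDs and suffixes match. `prop` and `rm_props` are hypothetical helpers, and port 8088 is the web UI port used above.

```shell
#!/usr/bin/env bash
# Sketch: print the per-ResourceManager <property> blocks of yarn-site.xml.
# prop and rm_props are hypothetical helpers. Usage: rm_props ID HOST [ID HOST ...]
prop() {
  printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}

rm_props() {
  while [ "$#" -ge 2 ]; do
    local id=$1 host=$2
    prop "yarn.resourcemanager.hostname.${id}" "$host"
    prop "yarn.resourcemanager.webapp.address.${id}" "${host}:8088"
    shift 2
  done
}

rm_props rm1 bigdata101 rm2 bigdata102
```

The output can be pasted inside `<configuration>`. The IDs passed in must be the same ones listed in `yarn.resourcemanager.ha.rm-ids`.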

7.4 Configure hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- Replication factor; the default is 3 -->

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- Permission checking (disabled here) -->

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<!-- dfs.namenode.name.dir (NameNode metadata) defaults to file://${hadoop.tmp.dir}/dfs/name

dfs.datanode.data.dir (DataNode blocks) defaults to file://${hadoop.tmp.dir}/dfs/data, so both follow hadoop.tmp.dir -->

<!-- Name of the nameservice (the "board") -->

<property>

<name>dfs.nameservices</name>

<value>node</value>

</property>

<!-- Members of the board: the NameNode IDs -->

<property>

<name>dfs.ha.namenodes.node</name>

<value>nn1,nn2</value>

</property>

<!-- Configure each board member: each one needs an RPC address (low level) and an HTTP address (web UI) -->

<property>

<name>dfs.namenode.rpc-address.node.nn1</name>

<value>bigdata101:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.node.nn1</name>

<value>bigdata101:9870</value>

</property>

<!-- Second member -->

<property>

<name>dfs.namenode.rpc-address.node.nn2</name>

<value>bigdata102:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.node.nn2</name>

<value>bigdata102:9870</value>

</property>

<!-- Shared edits: the JournalNode quorum that stores the edit log shared by the active and standby NameNodes -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://bigdata101:8485;bigdata102:8485;bigdata103:8485/node</value>

</property>

<!-- Class that HDFS clients use to find out which NameNode is currently active -->

<property>

<name>dfs.client.failover.proxy.provider.node</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Local directory where each JournalNode stores edits -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/usr/local/hadoop/data/journal/</value>

</property>

<!-- Enable automatic failover: if the active NameNode dies, the standby takes over -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

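<!-- Fencing over SSH (requires passwordless SSH between the NameNode hosts; the key must belong to the user that runs ZKFC) -->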

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

</configuration>
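Every HA key above is suffixed with the nameservice ID and the NameNode ID. A suffix under the wrong ID fails without any warning. For example, `dfs.namenode.http-address.jh.nn2` is silently ignored when the nameservice is `node`. The following rough consistency check is a sketch, not a real XML parser. Its `awk` text scan assumes one `<name>` per property, followed by its `<value>`, as in the files above. `get_prop` and `check_hdfs_ha` are hypothetical helpers. Once Hadoop is installed, `hdfs getconf -confKey <key>` is the authoritative way to read a resolved value.

```shell
#!/usr/bin/env bash
# Sketch: verify that every NameNode ID in dfs.ha.namenodes.<ns> has its
# rpc-address and http-address, plus a failover proxy provider for <ns>.
# get_prop and check_hdfs_ha are hypothetical helpers (plain text scan, not XML).
get_prop() {  # get_prop FILE NAME -> the <value> that follows <name>NAME</name>
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { want = 1 }
    want && /<value>/ {
      v = $0
      sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
      print v; exit
    }
  ' "$1"
}

check_hdfs_ha() {  # check_hdfs_ha HDFS_SITE_XML
  local f=$1 ns ids id key bad=0
  ns=$(get_prop "$f" dfs.nameservices)
  [ -n "$ns" ] || { echo "dfs.nameservices not set"; return 1; }
  ids=$(get_prop "$f" "dfs.ha.namenodes.${ns}")
  [ -n "$ids" ] || { echo "dfs.ha.namenodes.${ns} not set"; return 1; }
  for id in ${ids//,/ }; do                 # "nn1,nn2" -> nn1 nn2
    for key in rpc-address http-address; do
      if [ -z "$(get_prop "$f" "dfs.namenode.${key}.${ns}.${id}")" ]; then
        echo "missing: dfs.namenode.${key}.${ns}.${id}"
        bad=1
      fi
    done
  done
  if [ -z "$(get_prop "$f" "dfs.client.failover.proxy.provider.${ns}")" ]; then
    echo "missing: dfs.client.failover.proxy.provider.${ns}"
    bad=1
  fi
  [ "$bad" -eq 0 ] && echo "ok: nameservice ${ns} (${ids})"
  return "$bad"
}
```

Usage: `check_hdfs_ha /usr/local/hadoop/etc/hadoop/hdfs-site.xml`.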

7.5 Configure mapred-site.xml

There is no mapred-site.xml by default, so create one by copying mapred-site.xml.template:

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml
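The post does not show what goes into mapred-site.xml. A common minimal setting is `mapreduce.framework.name=yarn`. Its default is `local`, which runs jobs in a single local JVM instead of submitting them to YARN. The following is a sketch that writes that setting. The file contents are an assumption, not the author's file, and `write_mapred_site` is a hypothetical helper. It overwrites the copied template.

```shell
#!/usr/bin/env bash
# Sketch (assumption: the original post does not show this file):
# write a minimal mapred-site.xml so MapReduce jobs run on YARN.
# write_mapred_site is a hypothetical helper; it overwrites the target file.
write_mapred_site() {  # write_mapred_site CONF_DIR
  cat > "$1/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- Default is "local"; "yarn" submits jobs to the ResourceManager -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}
```

Usage: `write_mapred_site /usr/local/hadoop/etc/hadoop`.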

7.6 Configure slaves (the hosts that run a DataNode and a NodeManager, one per line)

bigdata101

bigdata102

bigdata103

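Note:

After completing the steps above, copy the hadoop directory to every other node, since each node needs it. Run the following from /usr/local: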

sudo scp -r hadoop bigdata102:`pwd`

sudo scp -r hadoop bigdata103:`pwd`

Then, on each node, make the current user, hadoop, the owner of the directory:

sudo chown -R hadoop:hadoop ./hadoop
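The copy-and-chown step can be looped over the other nodes from bigdata101. The following sketch assumes passwordless SSH (root's key for `sudo scp`, as in the commands above) and passwordless sudo on each target. `run` and `distribute_hadoop` are hypothetical helpers, and with `DRY_RUN=1` they only print the commands. The /etc/profile lines from step 5 probably need to be added on each node as well.

```shell
#!/usr/bin/env bash
# Sketch: copy /usr/local/hadoop to other nodes and fix ownership there.
# Assumes passwordless SSH and passwordless sudo on every target host.
# run and distribute_hadoop are hypothetical helpers; DRY_RUN=1 only prints.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

distribute_hadoop() {  # distribute_hadoop HOST...
  local h
  for h in "$@"; do
    run sudo scp -r /usr/local/hadoop "$h":/usr/local/
    run ssh "$h" sudo chown -R hadoop:hadoop /usr/local/hadoop
  done
}
```

Usage: first check the plan with `DRY_RUN=1 distribute_hadoop bigdata102 bigdata103`, then run it without `DRY_RUN` to execute.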

8. Start ZooKeeper (on all three nodes, from the ZooKeeper installation directory)

bin/zkServer.sh start

9. Start the JournalNodes

# Start on bigdata101, bigdata102 and bigdata103

sbin/hadoop-daemon.sh start journalnode
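The JournalNode hosts are already listed in `dfs.namenode.shared.edits.dir`. Instead of logging in to each host, you can derive the hosts from that URI. The following sketch does this. `jn_hosts` is a hypothetical helper. The loop only prints the `ssh` commands. It assumes passwordless SSH and Hadoop at /usr/local/hadoop on every host.

```shell
#!/usr/bin/env bash
# Sketch: list JournalNode hostnames from a qjournal:// URI and print the
# command that starts a JournalNode on each (jn_hosts is hypothetical).
jn_hosts() {  # jn_hosts QJOURNAL_URI -> one hostname per line
  local uri=${1#qjournal://}   # drop the scheme
  uri=${uri%%/*}               # drop the journal ID ("/node")
  local hp
  for hp in ${uri//;/ }; do    # entries are separated by ';'
    echo "${hp%%:*}"           # strip ":8485"
  done
}

# Prints the commands only; remove the leading "echo" to execute them.
for h in $(jn_hosts "qjournal://bigdata101:8485;bigdata102:8485;bigdata103:8485/node"); do
  echo ssh "$h" /usr/local/hadoop/sbin/hadoop-daemon.sh start journalnode
done
```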

10. Format the NameNode

# Format on bigdata101 only

bin/hdfs namenode -format

11. Copy the freshly formatted metadata to the other NameNode

# Be sure to run this from /usr/local/hadoop/data

scp -r hadoop-hadoop/ bigdata102:`pwd`

12. Start the NameNode (on bigdata101)

sbin/hadoop-daemon.sh start namenode

13. On the NameNode that was not formatted, run:

# Run on bigdata102

bin/hdfs namenode -bootstrapStandby

14. Start the second NameNode (on bigdata102)

sbin/hadoop-daemon.sh start namenode

15. Initialize the ZKFC state in ZooKeeper on one of the nodes (ZooKeeper must be running)

bin/hdfs zkfc -formatZK
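The order of steps 8 to 15 matters. The JournalNodes must be up before the format, and the ZKFC znode must exist before `start-dfs.sh` launches the failover controllers. The following sketch prints the one-time bootstrap as "host: command" lines to review before running them by hand. `NN1`, `NN2`, `ALL` and `bootstrap_plan` are hypothetical names that mirror this post's hosts, and `<zookeeper>` is a placeholder because the post does not give ZooKeeper's path. The manual scp of step 11 is left out. According to the HDFS HA documentation, `-bootstrapStandby` copies the formatted metadata to the unformatted NameNode itself.

```shell
#!/usr/bin/env bash
# Sketch: print the one-time HA bootstrap order as "host: command" lines.
# NN1, NN2, ALL and bootstrap_plan are hypothetical; <zookeeper> is a
# placeholder for the ZooKeeper install directory (not given in the post).
NN1=bigdata101
NN2=bigdata102
ALL=(bigdata101 bigdata102 bigdata103)

bootstrap_plan() {
  local h
  # ZooKeeper, then JournalNodes, on every host
  for h in "${ALL[@]}"; do echo "$h: <zookeeper>/bin/zkServer.sh start"; done
  for h in "${ALL[@]}"; do echo "$h: sbin/hadoop-daemon.sh start journalnode"; done
  # Format once, on the first NameNode only, then start it
  echo "$NN1: bin/hdfs namenode -format"
  echo "$NN1: sbin/hadoop-daemon.sh start namenode"
  # Pull the metadata onto the second NameNode, then start it
  echo "$NN2: bin/hdfs namenode -bootstrapStandby"
  echo "$NN2: sbin/hadoop-daemon.sh start namenode"
  # Create the HA znode in ZooKeeper (any NameNode host works)
  echo "$NN1: bin/hdfs zkfc -formatZK"
}

bootstrap_plan
```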

16. Restart HDFS and YARN

# Stop HDFS and YARN

sbin/stop-dfs.sh

sbin/stop-yarn.sh

# Start HDFS and YARN

sbin/start-dfs.sh

sbin/start-yarn.sh

# Or start everything

sbin/start-all.sh

# Stop everything

sbin/stop-all.sh
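On Hadoop 2.x, which includes the 2.7.7 tarball used here, `start-yarn.sh` starts a ResourceManager only on the host where it is run. The second ResourceManager (rm2 on bigdata102) therefore has to be started separately, or `jps` will not show it there. The following is a minimal sketch. It assumes passwordless SSH and the /usr/local/hadoop path on bigdata102. `run` and `start_standby_rm` are hypothetical helpers, and `DRY_RUN=1` only prints the command.

```shell
#!/usr/bin/env bash
# Sketch: start the second ResourceManager on Hadoop 2.x, where
# start-yarn.sh only starts the local one. Assumes passwordless SSH.
# run and start_standby_rm are hypothetical helpers; DRY_RUN=1 only prints.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

start_standby_rm() {  # start_standby_rm HOST
  run ssh "$1" /usr/local/hadoop/sbin/yarn-daemon.sh start resourcemanager
}
```

Usage: run `start_standby_rm bigdata102` on bigdata101 after `sbin/start-yarn.sh`.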

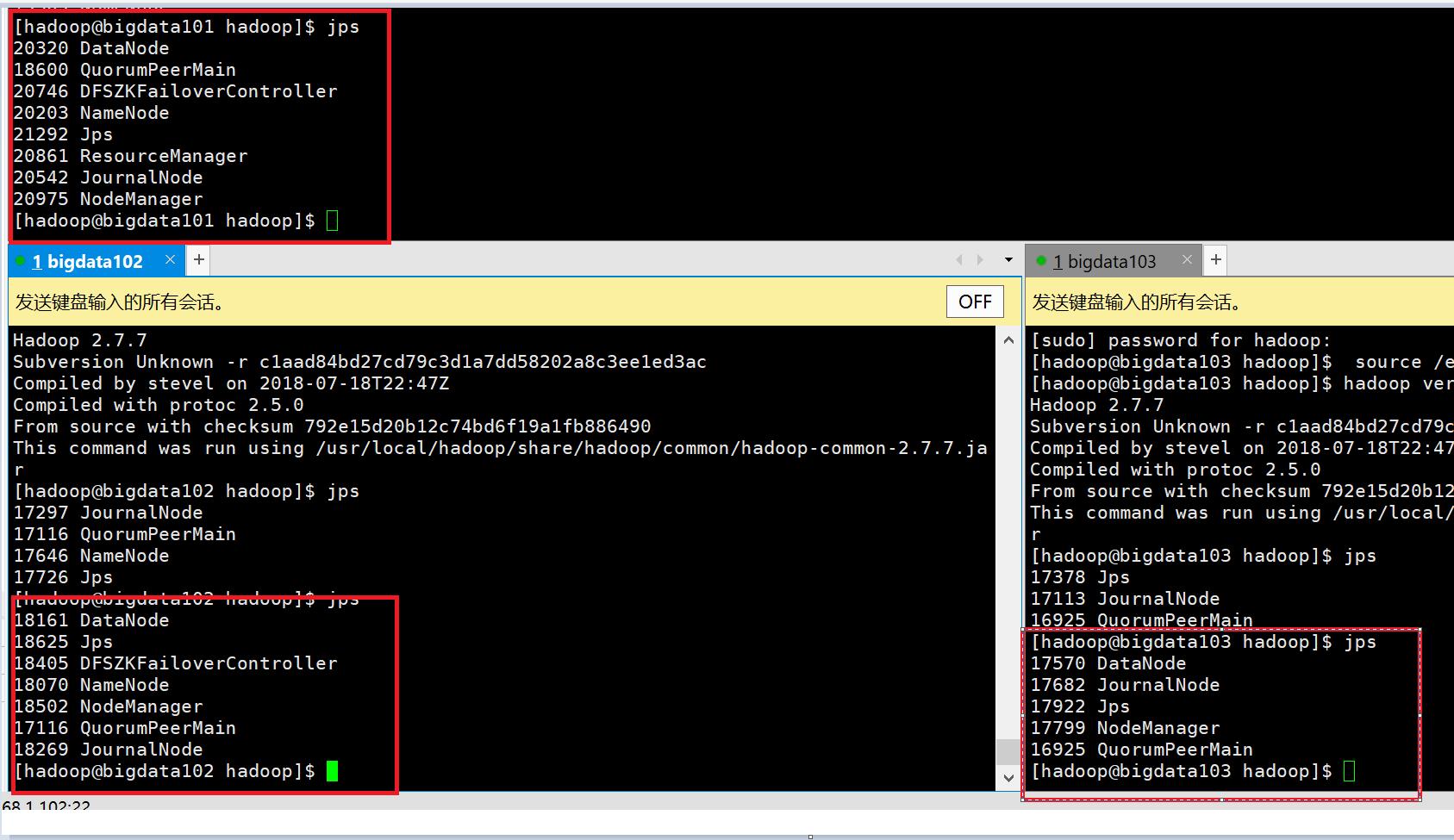

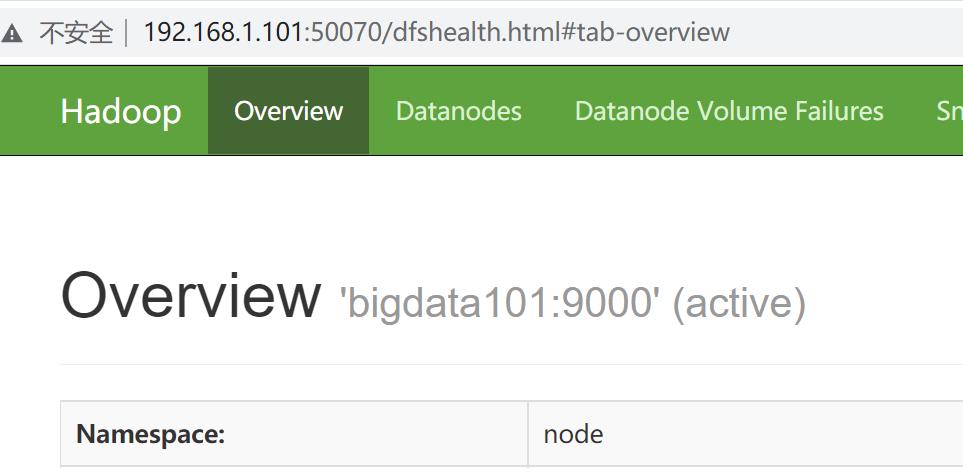

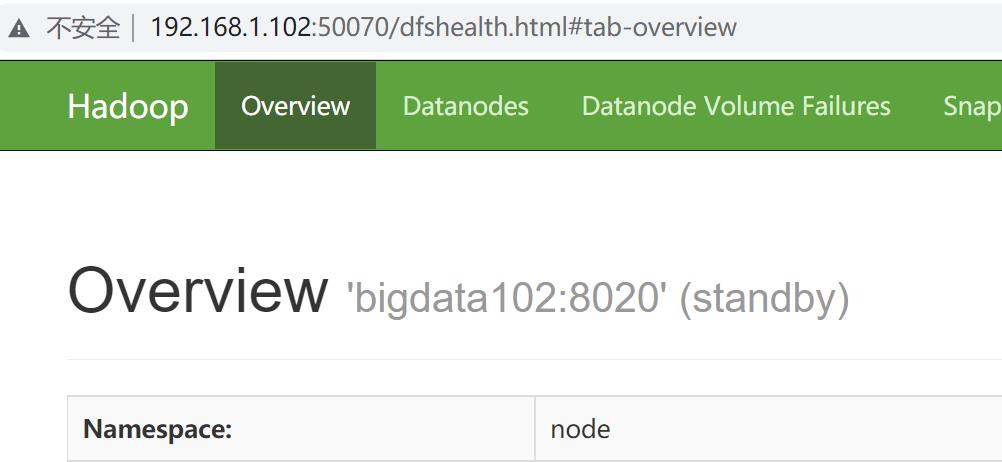

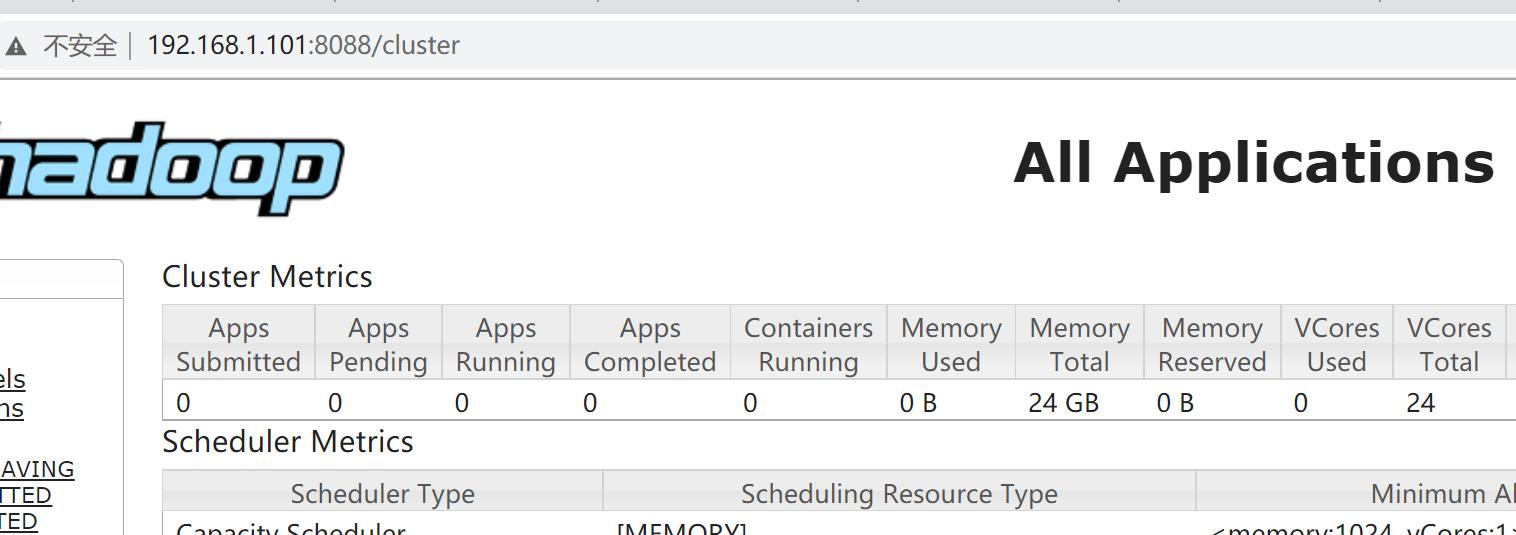

17. Check whether the daemons started successfully

Method 1:

jps
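`jps` prints one `<pid> <MainClass>` line per Java process, so checking a host amounts to comparing that list with the daemons expected there. With this post's configuration, bigdata101 should show NameNode, DFSZKFailoverController, ResourceManager, JournalNode, DataNode, NodeManager and QuorumPeerMain (ZooKeeper). bigdata103 should show only JournalNode, DataNode, NodeManager and QuorumPeerMain. The following is a sketch of that comparison. `missing_daemons` is a hypothetical helper. To see which NameNode or ResourceManager is active, `hdfs haadmin -getServiceState nn1` and `yarn rmadmin -getServiceState rm1` report `active` or `standby`.

```shell
#!/usr/bin/env bash
# Sketch: report expected daemons that are absent from jps output.
# missing_daemons is a hypothetical helper; jps prints "<pid> <MainClass>".
missing_daemons() {  # missing_daemons "JPS_OUTPUT" NAME...
  local out=$1 name rc=0
  shift
  for name in "$@"; do
    if ! printf '%s\n' "$out" | awk '{print $2}' | grep -qxF "$name"; then
      echo "not running: $name"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on bigdata103: `missing_daemons "$(jps)" JournalNode DataNode NodeManager QuorumPeerMain`.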

Method 2:
