爬虫学习笔记—— Scrapy框架

Posted 别呀

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了爬虫学习笔记—— Scrapy框架相关的知识,希望对你有一定的参考价值。

一、下载中间件

-

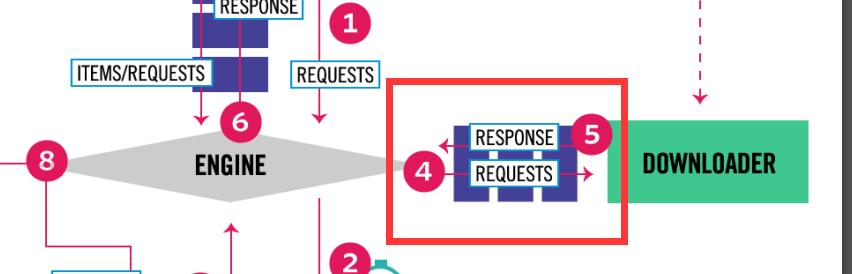

下载中间件是一个用来hooks进Scrapy的request/response处理过程的框架。

-

它是一个轻量级的底层系统,用来全局修改scrapy的request和response。

-

scrapy框架中的下载中间件,是实现了特殊方法的类。

1.1、内置中间件

-

scrapy系统自带的中间件被放在DOWNLOADER_MIDDLEWARES_BASE设置中

可以通过命令scrapy setttings --get=DOWNLOADER_MIDDLEWARES_BASE查看 -

用户自定义的中间件需要在DOWNLOADER_MIDDLEWARES中进行设置

该设置是一个dict,键是中间件类路径,期值是中间件的顺序,是一个正整数0-1000,越小越靠近引擎。

例: “scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware”: 100,数字越小,优先级越高 即越靠近引擎 -

各中间件含义(通过命令

scrapy setttings --get=DOWNLOADER_MIDDLEWARES_BASE查看)

"scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware": 100, #机器人协议中间件

"scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware": 300, #http身份验证中间件

"scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware": 350, #下载延迟中间件

"scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware": 400, #默认请求头中间件

"scrapy.downloadermiddlewares.useragent.UserAgentMiddleware": 500, #用户代理中间件

"scrapy.downloadermiddlewares.retry.RetryMiddleware": 550, #重新尝试中间件

"scrapy.downloadermiddlewares.ajaxcrawl.AjaxCrawlMiddleware": 560, #基于元片段html标签找到“ AJAX可抓取”页面变体的中间件

"scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware": 580, #始终使用字符串作为原因

"scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware": 590, ##该中间件允许从网站发送/接收压缩(gzip,deflate)流量。

"scrapy.downloadermiddlewares.redirect.RedirectMiddleware": 600, #该中间件根据响应状态处理请求的重定向。

"scrapy.downloadermiddlewares.cookies.CookiesMiddleware": 700,#cookie中间件

"scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware": 750, #IP代理中间件

"scrapy.downloadermiddlewares.stats.DownloaderStats": 850,#用于存储通过它的所有请求,响应和异常的统计信息

"scrapy.downloadermiddlewares.httpcache.HttpCacheMiddleware": 900 #缓存中间件

1.2、下载中间件API

-

process_request(request,spider)处理请求,对于通过中间件的每个请求调用此方法 -

process_response(request, response, spider)处理响应,对于通过中间件的每个响应,调用此方法 -

process_exception(request, exception, spider)处理请求时发生了异常调用 -

from_crawler(cls,crawler )创建爬虫

注意:要注意每个方法的返回值,返回值内容的不同,决定着请求或响应进一步在哪里执行

class BaiduDownloaderMiddleware(object):

# Not all methods need to be defined. If a method is not defined,

# scrapy acts as if the downloader middleware does not modify the

# passed objects.

@classmethod

def from_crawler(cls, crawler):

# This method is used by Scrapy to create your spiders.

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

return s

def process_request(self, request, spider):

# Called for each request that goes through the downloader

# middleware.

# Must either: 以下必选其一

# - return None: continue processing this request #返回None request 被继续交个下一个中间件处理

# - or return a Response object #返回response对象 不会交给下一个precess_request 而是直接交给下载器

# - or return a Request object #返回request对象 直接交给引擎处理

# - or raise IgnoreRequest: process_exception() methods of #抛出异常 process_exception处理

# installed downloader middleware will be called

return None

def process_response(self, request, response, spider):

# Called with the response returned from the downloader.

# Must either;

# - return a Response object #返回response对象 继续交给下一中间件处理

# - return a Request object #返回request对象 直接交给引擎处理

# - or raise IgnoreRequest #抛出异常 process_exception处理

return response

def process_exception(self, request, exception, spider):

# Called when a download handler or a process_request()

# (from other downloader middleware) raises an exception.

#处理异常

# Must either:

# - return None: continue processing this exception #继续调用其他中间件的process_exception

# - return a Response object: stops process_exception() chain #返回response 停止调用其他中间件的process_exception

# - return a Request object: stops process_exception() chain #返回request 直接交给引擎处理

pass

def spider_opened(self, spider):

spider.logger.info('Spider opened: %s' % spider.name)

二、自定义中间件

2.1、用户代理池

2.1.1、settings文件添加列表

#这里仅供参考,具体自己添加

user_agent_list = [

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "

"(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",

"Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "

"(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "

"(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "

"(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",

"Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "

"(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",

"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "

"(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",

"Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "

"(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",

"Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "

"(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",

"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "

"(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",

"Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "

"(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"

]

2.1.2、middlewares文件设置用户代理

from .settings import user_agent_list

import random

class User_AgentDownloaderMiddleware(object): #类名自定义

def process_request(self, request, spider):

request.headers["User_Agent"]=random.choice(user_agent_list) #随机

return None #被继续交个下一个中间件处理

2.2、IP代理池

2.2.1、settings中ip 代理池

#此处是示例,以上的代理基本无法使用的,同时不建议大家去找免费的代理,会不安全

IPPOOL=[

{"ipaddr":"61.129.70.131:8080"},

{"ipaddr":"61.152.81.193:9100"},

{"ipaddr":"120.204.85.29:3128"},

{"ipaddr":"219.228.126.86:8123"},

{"ipaddr":"61.152.81.193:9100"},

{"ipaddr":"218.82.33.225:53853"},

{"ipaddr":"223.167.190.17:42789"}

]

2.2.2、middlewares文件设置用户代理

from .settings import IPPOOL

import random

class MyproxyDownloaderMiddleware(object): #类名自定义

#目的 设置多个代理

#通过meta 设置代理

def process_request(self, request, spider):

proxyip=random.choice(IPPOOL)

request.meta["proxy"]="http://"+proxyip["ipaddr"]#http://61.129.70.131:8080

return None #被继续交个下一个中间件处理

2.3、小案例:爬取豆瓣电影信息

具体代码实现见:爬虫学习笔记(六)——Scrapy框架(一),这里仅添加实现用户代理和ip代理代码

middle文件

自己重新构造类:

class UserAgentDownloaderMiddleware:

def process_request(self, request, spider):

request.headers['User_Agent'] = random.choice(UserAgent_list)

return None

class ProxyIpDownloaderMiddleware:

def process_request(self, request, spider):

proxyip=random.choice(IP_POOL)

request.meta['proxy']='http://'+proxyip['ipaddr']

return None

settings文件

①添加上面的 用户代理池和IP代理池

②设置下载中间件

DOWNLOADER_MIDDLEWARES = {

# 'db250.middlewares.Db250DownloaderMiddleware': 543,

'db250.middlewares.ProxyIpDownloaderMiddleware': 523,

'db250.middlewares.UserAgentDownloaderMiddleware': 524,

}

三、Scrapy.settings文件

BOT_NAME = 'baidu' #baidu: 项目名字

SPIDER_MODULES = ['baidu.spiders'] #爬虫模块

NEWSPIDER_MODULE = 'baidu.spiders' #使用genspider 命令创建的爬虫模块

3.1、基本配置

1. 项目名称,默认的USER_AGENT由它来构成,也作为日志记录的日志名

#BOT_NAME = 'db250'

2. 爬虫应用路径

#SPIDER_MODULES = ['db250.spiders']

#NEWSPIDER_MODULE = 'db250.spiders'

3. 客户端User-Agent请求头

#USER_AGENT = 'db250 (+http://www.yourdomain.com)'

4. 是否遵循爬虫协议 一般我们是不会遵循的

#Obey robots.txt rules

ROBOTSTXT_OBEY = False

5. 是否支持cookie,cookiejar进行操作cookie,默认开启

#Disable cookies (enabled by default)

#COOKIES_ENABLED = False

6. Telnet用于查看当前爬虫的信息,操作爬虫等...使用telnet ip port ,然后通过命令操作

#TELNETCONSOLE_ENABLED = False

#TELNETCONSOLE_HOST = '127.0.0.1'

#TELNETCONSOLE_PORT = [6023,]

7. Scrapy发送HTTP请求默认使用的请求头

DEFAULT_REQUEST_HEADERS = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en',

}

8. 请求失败后(retry)

scrapy自带scrapy.downloadermiddlewares.retry.RetryMiddleware中间件,如果想通过重试次数,可以进行如下操作:

参数配置:

#RETRY_ENABLED: 是否开启retry

#RETRY_TIMES: 重试次数

#RETRY_HTTP_CODECS: 遇到什么http code时需要重试,默认是500,502,503,504,408,其他的,网络连接超时等问题也会自动retry的

3.2、并发与延迟

1. 下载器总共最大处理的并发请求数,默认值16

#CONCURRENT_REQUESTS = 32

2. 每个域名能够被执行的最大并发请求数目,默认值8

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

3. 能够被单个IP处理的并发请求数,默认值0,代表无限制,需要注意两点

#I、如果不为零,那CONCURRENT_REQUESTS_PER_DOMAIN将被忽略,即并发数的限制是按照每个IP来计算,而不是每个域名

#II、该设置也影响DOWNLOAD_DELAY,如果该值不为零,那么DOWNLOAD_DELAY下载延迟是限制每个IP而不是每个域 #CONCURRENT_REQUESTS_PER_IP = 16

4. 如果没有开启智能限速,这个值就代表一个规定死的值,代表对同一网址延迟请求的秒数

#DOWNLOAD_DELAY = 3

3.3、智能限速/自动节流

1.开启True,默认False

#AUTOTHROTTLE_ENABLED = True

2.起始的延迟

#AUTOTHROTTLE_START_DELAY = 5

3.最小延迟

#DOWNLOAD_DELAY = 3

4.最大延迟

#AUTOTHROTTLE_MAX_DELAY = 10

5.每秒并发请求数的平均值,不能高于 CONCURRENT_REQUESTS_PER_DOMAIN或CONCURRENT_REQUESTS_PER_IP,调高了则吞吐量增大强奸目标站点,调低了则对目标站点更加”礼貌“

每个特定的时间点,scrapy并发请求的数目都可能高于或低于该值,这是爬虫视图达到的建议值而不是硬限制

AUTOTHROTTLE_TARGET_CONCURRENCY = 16.0

6.调试

#AUTOTHROTTLE_DEBUG = True

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

3.4、爬取深度与爬取方式

1. 爬虫允许的最大深度,可以通过meta查看当前深度;0表示无深度

#DEPTH_LIMIT = 3

2. 爬取时,0表示深度优先Lifo(默认);1表示广度优先FiFo

**后进先出,深度优先**

#DEPTH_PRIORITY = 0

#SCHEDULER_DISK_QUEUE = 'scrapy.squeue.PickleLifoDiskQueue'

#SCHEDULER_MEMORY_QUEUE = 'scrapy.squeue.LifoMemoryQueue'

**先进先出,广度优先**

#DEPTH_PRIORITY = 1

#SCHEDULER_DISK_QUEUE = 'scrapy.squeue.PickleFifoDiskQueue'

#SCHEDULER_MEMORY_QUEUE = 'scrapy.squeue.FifoMemoryQueue'

3. 调度器队列

#SCHEDULER = 'scrapy.core.scheduler.Scheduler'

#from scrapy.core.scheduler import Scheduler

4. 访问URL去重

#DUPEFILTER_CLASS = 'step8_king.duplication.RepeatUrl'

3.5、缓存

1.是否启用缓存策略

#HTTPCACHE_ENABLED = True

2.缓存策略:所有请求均缓存,下次在请求直接访问原来的缓存即可

#HTTPCACHE_POLICY = "scrapy.extensions.httpcache.DummyPolicy"

3.缓存策略:

根据Http响应头:Cache-Control、Last-Modified 等进行缓存的策略

#HTTPCACHE_POLICY = "scrapy.extensions.httpcache.RFC2616Policy"

4.缓存超时时间

#HTTPCACHE_EXPIRATION_SECS = 0

5.缓存保存路径

#HTTPCACHE_DIR = 'httpcache'

6.缓存忽略的Http状态码

#HTTPCACHE_IGNORE_HTTP_CODES = []

7.缓存存储的插件

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

详见官方文档:

下载中间件:https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

配置文件:https://docs.scrapy.org/en/latest/topics/settings.html

爬虫中间件:https://docs.scrapy.org/en/latest/topics/spider-middleware.html

以上是关于爬虫学习笔记—— Scrapy框架的主要内容,如果未能解决你的问题,请参考以下文章