Fixing EnforceNotMet: grad_op_maker_ should not be null (Operator GradOpMaker has not been registered)

Posted by 叶庭云



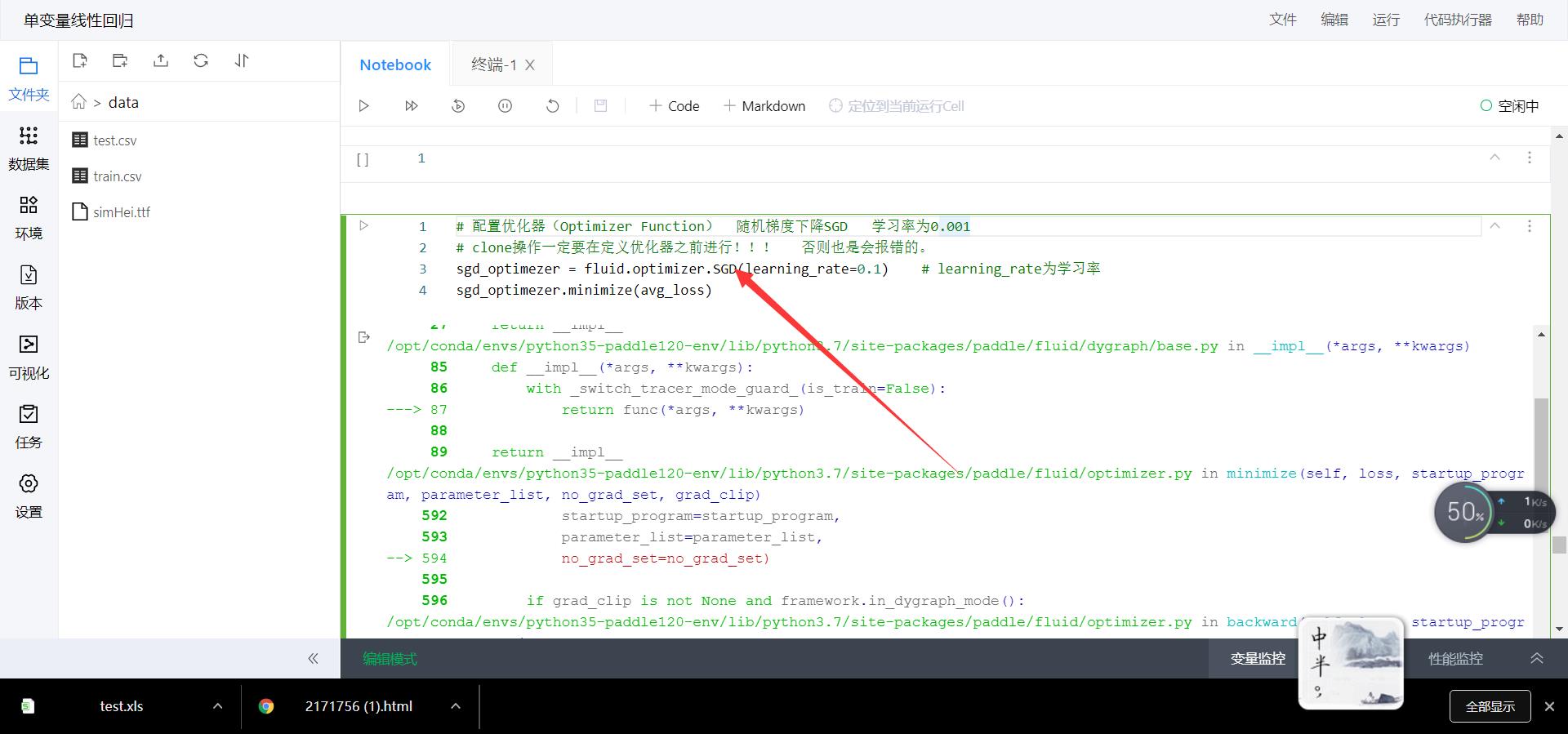

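Problem description: while training a self-built model with Baidu PaddlePaddle (飞桨), the program failed when execution reached

```python
optimizer = fluid.optimizer.Adam(learning_rate=0.001)
optimizer.minimize(avg_loss)
```

with the following error: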

```
---------------------------------------------------------------------------
EnforceNotMet                             Traceback (most recent call last)
<ipython-input-114-71baa0f01aff> in <module>
      1 # Configure the optimizer (Optimizer Function): stochastic gradient descent (SGD), learning rate 0.001
      2 sgd_optimezer = fluid.optimizer.SGD(learning_rate=0.1) # learning_rate is the learning rate
----> 3 sgd_optimezer.minimize(avg_loss)

</opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/decorator.py:decorator-gen-145> in minimize(self, loss, startup_program, parameter_list, no_grad_set, grad_clip)

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/wrapped_decorator.py in __impl__(func, *args, **kwargs)
     23 def __impl__(func, *args, **kwargs):
     24 wrapped_func = decorator_func(func)
---> 25 return wrapped_func(*args, **kwargs)
     26
     27 return __impl__

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/base.py in __impl__(*args, **kwargs)
     85 def __impl__(*args, **kwargs):
     86 with _switch_tracer_mode_guard_(is_train=False):
---> 87 return func(*args, **kwargs)
     88
     89 return __impl__

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/optimizer.py in minimize(self, loss, startup_program, parameter_list, no_grad_set, grad_clip)
    592 startup_program=startup_program,
    593 parameter_list=parameter_list,
--> 594 no_grad_set=no_grad_set)
    595
    596 if grad_clip is not None and framework.in_dygraph_mode():

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/optimizer.py in backward(self, loss, startup_program, parameter_list, no_grad_set, callbacks)
    491 with program_guard(program, startup_program):
    492 params_grads = append_backward(loss, parameter_list,
--> 493 no_grad_set, callbacks)
    494 # Note: since we can't use all_reduce_op now,
    495 # dgc_op should be the last op of one grad.

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/backward.py in append_backward(loss, parameter_list, no_grad_set, callbacks)
    569 grad_to_var,
    570 callbacks,
--> 571 input_grad_names_set=input_grad_names_set)
    572
    573 # Because calc_gradient may be called multiple times,

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/backward.py in _append_backward_ops_(block, ops, target_block, no_grad_dict, grad_to_var, callbacks, input_grad_names_set)
    308 # Getting op's corresponding grad_op
    309 grad_op_desc, op_grad_to_var = core.get_grad_op_desc(
--> 310 op.desc, cpt.to_text(no_grad_dict[block.idx]), grad_sub_block_list)
    311
    312 # If input_grad_names_set is not None, extend grad_op_descs only when

EnforceNotMet: grad_op_maker_ should not be null
Operator GradOpMaker has not been registered. at [/paddle/paddle/fluid/framework/op_info.h:69]
PaddlePaddle Call Stacks:
0 0x7efdea8b2c28p void paddle::platform::EnforceNotMet::Init<std::string>(std::string, char const*, int) + 360
1 0x7efdea8b2f77p paddle::platform::EnforceNotMet::EnforceNotMet(std::string const&, char const*, int) + 87
2 0x7efdea8b3dacp paddle::framework::OpInfo::GradOpMaker() const + 108
3 0x7efdea8ac83ep
4 0x7efdea8e1af6p
... (frames 5-99 omitted: CPython interpreter frames such as _PyEval_EvalFrameDefault and _PyFunction_FastCallKeywords)
```
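What the message means: following the traceback, `minimize()` calls `backward()`, then `append_backward()`, then `_append_backward_ops_()`, which asks `core.get_grad_op_desc()` for the gradient operator of each forward op it visits. The C++ frame `paddle::framework::OpInfo::GradOpMaker()` is where the check `grad_op_maker_ should not be null` fails, i.e. an operator on the backward path has no gradient-op maker registered. The pure-Python sketch below is only a toy model of that lookup, not Paddle's real code; the registry, the dict-based op format, and the op type `op_without_grad` are hypothetical.

```python
# Toy model (hypothetical, NOT Paddle's implementation) of the lookup that fails:
# backward construction asks a registry for each op's gradient-op maker.


class EnforceNotMet(Exception):
    """Stand-in for Paddle's EnforceNotMet exception."""


# Hypothetical registry: op type -> function that builds the gradient op.
GRAD_OP_MAKERS = {
    "mul": lambda op: {"type": "mul_grad", "inputs": op["outputs"]},
    "mean": lambda op: {"type": "mean_grad", "inputs": op["outputs"]},
}


def get_grad_op_desc(op):
    """Return the gradient op for `op`; raise if no maker is registered."""
    maker = GRAD_OP_MAKERS.get(op["type"])
    if maker is None:
        # Same wording as the error in the traceback.
        raise EnforceNotMet(
            "grad_op_maker_ should not be null\n"
            "Operator GradOpMaker has not been registered."
        )
    return maker(op)


def append_backward(forward_ops):
    """Visit forward ops from last to first and emit one gradient op each."""
    return [get_grad_op_desc(op) for op in reversed(forward_ops)]


if __name__ == "__main__":
    ops = [{"type": "mul", "outputs": ["fc_out"]},
           {"type": "mean", "outputs": ["avg_loss"]}]
    print([g["type"] for g in append_backward(ops)])  # ['mean_grad', 'mul_grad']

    # An op type with no registered maker reproduces the failure mode.
    try:
        append_backward(ops + [{"type": "op_without_grad", "outputs": ["y"]}])
    except EnforceNotMet as err:
        print("EnforceNotMet:", err)
```

The real framework registers gradient makers in C++ at op-registration time; the dictionary here only stands in for that table.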

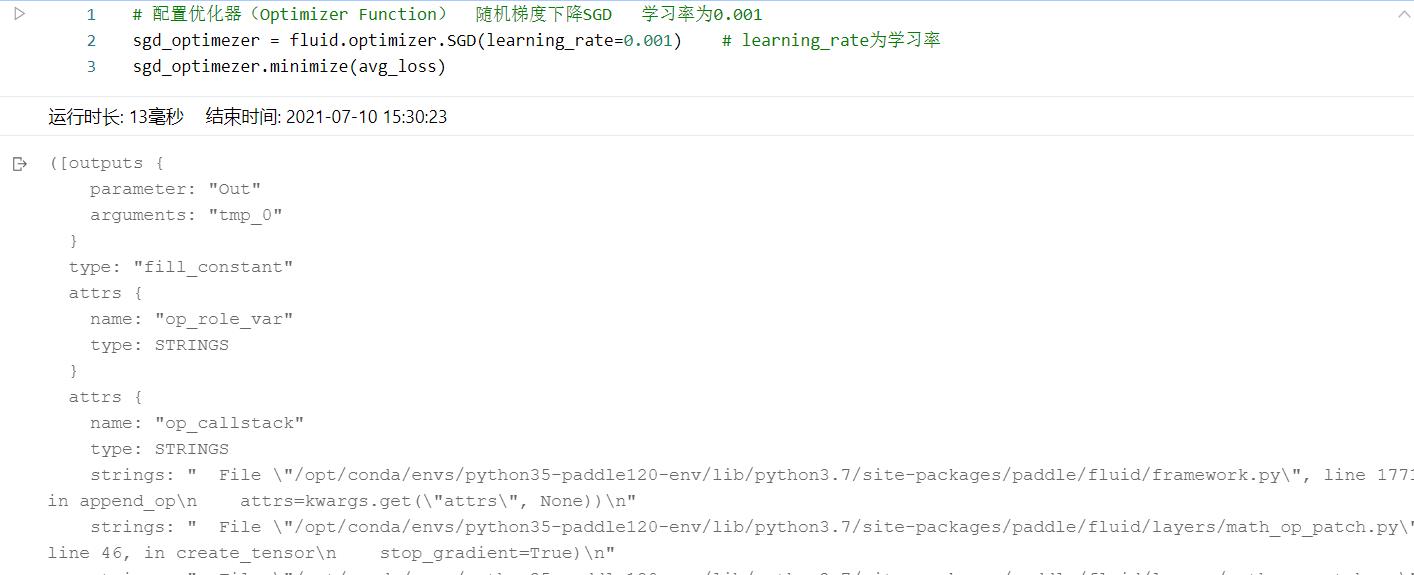

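```python
import paddle.fluid as fluid

# Clone the test program BEFORE defining the optimizer!!! Otherwise an error is raised as well.
# (test_program is an illustrative name; avg_loss is the mean loss built by your network above.)
test_program = fluid.default_main_program().clone(for_test=True)

# Configure the optimizer (Optimizer Function): stochastic gradient descent (SGD), learning rate 0.1
sgd_optimizer = fluid.optimizer.SGD(learning_rate=0.1)  # learning_rate is the learning rate
sgd_optimizer.minimize(avg_loss)
```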

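The clone operation must be performed before the optimizer is defined!!! Otherwise an error is raised as well. The fix is therefore: first call `clone(for_test=True)`, and only then configure the optimizer and call `minimize()`.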
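Why the order matters: PaddlePaddle's documentation for `Program.clone` recommends calling `clone(for_test=True)` before `Optimizer.minimize()`, because the clone is a copy of the program as it stands at that moment; cloning afterwards also copies the backward and optimizer ops that `minimize()` appended. The toy sketch below models only this snapshot behavior. `ToyProgram`, `build_forward` and the toy `minimize` are hypothetical stand-ins rather than Paddle APIs, and the sketch does not reproduce the EnforceNotMet itself.

```python
# Toy model (hypothetical, NOT Paddle's API): a "program" is a list of op types,
# and clone() copies whatever ops exist at the moment it is called.


class ToyProgram:
    def __init__(self):
        self.ops = []

    def clone(self, for_test=False):
        # Real Program.clone(for_test=True) also switches ops such as dropout and
        # batch_norm to inference mode; only the snapshot is modeled here.
        twin = ToyProgram()
        twin.ops = list(self.ops)
        return twin


def build_forward(program):
    """Hypothetical forward network: fc -> softmax -> cross entropy -> mean."""
    program.ops += ["mul", "softmax", "cross_entropy", "mean"]


def minimize(program):
    """Toy minimize(): append one grad op per forward op, then an update op."""
    forward = list(program.ops)
    program.ops += [t + "_grad" for t in reversed(forward)]
    program.ops.append("sgd")


if __name__ == "__main__":
    # Recommended order: clone first, then minimize.
    main = ToyProgram()
    build_forward(main)
    test_program = main.clone(for_test=True)
    minimize(main)
    print(test_program.ops)  # ['mul', 'softmax', 'cross_entropy', 'mean']

    # Reversed order: the "test" program also carries grad and optimizer ops.
    main2 = ToyProgram()
    build_forward(main2)
    minimize(main2)
    print("sgd" in main2.clone(for_test=True).ops)  # True
```

Keeping the evaluation program free of gradient and update ops is the point of cloning early; the training program is the one that `minimize()` should extend.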
