Binary Deployment of a Single-Master k8s Cluster (Detailed Steps, Illustrated)

Posted 王大雏


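Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers binary deployment of a single-master k8s cluster (detailed steps, illustrated); we hope you find it useful.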


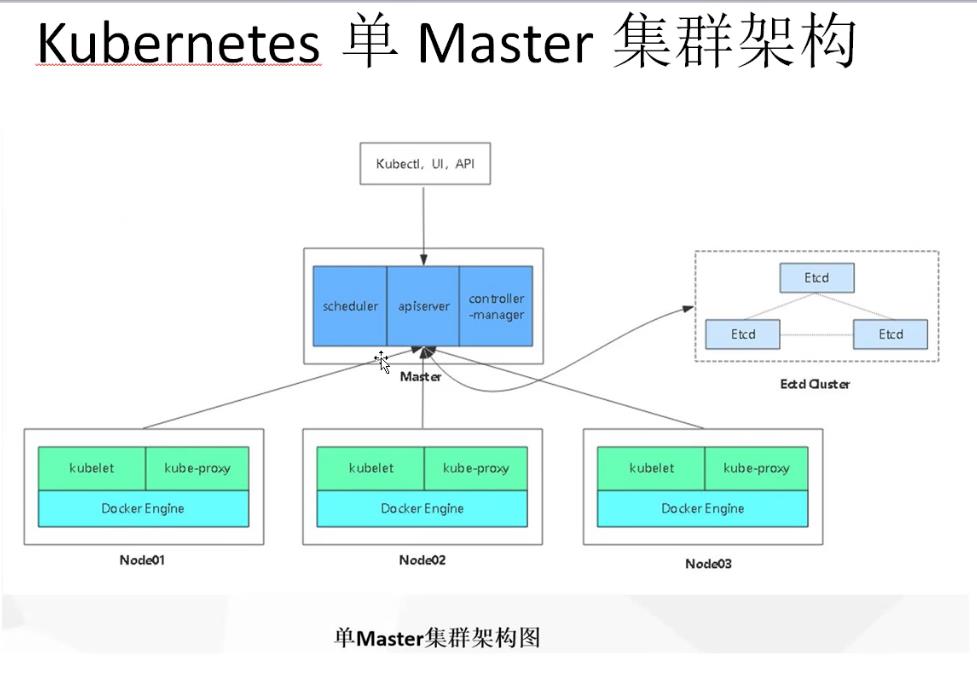

I. Building the k8s cluster

Environment preparation

k8s cluster master01: 192.168.80.71 (kube-apiserver, kube-controller-manager, kube-scheduler, etcd)

k8s cluster master02: 192.168.80.74

k8s cluster node01: 192.168.80.72 (kubelet, kube-proxy, docker, flannel)

k8s cluster node02: 192.168.80.73

etcd cluster member 1: 192.168.80.71 (etcd)

etcd cluster member 2: 192.168.80.72

etcd cluster member 3: 192.168.80.73

Load balancer nginx+keepalived 01 (master): 192.168.80.14

Load balancer nginx+keepalived 02 (backup): 192.168.80.15

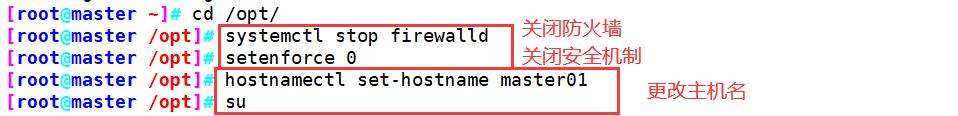

systemctl stop firewalld

systemctl disable firewalld

setenforce 0
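`setenforce 0` only switches SELinux to permissive mode until the next reboot. A minimal sketch for making that setting persistent, assuming the standard `/etc/selinux/config` layout with a `SELINUX=` line; the function name `persist_selinux_permissive` is hypothetical, and the file path is a parameter so the edit can be tried on a copy first:

```shell
#!/bin/sh
# Hypothetical helper: keep the effect of "setenforce 0" after a reboot by
# setting SELINUX=permissive in an SELinux config file.
# $1 = config file (defaults to /etc/selinux/config).
persist_selinux_permissive() {
    conf=${1:-/etc/selinux/config}
    # Only the SELINUX= line is rewritten; SELINUXTYPE=... does not match ^SELINUX=
    sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$conf"
}
```

Run as root on each host, `persist_selinux_permissive /etc/selinux/config` changes the mode used at the next boot, while `setenforce 0` covers the current session.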

master01

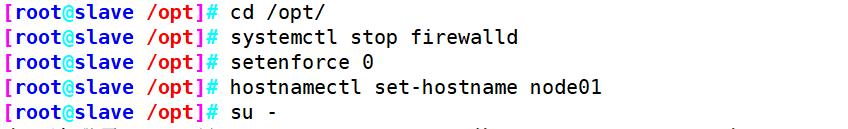

node01

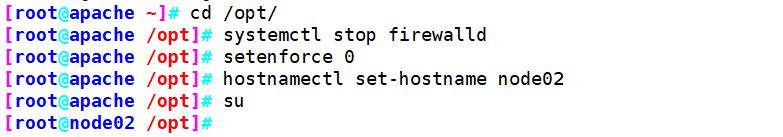

node02

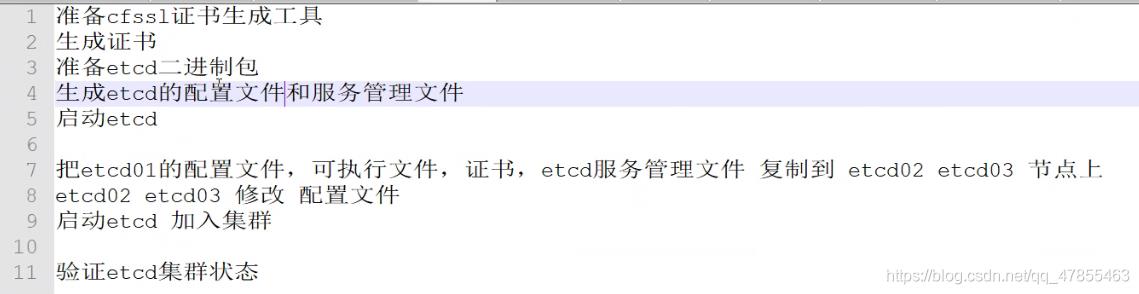

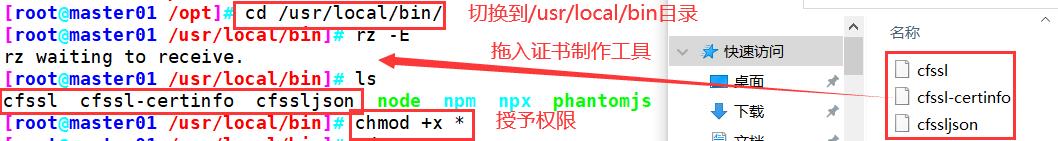

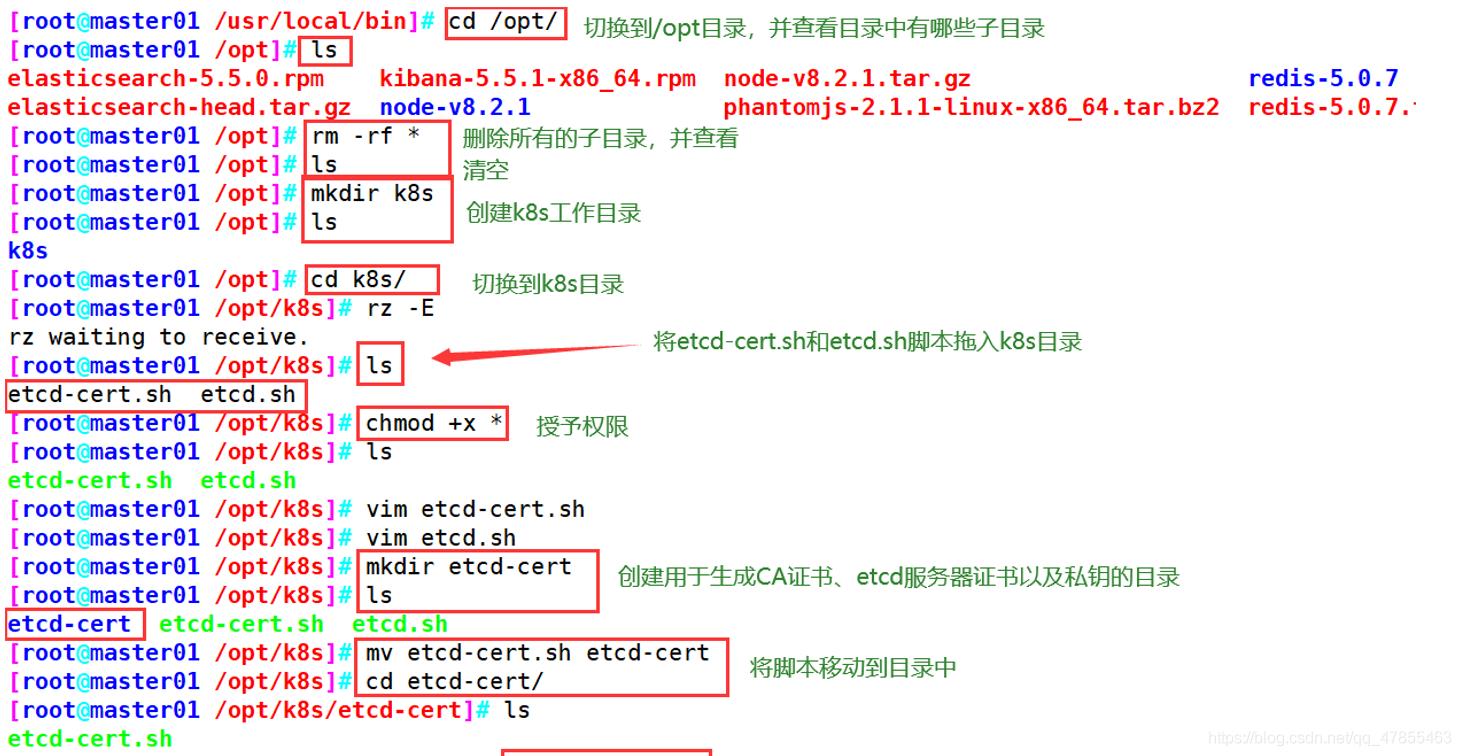

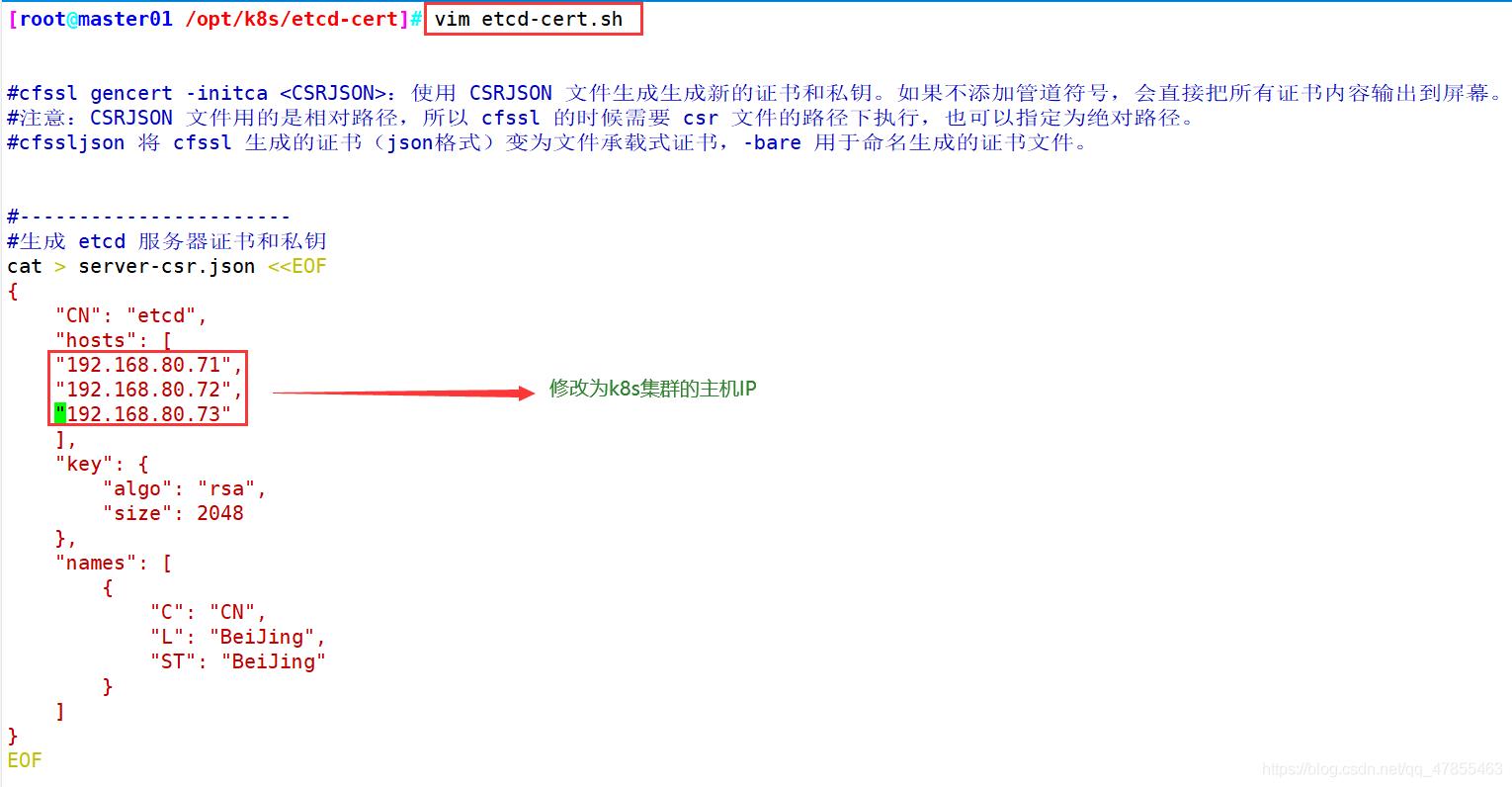

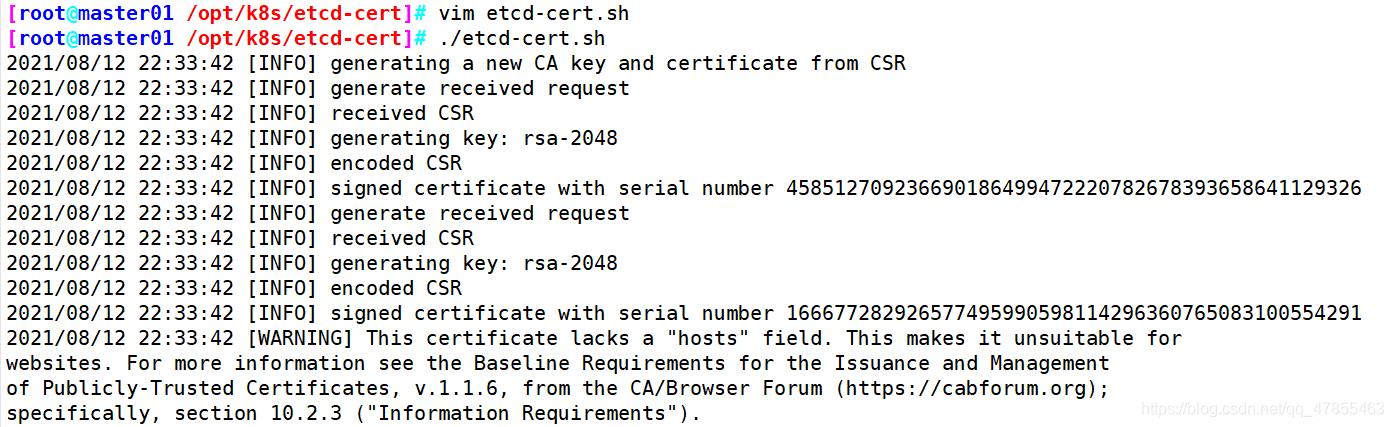

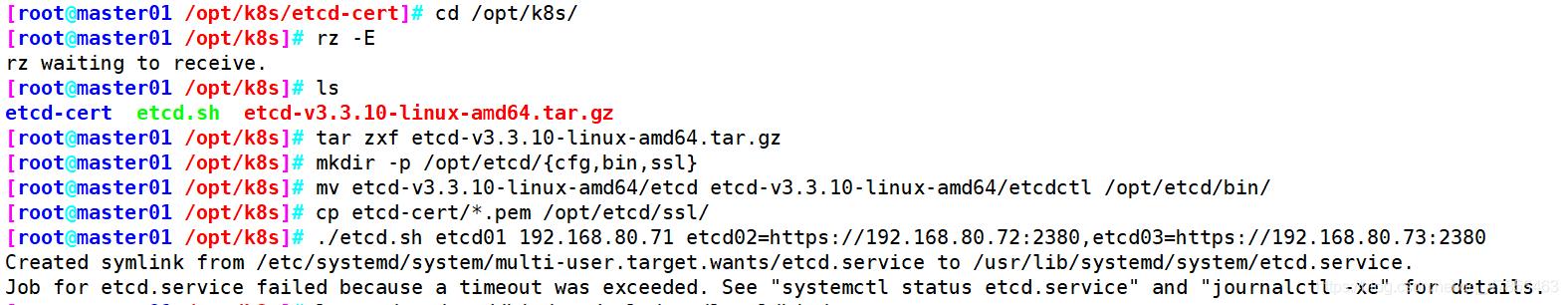

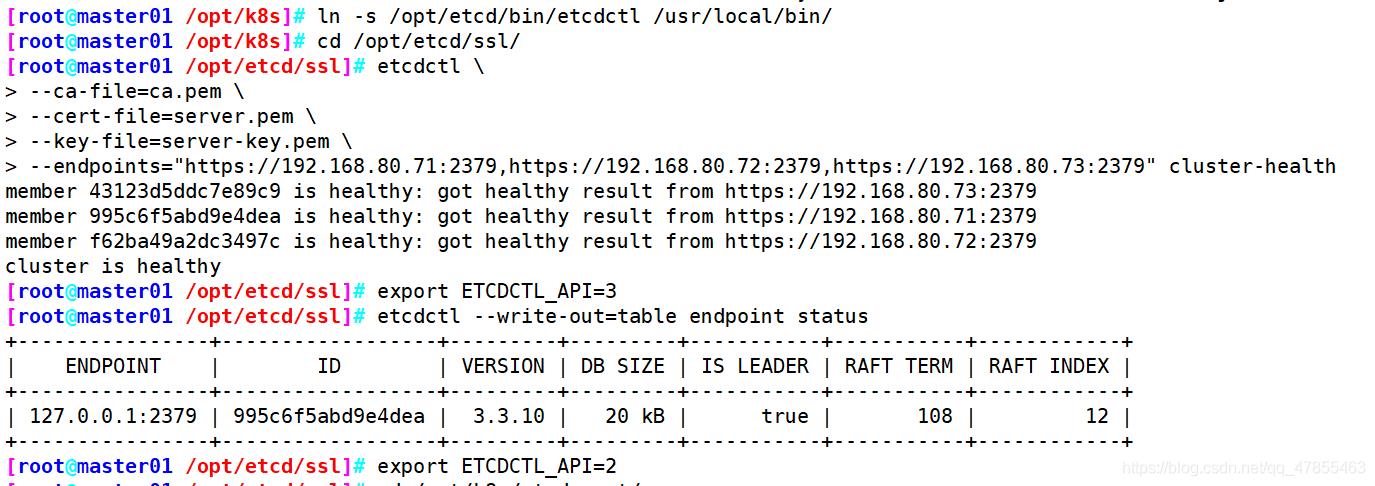

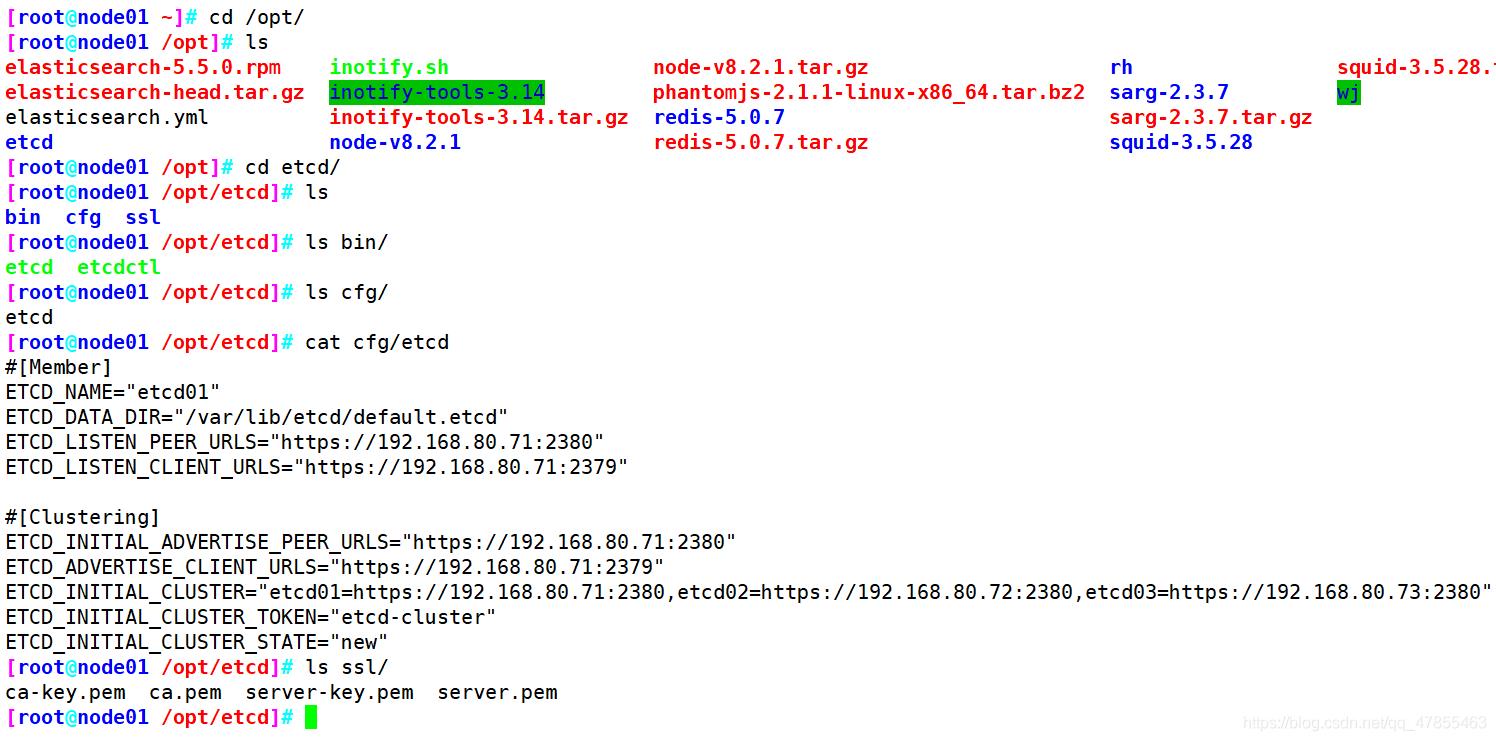

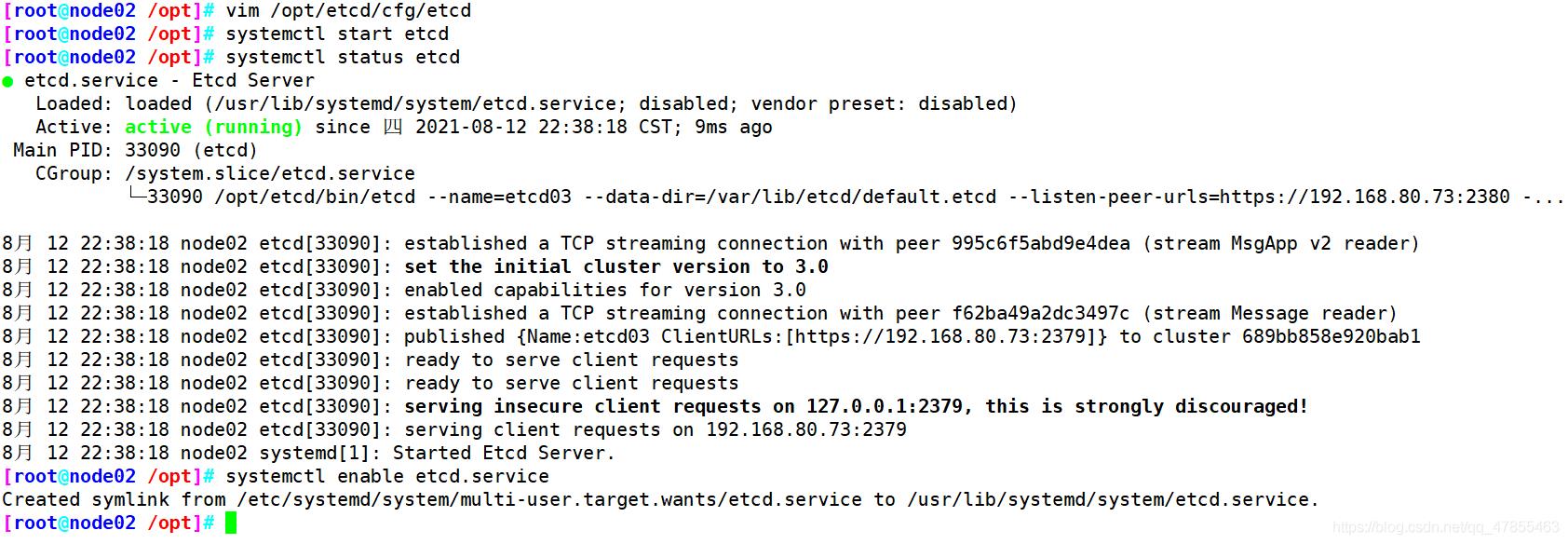

1. etcd cluster

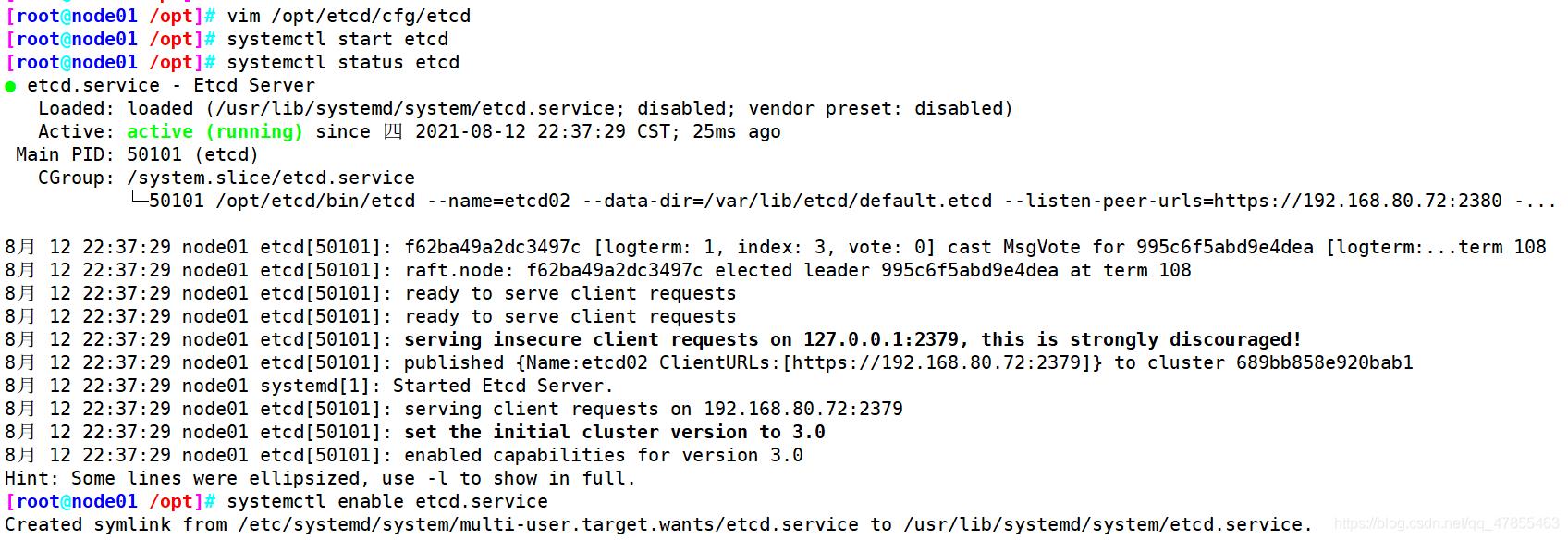

master01

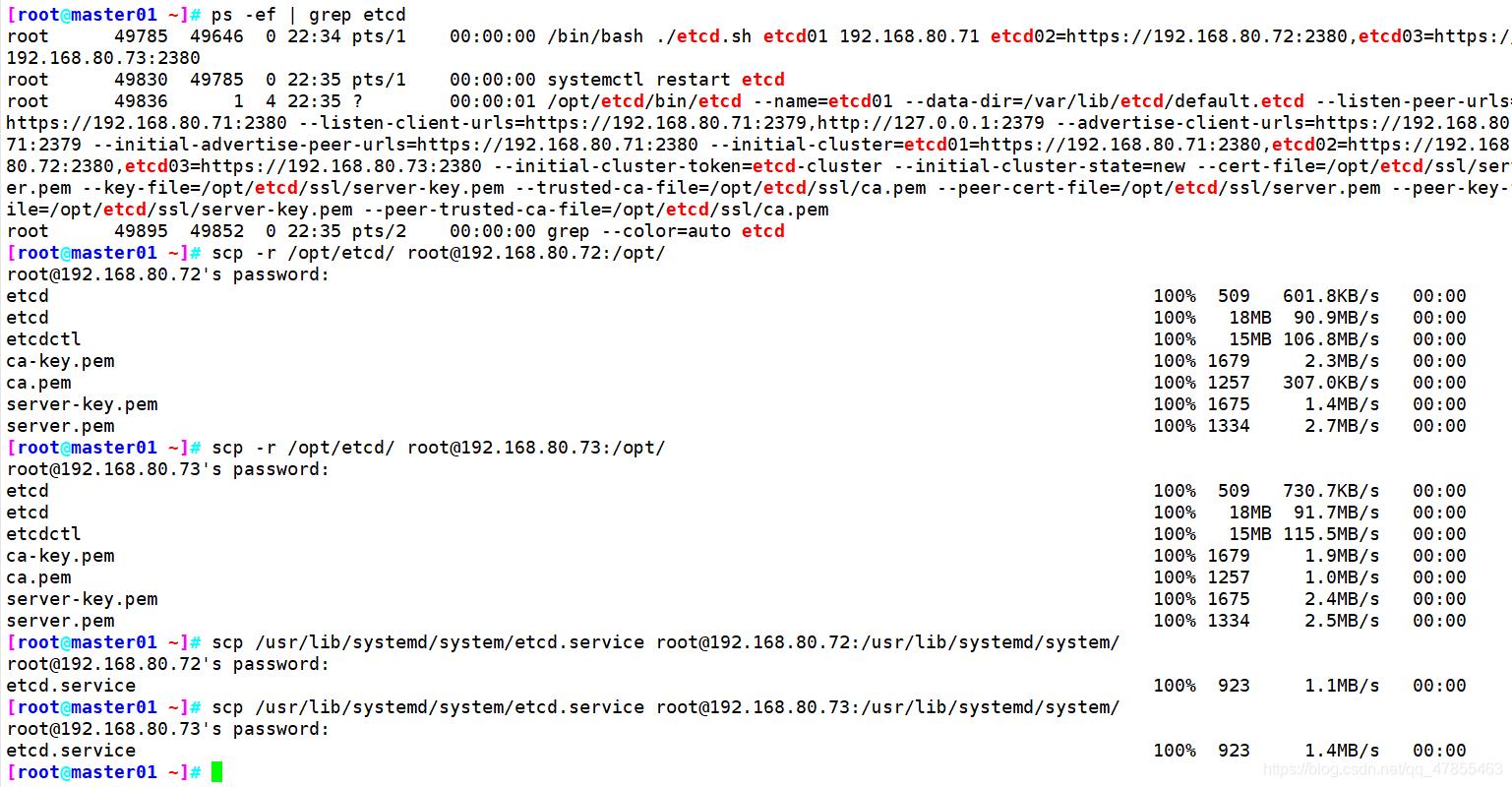

Open another terminal window and check that the etcd process is running normally

node01

On node01 and node02, check the directories holding the etcd config file, binaries and certificates copied over from the master

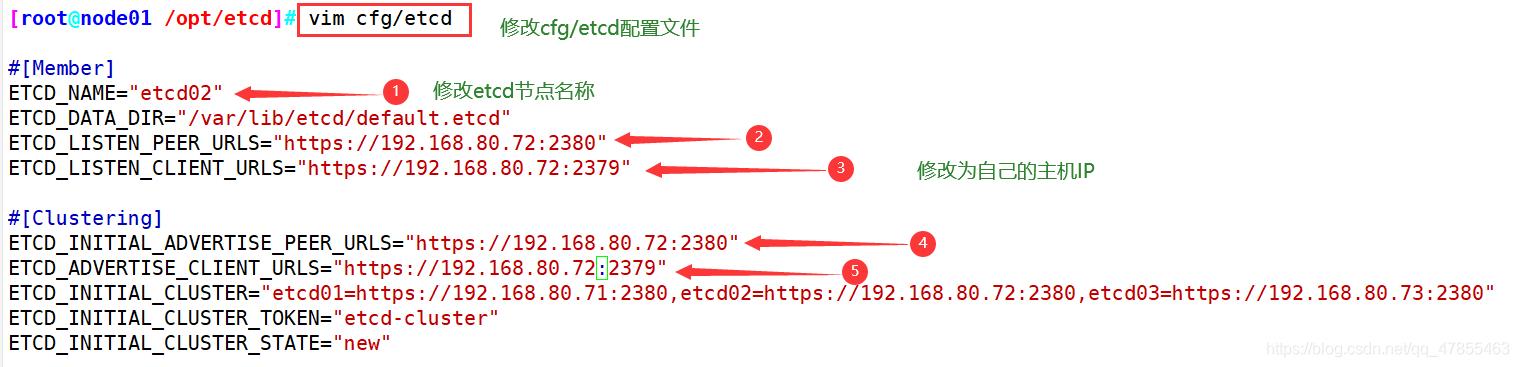

Edit the cfg/etcd config file on each node

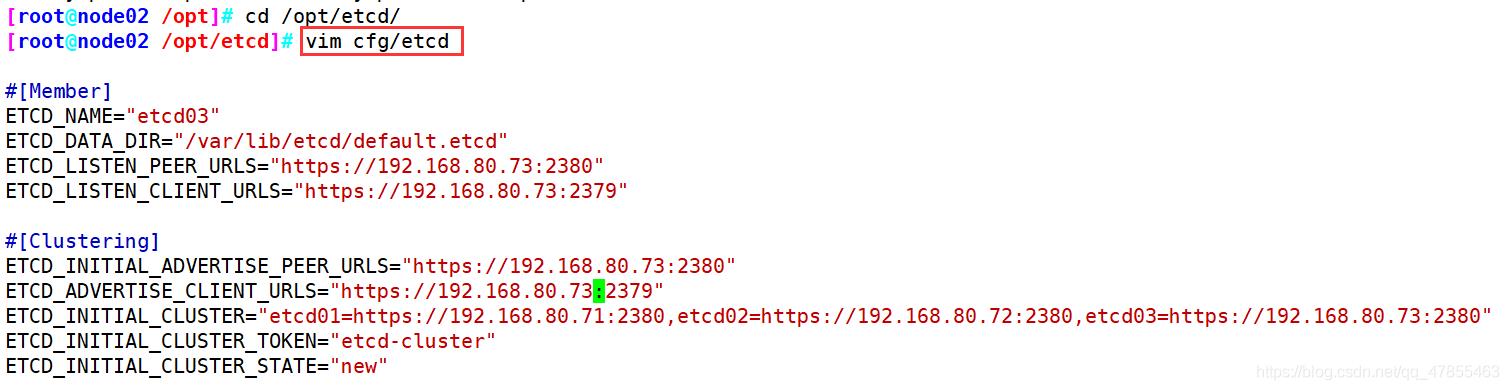
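The screenshot shows the edit being made by hand. As a minimal sketch, assuming the cfg/etcd file uses the common layout in which `ETCD_NAME` holds the member name, the `*_URLS` lines carry master01's IP (192.168.80.71), and `ETCD_INITIAL_CLUSTER` lists all three members (which must stay unchanged), the same change can be scripted with sed. The function name, the member names and the exact file layout are assumptions, not taken from the original:

```shell
#!/bin/sh
# Hypothetical helper: adapt an etcd config copied from master01 for another member.
# $1 = config file, $2 = new member name, $3 = this node's IP.
# Assumes lines like ETCD_NAME="etcd01" and URLs containing 192.168.80.71.
retarget_etcd_config() {
    conf=$1 name=$2 ip=$3
    sed -i \
        -e "s/^ETCD_NAME=.*/ETCD_NAME=\"$name\"/" \
        -e "/^ETCD_INITIAL_CLUSTER=/!s/192\.168\.80\.71/$ip/g" \
        "$conf"
    # The second expression skips ETCD_INITIAL_CLUSTER, which must keep every member's address.
}
```

For example, on node01 something like `retarget_etcd_config /opt/etcd/cfg/etcd etcd02 192.168.80.72` (path presumed from the cfg/etcd and /opt/etcd/ssl directories used in this article), and on node02 `etcd03` with `192.168.80.73`.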

node02

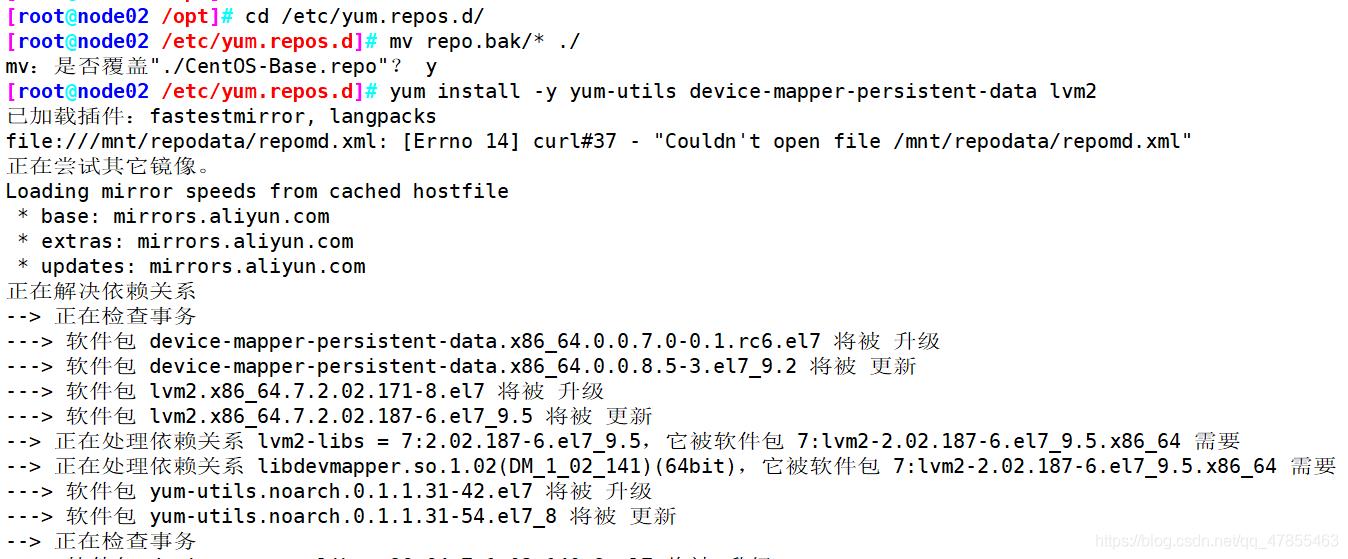

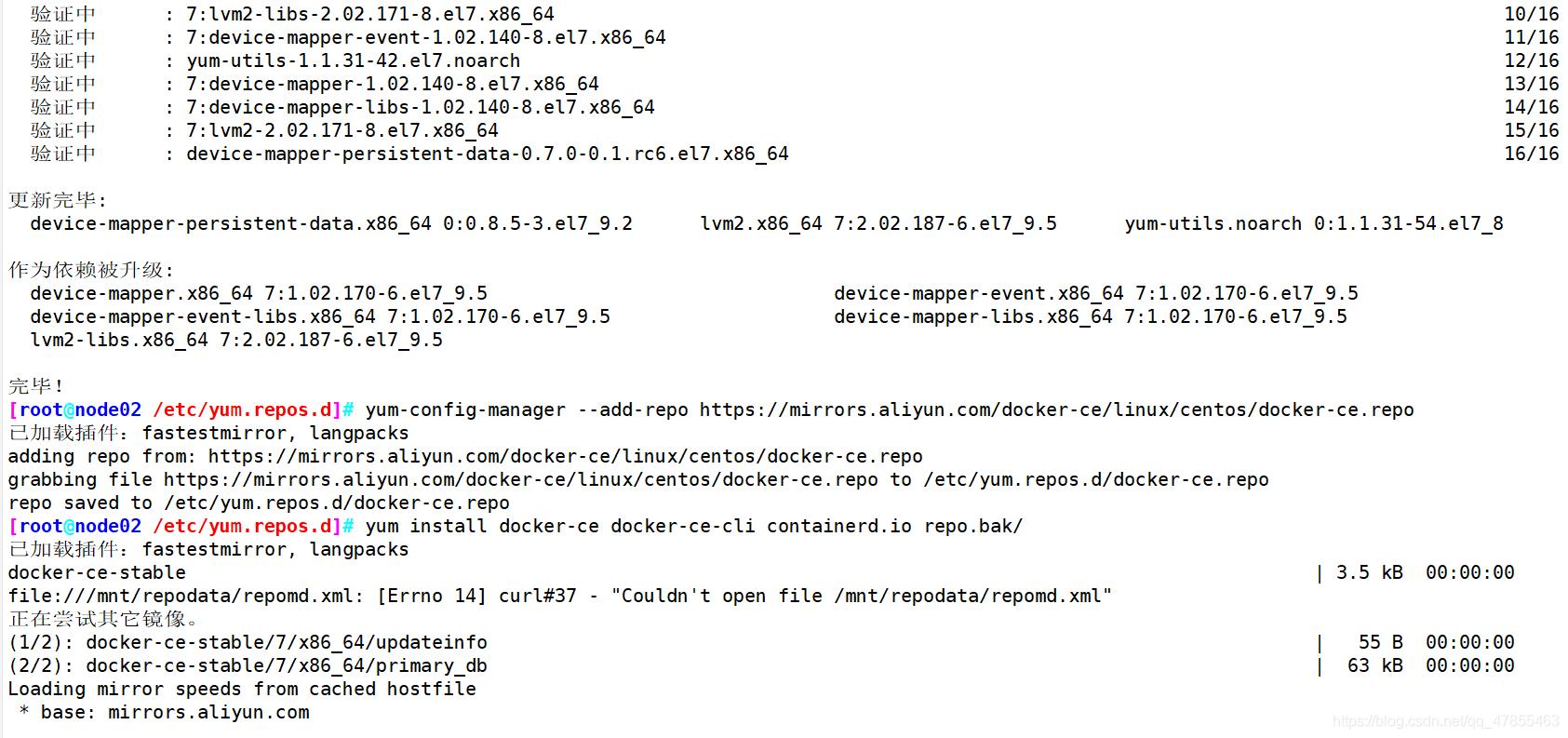

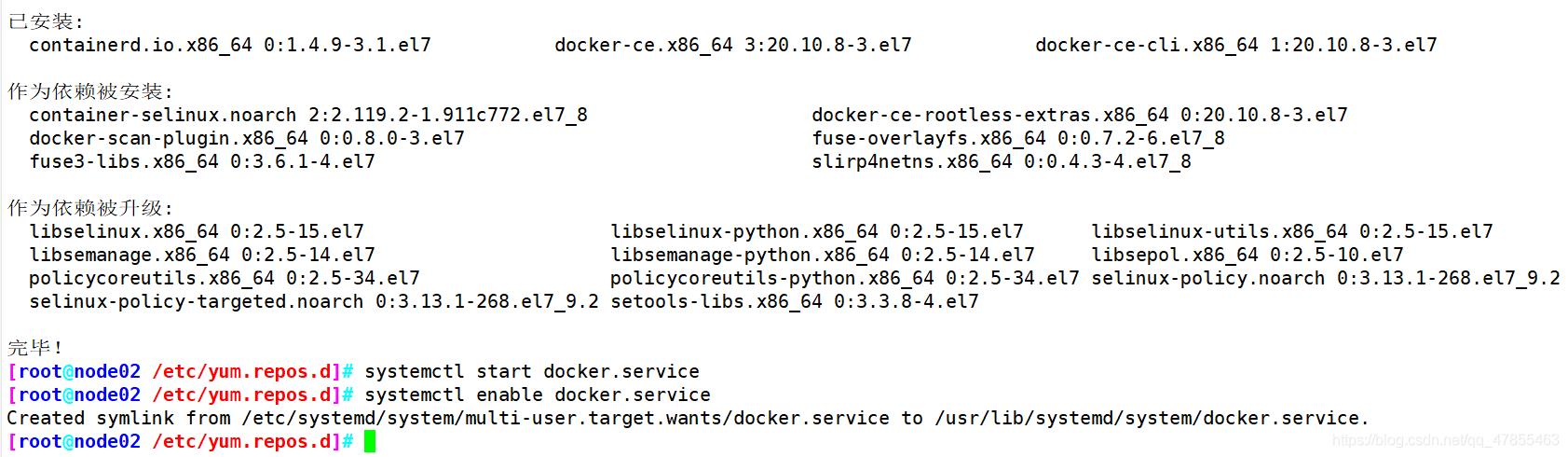

// Deploy the Docker engine on all nodes

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

systemctl start docker.service

systemctl enable docker.service

2. flannel network plugin

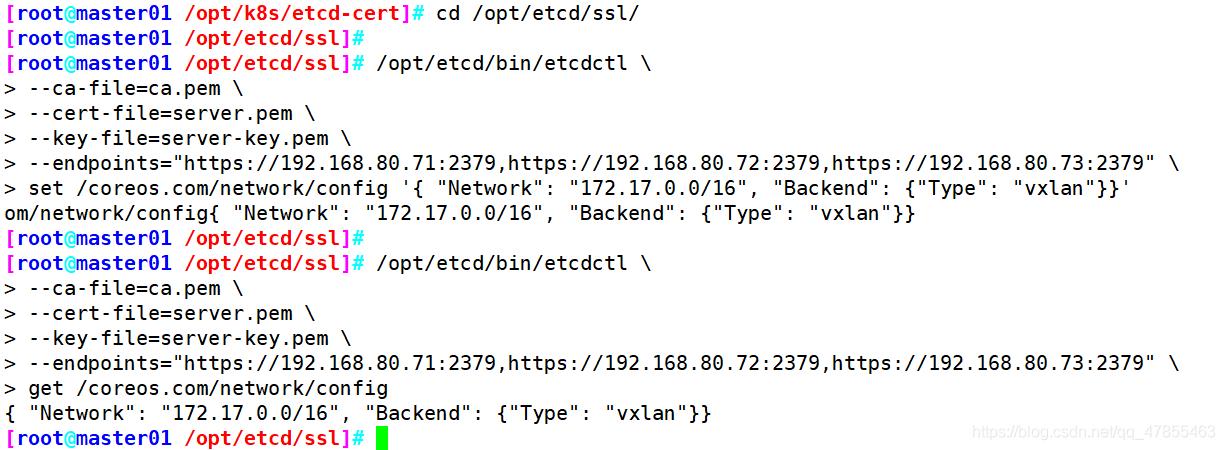

# Write the subnet range to be allocated into etcd for flannel to use (on the master)
cd /opt/etcd/ssl/
/opt/etcd/bin/etcdctl \
--ca-file=ca.pem \
--cert-file=server.pem \
--key-file=server-key.pem \
--endpoints="https://192.168.80.71:2379,https://192.168.80.72:2379,https://192.168.80.73:2379" \
set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

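# Command notes --------------------------------------------------
# etcdctl authenticates with the CA and client certificates; the target endpoints are the three etcd nodes on port 2379
# set /coreos.com/network/config   writes the network settings under flannel's default etcd key
# "Network": "172.17.0.0/16"   the overall Pod network (a /16 here); flannel gives each node a smaller subnet (a /24 by default) from it, and Pods on that node get addresses from that subnet
# "Backend": {"Type": "vxlan"}   traffic between hosts is encapsulated with VXLAN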

----------------------------------------------------------
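To make the relationship between the /16 and the per-node subnets concrete: with flannel's default per-host subnet length of /24 (configurable with a `SubnetLen` key, which the config above does not set), a /16 leaves 8 bits for subnet numbers. A small sketch of that arithmetic; `subnet_count` and `usable_hosts` are illustrative names, not flannel tools:

```shell
#!/bin/sh
# subnet_count NETWORK_PREFIX SUBNET_PREFIX: how many subnets of the given
# length fit in a network of the given length.
subnet_count() {
    echo $(( 1 << ($2 - $1) ))
}
# usable_hosts SUBNET_PREFIX: addresses in one subnet minus the network
# and broadcast addresses.
usable_hosts() {
    echo $(( (1 << (32 - $1)) - 2 ))
}
# subnet_count 16 24  -> 256 /24 blocks in 172.17.0.0/16
# usable_hosts 24     -> 254 addresses per node subnet (docker0 takes one as the gateway)
```

The docker0 addresses used in the ping test later (172.17.100.1 and 172.17.22.1) each sit in one such /24.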

# View the stored configuration (on the master)
/opt/etcd/bin/etcdctl \
--ca-file=ca.pem \
--cert-file=server.pem \
--key-file=server-key.pem \
--endpoints="https://192.168.80.71:2379,https://192.168.80.72:2379,https://192.168.80.73:2379" \
get /coreos.com/network/config
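The same three-member endpoint list is typed out several times in this walkthrough (here, in flannel.sh, and again for the apiserver). A small sketch that builds it from the member IPs, assuming client port 2379 as above; `build_endpoints` is a hypothetical helper, not part of etcdctl:

```shell
#!/bin/sh
# Build "https://IP1:2379,https://IP2:2379,..." from a list of IP addresses.
build_endpoints() {
    out=""
    for ip in "$@"; do
        # ${out:+$out,} adds the previous entries plus a comma only when out is non-empty
        out="${out:+$out,}https://$ip:2379"
    done
    echo "$out"
}
# Usage with the etcd v2 flags shown above:
# ENDPOINTS=$(build_endpoints 192.168.80.71 192.168.80.72 192.168.80.73)
# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \
#     --endpoints="$ENDPOINTS" get /coreos.com/network/config
```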

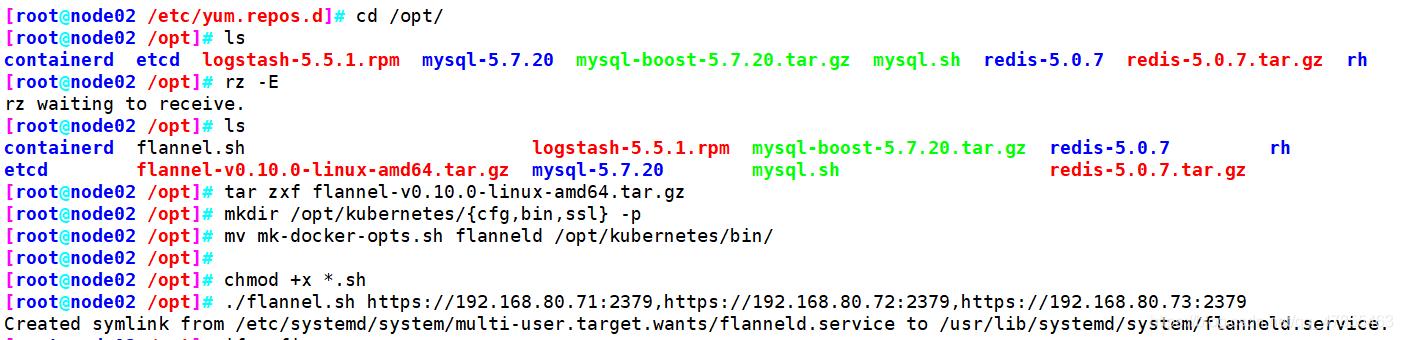

# Upload the flannel packages to every node and extract them (all nodes)

cd /opt/

rz -E   # upload flannel.sh and flannel-v0.10.0-linux-amd64.tar.gz (only the nodes need them)

ls

tar zxf flannel-v0.10.0-linux-amd64.tar.gz

# Create the k8s working directories (all nodes)

mkdir -p /opt/kubernetes/{cfg,bin,ssl}

mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

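# Create the startup script (both nodes)
vim flannel.sh
#!/bin/bash
# First argument: comma-separated etcd endpoints; falls back to a local etcd if omitted
ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}
# Create the flanneld config file; flannel needs the etcd CA and client certificates to reach etcd
cat <<EOF >/opt/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
-etcd-cafile=/opt/etcd/ssl/ca.pem \
-etcd-certfile=/opt/etcd/ssl/server.pem \
-etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF
# Create the systemd unit. ExecStartPost runs mk-docker-opts.sh, which writes the flannel-assigned
# subnet to /run/flannel/subnet.env so that Docker uses the network flannel provides.
# WantedBy=multi-user.target starts flanneld in multi-user mode.
# (systemd only treats # as a comment at the start of a line, so no annotations go inside the unit.)
cat <<EOF >/usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/cfg/flanneld
ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
systemctl daemon-reload
systemctl enable flanneld
systemctl restart flanneld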
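The default-value idiom intended on the first line of flannel.sh is the shell's `${parameter:-default}` expansion: the first script argument is used when it is given and non-empty, otherwise the local default. The braces matter; written as `$1:-"..."`, the shell would expand `$1` and then append the literal text `:-http://127.0.0.1:2379`. A tiny sketch of the intended behaviour (`pick_endpoints` is an illustrative name):

```shell
#!/bin/sh
# Mirrors ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}:
# print $1 if it is set and non-empty, otherwise the local default.
pick_endpoints() {
    echo "${1:-http://127.0.0.1:2379}"
}
# pick_endpoints                              -> http://127.0.0.1:2379
# pick_endpoints https://192.168.80.71:2379   -> https://192.168.80.71:2379
```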

# Enable flannel networking (both nodes)

chmod +x *.sh

./flannel.sh https://192.168.80.71:2379,https://192.168.80.72:2379,https://192.168.80.73:2379

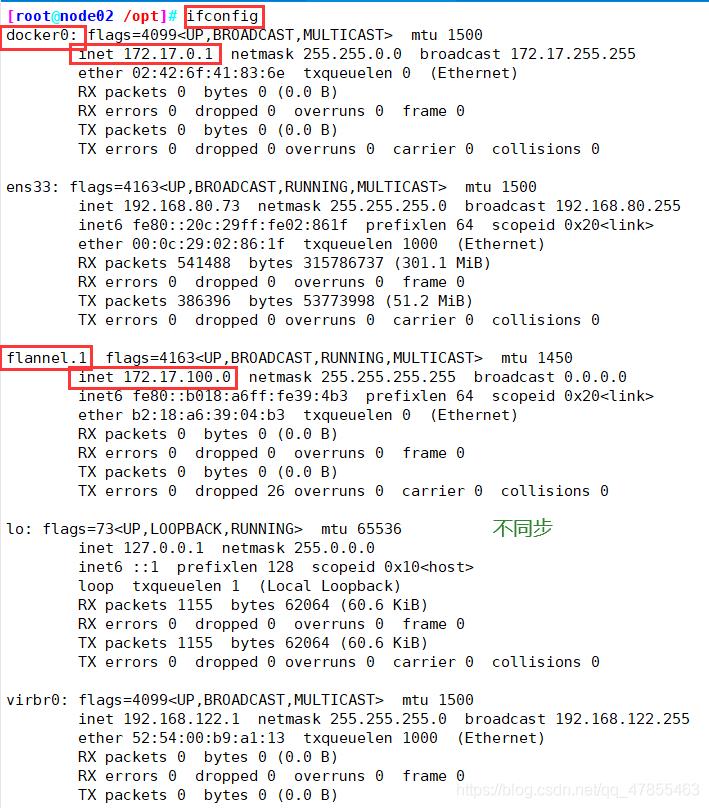

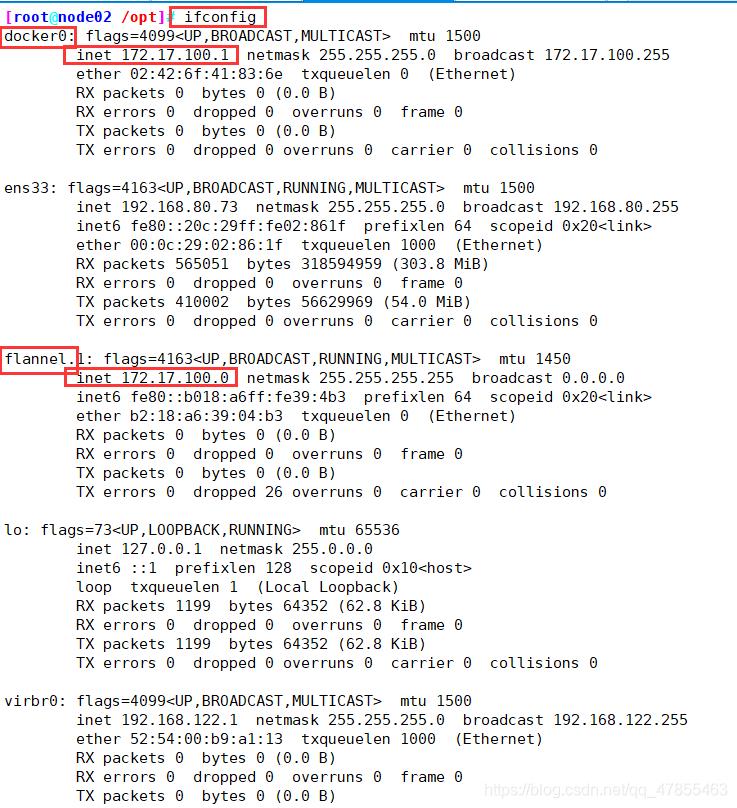

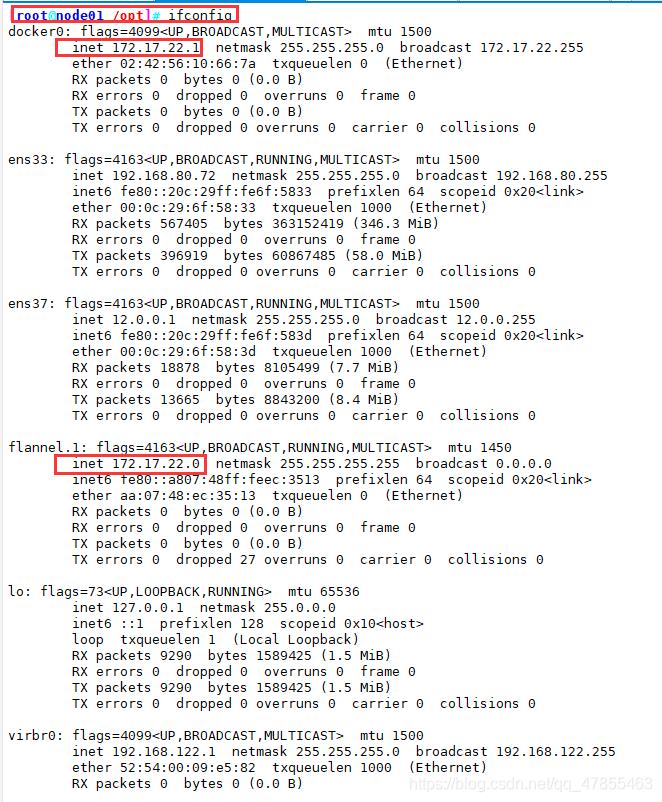

ifconfig

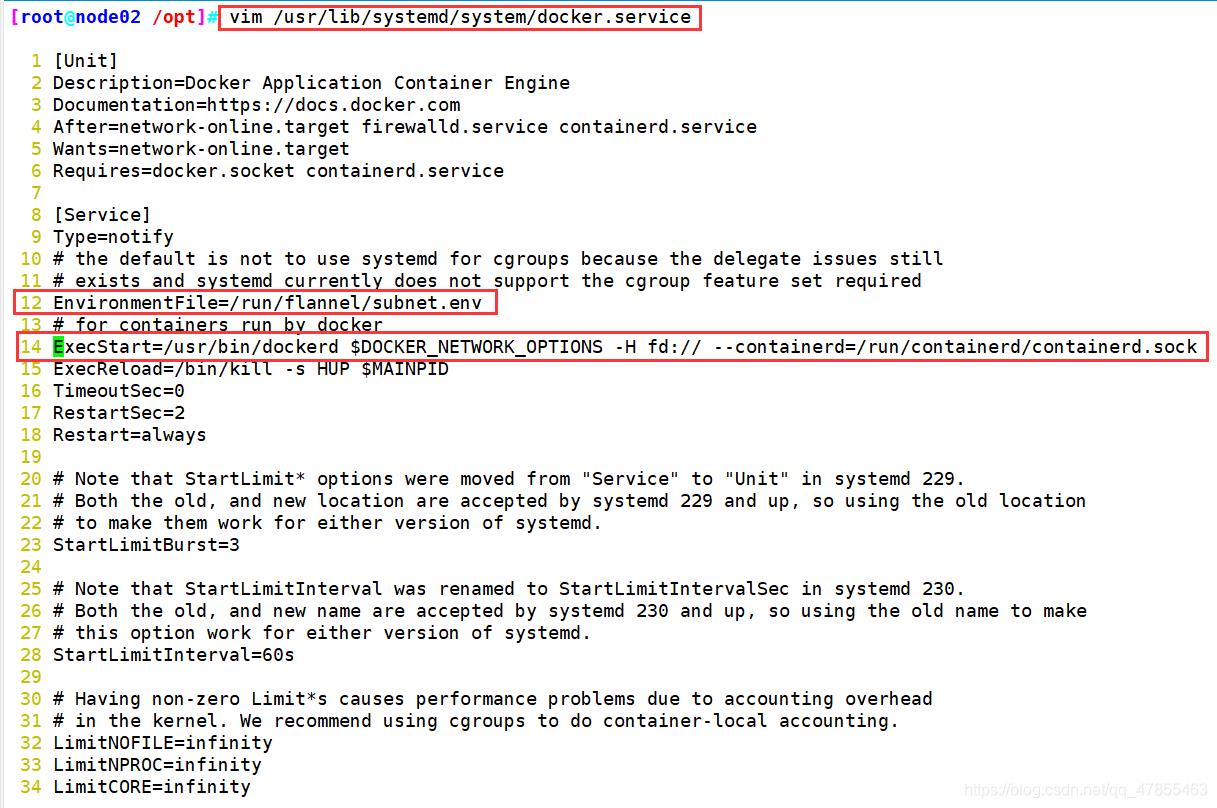

# Configure Docker to use flannel (both nodes)

vim /usr/lib/systemd/system/docker.service

----- add at line 12 (in [Service], just before ExecStart)

EnvironmentFile=/run/flannel/subnet.env

----- change line 13 (add the $DOCKER_NETWORK_OPTIONS argument)

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock
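Line numbers in docker.service may differ between docker-ce versions, so the two changes can also be applied by pattern. A minimal sketch, assuming the unit's ExecStart line begins with `ExecStart=/usr/bin/dockerd ` as shown above and that GNU sed is available; the helper name is hypothetical, and it only edits the file passed to it:

```shell
#!/bin/sh
# Hypothetical helper: wire a docker.service unit to flannel's subnet.env.
# $1 = unit file (normally /usr/lib/systemd/system/docker.service).
# Safe to run twice: each change is skipped if it is already present.
wire_docker_to_flannel() {
    unit=$1
    if ! grep -q '^EnvironmentFile=/run/flannel/subnet.env' "$unit"; then
        # Insert the EnvironmentFile line just before ExecStart (GNU sed one-line "i")
        sed -i '/^ExecStart=\/usr\/bin\/dockerd /i EnvironmentFile=/run/flannel/subnet.env' "$unit"
    fi
    # Add $DOCKER_NETWORK_OPTIONS right after the dockerd path, unless it is already there
    sed -i '/DOCKER_NETWORK_OPTIONS/!s#^ExecStart=/usr/bin/dockerd #ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS #' "$unit"
}
```

After running it as root against the real unit file, `systemctl daemon-reload` is still needed, as in the steps below.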

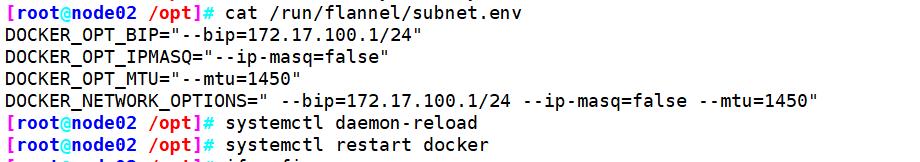

# View the subnet flannel assigned to this node

cat /run/flannel/subnet.env
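This file is written by mk-docker-opts.sh (run from ExecStartPost in the flanneld unit). Its exact contents may vary with the flannel version; the sample in the comments below is an assumed illustration of the usual `--bip=... --ip-masq=... --mtu=...` form, not output captured from this cluster. A sketch that extracts the docker0 address and prefix flannel assigned:

```shell
#!/bin/sh
# Print the --bip value (docker0 address/prefix) from a subnet.env-style file.
# $1 = file (normally /run/flannel/subnet.env).
docker_bip() {
    # "--" ends grep's options so the pattern may start with "--"
    grep -o -- '--bip=[^ "]*' "$1" | head -n 1 | cut -d= -f2
}
# Assumed example content:
#   DOCKER_OPT_BIP="--bip=172.17.100.1/24"
#   DOCKER_NETWORK_OPTIONS=" --bip=172.17.100.1/24 --ip-masq=false --mtu=1450"
```

After Docker is restarted below, the value printed by `docker_bip /run/flannel/subnet.env` should match the address shown for docker0 by `ifconfig`.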

# Reload systemd units and restart Docker

systemctl daemon-reload

systemctl restart docker

ifconfig

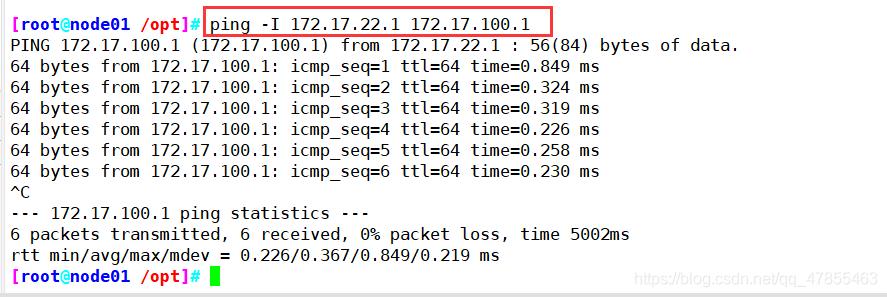

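// Check that the other node's docker0 interface is reachable, which shows flannel is routing traffic between hosts

docker run -it centos:7 /bin/bash

yum install net-tools -y

ping 172.17.22.1   (inside the container; 172.17.22.1 is the peer node's docker0 address)

ping -I 172.17.100.1 172.17.22.1   (on the host; -I sets the source address, so it must be this node's own docker0 address, here 172.17.100.1)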

master node

both nodes

node01

3. Set up the master components

# Create the k8s working directories and the directory for the apiserver certificates

cd ~/k8s

mkdir -p /opt/kubernetes/{cfg,bin,ssl}

mkdir k8s-cert

# Generate the certificates

cd k8s-cert

vim k8s-cert.sh

cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
# server-csr.json "hosts" entries (JSON allows no comments, so the roles are noted here):
#   10.0.0.1            first address of the Service range (10.0.0.0/24), used by the kubernetes Service
#   127.0.0.1           localhost
#   192.168.184.140     master1
#   192.168.184.145     master2 (reserved for the later multi-master setup)
#   192.168.184.200     VIP (floating address)
#   192.168.184.146     nginx load balancer 1 (primary)
#   192.168.184.147     nginx load balancer 2 (backup)
# These 192.168.184.x addresses do not match the 192.168.80.x environment listed at the top; substitute your own.
cat > server-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.184.140",
    "192.168.184.145",
    "192.168.184.200",
    "192.168.184.146",
    "192.168.184.147",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# Run the script to generate the k8s certificates

bash k8s-cert.sh

# The local directory should now contain 8 certificate files (.pem)

ls *.pem
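The eight files are the CA, server, admin and kube-proxy certificates, each with its `-key.pem`. A small sketch that reports any that are missing; `check_k8s_certs` is a hypothetical helper that takes the directory to inspect:

```shell
#!/bin/sh
# Report any of the 8 expected .pem files missing from directory $1.
# Returns 0 if all are present, 1 otherwise.
check_k8s_certs() {
    dir=${1:-.}
    missing=0
    for name in ca server admin kube-proxy; do
        for f in "$name.pem" "$name-key.pem"; do
            if [ ! -f "$dir/$f" ]; then
                echo "missing: $f"
                missing=1
            fi
        done
    done
    return "$missing"
}
```

Running `check_k8s_certs .` inside k8s-cert prints nothing and succeeds when the script above has generated everything.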

# Copy the CA and server certificates into the k8s working directory

cp ca*.pem server*.pem /opt/kubernetes/ssl

ls /opt/kubernetes/ssl/

# Extract the kubernetes package

cd ../

tar zxvf kubernetes-server-linux-amd64.tar.gz

# Copy the key binaries into the k8s working directory

cd kubernetes/server/bin

cp kube-controller-manager kubectl kube-apiserver kube-scheduler /opt/kubernetes/bin

# Generate a random token: 16 random bytes from /dev/urandom, printed as hex with the spaces removed

head -c 16 /dev/urandom | od -An -t x | tr -d ' '

# Create the token file

cd /opt/kubernetes/cfg

vim token.csv

<random token from the previous step>,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

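------------------------------

What this entry is for:

① Where it is created: on the master; the apiserver loads it through --token-auth-file.

② What it is used for: the kubelet on each node presents this token the first time it contacts the apiserver (TLS bootstrapping), before it has a client certificate of its own.

③ The line maps the token to the user kubelet-bootstrap (UID 10001) in the group system:kubelet-bootstrap.

④ Requests carrying the token are treated as coming from that user and group; what they may do is decided by the RBAC bindings for that user or group.

⑤ Without a valid token, a node's kubelet cannot authenticate to the apiserver and therefore cannot join the cluster.

------------------------------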
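The two steps above (generate a random token, then write it as the first field of token.csv) can be combined. A minimal sketch, assuming the same user, UID and group as the line shown; the function name is hypothetical and the output path is a parameter, so nothing under /opt/kubernetes/cfg is overwritten unless that path is passed:

```shell
#!/bin/sh
# Hypothetical helper: write a kubelet-bootstrap entry to a token file and print the token.
# Line format: token,user,uid,"group"
# $1 = output file (normally /opt/kubernetes/cfg/token.csv)
write_bootstrap_token() {
    out=$1
    # 16 random bytes -> 32 lowercase hex characters, spaces and newline removed
    token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
    echo "${token},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\"" > "$out"
    echo "$token"
}
```

Keep the printed value: the nodes' kubelets have to present this same token when they bootstrap.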

# With the binaries, token and certificates in place, start the apiserver

Upload master.zip

cd /root/k8s

unzip master.zip

chmod +x controller-manager.sh

apiserver.sh script overview -------------------------------------
#!/bin/bash
# $1 = this master's own IP address, $2 = comma-separated etcd endpoints (the etcd cluster)
MASTER_ADDRESS=$1
ETCD_SERVERS=$2
# Write the config file into the k8s working directory. The annotations live here, not inside
# the heredoc, because systemd would keep a trailing "# ..." as part of the value:
#   --logtostderr=true               log to stderr
#   --etcd-servers                   etcd cluster the apiserver reads data from and stores data in
#   --bind-address                   address to listen on
#   --advertise-address              address advertised to cluster members (the master's own IP)
#   --allow-privileged=true          allow privileged containers
#   --enable-admission-plugins       admission plugins: namespace lifecycle, limit ranges, service accounts, quotas, node restriction
#   --authorization-mode=RBAC,Node   authorize requests with the RBAC and Node authorizers
#   --kubelet-https=true             use HTTPS when the apiserver connects to kubelets
#   --enable-bootstrap-token-auth    enable bootstrap token authentication
#   --token-auth-file                path of the token file created above
#   --service-node-port-range        port range available to NodePort Services
#   the remaining flags are certificate and key files
cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \\
--v=4 \\
--etcd-servers=${ETCD_SERVERS} \\
--bind-address=${MASTER_ADDRESS} \\
--secure-port=6443 \\
--advertise-address=${MASTER_ADDRESS} \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--kubelet-https=true \\
--enable-bootstrap-token-auth \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-50000 \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF
# Create the systemd service unit
cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver

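# Start the apiserver. Arguments: this master's IP address, then the comma-separated etcd endpoints.
# The 192.168.184.x addresses here do not match the environment listed at the top (master01 192.168.80.71, etcd on 192.168.80.71/.72/.73); substitute your own.

bash apiserver.sh 192.168.184.140 https://192.168.184.140:2379,https://192.168.184.141:2379,https://192.168.184.142:2379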

# Check the apiserver process to verify it started

ps aux | grep kube

# Check that the config file was written correctly

cat /opt/kubernetes/cfg/kube-apiserver
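systemd treats `#` as a comment only at the start of a line, so an annotation left at the end of a line in an EnvironmentFile or unit file becomes part of the value (and, after a trailing `\`, also breaks the line continuation). A small heuristic sketch for spotting such leftovers in a generated file; the helper name is hypothetical, and it flags any line where `#` follows whitespace:

```shell
#!/bin/sh
# Hypothetical check: print lines where '#' follows whitespace, i.e. likely
# inline comments that systemd would keep as part of the value.
# $1 = file to check (e.g. /opt/kubernetes/cfg/kube-apiserver).
# Returns 1 if any such line is found, 0 otherwise.
find_inline_comments() {
    if grep -n '[[:space:]]#' "$1"; then
        return 1
    fi
    return 0
}
```

`find_inline_comments /opt/kubernetes/cfg/kube-apiserver` printing nothing is a quick sign the options file is clean; the same check applies to the flanneld and scheduler files.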

# Check that the process is listening on its port

netstat -natp | grep 6443

# View the scheduler startup script

vim scheduler.sh

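#!/bin/bash
MASTER_ADDRESS=$1
# Annotations (kept out of the heredoc so they do not end up in the config file):
#   --logtostderr=true   log to stderr
#   --master             apiserver address, using the local port 8080
#   --leader-elect       take part in leader election, so only one scheduler is active when several masters run
cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \\
--v=4 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect"
EOF
# Create the systemd service unit
cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes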