Building a Kafka cluster with docker-compose

Posted by 仅此而已-远方


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers building a Kafka cluster with docker-compose, and we hope you find it useful.

Environment

Kafka depends on ZooKeeper, so ZooKeeper has to be set up before Kafka. All services run on one host, with the following network layout:

| Service | Address |
| --- | --- |
| zookeeper | 192.168.56.101:2181 |
| kafka1 | 192.168.56.101:9092 |
| kafka2 | 192.168.56.101:9093 |
| kafka3 | 192.168.56.101:9094 |
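Clients do not have to name a single broker. Kafka's client flags accept a comma-separated list of `host:port` pairs, which lets a client still bootstrap if one broker is down. The sketch below derives that list from the table above (the names `HOST`, `BROKER_PORTS` and `bootstrap_servers` are illustrative, not part of any Kafka tool):

```python
from __future__ import annotations

# Values taken from the network table; everything runs on one host.
HOST = "192.168.56.101"
BROKER_PORTS = {"kafka1": 9092, "kafka2": 9093, "kafka3": 9094}


def bootstrap_servers(host: str, ports: dict[str, int]) -> str:
    """Comma-separated host:port list, usable as a client connection string."""
    return ",".join(f"{host}:{port}" for port in ports.values())


if __name__ == "__main__":
    # Prints 192.168.56.101:9092,192.168.56.101:9093,192.168.56.101:9094
    print(bootstrap_servers(HOST, BROKER_PORTS))
```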

Setup

1. Install docker-compose

```shell
curl -L http://mirror.azure.cn/docker-toolbox/linux/compose/1.25.4/docker-compose-Linux-x86_64 -o /usr/local/bin/docker-compose
chmod +x /usr/local/bin/docker-compose
```

2. Create the docker-compose.yaml file

```yaml
version: '3.3'
services:
  zookeeper:
    image: wurstmeister/zookeeper
    container_name: zookeeper
    ports:
      - "2181:2181"
    volumes:
      - ./data/zookeeper/data:/data
      - ./data/zookeeper/datalog:/datalog
      - ./data/zookeeper/logs:/logs
    restart: always

  kafka1:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka1
    ports:
      - "9092:9092"
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: 192.168.56.101:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.56.101:9092
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
    volumes:
      # Unless KAFKA_LOG_DIRS is set, this image may keep message logs under
      # /kafka rather than /data; check before relying on these mounts.
      - /data/kafka1/data:/data
      - /data/kafka1/log:/datalog
    restart: unless-stopped

  kafka2:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka2
    ports:
      - "9093:9093"
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_ZOOKEEPER_CONNECT: 192.168.56.101:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.56.101:9093
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9093
    volumes:
      - /data/kafka2/data:/data
      - /data/kafka2/log:/datalog
    restart: unless-stopped

  kafka3:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka3
    ports:
      - "9094:9094"
    environment:
      KAFKA_BROKER_ID: 3
      KAFKA_ZOOKEEPER_CONNECT: 192.168.56.101:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.56.101:9094
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9094
    volumes:
      - /data/kafka3/data:/data
      - /data/kafka3/log:/datalog
    restart: unless-stopped
```
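The three kafka services in the compose file differ only in broker ID, port and container name. As a sketch of that pattern, the Python below builds the same service entries as plain dictionaries; the helper names and the `FIRST_PORT` convention (kafka1 on 9092, kafka2 on 9093, and so on) are assumptions mirroring this article's layout, not a docker-compose feature:

```python
from __future__ import annotations

import json

HOST = "192.168.56.101"      # host IP from the network table
ZK_CONNECT = f"{HOST}:2181"  # ZooKeeper address shared by every broker
FIRST_PORT = 9092            # kafka1 -> 9092, kafka2 -> 9093, ...


def kafka_service(broker_id: int) -> dict:
    """One wurstmeister/kafka service entry, mirroring the YAML above."""
    port = FIRST_PORT + broker_id - 1
    name = f"kafka{broker_id}"
    return {
        "image": "wurstmeister/kafka",
        "depends_on": ["zookeeper"],
        "container_name": name,
        "ports": [f"{port}:{port}"],
        "environment": {
            "KAFKA_BROKER_ID": broker_id,
            "KAFKA_ZOOKEEPER_CONNECT": ZK_CONNECT,
            # Address clients are told to use (must be reachable from outside).
            "KAFKA_ADVERTISED_LISTENERS": f"PLAINTEXT://{HOST}:{port}",
            # Address the broker binds to inside its container.
            "KAFKA_LISTENERS": f"PLAINTEXT://0.0.0.0:{port}",
        },
        "volumes": [f"/data/{name}/data:/data", f"/data/{name}/log:/datalog"],
        "restart": "unless-stopped",
    }


def kafka_services(count: int) -> dict[str, dict]:
    """Service entries for brokers 1..count, keyed by service name."""
    return {f"kafka{i}": kafka_service(i) for i in range(1, count + 1)}


if __name__ == "__main__":
    # JSON for inspection only; emitting YAML would need a library such as PyYAML.
    print(json.dumps(kafka_services(3), indent=2))
```

Generating the entries this way makes it harder to give two brokers the same ID or port by a copy-and-paste slip.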

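Parameter notes:

KAFKA_BROKER_ID: a unique ID for each broker in the cluster

KAFKA_ZOOKEEPER_CONNECT: address of the ZooKeeper service

KAFKA_LISTENERS: the address the broker binds to inside the container (0.0.0.0 means all interfaces)

KAFKA_ADVERTISED_LISTENERS: the Kafka address published to clients; producers and consumers connect to this address, so it must be reachable from outside the container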
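Both listener settings use the form `PROTOCOL://host:port`. The sketch below splits such a value and applies two sanity checks that follow from this setup: the advertised host must be a real address (clients cannot connect to 0.0.0.0), and, because each compose service maps its port 1:1, the bind and advertised ports should match. The `parse_listener` and `check_broker` helpers are hypothetical and handle only a single listener, not Kafka's full comma-separated syntax:

```python
from typing import NamedTuple


class Listener(NamedTuple):
    protocol: str
    host: str
    port: int


def parse_listener(value: str) -> Listener:
    """Split a single 'PROTOCOL://host:port' listener into its parts."""
    protocol, sep, address = value.partition("://")
    if not sep:
        raise ValueError(f"missing '://' in {value!r}")
    host, colon, port = address.rpartition(":")
    if not colon:
        raise ValueError(f"missing port in {value!r}")
    return Listener(protocol, host, int(port))


def check_broker(listeners: str, advertised: str) -> None:
    """Checks specific to this article's single-listener, 1:1 port-mapped setup."""
    bind = parse_listener(listeners)
    adv = parse_listener(advertised)
    if adv.host in ("", "0.0.0.0"):
        raise ValueError("advertised host must be an address clients can reach")
    if bind.port != adv.port:
        raise ValueError("bind and advertised ports differ, but ports are mapped 1:1")


if __name__ == "__main__":
    # kafka1's values from the compose file pass both checks.
    check_broker("PLAINTEXT://0.0.0.0:9092", "PLAINTEXT://192.168.56.101:9092")
    print(parse_listener("PLAINTEXT://192.168.56.101:9092"))
```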

3. Start the cluster

```shell
docker-compose up -d
```

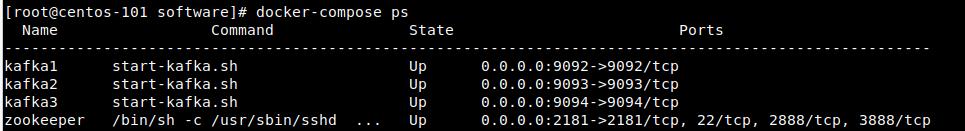

4. Check the started containers:

```shell
docker-compose ps
```

If you see output like the above, the cluster has started successfully.

Testing

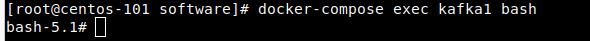

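1. Open a shell in the kafka1 container

```shell
docker-compose exec kafka1 bash
```

2. Create a topic named first with 3 partitions and 2 replicas

```shell
/opt/kafka_2.13-2.7.0/bin/kafka-topics.sh --create --topic first --zookeeper 192.168.56.101:2181 --partitions 3 --replication-factor 2
```

The path /opt/kafka_2.13-2.7.0 matches the Kafka version bundled in the image used here; adjust it if your image ships a different version.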

Note: the replication factor cannot exceed the number of brokers (the partition count can), otherwise topic creation fails.

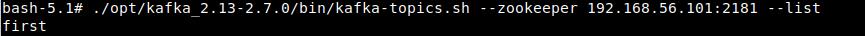
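The reason for this rule is that every replica of a partition must live on a different broker. The sketch below uses a simplified round-robin placement to show it; this is not Kafka's exact assignment algorithm (Kafka also randomizes the starting broker, so the replica lists that `--describe` prints may differ), and `assign_replicas` is a hypothetical helper:

```python
from __future__ import annotations


def assign_replicas(partitions: int, replication_factor: int,
                    brokers: list[int]) -> dict[int, list[int]]:
    """Simplified round-robin replica placement (NOT Kafka's exact algorithm).

    Replicas of one partition go on distinct brokers, so the replication
    factor is capped by the broker count; the partition count is not.
    """
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    if replication_factor > len(brokers):
        raise ValueError(
            f"replication factor {replication_factor} exceeds "
            f"{len(brokers)} available brokers"
        )
    n = len(brokers)
    return {
        p: [brokers[(p + r) % n] for r in range(replication_factor)]
        for p in range(partitions)
    }


if __name__ == "__main__":
    # Same shape as the topic "first": 3 partitions, 2 replicas, brokers 1-3.
    for partition, replicas in assign_replicas(3, 2, [1, 2, 3]).items():
        print(partition, replicas)  # 0 [1, 2] / 1 [2, 3] / 2 [3, 1]
```

With three brokers, `assign_replicas(10, 3, [1, 2, 3])` still works, while a replication factor of 4 raises an error, matching the note above.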

3. List the topics

```shell
/opt/kafka_2.13-2.7.0/bin/kafka-topics.sh --list --zookeeper 192.168.56.101:2181
```

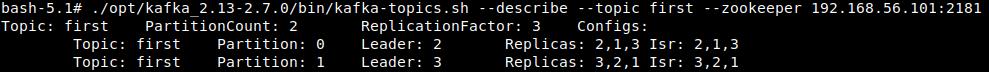

4. Describe the topic first

```shell
/opt/kafka_2.13-2.7.0/bin/kafka-topics.sh --describe --topic first --zookeeper 192.168.56.101:2181
```

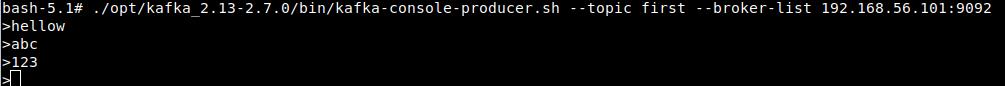

5. Start a console producer and send messages to the topic

```shell
/opt/kafka_2.13-2.7.0/bin/kafka-console-producer.sh --topic first --broker-list 192.168.56.101:9092
```

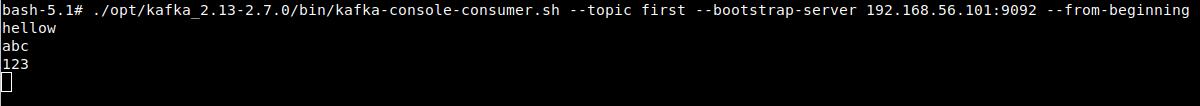

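6. Open a shell in the kafka2 or kafka3 container (as in step 1), then start a console consumer to receive the messages in the topic

```shell
/opt/kafka_2.13-2.7.0/bin/kafka-console-consumer.sh --topic first --bootstrap-server 192.168.56.101:9092 --from-beginning
```

Note: --from-beginning starts reading from the earliest available offset. Without it, the consumer starts at the latest offset and receives only messages produced after it starts.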
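The difference can be pictured with a toy model of one partition, where a message's offset is its list index. This is only an illustration, not Kafka client code: it ignores retention (the log is assumed to start at offset 0) and assumes the consumer has no committed offset, which is the case the flag affects (it roughly corresponds to `auto.offset.reset=earliest` versus the default `latest`). The function names are hypothetical:

```python
from __future__ import annotations


def starting_offset(log_start: int, log_end: int, from_beginning: bool) -> int:
    """Where a consumer with no committed offset begins reading a partition."""
    return log_start if from_beginning else log_end


def visible_messages(existing: list[str], produced_later: list[str],
                     from_beginning: bool) -> list[str]:
    """Messages a newly started consumer sees in a single-partition toy log."""
    start = starting_offset(0, len(existing), from_beginning)
    partition = existing + produced_later  # offsets are list indexes
    return partition[start:]


if __name__ == "__main__":
    old = ["hello", "world"]
    new = ["after-start"]
    print(visible_messages(old, new, from_beginning=True))   # ['hello', 'world', 'after-start']
    print(visible_messages(old, new, from_beginning=False))  # ['after-start']
```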

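Summary

1. Connection information

producer --> broker-list

Kafka cluster --> ZooKeeper

consumer --> bootstrap-server or ZooKeeper

Before version 0.9, consumers connected to ZooKeeper; from 0.9 on, they connect to the brokers via bootstrap-server by default. The console consumer's --zookeeper option was removed in Kafka 2.0, so with the 2.7.0 image used here the consumer must use --bootstrap-server.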
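The summary can be read as a lookup table from each command-line tool to the flag and address it connects with. The sketch below encodes exactly the combinations used in this article and rebuilds the test commands from them; `CONNECTION_FLAGS` and `build_command` are hypothetical names, and `BIN` is the image-specific path used above:

```python
from __future__ import annotations

import shlex

BIN = "/opt/kafka_2.13-2.7.0/bin"  # path from this article; depends on the image version
BROKERS = "192.168.56.101:9092"
ZOOKEEPER = "192.168.56.101:2181"

# Tool -> (connection flag, address), as used in the test steps above.
CONNECTION_FLAGS = {
    "kafka-topics.sh": ("--zookeeper", ZOOKEEPER),
    "kafka-console-producer.sh": ("--broker-list", BROKERS),
    "kafka-console-consumer.sh": ("--bootstrap-server", BROKERS),
}


def build_command(tool: str, *args: str) -> str:
    """Assemble a shell command line with the tool's connection flag appended."""
    flag, address = CONNECTION_FLAGS[tool]
    return shlex.join([f"{BIN}/{tool}", *args, flag, address])


if __name__ == "__main__":
    print(build_command("kafka-topics.sh", "--list"))
    print(build_command("kafka-console-consumer.sh", "--topic", "first", "--from-beginning"))
```

Keeping the mapping in one place makes it easy to switch every tool to a different address, for example the multi-broker list, in a single edit.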
