Data Visualization Fundamentals (Part 28): Pandas Basics, Merging with concat

Posted 秋华


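Preface: This article was compiled by the editors of 小常识网 (cha138.com) and introduces Data Visualization Fundamentals (Part 28): Pandas Basics, Merging with concat. We hope you find it useful.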

I. Merging

1 Concatenating objects

The concat() function (in the main pandas namespace) does all of the heavy lifting of performing concatenation operations along an axis while performing optional set logic (union or intersection) of the indexes (if any) on the other axes. Note that I say “if any” because there is only a single possible axis of concatenation for Series.
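To illustrate the Series case mentioned above, here is a minimal sketch assuming a recent pandas release; the two Series and their labels are hypothetical illustrative data. Stacking Series end to end along axis=0 simply yields another Series, with no other axis to union or intersect.

```python
import pandas as pd

# Illustrative data (hypothetical): two Series with their own index labels.
s_a = pd.Series([1, 2], index=["a", "b"])
s_b = pd.Series([3, 4], index=["c", "d"])

# A Series has only an index, so concatenation along axis=0 just stacks
# the values and labels; there is no column axis to apply set logic to.
stacked = pd.concat([s_a, s_b])
print(stacked)
```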

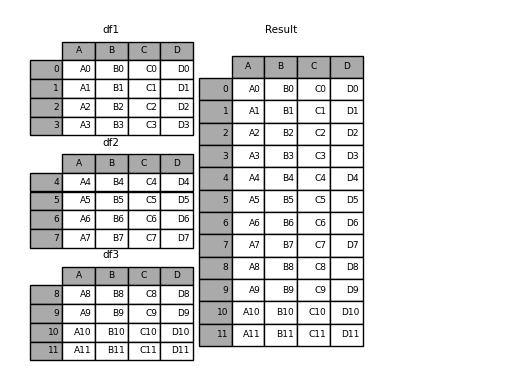

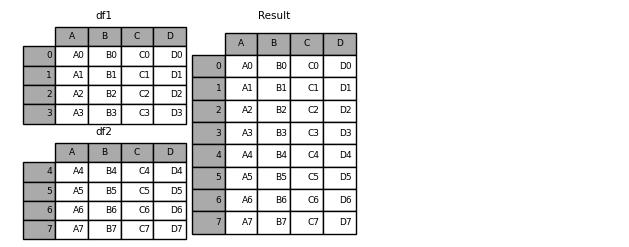

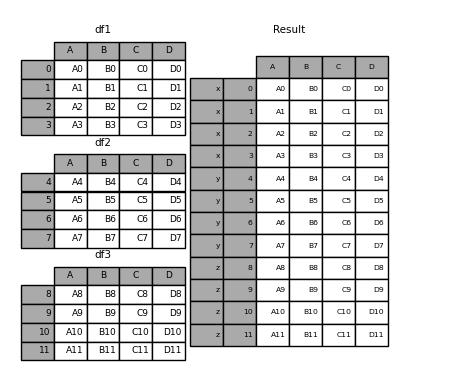

Before diving into all of the details of concat and what it can do, here is a simple example:

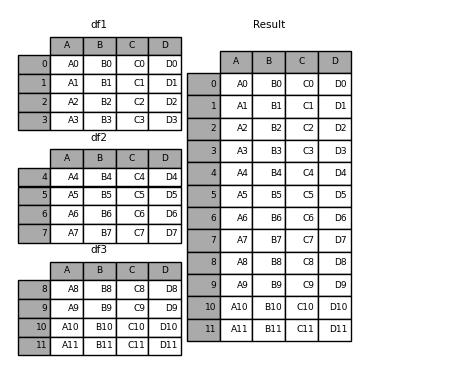

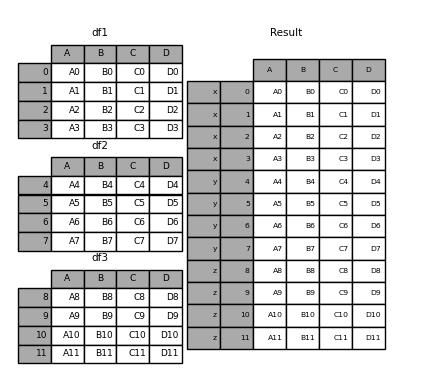

```python
import pandas as pd

df1 = pd.DataFrame(
    {
        "A": ["A0", "A1", "A2", "A3"],
        "B": ["B0", "B1", "B2", "B3"],
        "C": ["C0", "C1", "C2", "C3"],
        "D": ["D0", "D1", "D2", "D3"],
    },
    index=[0, 1, 2, 3],
)

df2 = pd.DataFrame(
    {
        "A": ["A4", "A5", "A6", "A7"],
        "B": ["B4", "B5", "B6", "B7"],
        "C": ["C4", "C5", "C6", "C7"],
        "D": ["D4", "D5", "D6", "D7"],
    },
    index=[4, 5, 6, 7],
)

df3 = pd.DataFrame(
    {
        "A": ["A8", "A9", "A10", "A11"],
        "B": ["B8", "B9", "B10", "B11"],
        "C": ["C8", "C9", "C10", "C11"],
        "D": ["D8", "D9", "D10", "D11"],
    },
    index=[8, 9, 10, 11],
)

# Stack the three frames vertically (axis=0 is the default).
frames = [df1, df2, df3]
result = pd.concat(frames)
```

Like its sibling function on ndarrays, numpy.concatenate, pandas.concat takes a list or dict of homogeneously-typed objects and concatenates them with some configurable handling of “what to do with the other axes”:
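The contrast with numpy.concatenate can be seen in a small sketch (assuming NumPy and a recent pandas; the arrays and frames are hypothetical illustrative data). NumPy joins purely by position, while pandas carries the index labels along and aligns the other axis by label, so columns listed in a different order still line up.

```python
import numpy as np
import pandas as pd

# numpy.concatenate joins arrays positionally; no labels are involved.
arr = np.concatenate([np.array([[1, 2]]), np.array([[3, 4]])], axis=0)

# Illustrative data (hypothetical): the second frame lists its columns in a
# different order. pd.concat aligns the columns by label, not by position.
left = pd.DataFrame({"A": [1], "B": [2]}, index=["r0"])
right = pd.DataFrame({"B": [4], "A": [3]}, index=["r1"])
frame = pd.concat([left, right], axis=0)

print(arr)
print(frame)
```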

```python
pd.concat(
    objs,
    axis=0,
    join="outer",
    ignore_index=False,
    keys=None,
    levels=None,
    names=None,
    verify_integrity=False,
    copy=True,
)
```

- objs: a sequence or mapping of Series or DataFrame objects. If a dict is passed, its keys will be used as the keys argument, unless keys is passed explicitly, in which case only the values for those keys will be selected (see below). Any None objects will be dropped silently unless they are all None, in which case a ValueError will be raised.
- axis: {0, 1, …}, default 0. The axis to concatenate along.
- join: {‘inner’, ‘outer’}, default ‘outer’. How to handle indexes on the other axis (or axes): outer for union and inner for intersection.
- ignore_index: boolean, default False. If True, do not use the index values on the concatenation axis. The resulting axis will be labeled 0, …, n - 1. This is useful if you are concatenating objects where the concatenation axis does not have meaningful indexing information. Note that the index values on the other axes are still respected in the join.
- keys: sequence, default None. Construct a hierarchical index using the passed keys as the outermost level. If multiple levels are passed, it should contain tuples.
- levels: list of sequences, default None. Specific levels (unique values) to use for constructing a MultiIndex. Otherwise they will be inferred from the keys.
- names: list, default None. Names for the levels in the resulting hierarchical index.
- verify_integrity: boolean, default False. Check whether the new concatenated axis contains duplicates. This can be very expensive relative to the actual data concatenation.
- copy: boolean, default True. If False, do not copy data unnecessarily.
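Two of the parameters above, verify_integrity and the silent dropping of None in objs, can be checked with a short sketch. It assumes a recent pandas release, and the two frames are hypothetical illustrative data whose index labels overlap on purpose.

```python
import pandas as pd

# Illustrative data (hypothetical): label 1 appears in both frames.
first = pd.DataFrame({"A": [1, 2]}, index=[0, 1])
second = pd.DataFrame({"A": [3, 4]}, index=[1, 2])

# By default, duplicate labels on the concatenation axis are simply kept.
kept = pd.concat([first, second])

# verify_integrity=True turns the overlap into a ValueError instead.
try:
    pd.concat([first, second], verify_integrity=True)
    raised = False
except ValueError as exc:
    raised = True
    print("ValueError:", exc)

# None entries in objs are dropped silently (as long as not all are None).
without_none = pd.concat([first, None, second])
```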

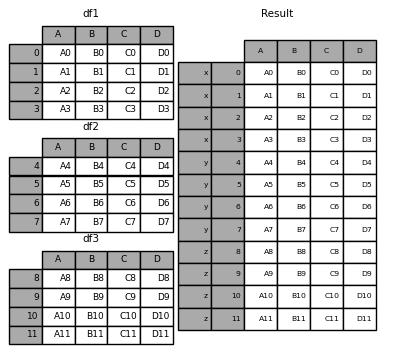

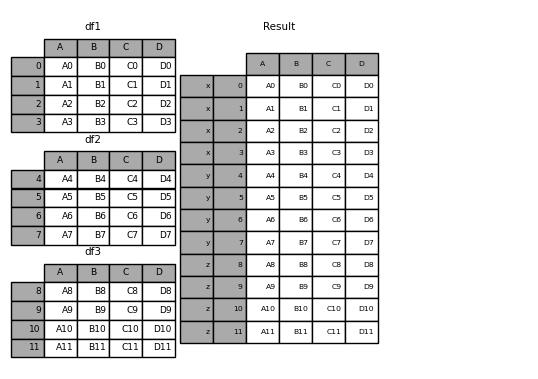

Without a little bit of context many of these arguments don’t make much sense. Let’s revisit the above example. Suppose we wanted to associate specific keys with each of the pieces of the chopped up DataFrame. We can do this using the keys argument:

```python
result = pd.concat(frames, keys=["x", "y", "z"])
```

As you can see (if you’ve read the rest of the documentation), the resulting object’s index has a hierarchical index. This means that we can now select out each chunk by key:

```python
result.loc["y"]
#     A   B   C   D
# 4  A4  B4  C4  D4
# 5  A5  B5  C5  D5
# 6  A6  B6  C6  D6
# 7  A7  B7  C7  D7
```
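The keys argument can be combined with names to label the levels of the resulting MultiIndex. This is a minimal sketch assuming a recent pandas release; the three small chunks and the level names "chunk" and "row" are hypothetical.

```python
import pandas as pd

# Illustrative data (hypothetical): three chunks with disjoint index labels.
chunks = [
    pd.DataFrame({"A": [0, 1]}, index=[0, 1]),
    pd.DataFrame({"A": [2, 3]}, index=[2, 3]),
    pd.DataFrame({"A": [4, 5]}, index=[4, 5]),
]

# keys adds an outer index level identifying the source chunk;
# names labels both levels of the resulting MultiIndex.
combined = pd.concat(chunks, keys=["x", "y", "z"], names=["chunk", "row"])

# Selecting an outer key returns that chunk with its original index.
print(combined.loc["y"])
```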

2 Set logic on the other axes

When gluing together multiple DataFrames, you have a choice of how to handle the other axes (other than the one being concatenated). This can be done in the following two ways:

- Take the union of them all, join='outer'. This is the default option as it results in zero information loss.
- Take the intersection, join='inner'.
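The two options can be compared on the column axis while stacking rows. This sketch assumes a recent pandas release, and the frames are hypothetical illustrative data sharing only column B.

```python
import pandas as pd

# Illustrative data (hypothetical): only column "B" is shared.
top = pd.DataFrame({"A": [1], "B": [2]})
bottom = pd.DataFrame({"B": [3], "C": [4]})

# join="outer" (default): keep the union of columns, filling gaps with NaN.
outer = pd.concat([top, bottom], join="outer")

# join="inner": keep only the columns present in every input.
inner = pd.concat([top, bottom], join="inner")

print(outer)
print(inner)
```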

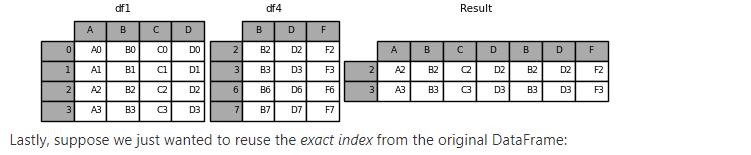

Here is an example of each of these methods. First, the default join='outer' behavior:

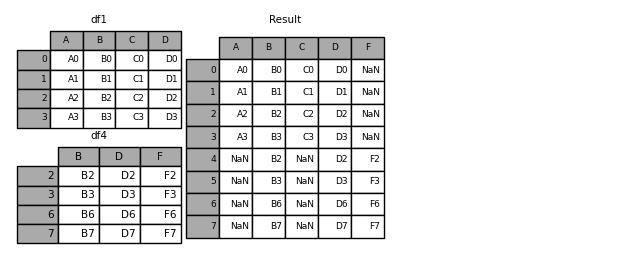

```python
df4 = pd.DataFrame(
    {
        "B": ["B2", "B3", "B6", "B7"],
        "D": ["D2", "D3", "D6", "D7"],
        "F": ["F2", "F3", "F6", "F7"],
    },
    index=[2, 3, 6, 7],
)

result = pd.concat([df1, df4], axis=1)
```

Here is the same thing with join='inner':

```python
result = pd.concat([df1, df4], axis=1, join="inner")
```

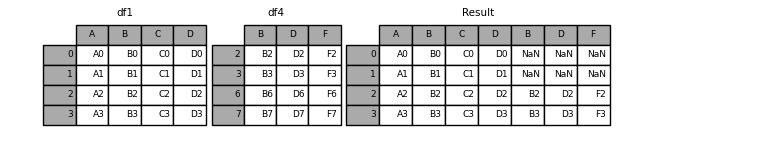

Lastly, suppose we just wanted to reuse the exact index from the original DataFrame:

```python
result = pd.concat([df1, df4], axis=1).reindex(df1.index)
```

Similarly, we could reindex before the concatenation:

```python
pd.concat([df1, df4.reindex(df1.index)], axis=1)
#     A   B   C   D    B    D    F
# 0  A0  B0  C0  D0  NaN  NaN  NaN
# 1  A1  B1  C1  D1  NaN  NaN  NaN
# 2  A2  B2  C2  D2   B2   D2   F2
# 3  A3  B3  C3  D3   B3   D3   F3
```
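The two ways of keeping only the first frame's index (reindexing after the outer concatenation, or reindexing the second frame before it) should give the same table. A small sketch checking this, assuming a recent pandas release and hypothetical illustrative frames:

```python
import pandas as pd

# Illustrative data (hypothetical): the indexes only partly overlap.
left = pd.DataFrame({"A": ["A0", "A1", "A2"]}, index=[0, 1, 2])
right = pd.DataFrame({"F": ["F1", "F2", "F5"]}, index=[1, 2, 5])

# Option 1: outer-join concatenation, then reindex to left's labels.
after = pd.concat([left, right], axis=1).reindex(left.index)

# Option 2: reindex the right-hand frame first, then concatenate.
before = pd.concat([left, right.reindex(left.index)], axis=1)

print(after)
```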

3 Concatenating using append

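The append() instance methods on Series and DataFrame are a useful shortcut to concat(). These methods actually predated concat. They concatenate along axis=0, namely the index. Note that Series.append() and DataFrame.append() were deprecated in pandas 1.4 and removed in pandas 2.0; on current versions, use pd.concat() instead: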

```python
result = df1.append(df2)  # pandas < 2.0 only; equivalent: pd.concat([df1, df2])
```

In the case of DataFrame, the indexes do not need to be disjoint (overlapping labels are kept as duplicates), and neither do the columns:

```python
# pandas < 2.0 only; equivalent: pd.concat([df1, df4], sort=False)
result = df1.append(df4, sort=False)
```

append may take multiple objects to concatenate:

```python
# pandas < 2.0 only; equivalent: pd.concat([df1, df2, df3])
result = df1.append([df2, df3])
```
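Since append() no longer exists in pandas 2.0 and later, the calls above can be rewritten with pd.concat by listing the original frame first. A minimal sketch with hypothetical illustrative frames:

```python
import pandas as pd

# Illustrative data (hypothetical).
df_a = pd.DataFrame({"A": [1, 2]}, index=[0, 1])
df_b = pd.DataFrame({"A": [3, 4]}, index=[2, 3])
df_c = pd.DataFrame({"A": [5, 6]}, index=[4, 5])

# Replaces df_a.append(df_b): put the original frame first in the list.
one = pd.concat([df_a, df_b])

# Replaces df_a.append([df_b, df_c]): the list simply grows.
many = pd.concat([df_a, df_b, df_c])
```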

4 Ignoring indexes on the concatenation axis

For DataFrame objects which don’t have a meaningful index, you may wish to append them and ignore the fact that they may have overlapping indexes. To do this, use the ignore_index argument:

```python
result = pd.concat([df1, df4], ignore_index=True, sort=False)
```

This is also a valid argument to DataFrame.append():

```python
# pandas < 2.0 only; equivalent: pd.concat([df1, df4], ignore_index=True, sort=False)
result = df1.append(df4, ignore_index=True, sort=False)
```
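The effect of ignore_index is easiest to see when both inputs reuse the same labels. A small sketch assuming a recent pandas release; the frames are hypothetical illustrative data.

```python
import pandas as pd

# Illustrative data (hypothetical): both frames use labels 10 and 11.
p = pd.DataFrame({"A": [1, 2]}, index=[10, 11])
q = pd.DataFrame({"A": [3, 4]}, index=[10, 11])

# Without ignore_index, the labels 10 and 11 each appear twice.
dup = pd.concat([p, q])

# With ignore_index=True, the result is relabeled 0 .. n-1.
fresh = pd.concat([p, q], ignore_index=True)
```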

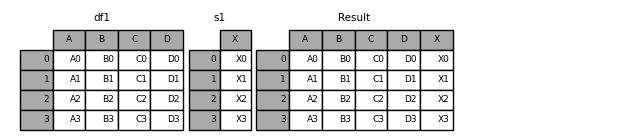

5 Concatenating with mixed ndims

You can concatenate a mix of Series and DataFrame objects. Each Series will be transformed into a DataFrame column named after the Series.

```python
s1 = pd.Series(["X0", "X1", "X2", "X3"], name="X")
result = pd.concat([df1, s1], axis=1)
```

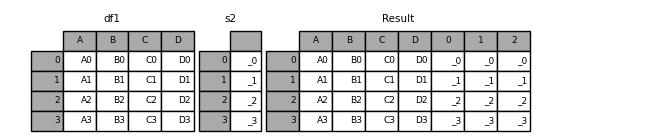

If unnamed Series are passed they will be numbered consecutively.

```python
s2 = pd.Series(["_0", "_1", "_2", "_3"])
result = pd.concat([df1, s2, s2, s2], axis=1)
```

Passing ignore_index=True will drop all name references.

```python
result = pd.concat([df1, s1], axis=1, ignore_index=True)
```
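The three naming rules above (a named Series keeps its name, unnamed Series are numbered, ignore_index drops all names) can be checked on the resulting column labels. A minimal sketch assuming a recent pandas release, with hypothetical illustrative data:

```python
import pandas as pd

# Illustrative data (hypothetical).
base = pd.DataFrame({"A": [1, 2]})
named = pd.Series([10, 20], name="X")
unnamed = pd.Series([30, 40])

# A named Series becomes a column labeled with its name.
with_named = pd.concat([base, named], axis=1)

# Unnamed Series are numbered consecutively: 0, 1, ...
with_unnamed = pd.concat([base, unnamed, unnamed], axis=1)

# ignore_index=True discards every column label and uses 0 .. n-1.
relabeled = pd.concat([base, named], axis=1, ignore_index=True)
```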

6 More concatenating with group keys

A fairly common use of the keys argument is to override the column names when creating a new DataFrame based on existing Series. Notice how, by default, the resulting DataFrame inherits the names of the parent Series, where these exist.

```python
s3 = pd.Series([0, 1, 2, 3], name="foo")
s4 = pd.Series([0, 1, 2, 3])
s5 = pd.Series([0, 1, 4, 5])

pd.concat([s3, s4, s5], axis=1)
#    foo  0  1
# 0    0  0  0
# 1    1  1  1
# 2    2  2  4
# 3    3  3  5
```

Through the keys argument we can override the existing column names.

```python
pd.concat([s3, s4, s5], axis=1, keys=["red", "blue", "yellow"])
#    red  blue  yellow
# 0    0     0       0
# 1    1     1       1
# 2    2     2       4
# 3    3     3       5
```

Let’s consider a variation of the very first example presented:

```python
result = pd.concat(frames, keys=["x", "y", "z"])
```

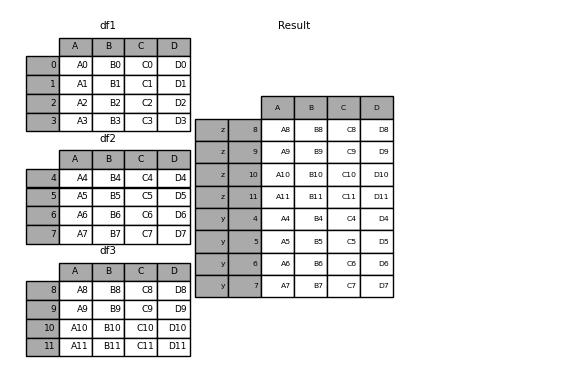

You can also pass a dict to concat, in which case the dict keys will be used for the keys argument (unless other keys are specified):

```python
pieces = {"x": df1, "y": df2, "z": df3}

result = pd.concat(pieces)

result = pd.concat(pieces, keys=["z", "y"])
```
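When keys is passed together with a dict, it acts as a selector: only the named entries are concatenated, in the order given. A small sketch assuming a recent pandas release; the dict contents are hypothetical illustrative data.

```python
import pandas as pd

# Illustrative data (hypothetical).
pieces = {
    "x": pd.DataFrame({"A": [1]}, index=[0]),
    "y": pd.DataFrame({"A": [2]}, index=[1]),
    "z": pd.DataFrame({"A": [3]}, index=[2]),
}

# The dict keys become the outer index level automatically.
everything = pd.concat(pieces)

# An explicit keys list selects only those entries, in that order.
subset = pd.concat(pieces, keys=["z", "y"])
```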

The MultiIndex created has levels that are constructed from the passed keys and the index of the DataFrame pieces:

```python
result.index.levels
# FrozenList([['z', 'y'], [4, 5, 6, 7, 8, 9, 10, 11]])
```

If you wish to specify other levels (as will occasionally be the case), you can do so using the levels argument:

```python
result = pd.concat(
    pieces, keys=["x", "y", "z"], levels=[["z", "y", "x", "w"]], names=["group_key"]
)
```

```python
result.index.levels
# FrozenList([['z', 'y', 'x', 'w'], [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]])
```

This is fairly esoteric, but it is actually necessary for implementing things like GroupBy where the order of a categorical variable is meaningful.
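A compact sketch of the levels argument, assuming a recent pandas release and hypothetical illustrative data: the outer level keeps exactly the values and order passed in levels, including a value ("w") that no piece uses, while names labels that outer level.

```python
import pandas as pd

# Illustrative data (hypothetical).
pieces = {
    "x": pd.DataFrame({"A": [1]}, index=[0]),
    "y": pd.DataFrame({"A": [2]}, index=[1]),
}

# levels fixes the full set and order of outer-level values;
# names=["group_key"] names only the outer level.
result = pd.concat(
    pieces,
    keys=["x", "y"],
    levels=[["y", "x", "w"]],
    names=["group_key"],
)
```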

7 Appending rows to a DataFrame

While not especially efficient (since a new object must be created), you can append a single row to a DataFrame by passing a Series or dict to append, which returns a new DataFrame as above.

```python
s2 = pd.Series(["X0", "X1", "X2", "X3"], index=["A", "B", "C", "D"])
result = df1.append(s2, ignore_index=True)  # pandas < 2.0 only
```

You should use ignore_index with this method to instruct DataFrame to discard its index. If you wish to preserve the index, you should construct an appropriately-indexed DataFrame and append or concatenate those objects.
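On pandas 2.0 and later, where append() is gone, one way to add a single row held in a Series is to turn it into a one-row DataFrame and concatenate. This is a sketch under that assumption, not the only approach; the frame and row are hypothetical illustrative data.

```python
import pandas as pd

# Illustrative data (hypothetical).
frame = pd.DataFrame({"A": ["A0"], "B": ["B0"]})
row = pd.Series(["X0", "X1"], index=["A", "B"])

# to_frame().T turns the Series into a one-row DataFrame whose columns are
# the Series index; ignore_index=True then discards the row labels.
extended = pd.concat([frame, row.to_frame().T], ignore_index=True)
```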

You can also pass a list of dicts or Series:

```python
dicts = [{"A": 1, "B": 2, "C": 3, "X": 4}, {"A": 5, "B": 6, "C": 7, "Y": 8}]

result = df1.append(dicts, ignore_index=True, sort=False)  # pandas < 2.0 only
```
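A possible pandas 2.x equivalent for a list of dicts is to build a DataFrame from the dicts first and then concatenate. A minimal sketch with a hypothetical base frame; keys missing from a row become NaN, as with the original append call.

```python
import pandas as pd

# Illustrative data (hypothetical base frame).
frame = pd.DataFrame({"A": [0], "B": [0], "C": [0]})
dicts = [{"A": 1, "B": 2, "C": 3, "X": 4}, {"A": 5, "B": 6, "C": 7, "Y": 8}]

# Build a DataFrame from the dicts, then concatenate; sort=False keeps
# the new columns X and Y after the existing ones instead of sorting.
extended = pd.concat([frame, pd.DataFrame(dicts)], ignore_index=True, sort=False)
```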
