Prometheus: Adding etcd Cluster Monitoring to Prometheus Operator

Posted by Devops代哲


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and covers how to add etcd cluster monitoring to Prometheus Operator.

1. Environment

- Kubernetes 1.15, installed with kubeadm
- etcd also runs inside the cluster as Pods (kubeadm static Pods) and exposes a built-in metrics endpoint
- Prometheus Operator

2. Monitoring the etcd cluster

2.1 Check the metrics endpoint

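Run these on a control-plane node:

```shell
# Over HTTPS, presenting a client certificate (etcd runs with --client-cert-auth=true)
curl --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://127.0.0.1:2379/metrics -k

# Over plain HTTP; this only works if etcd also listens on an http:// client URL
curl -L http://localhost:2379/metrics
```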
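The /metrics response uses the Prometheus text exposition format: comment lines start with `#`, and each sample is a metric name, an optional `{label set}` and a value. For a quick check before involving Prometheus, the hypothetical helper `etcd_metric` below prints the value(s) of one metric read from stdin. It is a sketch that assumes label values contain no spaces. `etcd_server_has_leader` (1 when the member currently sees a leader) is one of the metrics etcd 3.x exposes.

```shell
# Hypothetical helper: print the value(s) of one metric from Prometheus
# text-format input on stdin. "# HELP" / "# TYPE" lines are skipped, and a
# labelled sample such as name{a="b"} is matched on the part before "{".
# Assumes label values contain no spaces (otherwise $2 is not the value).
etcd_metric() {
  local name="$1"
  awk -v want="$name" '
    /^#/ { next }
    {
      metric = $1
      sub(/[{].*/, "", metric)   # strip the label set, if any
      if (metric == want) print $2
    }'
}

# Usage on a control-plane node:
# curl -s --cert /etc/kubernetes/pki/etcd/server.crt \
#      --key /etc/kubernetes/pki/etcd/server.key \
#      -k https://127.0.0.1:2379/metrics | etcd_metric etcd_server_has_leader
```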

2.2 Inspect the etcd Pod to find the certificates it uses

```
$ kubectl describe pods -n kube-system etcd-wt-rd-k8s-control-plane-01-beijing
Name:                 etcd-wt-rd-k8s-control-plane-01-beijing
Namespace:            kube-system
Priority:             2000000000
Priority Class Name:  system-cluster-critical
Node:                 wt-rd-k8s-control-plane-01-beijing/10.2.3.141
Start Time:           Thu, 27 May 2021 07:19:25 +0000
Labels:               component=etcd
                      tier=control-plane
Annotations:          kubernetes.io/config.hash: 2c510faa262b7e6cc922f5c10917a5a4
                      kubernetes.io/config.mirror: 2c510faa262b7e6cc922f5c10917a5a4
                      kubernetes.io/config.seen: 2019-09-03T07:15:31.882345426Z
                      kubernetes.io/config.source: file
Status:               Running
IP:                   10.2.3.141
Containers:
  etcd:
    Container ID:  docker://7c0fece5de2b5ea89b5b648bebf2f076320d379500ee2f677dd0619963449bc5
    Image:         k8s.gcr.io/etcd:3.3.10
    Image ID:      docker://sha256:2c4adeb21b4ff8ed3309d0e42b6b4ae39872399f7b37e0856e673b13c4aba13d
    Port:          <none>
    Host Port:     <none>
    Command:
      etcd
      --advertise-client-urls=https://10.2.3.141:2379
      --cert-file=/etc/kubernetes/pki/etcd/server.crt
      --client-cert-auth=true
      --data-dir=/var/lib/etcd
      --initial-advertise-peer-urls=https://10.2.3.141:2380
      --initial-cluster=wt-rd-k8s-control-plane-01-beijing=https://10.2.3.141:2380
      --key-file=/etc/kubernetes/pki/etcd/server.key
      --listen-client-urls=https://127.0.0.1:2379,https://10.2.3.141:2379
      --listen-peer-urls=https://10.2.3.141:2380
      --name=wt-rd-k8s-control-plane-01-beijing
      --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
      --peer-client-cert-auth=true
      --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
      --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
      --snapshot-count=10000
      --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
```
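Rather than reading the whole describe output, the TLS flags can be filtered out with a single grep. The function name `etcd_tls_flags` is hypothetical; the sketch reads text on stdin, so it should work on kubectl output as well as on the kubeadm static-pod manifest `/etc/kubernetes/manifests/etcd.yaml`.

```shell
# Hypothetical helper: print the certificate, key and CA flags (server and
# peer variants) found anywhere in the text on stdin, one per line.
etcd_tls_flags() {
  grep -oE -- '--(peer-)?(cert-file|key-file|trusted-ca-file)=[^[:space:]]+'
}

# Usage:
# kubectl -n kube-system get pod etcd-wt-rd-k8s-control-plane-01-beijing -o yaml | etcd_tls_flags
```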

2.3 Store the three certificate files in a Kubernetes Secret with kubectl

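- The output shows that all of etcd's certificates live under /etc/kubernetes/pki/etcd/ on the node. Because etcd runs with --client-cert-auth=true and --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt, Prometheus must present a client certificate signed by the etcd CA; the healthcheck-client certificate that kubeadm generates is one. Store the needed files in a Secret in the monitoring namespace, where Prometheus runs: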

```shell
kubectl -n monitoring create secret generic etcd-certs \
  --from-file=/etc/kubernetes/pki/etcd/healthcheck-client.crt \
  --from-file=/etc/kubernetes/pki/etcd/healthcheck-client.key \
  --from-file=/etc/kubernetes/pki/etcd/ca.crt
```
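Because `--from-file` reads the files from local disk, the command has to run on a node that has them (a control-plane node). A minimal pre-flight check, sketched here with a hypothetical `check_cert_files` function, avoids creating a Secret with missing keys:

```shell
# Hypothetical helper: verify that every file argument exists and is
# non-empty. Missing files are reported on stderr; returns 0 only if all are present.
check_cert_files() {
  local missing=0 f
  for f in "$@"; do
    if [ ! -s "$f" ]; then
      echo "missing or empty: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on a control-plane node:
# check_cert_files /etc/kubernetes/pki/etcd/healthcheck-client.crt \
#                  /etc/kubernetes/pki/etcd/healthcheck-client.key \
#                  /etc/kubernetes/pki/etcd/ca.crt \
#   && kubectl -n monitoring create secret generic etcd-certs \
#        --from-file=/etc/kubernetes/pki/etcd/healthcheck-client.crt \
#        --from-file=/etc/kubernetes/pki/etcd/healthcheck-client.key \
#        --from-file=/etc/kubernetes/pki/etcd/ca.crt
```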

2.4 Reference the etcd-certs Secret in the Prometheus resource

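Edit the Prometheus custom resource:

```shell
kubectl edit prometheus k8s -n monitoring
```

Add a secrets field under spec, next to the existing fields:

```yaml
spec:
  nodeSelector:
    kubernetes.io/os: linux
  podMonitorSelector: {}
  replicas: 2
  # Add the following two lines
  secrets:
  - etcd-certs
```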

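Once the resource is updated, the Operator mounts the Secret into every Prometheus Pod under /etc/prometheus/secrets/etcd-certs/, so the certificate files created earlier are available there. First list the Pods: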

```
$ kubectl get po -n monitoring
NAME               READY   STATUS    RESTARTS   AGE
...
prometheus-k8s-0   3/3     Running   1          2m20s
prometheus-k8s-1   3/3     Running   1          3m19s
...
```

Then open a shell in each Prometheus Pod and confirm where the certificates are mounted:

```
$ kubectl exec -it prometheus-k8s-0 -n monitoring -- /bin/sh
Defaulting container name to prometheus.
Use 'kubectl describe pod/prometheus-k8s-0 -n monitoring' to see all of the containers in this pod.
/prometheus $ ls /etc/prometheus/secrets/etcd-certs/
ca.crt  healthcheck-client.crt  healthcheck-client.key
```
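`kubectl edit` is interactive. A scriptable alternative, sketched below, is a JSON merge patch that sets spec.secrets. Note that a merge patch replaces the whole list, so any Secrets already listed in the resource must be passed as well. `secrets_patch` is a hypothetical helper that only builds the patch string.

```shell
# Hypothetical helper: build a JSON merge patch that sets spec.secrets to
# exactly the Secret names given as arguments (the list is replaced, not appended).
secrets_patch() {
  local out="" s
  for s in "$@"; do
    out="${out:+$out,}\"$s\""   # join quoted names with commas
  done
  printf '{"spec":{"secrets":[%s]}}\n' "$out"
}

# Usage:
# kubectl -n monitoring patch prometheus k8s --type merge -p "$(secrets_patch etcd-certs)"
```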

2.5 Create a ServiceMonitor

Create prometheus-serviceMonitorEtcd.yaml:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: etcd-k8s
  namespace: monitoring
  labels:
    k8s-app: etcd-k8s
spec:
  jobLabel: k8s-app              # the job label takes the value of the Service's k8s-app label
  endpoints:
  - port: port                   # name of the Service port to scrape
    interval: 15s
    scheme: https
    tlsConfig:
      caFile: /etc/prometheus/secrets/etcd-certs/ca.crt
      certFile: /etc/prometheus/secrets/etcd-certs/healthcheck-client.crt
      keyFile: /etc/prometheus/secrets/etcd-certs/healthcheck-client.key
      insecureSkipVerify: true   # skip verification of etcd's server certificate
  selector:
    matchLabels:
      k8s-app: etcd              # selects Services carrying this label
  namespaceSelector:
    matchNames:
    - kube-system
```

```
$ kubectl apply -f prometheus-serviceMonitorEtcd.yaml
servicemonitor.monitoring.coreos.com/etcd-k8s created
```

2.6 Create the Service

- With the ServiceMonitor in place, create the Service it selects (label k8s-app: etcd in the kube-system namespace). prometheus-etcdService.yaml contains:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    k8s-app: etcd
spec:
  type: ClusterIP
  clusterIP: None   # None: headless Service, no Service IP is allocated
  ports:
  - name: port
    port: 2379
---
apiVersion: v1
kind: Endpoints
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    k8s-app: etcd
subsets:
- addresses:
  - ip: 10.2.3.141   # etcd node addresses; for a cluster, add one entry per member
  - ip: 10.2.3.179
  - ip: 10.2.4.121
  ports:
  - name: port
    port: 2379       # etcd client port
    protocol: TCP
```

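The Service has no selector, so Kubernetes does not populate its Endpoints; the Endpoints object is therefore defined by hand. Its metadata (name, namespace, labels) must match the Service, its port name must match the Service port name, and the Service's clusterIP is set to None. The same approach also works for an etcd cluster running outside Kubernetes.

List the etcd addresses under subsets; for a multi-member cluster, add one entry per member under addresses.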
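For a larger cluster the address list can be generated instead of typed. The hypothetical `etcd_endpoints_yaml` function below prints the same Endpoints object as above for any number of member IPs. The name, namespace, label and port name are the values used in this article and must keep matching the Service.

```shell
# Hypothetical helper: print an Endpoints manifest for the etcd-k8s Service,
# with one "- ip:" entry per argument.
etcd_endpoints_yaml() {
  cat <<'EOF'
apiVersion: v1
kind: Endpoints
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    k8s-app: etcd
subsets:
- addresses:
EOF
  local ip
  for ip in "$@"; do
    printf '  - ip: %s\n' "$ip"
  done
  cat <<'EOF'
  ports:
  - name: port
    port: 2379
    protocol: TCP
EOF
}

# Usage:
# etcd_endpoints_yaml 10.2.3.141 10.2.3.179 10.2.4.121 | kubectl apply -f -
```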

```
$ kubectl apply -f prometheus-etcdService.yaml
service/etcd-k8s created
endpoints/etcd-k8s created
```

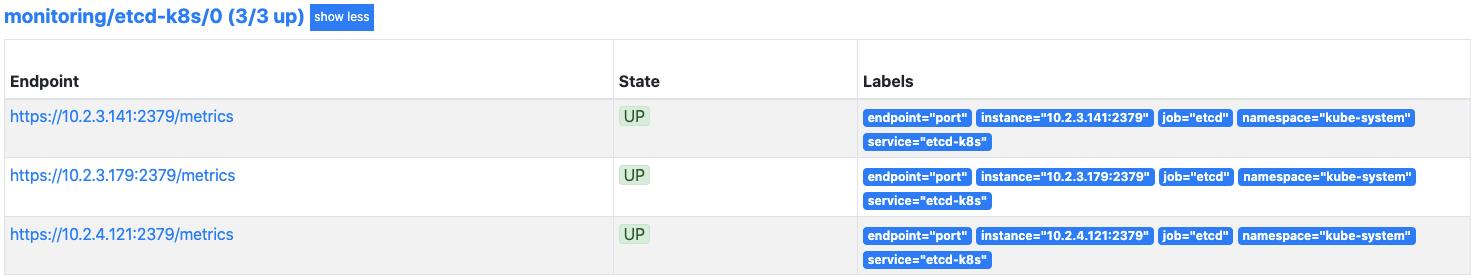
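Besides the targets page, the scrape status can be checked through the Prometheus HTTP API. Because jobLabel is k8s-app and the Service carries k8s-app: etcd, the series should appear under job="etcd". The hypothetical `count_up` helper counts how many returned series have the value 1. It assumes Prometheus's compact JSON output and is only a quick check, not a JSON parser; the Service name prometheus-k8s in the usage line is the kube-prometheus default and may differ in your setup.

```shell
# Hypothetical helper: count series whose value is "1" in a compact
# Prometheus /api/v1/query JSON response (instant vector) read from stdin.
# For up{job="etcd"} this is the number of etcd members scraped successfully.
count_up() {
  grep -o ',"1"\]' | wc -l | tr -d ' '
}

# Usage (assumes the kube-prometheus Service name prometheus-k8s):
# kubectl -n monitoring port-forward svc/prometheus-k8s 9090 &
# curl -sG http://localhost:9090/api/v1/query --data-urlencode 'query=up{job="etcd"}' | count_up
```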

3. Check the Prometheus rules and import an etcd dashboard into Grafana

3.1 Check the Prometheus rules

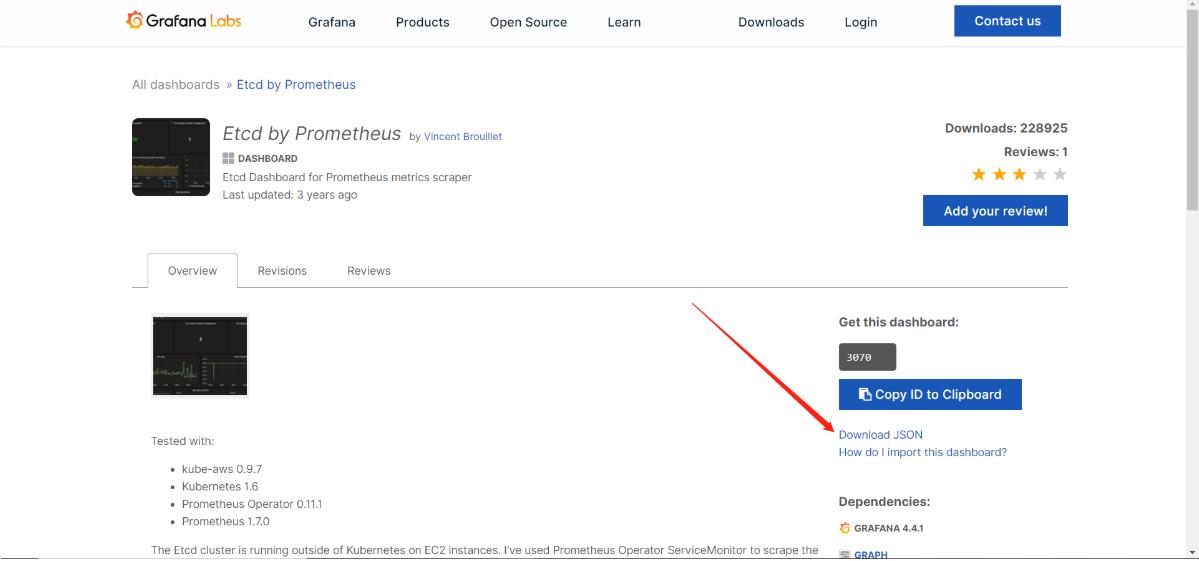
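To see which etcd alerting rules are loaded, the PrometheusRule objects can be searched for alert names starting with etcd. Whether such rules exist depends on the kube-prometheus version in use. `etcd_alerts` is a hypothetical sed-based filter that assumes the usual `- alert: name` YAML layout.

```shell
# Hypothetical helper: print alert names beginning with "etcd" from
# PrometheusRule YAML on stdin (lines of the form "- alert: etcdSomething").
etcd_alerts() {
  sed -n 's/^[[:space:]]*-\{0,1\}[[:space:]]*alert:[[:space:]]*\(etcd[A-Za-z0-9_]*\).*/\1/p'
}

# Usage:
# kubectl -n monitoring get prometheusrules -o yaml | etcd_alerts
```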

3.2 Import an etcd dashboard into Grafana

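- Open the dashboard page on grafana.com and click Download JSON, then import the file in Grafana (Dashboards > Import)
- Dashboard on grafana.com: https://grafana.com/grafana/dashboards/3070
- Chinese-language etcd cluster dashboard: https://grafana.com/grafana/dashboards/9733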
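The UI import is the simplest route. For scripted setups, one approach is to wrap the downloaded JSON in the body accepted by Grafana's POST /api/dashboards/db endpoint. Dashboards shared on grafana.com usually reference their datasource through a placeholder such as `${DS_PROMETHEUS}`; the actual placeholder name (see `__inputs` in the file), the datasource name `prometheus`, the file name `etcd-3070.json`, the Grafana URL and the admin credentials below are all assumptions to check against your own setup. The sketch also assumes the file's `"id"` is null so that Grafana creates a new dashboard.

```shell
# Hypothetical helper: wrap a dashboard JSON file in the {"dashboard": ...,
# "overwrite": true} body used by POST /api/dashboards/db, replacing every
# ${PLACEHOLDER} occurrence with the given datasource name.
grafana_dashboard_payload() {
  local file="$1" placeholder="$2" datasource="$3"
  printf '{"dashboard":'
  sed "s/\${$placeholder}/$datasource/g" "$file"
  printf ',"overwrite":true}\n'
}

# Usage (all names and credentials are assumptions):
# grafana_dashboard_payload etcd-3070.json DS_PROMETHEUS prometheus |
#   curl -s -u admin:admin -H 'Content-Type: application/json' \
#        -X POST --data-binary @- http://localhost:3000/api/dashboards/db
```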
