WebRTC Learning, Part 9: Capturing and Displaying Camera Video

Posted by 草上爬



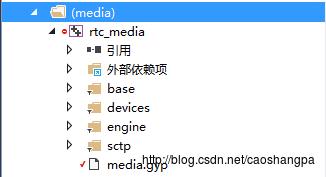

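In the figure above, the base directory holds abstract classes and the engine directory holds their implementations; in practice you call the interfaces in the engine directory directly. WebRtcVoiceEngine is essentially a further wrapper around VoiceEngine and uses VoiceEngine for the actual audio processing. Note the naming: WebRtcVideoEngine2 carries a "2" suffix, which marks it as an upgraded version of the video engine; the older WebRtcVideoEngine class still exists alongside it. The improvement in WebRtcVideoEngine2 is that it splits the video stream in two, a send stream (WebRtcVideoSendStream) and a receive stream (WebRtcVideoReceiveStream), which gives a more sensible structure and clearer source code.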
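To make the base/engine split concrete, here is a minimal stand-alone C++ sketch of the pattern. The names CapturerInterface, EngineCapturer and MakeCapturer are hypothetical and do not exist in WebRTC; the sketch only shows an abstract interface (the "base" role) implemented by a concrete class (the "engine" role), with callers holding the interface.

```cpp
#include <cassert>
#include <memory>

// Hypothetical "base" layer: an abstract interface, similar in spirit to the
// abstract classes in WebRTC's media/base directory (names are illustrative).
class CapturerInterface {
 public:
  virtual ~CapturerInterface() = default;
  virtual bool Start() = 0;
  virtual void Stop() = 0;
  virtual bool IsRunning() const = 0;
};

// Hypothetical "engine" layer: a concrete implementation of that interface,
// playing the role the media/engine classes play.
class EngineCapturer : public CapturerInterface {
 public:
  bool Start() override {
    running_ = true;
    return true;
  }
  void Stop() override { running_ = false; }
  bool IsRunning() const override { return running_; }

 private:
  bool running_ = false;
};

// Application code constructs the engine class but only talks to the base
// interface, so the implementation can be swapped without touching callers.
std::unique_ptr<CapturerInterface> MakeCapturer() {
  return std::unique_ptr<CapturerInterface>(new EngineCapturer());
}
```

The caller never names EngineCapturer after construction, which is the same reason application code can rely on the base-class API while WebRTC swaps implementations underneath.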

The implementation in this article relies mainly on the WebRtcVideoCapturer class, which lives in the engine directory alongside WebRtcVideoEngine2.

I. Environment

Same as in the earlier post WebRTC学习之三:录音和播放 (Part 3: Audio Recording and Playback).

II. Implementation

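Open WebRtcVideoCapturer's header, webrtcvideocapturer.h. Its public functions are mostly implementations of the virtual functions of the VideoCapturer class in the base directory and are used to initialize the device and start capturing. The private functions OnIncomingCapturedFrame and OnCaptureDelayChanged are callbacks invoked by the camera capture module, VideoCaptureModule: each captured image is passed to OnIncomingCapturedFrame, and changes in capture delay are passed to OnCaptureDelayChanged.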
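The callback relationship can be sketched in plain C++. Everything below (Frame, CaptureCallback, CaptureModule, Capturer) is hypothetical and only mirrors the shape described above: a capture module that knows nothing about its consumer except a registered callback interface, and a capturer that implements that interface privately. It is not WebRTC's actual interface or signatures.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical frame type standing in for a captured image.
struct Frame {
  int width = 0;
  int height = 0;
  std::vector<uint8_t> pixels;
};

// Hypothetical callback interface that the capture module calls into.
class CaptureCallback {
 public:
  virtual ~CaptureCallback() = default;
  virtual void OnIncomingCapturedFrame(const Frame& frame) = 0;
  virtual void OnCaptureDelayChanged(int delay_ms) = 0;
};

// Hypothetical capture module: it only invokes whatever callback was registered.
class CaptureModule {
 public:
  void RegisterCallback(CaptureCallback* cb) { callback_ = cb; }
  // Simulates the driver delivering one frame.
  void DeliverFrame(const Frame& frame) {
    if (callback_) callback_->OnIncomingCapturedFrame(frame);
  }
  // Simulates the driver reporting a new capture delay.
  void ReportDelay(int delay_ms) {
    if (callback_) callback_->OnCaptureDelayChanged(delay_ms);
  }

 private:
  CaptureCallback* callback_ = nullptr;
};

// Hypothetical capturer: the callbacks are private, so only the module
// (through the base-class pointer) can call them.
class Capturer : private CaptureCallback {
 public:
  explicit Capturer(CaptureModule* module) { module->RegisterCallback(this); }
  int frames_received() const { return frames_received_; }
  int last_delay_ms() const { return last_delay_ms_; }

 private:
  void OnIncomingCapturedFrame(const Frame&) override { ++frames_received_; }
  void OnCaptureDelayChanged(int delay_ms) override { last_delay_ms_ = delay_ms; }
  int frames_received_ = 0;
  int last_delay_ms_ = 0;
};
```

Keeping the overrides private means application code cannot call them by accident; the module reaches them only through the CaptureCallback pointer it was given.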

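WebRTC also implements a signal-and-slot mechanism similar to Qt's; see WebRTC学习之七:精炼的信号和槽机制 (Part 7: A Compact Signal-and-Slot Mechanism). As noted in that post, the function name emit in sigslot.h clashes with Qt's emit macro, so I renamed emit to Emit in sigslot.h. After that change, the rtc_base project has to be rebuilt.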
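Below is a minimal two-argument signal in standard C++, meant only to illustrate the idea; Signal2, Receiver and disconnect_all are hypothetical names, and this is not sigslot's implementation. The dispatch method is called Emit for the reason given above: Qt defines emit as an empty macro, so a member declared as emit(...) would be mangled by the preprocessor in any file that also includes Qt headers.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Minimal two-argument signal, loosely modeled on the idea behind
// sigslot::signal2 (NOT sigslot's code). Named Emit, not emit, so it
// survives Qt's empty `emit` macro.
template <typename A1, typename A2>
class Signal2 {
 public:
  // Connects a member function of obj as a slot.
  template <typename Obj>
  void connect(Obj* obj, void (Obj::*method)(A1, A2)) {
    slots_.push_back([obj, method](A1 a1, A2 a2) { (obj->*method)(a1, a2); });
  }
  // Calls every connected slot in connection order.
  void Emit(A1 a1, A2 a2) {
    for (auto& slot : slots_) slot(a1, a2);
  }
  // Removes all slots (hypothetical helper; sigslot disconnects per object).
  void disconnect_all() { slots_.clear(); }

 private:
  std::vector<std::function<void(A1, A2)>> slots_;
};

// A receiver with a member-function slot.
struct Receiver {
  int sum = 0;
  void OnValues(int a, int b) { sum += a + b; }
};
```

Usage: connect once with sig.connect(&receiver, &Receiver::OnValues), then every sig.Emit(a, b) calls the slot. This sketch does no lifetime tracking; sigslot's has_slots<> base exists precisely so that a destroyed receiver disconnects itself.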

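VideoCapturer has two signals: `sigslot::signal2<VideoCapturer*, CaptureState> SignalStateChange` and `sigslot::signal2<VideoCapturer*, const CapturedFrame*, sigslot::multi_threaded_local> SignalFrameCaptured`. The parameters of SignalFrameCaptured show that we only need to implement a matching slot to receive each CapturedFrame, then convert and display the frame inside that slot. The CaptureState parameter of SignalStateChange is an enum that identifies the capture state (stopped, starting, running, failed).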
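The slot for SignalStateChange is left empty in the code below; one simple thing it could do is turn the state into a readable status. The sketch uses a local copy of the enum whose enumerator names follow cricket::CaptureState; it is an assumption for illustration, so check the definition in your WebRTC revision. DescribeCaptureState is a hypothetical helper.

```cpp
#include <cassert>
#include <string>

// Local mirror of the four capture states (stopped, starting, running,
// failed). Names follow cricket::CaptureState, but this is a stand-alone
// copy for illustration only.
enum CaptureState { CS_STOPPED, CS_STARTING, CS_RUNNING, CS_FAILED };

// What a SignalStateChange slot might do with its CaptureState argument:
// map it to a human-readable status string.
std::string DescribeCaptureState(CaptureState state) {
  switch (state) {
    case CS_STOPPED:  return "stopped";
    case CS_STARTING: return "starting";
    case CS_RUNNING:  return "running";
    case CS_FAILED:   return "failed";
  }
  return "unknown";  // not reachable for valid enum values
}
```

Inside a real OnStateChange, the string could be logged with qDebug() or shown in a status bar.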

SignalFrameCaptured is emitted from the callback OnIncomingCapturedFrame, which uses WebRTC's asynchronous function execution; see WebRTC学习之八:函数的异步执行 (Part 8: Asynchronous Function Execution).
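The core idea of asynchronous execution here is that a callback arriving on a capture thread is not handled there; a closure is posted to a queue owned by another thread, and runs only when that thread processes its queue. The sketch below shows this with a hypothetical TaskQueue built on std::mutex and std::queue. It is a simplified stand-in for the concept, not rtc::Thread or WebRTC's async invoker.

```cpp
#include <cassert>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Minimal task queue owned by one thread. Other threads Post() closures;
// the owning thread runs them when it calls ProcessPending().
class TaskQueue {
 public:
  // Safe to call from any thread.
  void Post(std::function<void()> task) {
    std::lock_guard<std::mutex> lock(mutex_);
    tasks_.push(std::move(task));
  }
  // Runs every task queued so far on the calling thread; returns how many ran.
  int ProcessPending() {
    std::queue<std::function<void()>> batch;
    {
      std::lock_guard<std::mutex> lock(mutex_);
      std::swap(batch, tasks_);  // take the whole batch, then run it unlocked
    }
    int count = 0;
    while (!batch.empty()) {
      batch.front()();
      batch.pop();
      ++count;
    }
    return count;
  }

 private:
  std::mutex mutex_;
  std::queue<std::function<void()>> tasks_;
};
```

The consequence for this article is that posted work does nothing until the owning thread drains its queue, which is presumably why main() later in the article calls rtc::Thread::Current()->ProcessMessages(0) in its loop: without it, captured frames would never reach the slot.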

mainwindow.h

```cpp
#ifndef MAINWINDOW_H
#define MAINWINDOW_H

#include <QMainWindow>
#include <QDebug>
#include <QStringList>

#include <cstdint>
#include <memory>
#include <string>

#include "webrtc/base/sigslot.h"
#include "webrtc/modules/video_capture/video_capture.h"
#include "webrtc/modules/video_capture/video_capture_factory.h"
#include "webrtc/media/base/videocapturer.h"
#include "webrtc/media/engine/webrtcvideocapturer.h"
#include "webrtc/media/engine/webrtcvideoframe.h"

namespace Ui {
class MainWindow;
}

// Also derives from sigslot::has_slots<> so that WebRTC signals can be
// connected to member functions of this Qt window.
class MainWindow : public QMainWindow, public sigslot::has_slots<>
{
    Q_OBJECT

public:
    explicit MainWindow(QWidget *parent = 0);
    ~MainWindow();

    // Slot for VideoCapturer::SignalFrameCaptured.
    void OnFrameCaptured(cricket::VideoCapturer* capturer, const cricket::CapturedFrame* frame);
    // Slot for VideoCapturer::SignalStateChange.
    void OnStateChange(cricket::VideoCapturer* capturer, cricket::CaptureState state);

private slots:
    void on_pushButtonOpen_clicked();

private:
    void getDeviceList();

private:
    Ui::MainWindow *ui;
    cricket::WebRtcVideoCapturer *videoCapturer;
    cricket::WebRtcVideoFrame *videoFrame;
    std::unique_ptr<uint8_t[]> videoImage;  // ARGB conversion buffer
    QStringList deviceNameList;
    QStringList deviceIDList;
};

#endif // MAINWINDOW_H
```

mainwindow.cpp

```cpp
#include "mainwindow.h"
#include "ui_mainwindow.h"

#include <QImage>
#include <QPixmap>

#include <vector>

MainWindow::MainWindow(QWidget *parent) :
    QMainWindow(parent),
    ui(new Ui::MainWindow),
    videoCapturer(new cricket::WebRtcVideoCapturer()),
    videoFrame(new cricket::WebRtcVideoFrame())
{
    ui->setupUi(this);
    getDeviceList();
}

MainWindow::~MainWindow()
{
    // Disconnect and stop first, so that no frame reaches OnFrameCaptured
    // (which uses ui->label) after ui has been deleted.
    videoCapturer->SignalFrameCaptured.disconnect(this);
    videoCapturer->SignalStateChange.disconnect(this);
    videoCapturer->Stop();

    delete videoCapturer;
    delete videoFrame;
    delete ui;
}

void MainWindow::OnFrameCaptured(cricket::VideoCapturer* capturer, const cricket::CapturedFrame* frame)
{
    Q_UNUSED(capturer);
    if (!videoImage)
        return;  // conversion buffer not allocated yet

    videoFrame->Init(frame, frame->width, frame->height, true);

    // Convert the frame to 32-bit ARGB (4 bytes per pixel).
    const int width = videoFrame->width();
    const int height = videoFrame->height();
    videoFrame->ConvertToRgbBuffer(cricket::FOURCC_ARGB,
                                   videoImage.get(),
                                   width * height * 32 / 8,  // buffer size in bytes
                                   width * 32 / 8);          // stride (bytes per row)

    // QImage wraps videoImage without copying; QPixmap::fromImage makes the copy the label keeps.
    QImage image(videoImage.get(), width, height, QImage::Format_RGB32);
    ui->label->setPixmap(QPixmap::fromImage(image));
}

void MainWindow::OnStateChange(cricket::VideoCapturer* capturer, cricket::CaptureState state)
{
    Q_UNUSED(capturer);
    Q_UNUSED(state);
}

void MainWindow::getDeviceList()
{
    deviceNameList.clear();
    deviceIDList.clear();

    // The caller owns the DeviceInfo returned by CreateDeviceInfo, so hold it in a unique_ptr.
    std::unique_ptr<webrtc::VideoCaptureModule::DeviceInfo> info(
        webrtc::VideoCaptureFactory::CreateDeviceInfo(0));
    if (info)
    {
        const int deviceNum = info->NumberOfDevices();
        for (int i = 0; i < deviceNum; ++i)
        {
            const uint32_t kSize = 256;
            char name[kSize] = {0};
            char id[kSize] = {0};
            if (info->GetDeviceName(i, name, kSize, id, kSize) != -1)
            {
                deviceNameList.append(QString(name));
                deviceIDList.append(QString(id));
                ui->comboBoxDeviceList->addItem(QString(name));
            }
        }
    }

    // Nothing to open if no camera could be listed.
    if (deviceIDList.isEmpty())
    {
        ui->pushButtonOpen->setEnabled(false);
    }
}

void MainWindow::on_pushButtonOpen_clicked()
{
    static bool flag = true;  // true: this click opens the camera; false: it closes it
    if (flag)
    {
        const int index = ui->comboBoxDeviceList->currentIndex();
        if (index < 0 || index >= deviceIDList.size())
            return;

        const std::string kDeviceName = ui->comboBoxDeviceList->currentText().toStdString();
        const std::string kDeviceId = deviceIDList.at(index).toStdString();
        videoCapturer->Init(cricket::Device(kDeviceName, kDeviceId));

        // Use the first format the device reports.
        const std::vector<cricket::VideoFormat>* formats = videoCapturer->GetSupportedFormats();
        if (!formats || formats->empty())
        {
            qDebug() << "No supported capture format";
            return;
        }
        const cricket::VideoFormat format(formats->at(0));

        // Allocate the ARGB buffer (4 bytes per pixel) before any frame can arrive.
        videoImage.reset(new uint8_t[format.width * format.height * 32 / 8]);

        // Connect WebRTC's signals to our slots before capture starts.
        videoCapturer->SignalFrameCaptured.connect(this, &MainWindow::OnFrameCaptured);
        videoCapturer->SignalStateChange.connect(this, &MainWindow::OnStateChange);

        // Start capturing
        if (cricket::CS_STARTING == videoCapturer->Start(format))
        {
            qDebug() << "Capture is started";
        }
        if (videoCapturer->IsRunning())
        {
            qDebug() << "Capture is running";
        }
        ui->pushButtonOpen->setText(QStringLiteral("Close"));
    }
    else
    {
        // Connecting the same slot twice is an error, so disconnect here;
        // the next "Open" connects again.
        videoCapturer->SignalFrameCaptured.disconnect(this);
        videoCapturer->SignalStateChange.disconnect(this);
        videoCapturer->Stop();
        if (!videoCapturer->IsRunning())
        {
            qDebug() << "Capture is stopped";
        }
        ui->label->clear();
        ui->pushButtonOpen->setText(QStringLiteral("Open"));
    }
    flag = !flag;
}
```
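The buffer arithmetic in OnFrameCaptured (width * height * 32/8 bytes in total, width * 32/8 bytes per row) can be checked in isolation. The stand-alone sketch below uses hypothetical helpers ArgbStride, ArgbBufferSize and PixelAt. PixelAt assumes the byte layout libyuv documents for its ARGB format (B, G, R, A in memory), which on a little-endian machine is the same 32-bit value 0xAARRGGBB that QImage::Format_RGB32 reads per pixel (it ignores the top byte).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// 32-bit ARGB and QImage::Format_RGB32 both use 4 bytes per pixel.
constexpr int kBytesPerPixel = 32 / 8;

// Bytes per row: the stride passed as the last argument to ConvertToRgbBuffer.
int ArgbStride(int width) { return width * kBytesPerPixel; }

// Total bytes for one frame: the size used to allocate videoImage.
std::size_t ArgbBufferSize(int width, int height) {
  return static_cast<std::size_t>(ArgbStride(width)) *
         static_cast<std::size_t>(height);
}

// Reads pixel (x, y) from a buffer laid out as B, G, R, A bytes and
// returns it as 0xAARRGGBB.
uint32_t PixelAt(const uint8_t* buf, int width, int x, int y) {
  const uint8_t* p = buf + static_cast<std::size_t>(y) * ArgbStride(width) +
                     static_cast<std::size_t>(x) * kBytesPerPixel;
  return (uint32_t(p[3]) << 24) | (uint32_t(p[2]) << 16) |
         (uint32_t(p[1]) << 8) | uint32_t(p[0]);
}
```

For a 640x480 format, the stride is 2560 bytes and the buffer is 1,228,800 bytes. If a frame ever arrives larger than the format used to size the buffer, the buffer must be reallocated before converting.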

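main.cpp

```cpp
#include "mainwindow.h"
#include <QApplication>

#include "webrtc/base/thread.h"

int main(int argc, char *argv[])
{
    QApplication a(argc, argv);
    MainWindow w;
    w.show();

    // Drive both event loops by hand until the window is closed.
    while (w.isVisible())
    {
        // WebRTC message loop: run the messages queued on this thread,
        // including the frame callbacks posted by the capturer.
        rtc::Thread::Current()->ProcessMessages(0);
        rtc::Thread::Current()->SleepMs(1);

        // Qt event loop
        a.processEvents();
    }
    return 0;
}
```

III. Result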
