Running an Ubuntu container in k8s

Posted liuluopeng

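Running an Ubuntu image in k8s

Environment

Four machines that can reach one another over the network: master, node01, node02, and node03, each with 4 cores and 8 GB of RAM.

All commands are run as root.

Installing k8s

Install docker and kubeadm on master

Add the Kubernetes package source:

Add this line to /etc/apt/sources.list: deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main

Add the signing key: apt-key adv --recv-keys --keyserver keyserver.ubuntu.com 6A030B21BA07F4FB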
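Running the setup more than once would append the same source line again. A minimal sketch of an idempotent version of that step follows; `add_line_once` is a hypothetical helper name, not something apt provides, and the commented call assumes the same file and line as above.

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present.
# add_line_once is a hypothetical helper name, not part of apt.
add_line_once() {
    file=$1
    line=$2
    # -q: quiet, -x: match the whole line, -F: fixed string (no regex)
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example (run as root on a real machine):
# add_line_once /etc/apt/sources.list \
#     "deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main"
```

Calling the helper twice with the same arguments leaves a single copy of the line in the file.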

Update the package index:

apt update

Install docker and kubeadm:

apt install -y docker.io kubeadm

Initializing k8s

List the images that need to be downloaded: kubeadm config images list

For example, version 1.17.0 requires:

k8s.gcr.io/kube-apiserver:v1.17.0

k8s.gcr.io/kube-controller-manager:v1.17.0

k8s.gcr.io/kube-scheduler:v1.17.0

k8s.gcr.io/kube-proxy:v1.17.0

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.5

Pull the required images from a mirror registry in China:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5

Tag the images so that k8s recognizes them:

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.0 k8s.gcr.io/kube-apiserver:v1.17.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.0 k8s.gcr.io/kube-controller-manager:v1.17.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.0 k8s.gcr.io/kube-scheduler:v1.17.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.0 k8s.gcr.io/kube-proxy:v1.17.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5 k8s.gcr.io/coredns:1.6.5

Disable swap: swapoff -a
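The pull and tag commands above follow one pattern per image, so they can be generated from the image list instead of typed by hand. The sketch below only prints the commands and executes nothing. `mirror_commands` is a hypothetical helper name, and it assumes the mirror stores each image under the last path component of its k8s.gcr.io name, which holds for the 1.17.0 list shown here.

```shell
#!/bin/sh
# Mirror registry and target prefix taken from the commands above.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
TARGET=k8s.gcr.io

# Read image names (one per line, e.g. k8s.gcr.io/pause:3.1) on stdin and
# print the matching docker pull / docker tag commands. Nothing is executed.
# mirror_commands is a hypothetical helper name.
mirror_commands() {
    while IFS= read -r image; do
        [ -n "$image" ] || continue      # skip empty lines
        name=${image##*/}                # keep only "name:tag"
        printf 'docker pull %s/%s\n' "$MIRROR" "$name"
        printf 'docker tag %s/%s %s/%s\n' "$MIRROR" "$name" "$TARGET" "$name"
    done
}

# Dry run for a single image:
printf '%s\n' 'k8s.gcr.io/pause:3.1' | mirror_commands
```

On a real machine the generated commands could be run with `kubeadm config images list | mirror_commands | sh`. kubeadm also accepts an `--image-repository` flag on `kubeadm init` and `kubeadm config images pull`, which may make the retagging unnecessary; this article does not use it.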

Initialize k8s: kubeadm init

On success, the output looks like this:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.8.61:6443 --token xh3fjq.f5kzistanapm6ar1 --discovery-token-ca-cert-hash sha256:63c15d5be7a677165c7867187dd063dd5ed72b3d51c8f99b61a3efe3dade029b

Following the prompts above, run these commands in order:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Then choose a network add-on from https://kubernetes.io/docs/concepts/cluster-administration/addons/. This guide uses Weave Net.

Add the Weave Net add-on:

kubectl apply -n kube-system -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"
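The Weave Net command passes the output of kubectl version through base64 and then deletes newlines. The reason is that base64 wraps long output across several lines, and a line break inside the URL would break it. A small sketch of that pipeline on its own, with `one_line_base64` as a hypothetical helper name:

```shell
#!/bin/sh
# Base64-encode stdin and strip the line breaks that base64 inserts,
# so the result can be placed inside a URL query string.
# one_line_base64 is a hypothetical helper name.
one_line_base64() {
    base64 | tr -d '\n'
}

printf 'abc' | one_line_base64   # prints YWJj
echo                             # finish the line for readability
```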

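Joining the nodes to the cluster

Install docker and kubeadm on every node:

apt install docker.io kubeadm -y

Within 24 hours (the default lifetime of the token), run on each node the join command that master printed after initialization, for example: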

kubeadm join 192.168.8.61:6443 --token xh3fjq.f5kzistanapm6ar1 --discovery-token-ca-cert-hash sha256:63c15d5be7a677165c7867187dd063dd5ed72b3d51c8f99b61a3efe3dade029b
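A join command that was copied with a missing character fails in ways that are not always obvious, so it can help to check the shape of the two arguments first. The sketch below checks format only, assuming the usual kubeadm token shape of six characters, a dot, and sixteen characters, plus a sha256 hash of 64 hex digits. `valid_join_args` is a hypothetical helper name, and it cannot tell whether the token is still valid on the cluster.

```shell
#!/bin/sh
# Check that a token and CA cert hash have the shape kubeadm expects:
#   token: 6 chars, a dot, 16 chars, all from [a-z0-9]
#   hash:  "sha256:" followed by 64 hex digits
# valid_join_args is a hypothetical helper name; it checks format only,
# not whether the token is still valid on the cluster.
valid_join_args() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' &&
    printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

if valid_join_args xh3fjq.f5kzistanapm6ar1 \
    sha256:63c15d5be7a677165c7867187dd063dd5ed72b3d51c8f99b61a3efe3dade029b
then
    echo "join arguments look well-formed"
fi
```

If the token has already expired, running `kubeadm token create --print-join-command` on master prints a fresh join command.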

On master, check whether the nodes have joined:

root@desktop:~# kubectl get node

NAME STATUS ROLES AGE VERSION

desktop Ready master 125m v1.17.0

node01 Ready <none> 117m v1.17.0

node02 NotReady <none> 116m v1.17.0

node03 Ready <none> 104m v1.17.0

If a node's status is NotReady, swap may not have been disabled on that node.
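With more than a few nodes it is easier to filter the table than to read it. The sketch below prints the names of nodes whose STATUS column is anything other than exactly Ready, using the table above as sample input. `not_ready_nodes` is a hypothetical helper name.

```shell
#!/bin/sh
# Read `kubectl get node` output on stdin and print the names of nodes
# whose STATUS column is not exactly "Ready".
# not_ready_nodes is a hypothetical helper name.
not_ready_nodes() {
    # NR > 1 skips the header row; $2 is the STATUS column
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demo on the table shown above:
not_ready_nodes <<'EOF'
NAME STATUS ROLES AGE VERSION
desktop Ready master 125m v1.17.0
node01 Ready <none> 117m v1.17.0
node02 NotReady <none> 116m v1.17.0
node03 Ready <none> 104m v1.17.0
EOF
```

Against a live cluster this would be `kubectl get node | not_ready_nodes`. On each node it reports, `swapon --show` prints nothing when swap is off.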

Installing the dashboard

Install the dashboard from its YAML manifest:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta5/aio/deploy/recommended.yaml

Create a ServiceAccount and a ClusterRoleBinding by writing a file named auth.yaml with the following content:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Apply it: kubectl apply -f auth.yaml

Get the token:

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

The output looks like this:

root@desktop:~# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-vpr7v

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: f44f954e-581c-4b9f-88a7-98e566442ed8

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Ik41c1ZnR2tIRU4tNktOQV84YzQ0UUNGZzhQRHZPZENsRjkza21iejQ4M2MifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXZwcjd2Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJmNDRmOTU0ZS01ODFjLTRiOWYtODhhNy05OGU1NjY0NDJlZDgiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.a3Bj81-2xIHsSJ0isP6qXjmpazJmh1bkn3tjaQefOmrLUmgmnrEsDMobeD-6YasJ0i4Iq69hT8ITWRr5XyZ1MZx7ueGwsqdGzYQIgnGS5xIUISi7sJjRQ_K9aoh29WaL4WBBkiOQb8xBOShH7-Lp72a6EqZnko5UkorolLNJzquow27sDc4gcB-c8wRs_bl2hD-BuraPremQlBhleKgsab49xUWjgE45GYIW46nzmqwPTl-B6MBUNyj442WrHecf7Yy50mgf6lXFVHzkHaHjcWH3OfgKu7GV3WBoc0K6oLen2R5awYmJe31sLcoFFBp64MRfbhO3kGGRboXTqRUeEQ

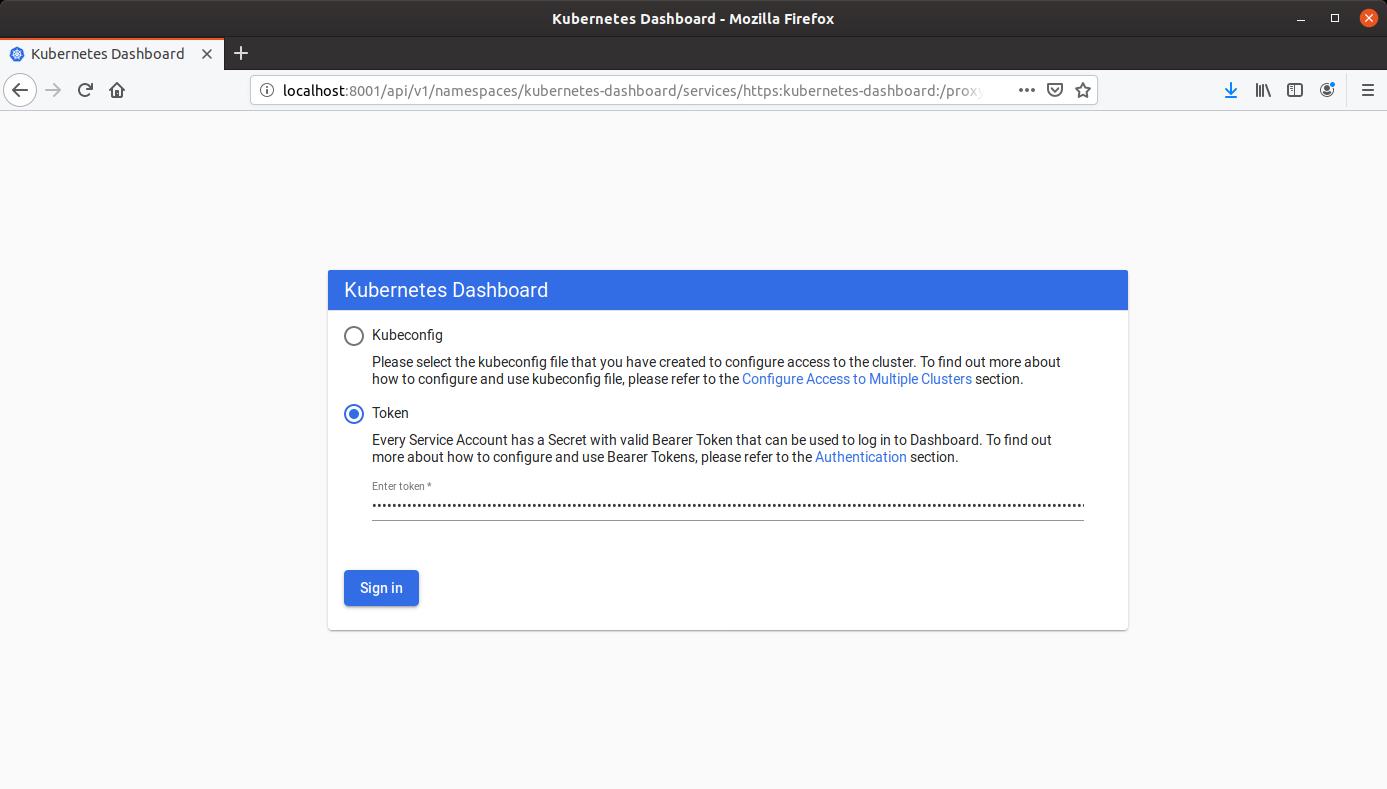

Record the token so that you can log in to the dashboard.
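Copying the token by hand out of the describe output is error-prone because of its length. As a sketch, the token field can be pulled out with awk; `extract_token` is a hypothetical helper name, and the token value in the demo is made up and shortened.

```shell
#!/bin/sh
# Read `kubectl describe secret` output on stdin and print only the value
# of the "token:" field. extract_token is a hypothetical helper name.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Demo with a shortened, made-up token value:
extract_token <<'EOF'
Name: admin-user-token-vpr7v
Type: kubernetes.io/service-account-token
ca.crt: 1025 bytes
namespace: 20 bytes
token: eyJhbGciOi.example.signature
EOF
```

On master, the describe command shown earlier can be piped straight into `extract_token`.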

Start the proxy:

kubectl proxy

Then open the dashboard and log in using the token method:

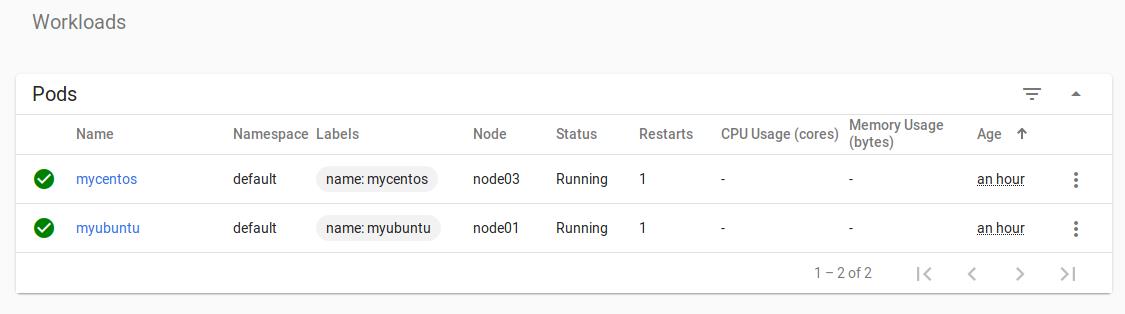
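The article does not spell out which address to open once the proxy is running. As a sketch, assuming kubectl proxy listens on its default port 8001 and the dashboard was installed from the recommended.yaml above (namespace and service both named kubernetes-dashboard), the URL can be built like this. `dashboard_url` is a hypothetical helper name.

```shell
#!/bin/sh
# Build the dashboard URL served through `kubectl proxy`.
# dashboard_url is a hypothetical helper name. Port 8001 is the default of
# kubectl proxy; the namespace and service names are assumed to match the
# recommended.yaml applied earlier.
dashboard_url() {
    port=${1:-8001}
    ns=${2:-kubernetes-dashboard}
    printf 'http://localhost:%s/api/v1/namespaces/%s/services/https:kubernetes-dashboard:/proxy/\n' "$port" "$ns"
}

dashboard_url
```

Open the printed URL in a browser on the machine where kubectl proxy is running.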

Running the Ubuntu image

Download the image

Pull the ubuntu image on every node:

docker pull ubuntu

Write the YAML

myubuntu.yaml is as follows:

apiVersion: v1
kind: Pod
metadata:
  # Pod name; must be unique within its namespace
  name: myubuntu
  labels:
    name: myubuntu
spec:
  # Storage volumes
  volumes:
  - name: myubuntulogs
    hostPath:
      path: /home/user/myubuntu
  containers:
  # Container name
  - name: myubuntu
    # Docker image the container runs
    image: ubuntu
    command: [ "/bin/bash", "-c", "--" ]
    args: [ "while true; do sleep 3600; done;" ]
    volumeMounts:
    - mountPath: /mydata-log
      name: myubuntulogs

Create the pod: kubectl apply -f myubuntu.yaml
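To run several such pods with different names or host paths, the manifest can be generated rather than edited by hand. The sketch below prints a manifest equivalent to myubuntu.yaml from two parameters. `render_pod` is a hypothetical helper name, and deriving the volume name by appending "logs" to the pod name is an assumption chosen to reproduce myubuntulogs.

```shell
#!/bin/sh
# Print a Pod manifest like myubuntu.yaml for a given pod name and host path.
# render_pod is a hypothetical helper name.
render_pod() {
    name=$1
    host_path=$2
    cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $name
  labels:
    name: $name
spec:
  volumes:
    - name: ${name}logs
      hostPath:
        path: $host_path
  containers:
    - name: $name
      image: ubuntu
      # The sleep loop keeps the container running; a bare ubuntu image
      # would otherwise exit as soon as its shell ends.
      command: [ "/bin/bash", "-c", "--" ]
      args: [ "while true; do sleep 3600; done;" ]
      volumeMounts:
        - mountPath: /mydata-log
          name: ${name}logs
EOF
}

render_pod myubuntu /home/user/myubuntu
```

The output can be applied with `render_pod myubuntu /home/user/myubuntu | kubectl apply -f -`. From the command line, `kubectl exec -it myubuntu -- /bin/bash` opens a shell in the container.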

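Open the dashboard, select Pods -> myubuntu, then right-click and choose exec to open a shell in the Ubuntu container from the dashboard:

Check the network settings:

Install common network tools inside the container (a fresh ubuntu image has no package lists, so refresh them first):

apt update && apt install net-tools inetutils-ping -y

Check the routes: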

root@myubuntu:/# route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 10.44.0.0 0.0.0.0 UG 0 0 0 eth0

10.32.0.0 0.0.0.0 255.240.0.0 U 0 0 0 eth0

Special thanks to the blogger yytlmm.
