Apache Hadoop Cluster Installation (NameNode HA + Spark + Rack Awareness)


| No. | Hostname | IP address | Roles |
| --- | --- | --- | --- |
| 1 | nn-1 | 192.168.9.21 | NameNode, mr-jobhistory, ZooKeeper, JournalNode |
| 2 | nn-2 | 192.168.9.22 | NameNode (standby), JournalNode |
| 3 | dn-1 | 192.168.9.23 | DataNode, JournalNode, ZooKeeper, ResourceManager, NodeManager |
| 4 | dn-2 | 192.168.9.24 | DataNode, ZooKeeper, NodeManager |
| 5 | dn-3 | 192.168.9.25 | DataNode, NodeManager |

    -- Check the Java version
    # java -version
    java version "1.7.0_45"
    OpenJDK Runtime Environment (rhel-2.4.3.3.el6-x86_64 u45-b15)
    OpenJDK 64-Bit Server VM (build 24.45-b08, mixed mode)
    -- Find the installed package
    # rpm -qa | grep java
    java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.x86_64
    -- Uninstall it
    # rpm -e --nodeps java-1.7.0-openjdk-1.7.0.45-2.4.3.3.el6.x86_64
    -- Verify that it was removed
    # rpm -qa | grep java
    # java -version
    -bash: /usr/bin/java: No such file or directory

    -- Download and unpack the JDK tarball
    # mkdir /usr/local/java
    # mv jdk-7u79-linux-x64.tar.gz /usr/local/java
    # cd /usr/local/java
    # tar xvf jdk-7u79-linux-x64.tar.gz
    # ls
    jdk1.7.0_79  jdk-7u79-linux-x64.tar.gz
    -- Add the environment variables
    # vim /etc/profile
    # tail /etc/profile
    export JAVA_HOME=/usr/local/java/jdk1.7.0_79
    export JRE_HOME=/usr/local/java/jdk1.7.0_79/jre
    export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib:$CLASSPATH
    export PATH=$JAVA_HOME/bin:$PATH
    -- Apply the environment variables
    # source /etc/profile
    -- Verify
    # java -version
    java version "1.7.0_79"
    Java(TM) SE Runtime Environment (build 1.7.0_79-b15)
    Java HotSpot(TM) 64-Bit Server VM (build 24.79-b02, mixed mode)
    # javac -version
    javac 1.7.0_79

    [root@dn-3 ~]# cat /etc/sysconfig/network
    NETWORKING=yes
    HOSTNAME=dn-3
    [root@dn-3 ~]# hostname dn-3

    [root@dn-3 ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.9.21 nn-1
    192.168.9.22 nn-2
    192.168.9.23 dn-1
    192.168.9.24 dn-2
    192.168.9.25 dn-3

    [root@dn-3 ~]# useradd -d /hadoop hadoop

    [root@dn-3 ~]# service iptables stop
    [root@dn-3 ~]# chkconfig iptables off
    [root@dn-3 ~]# chkconfig --list | grep iptables
    iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off

    [root@dn-3 ~]# setenforce 0
    setenforce: SELinux is disabled
    [root@dn-3 ~]# vim /etc/sysconfig/selinux
    SELINUX=disabled

    [hadoop@nn-1 ~]$ ssh-keygen -t rsa

    [hadoop@nn-1 ~]$ ssh nn-1 'cat ./.ssh/id_rsa.pub' >> authorized_keys
    hadoop@nn-1's password:

    [hadoop@nn-1 .ssh]$ chmod 644 authorized_keys

    [hadoop@nn-1 .ssh]$ scp authorized_keys hadoop@nn-2:/hadoop/.ssh/

hadoop-env.sh:

    export JAVA_HOME=/usr/local/java/jdk1.7.0_79
    export HADOOP_HEAPSIZE=2000
    export HADOOP_NAMENODE_INIT_HEAPSIZE=10000
    export HADOOP_OPTS="-server $HADOOP_OPTS -Djava.net.preferIPv4Stack=true"
    export HADOOP_NAMENODE_OPTS="-Xmx15000m -Xms15000m -Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"

core-site.xml:

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://dpi</value>
      </property>
      <property>
        <name>io.file.buffer.size</name>
        <value>131072</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/hadoop/hdfs/temp</value>
        <description>Abase for other temporary directories.</description>
      </property>
      <property>
        <name>hadoop.proxyuser.hduser.hosts</name>
        <value>*</value>
      </property>
      <property>
        <name>hadoop.proxyuser.hduser.groups</name>
        <value>*</value>
      </property>
      <property>
        <name>ha.zookeeper.quorum</name>
        <value>nn-1:2181,dn-1:2181,dn-2:2181</value>
      </property>
    </configuration>

hdfs-site.xml:

    <configuration>
      <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>nn-1:9001</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/hadoop/hdfs/name</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/hadoop/hdfs/data,file:/hadoopdata/hdfs/data</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.nameservices</name>
        <value>dpi</value>
      </property>
      <property>
        <name>dfs.ha.namenodes.dpi</name>
        <value>nn-1,nn-2</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.dpi.nn-1</name>
        <value>nn-1:9000</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.dpi.nn-1</name>
        <value>nn-1:50070</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.dpi.nn-2</name>
        <value>nn-2:9000</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.dpi.nn-2</name>
        <value>nn-2:50070</value>
      </property>
      <property>
        <name>dfs.namenode.servicerpc-address.dpi.nn-1</name>
        <value>nn-1:53310</value>
      </property>
      <property>
        <name>dfs.namenode.servicerpc-address.dpi.nn-2</name>
        <value>nn-2:53310</value>
      </property>
      <property>
        <name>dfs.ha.automatic-failover.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://nn-1:8485;nn-2:8485;dn-1:8485/dpi</value>
      </property>
      <property>
        <name>dfs.client.failover.proxy.provider.dpi</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
      </property>
      <property>
        <name>dfs.journalnode.edits.dir</name>
        <value>/hadoop/hdfs/journal</value>
      </property>
      <property>
        <name>dfs.ha.fencing.methods</name>
        <value>sshfence</value>
      </property>
      <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/hadoop/.ssh/id_rsa</value>
      </property>
    </configuration>
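As a rough cross-check of the HA settings above, the following sketch parses a trimmed copy of the hdfs-site.xml properties and reports a missing per-NameNode RPC address or an even JournalNode count. The helper names `load_properties` and `check_ha` are hypothetical (they are not part of Hadoop), and the checks cover only a handful of the properties, so treat it as an illustration rather than a validator.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the HA-related properties from the hdfs-site.xml above.
HDFS_SITE = """
<configuration>
  <property><name>dfs.nameservices</name><value>dpi</value></property>
  <property><name>dfs.ha.namenodes.dpi</name><value>nn-1,nn-2</value></property>
  <property><name>dfs.namenode.rpc-address.dpi.nn-1</name><value>nn-1:9000</value></property>
  <property><name>dfs.namenode.rpc-address.dpi.nn-2</name><value>nn-2:9000</value></property>
  <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://nn-1:8485;nn-2:8485;dn-1:8485/dpi</value></property>
</configuration>
"""


def load_properties(xml_text):
    """Return the <property> entries of a Hadoop *-site.xml as a dict."""
    root = ET.fromstring(xml_text.strip())
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def check_ha(props):
    """Hypothetical helper: return a list of problems (empty list means none found)."""
    problems = []
    nameservices = [n.strip() for n in props.get("dfs.nameservices", "").split(",") if n.strip()]
    for ns in nameservices:
        raw_ids = props.get("dfs.ha.namenodes." + ns, "")
        ids = [i.strip() for i in raw_ids.split(",") if i.strip()]
        if len(ids) < 2:
            problems.append("nameservice %s lists fewer than two NameNodes" % ns)
        for nn_id in ids:
            key = "dfs.namenode.rpc-address.%s.%s" % (ns, nn_id)
            if key not in props:
                problems.append("missing " + key)
    edits = props.get("dfs.namenode.shared.edits.dir", "")
    if edits.startswith("qjournal://"):
        # Strip the scheme and the trailing "/<journal id>", then split the host list.
        journal_nodes = edits[len("qjournal://"):].split("/")[0].split(";")
        if len(journal_nodes) % 2 == 0:
            problems.append("even number of JournalNodes: %d" % len(journal_nodes))
    return problems


if __name__ == "__main__":
    print(check_ha(load_properties(HDFS_SITE)) or "no problems found")
```

Running a check like this before formatting the NameNode can catch a misspelled NameNode ID, which otherwise tends to surface only as a startup error.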

    mkdir -p /hadoop/hdfs/name
    mkdir -p /hadoop/hdfs/data
    mkdir -p /hadoop/hdfs/temp
    mkdir -p /hadoop/hdfs/journal

Set permissions:

    chmod 755 /hadoop/hdfs
    mkdir -p /hadoopdata/hdfs/data
    chmod 755 /hadoopdata/hdfs

mapred-site.xml:

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>nn-1:10020</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>nn-1:19888</value>
      </property>
    </configuration>

yarn-site.xml:

    <configuration>
      <!-- Site specific YARN configuration properties -->
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>dn-1:8032</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>dn-1:8030</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>dn-1:8031</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>dn-1:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>dn-1:8088</value>
      </property>
    </configuration>

slaves:

    dn-1
    dn-2
    dn-3

yarn-env.sh:

    # some Java parameters
    # export JAVA_HOME=/home/y/libexec/jdk1.6.0/
    if [ "$JAVA_HOME" != "" ]; then
      #echo "run java in $JAVA_HOME"
      JAVA_HOME=/usr/local/java/jdk1.7.0_79
    fi
    JAVA_HEAP_MAX=-Xmx15000m
    YARN_HEAPSIZE=15000
    export YARN_RESOURCEMANAGER_HEAPSIZE=5000
    export YARN_TIMELINESERVER_HEAPSIZE=10000
    export YARN_NODEMANAGER_HEAPSIZE=10000

Copy the configured hadoop directory to the other nodes:

    [hadoop@nn-1 ~]$ scp -rp hadoop hadoop@nn-2:/hadoop
    [hadoop@nn-1 ~]$ scp -rp hadoop hadoop@dn-1:/hadoop
    [hadoop@nn-1 ~]$ scp -rp hadoop hadoop@dn-2:/hadoop
    [hadoop@nn-1 ~]$ scp -rp hadoop hadoop@dn-3:/hadoop

zoo.cfg:

    dataDir=/hadoop/zookeeper/data/
    dataLogDir=/hadoop/zookeeper/log/
    # the port at which the clients will connect
    clientPort=2181
    server.1=nn-1:2887:3887
    server.2=dn-1:2888:3888
    server.3=dn-2:2889:3889
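The transcript does not show a `myid` step, but a replicated ZooKeeper ensemble expects each server to have a `myid` file in its `dataDir` whose number matches its `server.N` entry. The sketch below derives that number per host from the zoo.cfg above; `parse_servers` and `myid_for` are hypothetical helper names, and the printed `echo` commands are only a suggestion of what to run on each node.

```python
import re

# Copy of the zoo.cfg shown above.
ZOO_CFG = """\
dataDir=/hadoop/zookeeper/data/
dataLogDir=/hadoop/zookeeper/log/
clientPort=2181
server.1=nn-1:2887:3887
server.2=dn-1:2888:3888
server.3=dn-2:2889:3889
"""


def parse_servers(cfg_text):
    """Map each hostname in the server.N lines to its numeric id N."""
    servers = {}
    for line in cfg_text.splitlines():
        match = re.match(r"\s*server\.(\d+)\s*=\s*([^:\s]+):", line)
        if match:
            servers[match.group(2)] = int(match.group(1))
    return servers


def myid_for(hostname, cfg_text=ZOO_CFG):
    """Return the id that belongs in <dataDir>/myid on the given host."""
    return parse_servers(cfg_text)[hostname]


if __name__ == "__main__":
    for host, server_id in sorted(parse_servers(ZOO_CFG).items(), key=lambda kv: kv[1]):
        # Suggested command to run on each host (path taken from dataDir above).
        print("%s: echo %d > /hadoop/zookeeper/data/myid" % (host, server_id))
```

If a server starts without a matching `myid`, it typically fails to join the quorum, so it is worth confirming the file on every node before running `zkServer.sh start`.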

    [hadoop@nn-1 ~]$ scp -rp zookeeper hadoop@dn-1:/hadoop
    [hadoop@nn-1 ~]$ scp -rp zookeeper hadoop@dn-2:/hadoop

    [hadoop@nn-1 ~]$ mkdir /hadoop/zookeeper/data/
    [hadoop@nn-1 ~]$ mkdir /hadoop/zookeeper/log/

    $ echo 'export ZOOKEEPER_HOME=/hadoop/zookeeper' >> $HOME/.bash_profile
    $ echo 'export PATH=$ZOOKEEPER_HOME/bin:$PATH' >> $HOME/.bash_profile
    $ source $HOME/.bash_profile

    [hadoop@nn-1 ~]$ /hadoop/zookeeper/bin/zkServer.sh start
    JMX enabled by default
    Using config: /hadoop/zookeeper/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

    [hadoop@nn-1 ~]$ jps
    9382 QuorumPeerMain
    9407 Jps

    [hadoop@nn-1 ~]$ /hadoop/zookeeper/bin/zkServer.sh status
    JMX enabled by default
    Using config: /hadoop/zookeeper/bin/../conf/zoo.cfg
    Mode: follower

    [hadoop@dn-1 data]$ /hadoop/zookeeper/bin/zkServer.sh status
    JMX enabled by default
    Using config: /hadoop/zookeeper/bin/../conf/zoo.cfg
    Mode: leader

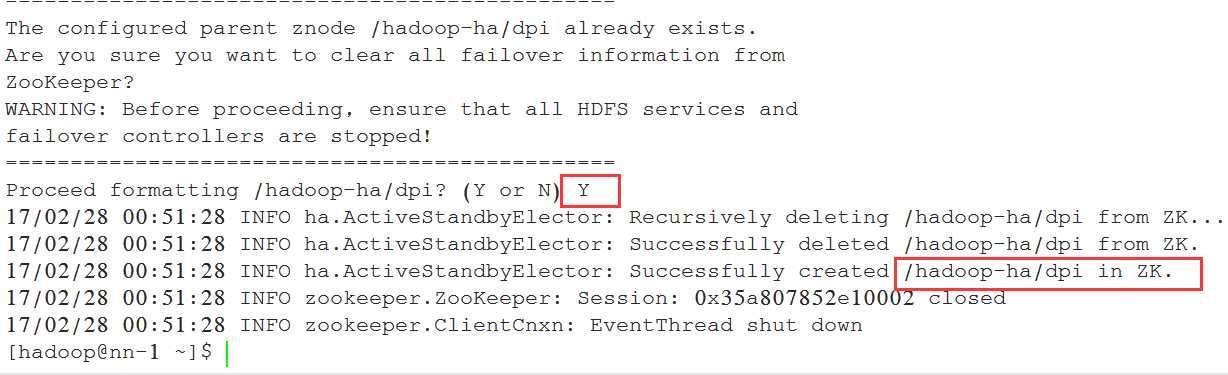

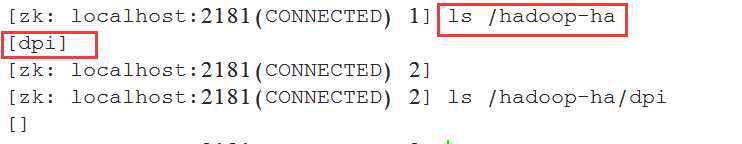

    [hadoop@nn-1 ~]$ /hadoop/hadoop/bin/hdfs zkfc -formatZK

    [hadoop@nn-1 bin]$ ./zkCli.sh

    [hadoop@nn-1 ~]$ /hadoop/hadoop/sbin/hadoop-daemon.sh start zkfc
    starting zkfc, logging to /hadoop/hadoop/logs/hadoop-hadoop-zkfc-nn-1.out

    [hadoop@nn-1 ~]$ jps
    9681 Jps
    9638 DFSZKFailoverController
    9382 QuorumPeerMain

    [hadoop@nn-1 ~]$ /hadoop/hadoop/sbin/hadoop-daemon.sh start journalnode
    starting journalnode, logging to /hadoop/hadoop/logs/hadoop-hadoop-journalnode-nn-1.out

    [hadoop@nn-1 ~]$ jps
    9714 JournalNode
    9638 DFSZKFailoverController
    9382 QuorumPeerMain
    9762 Jps

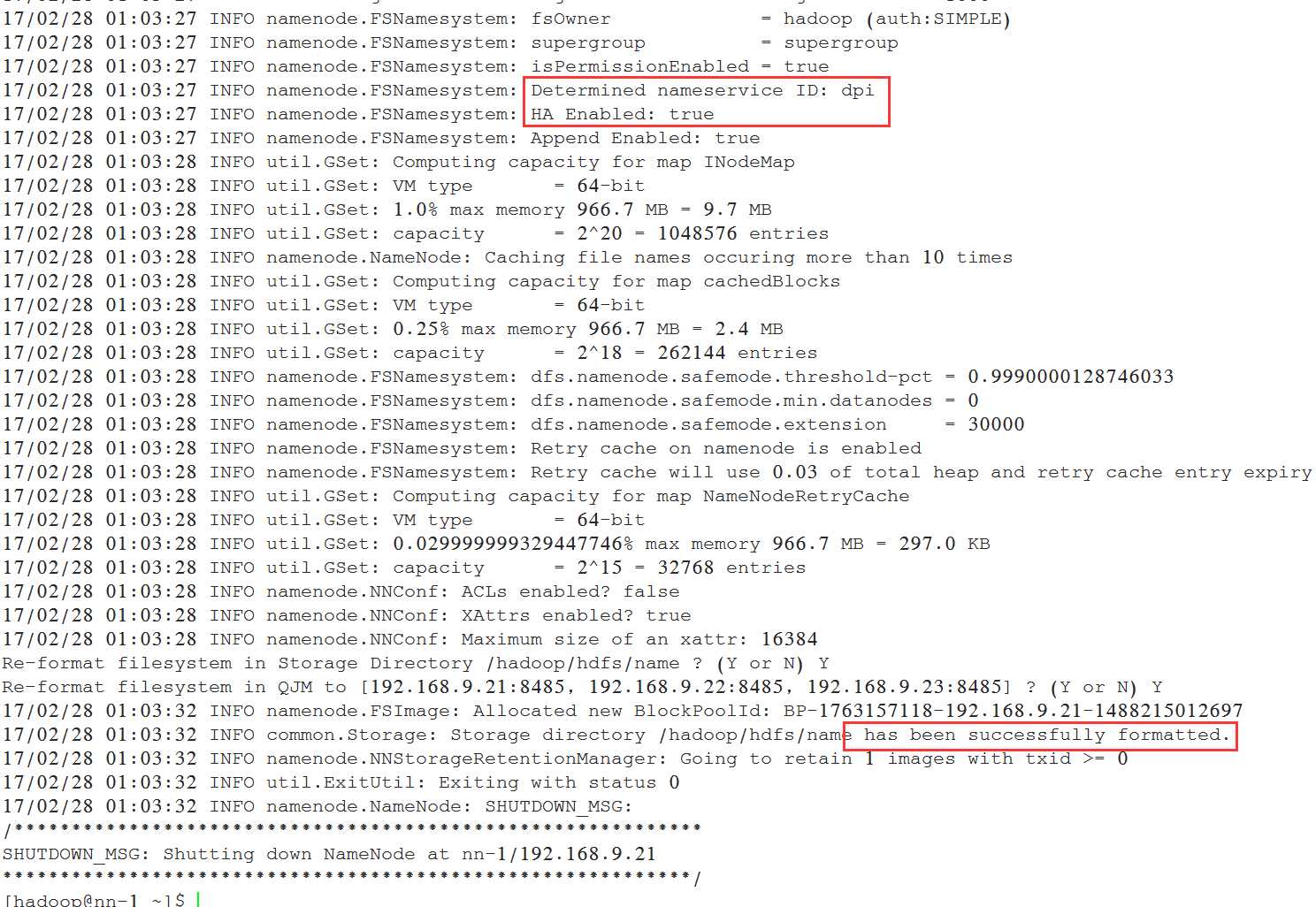

    [hadoop@nn-1 ~]$ /hadoop/hadoop/bin/hadoop namenode -format

    [hadoop@nn-1 ~]$ /hadoop/hadoop/sbin/hadoop-daemon.sh start namenode
    starting namenode, logging to /hadoop/hadoop/logs/hadoop-hadoop-namenode-nn-1.out

    [hadoop@nn-1 ~]$ jps
    9714 JournalNode
    9638 DFSZKFailoverController
    9382 QuorumPeerMain
    10157 NameNode
    10269 Jps

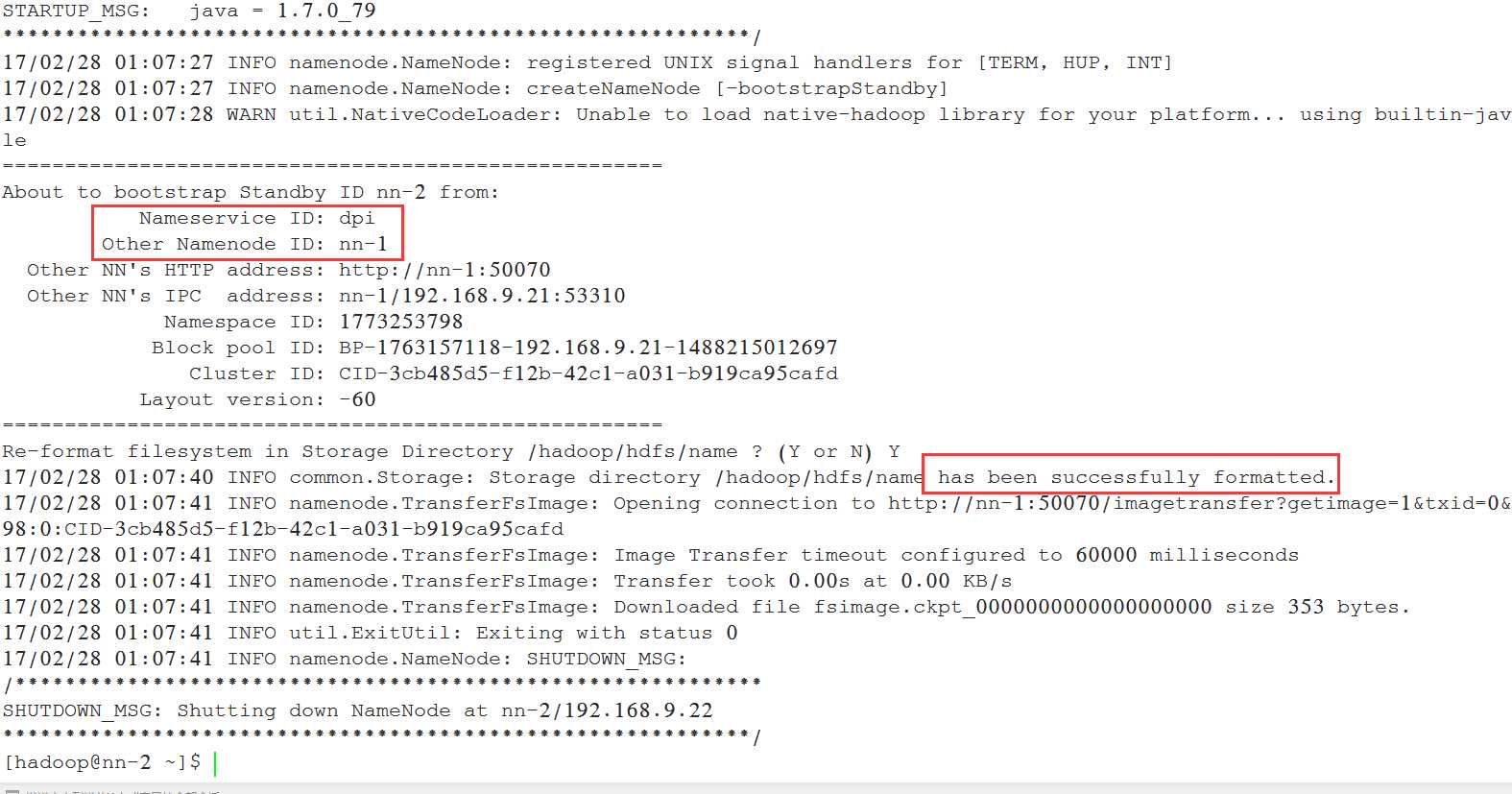

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs namenode -bootstrapStandby

    [hadoop@nn-2 ~]$ /hadoop/hadoop/sbin/hadoop-daemon.sh start namenode
    starting namenode, logging to /hadoop/hadoop/logs/hadoop-hadoop-namenode-nn-2.out

    [hadoop@nn-2 ~]$ jps
    53990 NameNode
    54083 Jps
    53824 JournalNode
    53708 DFSZKFailoverController

    [hadoop@dn-1 ~]$ /hadoop/hadoop/sbin/hadoop-daemon.sh start datanode

    [hadoop@dn-1 temp]$ jps
    57007 Jps
    56927 DataNode
    56223 QuorumPeerMain

    [hadoop@dn-1 ~]$ /hadoop/hadoop/sbin/yarn-daemon.sh start resourcemanager
    starting resourcemanager, logging to /hadoop/hadoop/logs/yarn-hadoop-resourcemanager-dn-1.out

    [hadoop@dn-1 ~]$ jps
    57173 QuorumPeerMain
    58317 Jps
    57283 JournalNode
    58270 ResourceManager
    58149 DataNode

    [hadoop@nn-1 ~]$ /hadoop/hadoop/sbin/mr-jobhistory-daemon.sh start historyserver
    starting historyserver, logging to /hadoop/hadoop/logs/mapred-hadoop-historyserver-nn-1.out

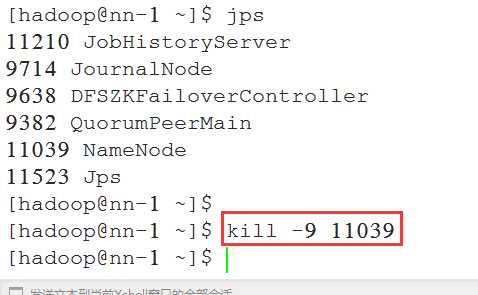

    [hadoop@nn-1 ~]$ jps
    11210 JobHistoryServer
    9714 JournalNode
    9638 DFSZKFailoverController
    9382 QuorumPeerMain
    11039 NameNode
    11303 Jps

    [hadoop@dn-1 ~]$ /hadoop/hadoop/sbin/yarn-daemon.sh start nodemanager
    starting nodemanager, logging to /hadoop/hadoop/logs/yarn-hadoop-nodemanager-dn-1.out

    [hadoop@dn-1 ~]$ jps
    58559 NodeManager
    57173 QuorumPeerMain
    58668 Jps
    57283 JournalNode
    58270 ResourceManager
    58149 DataNode

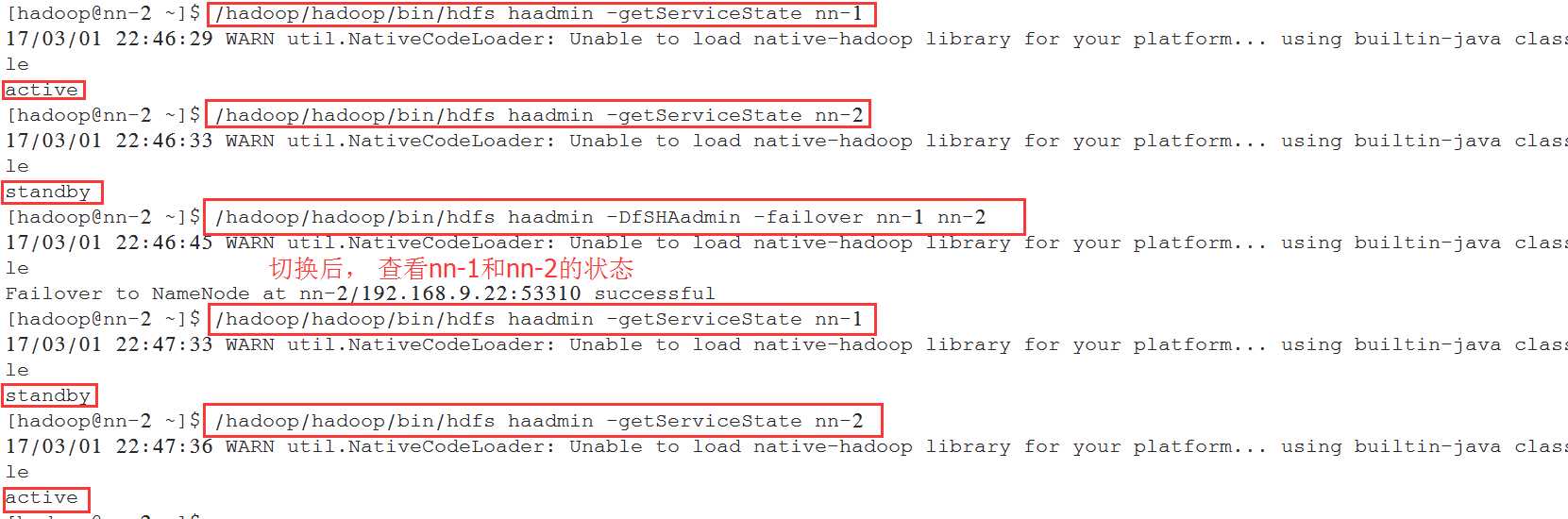

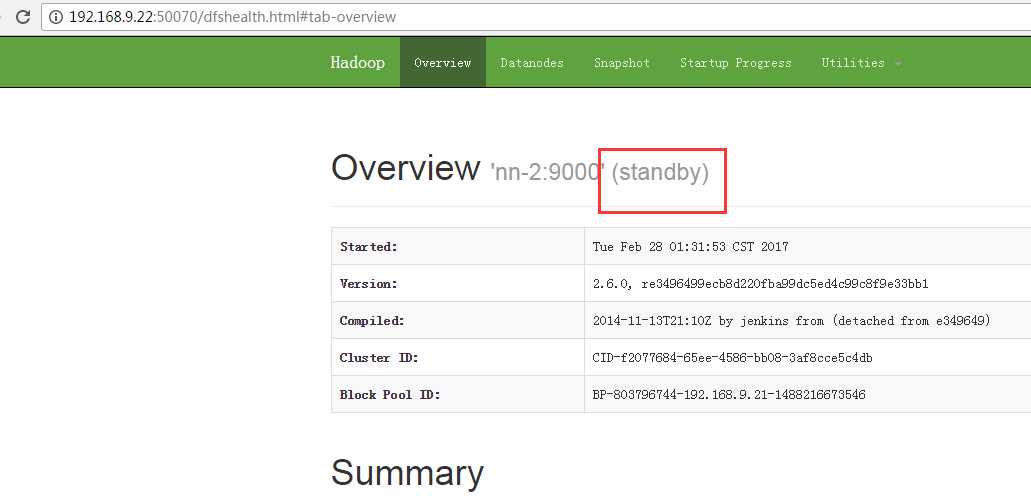

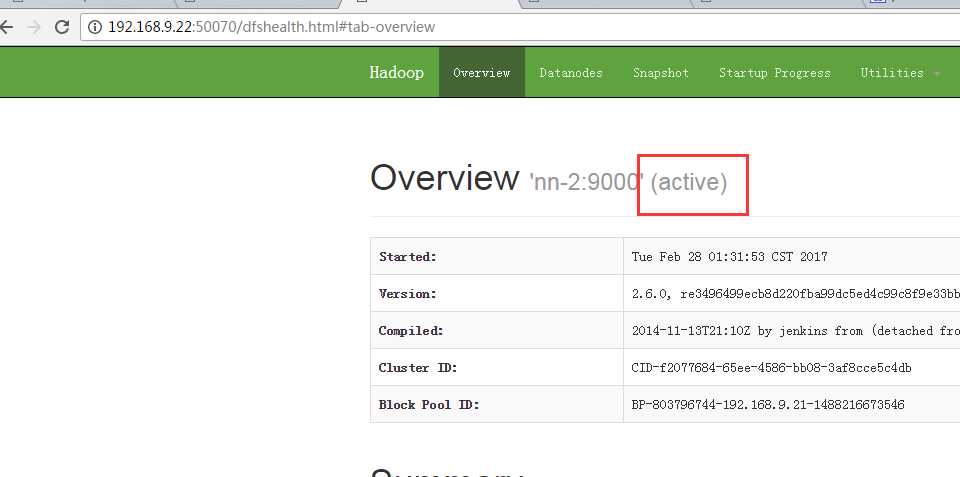

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs haadmin -getServiceState nn-1

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs haadmin -failover nn-1 nn-2

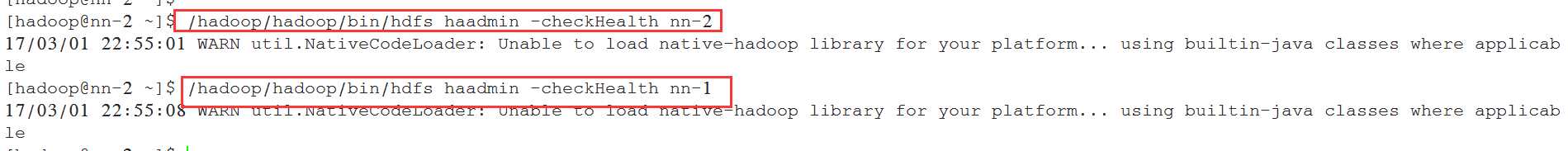

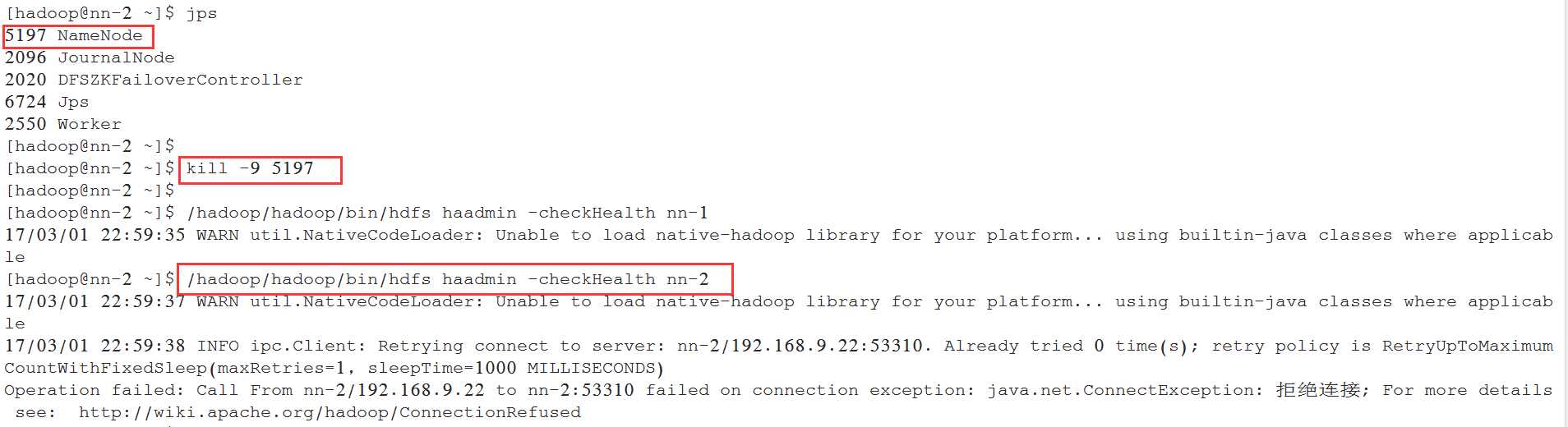

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs haadmin -checkHealth nn-1

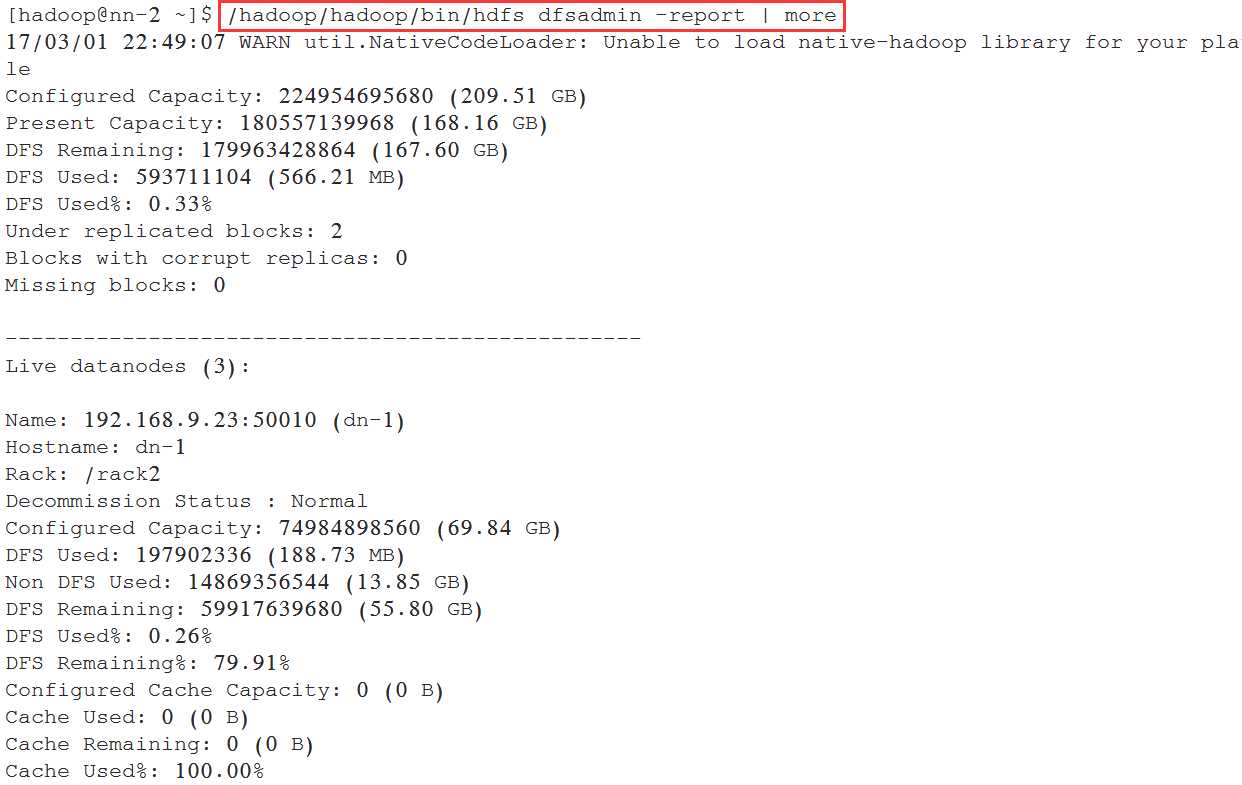

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs dfsadmin -report | more

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs dfsadmin -report -live
    17/03/01 22:49:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Configured Capacity: 224954695680 (209.51 GB)
    Present Capacity: 180557139968 (168.16 GB)
    DFS Remaining: 179963428864 (167.60 GB)
    DFS Used: 593711104 (566.21 MB)
    DFS Used%: 0.33%
    Under replicated blocks: 2
    Blocks with corrupt replicas: 0
    Missing blocks: 0
    -------------------------------------------------
    Live datanodes (3):
    Name: 192.168.9.23:50010 (dn-1)
    Hostname: dn-1
    Rack: /rack2
    Decommission Status : Normal
    Configured Capacity: 74984898560 (69.84 GB)
    DFS Used: 197902336 (188.73 MB)
    Non DFS Used: 14869356544 (13.85 GB)
    DFS Remaining: 59917639680 (55.80 GB)
    DFS Used%: 0.26%
    DFS Remaining%: 79.91%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)
    Cache Remaining: 0 (0 B)
    Cache Used%: 100.00%
    Cache Remaining%: 0.00%
    Xceivers: 1
    Last contact: Wed Mar 01 22:49:42 CST 2017
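The `Rack:` line in the report is the quickest way to confirm which rack a DataNode was assigned to. As a minimal sketch, assuming the report keeps the `Hostname:` / `Rack:` line layout shown above, the snippet below pulls a hostname-to-rack map out of the text; `racks_by_host` is a hypothetical helper name, and the sample contains only the dn-1 entry that appears in the output above.

```python
# Excerpt of the `hdfs dfsadmin -report -live` output shown above (dn-1 only).
SAMPLE_REPORT = """\
Live datanodes (3):
Name: 192.168.9.23:50010 (dn-1)
Hostname: dn-1
Rack: /rack2
Decommission Status : Normal
"""


def racks_by_host(report_text):
    """Pair every 'Hostname:' line with the 'Rack:' line that follows it."""
    racks = {}
    host = None
    for line in report_text.splitlines():
        # Split on the first colon only, so "Name: ip:port (host)" stays intact.
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "Hostname":
            host = value
        elif key == "Rack" and host is not None:
            racks[host] = value
            host = None
    return racks


if __name__ == "__main__":
    for host, rack in sorted(racks_by_host(SAMPLE_REPORT).items()):
        print("%s -> %s" % (host, rack))
```

In practice the text would come from the command itself, for example by piping the report into the script, rather than from a hard-coded sample.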

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs dfsadmin -report -dead

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs haadmin -checkHealth nn-2
    17/03/01 22:55:01 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/hdfs haadmin -checkHealth nn-1
    17/03/01 22:55:08 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

    $ yarn rmadmin -getServiceState rm1
    active

    $ yarn rmadmin -getServiceState rm2
    standby

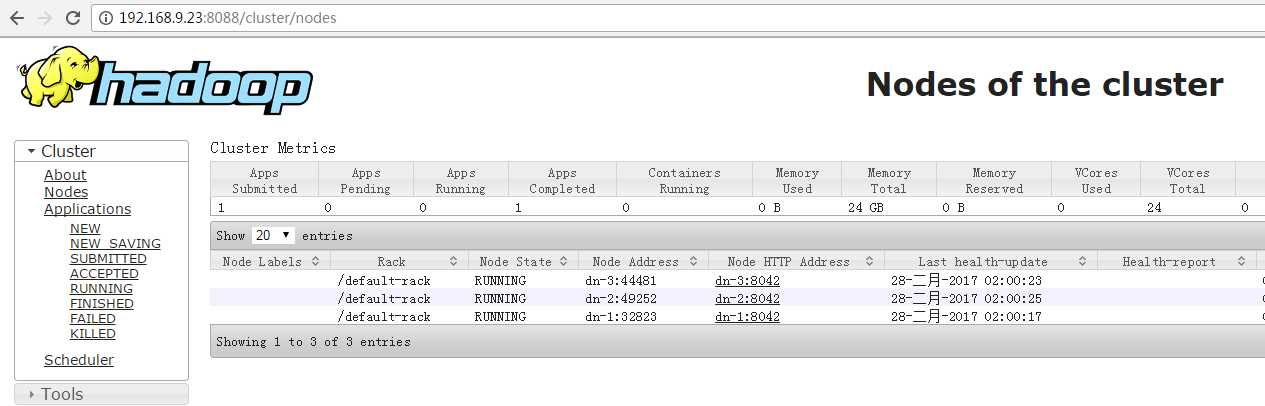

    $ /hadoop/hadoop/bin/yarn node -all -list
    17/03/01 23:06:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Total Nodes:3
    Node-Id      Node-State  Node-Http-Address  Number-of-Running-Containers
    dn-2:55506   RUNNING     dn-2:8042          0
    dn-1:56447   RUNNING     dn-1:8042          0
    dn-3:37533   RUNNING     dn-3:8042          0

    $ /hadoop/hadoop/bin/yarn node -list
    17/03/01 23:07:41 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Total Nodes:3
    Node-Id      Node-State  Node-Http-Address  Number-of-Running-Containers
    dn-2:55506   RUNNING     dn-2:8042          0
    dn-1:56447   RUNNING     dn-1:8042          0
    dn-3:37533   RUNNING     dn-3:8042          0

    $ /hadoop/hadoop/bin/yarn node -status dn-2:55506
    17/03/01 23:08:16 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Node Report :
      Node-Id : dn-2:55506
      Rack : /default-rack
      Node-State : RUNNING
      Node-Http-Address : dn-2:8042
      Last-Health-Update : Wed 01/Mar/17 11:06:21:373CST
      Health-Report :
      Containers : 0
      Memory-Used : 0MB
      Memory-Capacity : 8192MB
      CPU-Used : 0 vcores
      CPU-Capacity : 8 vcores
      Node-Labels :

    [hadoop@nn-2 ~]$ /hadoop/hadoop/bin/yarn application -list
    17/03/01 23:10:09 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Total number of applications (application-types: [] and states: [SUBMITTED, ACCEPTED, RUNNING]):1
    Application-Id  Application-Name  Application-Type  User  Queue  State  Final-State  Progress  Tracking-URL
    application_1488375590901_0004  QuasiMonteCarlo  MAPREDUCE  hadoop  default  RUNNING  UNDEFINED

    $ cd hadoop/
    $ ./bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar pi 2 200
    Number of Maps = 2
    Samples per Map = 200
    17/02/28 01:51:12 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Wrote input for Map #0
    Wrote input for Map #1
    Starting Job
    17/02/28 01:51:15 INFO input.FileInputFormat: Total input paths to process : 2
    17/02/28 01:51:15 INFO mapreduce.JobSubmitter: number of splits:2
    17/02/28 01:51:15 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1488216892564_0001
    17/02/28 01:51:16 INFO impl.YarnClientImpl: Submitted application application_1488216892564_0001
    17/02/28 01:51:16 INFO mapreduce.Job: The url to track the job: http://dn-1:8088/proxy/application_1488216892564_0001/
    17/02/28 01:51:16 INFO mapreduce.Job: Running job: job_1488216892564_0001
    17/02/28 01:51:24 INFO mapreduce.Job: Job job_1488216892564_0001 running in uber mode : false
    17/02/28 01:51:24 INFO mapreduce.Job: map 0% reduce 0%
    17/02/28 01:51:38 INFO mapreduce.Job: map 100% reduce 0%
    17/02/28 01:51:49 INFO mapreduce.Job: map 100% reduce 100%
    17/02/28 01:51:49 INFO mapreduce.Job: Job job_1488216892564_0001 completed successfully
    17/02/28 01:51:50 INFO mapreduce.Job: Counters: 49
      File System Counters
        FILE: Number of bytes read=50
        FILE: Number of bytes written=326922
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=510
        HDFS: Number of bytes written=215
        HDFS: Number of read operations=11
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=3
      Job Counters
        Launched map tasks=2
        Launched reduce tasks=1
        Data-local map tasks=2
        Total time spent by all maps in occupied slots (ms)=25604
        Total time spent by all reduces in occupied slots (ms)=7267
        Total time spent by all map tasks (ms)=25604
        Total time spent by all reduce tasks (ms)=7267
        Total vcore-seconds taken by all map tasks=25604
        Total vcore-seconds taken by all reduce tasks=7267
        Total megabyte-seconds taken by all map tasks=26218496
        Total megabyte-seconds taken by all reduce tasks=7441408
      Map-Reduce Framework
        Map input records=2
        Map output records=4
        Map output bytes=36
        Map output materialized bytes=56
        Input split bytes=274
        Combine input records=0
        Combine output records=0
        Reduce input groups=2
        Reduce shuffle bytes=56
        Reduce input records=4
        Reduce output records=0
        Spilled Records=8
        Shuffled Maps =2
        Failed Shuffles=0
        Merged Map outputs=2
        GC time elapsed (ms)=419
        CPU time spent (ms)=6940
        Physical memory (bytes) snapshot=525877248
        Virtual memory (bytes) snapshot=2535231488
        Total committed heap usage (bytes)=260186112
      Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
      File Input Format Counters
        Bytes Read=236
      File Output Format Counters
        Bytes Written=97
    Job Finished in 35.466 seconds
    Estimated value of Pi is 3.17000000000000000000
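The job above reports a coarse estimate (3.17) because it uses only 2 maps of 200 samples each. To show why so few samples give a rough value, here is a small single-process sketch of a quasi-Monte Carlo estimate using a Halton sequence with bases 2 and 3. It is an illustration under those assumptions, not the code of the bundled example, and it is not expected to reproduce 3.17 exactly; `halton` and `estimate_pi` are hypothetical names.

```python
def halton(index, base):
    """Return element `index` (1-based) of the van der Corput sequence in `base`."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result


def estimate_pi(num_maps, samples_per_map):
    """Estimate pi from the share of sample points inside the unit quarter circle."""
    total = num_maps * samples_per_map
    inside = 0
    for i in range(1, total + 1):
        # Bases 2 and 3 give a two-dimensional low-discrepancy point in [0, 1)^2.
        x, y = halton(i, 2), halton(i, 3)
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / total


if __name__ == "__main__":
    # Same sample budget as the job above, then a larger one for comparison.
    print(estimate_pi(2, 200))
    print(estimate_pi(10, 10000))
```

Raising either argument of the `pi` example increases the total number of samples and should tighten the estimate, at the cost of a longer job.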

    [hadoop@nn-1 ~]$ tar -xzvf spark-1.6.0-bin-hadoop2.6.tgz

    [hadoop@nn-1 conf]$ pwd
    /hadoop/spark-1.6.0-bin-hadoop2.6/conf
    [hadoop@nn-1 conf]$ cp spark-env.sh.template spark-env.sh
    [hadoop@nn-1 conf]$ cp slaves.template slaves

spark-env.sh:

    export JAVA_HOME=/usr/local/java/jdk1.7.0_79
    export SCALA_HOME=/hadoop/scala-2.11.7
    export SPARK_HOME=/hadoop/spark-1.6.0-bin-hadoop2.6
    export SPARK_MASTER_IP=nn-1
    export SPARK_WORKER_MEMORY=2g
    export HADOOP_CONF_DIR=/hadoop/hadoop/etc/hadoop

slaves:

    nn-2
    dn-1
    dn-2
    dn-3

    [hadoop@nn-1 ~]$ vim .bash_profile

    export HADOOP_HOME=/hadoop/hadoop
    export SCALA_HOME=/hadoop/scala-2.11.7
    export SPARK_HOME=/hadoop/spark-1.6.0-bin-hadoop2.6
    export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$SCALA_HOME/bin:$SPARK_HOME/bin:$SPARK_HOME/sbin:$PATH

    $ cd /hadoop
    $ scp -rp spark-1.6.0-bin-hadoop2.6 hadoop@<host>:/hadoop
    $ scp -rp scala-2.11.7 hadoop@<host>:/hadoop

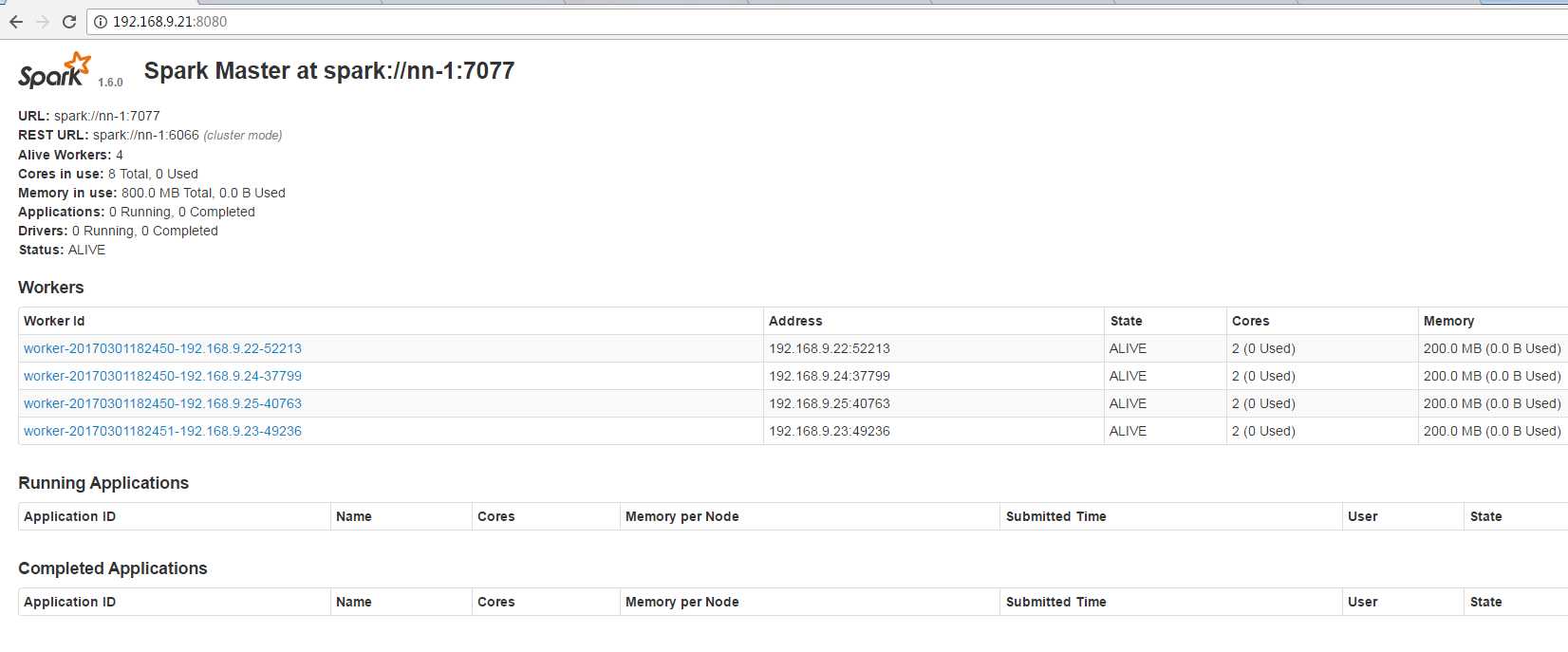

    [hadoop@nn-1 ~]$ /hadoop/spark-1.6.0-bin-hadoop2.6/sbin/start-all.sh

    [hadoop@nn-1 ~]$ jps
    2473 JournalNode
    2541 NameNode
    4401 Jps
    2399 DFSZKFailoverController
    2687 JobHistoryServer
    2775 Master
    2351 QuorumPeerMain

    [hadoop@dn-1 ~]$ jps
    2522 NodeManager
    3449 Jps
    2007 QuorumPeerMain
    2141 DataNode
    2688 Worker
    2061 JournalNode
    2258 ResourceManager

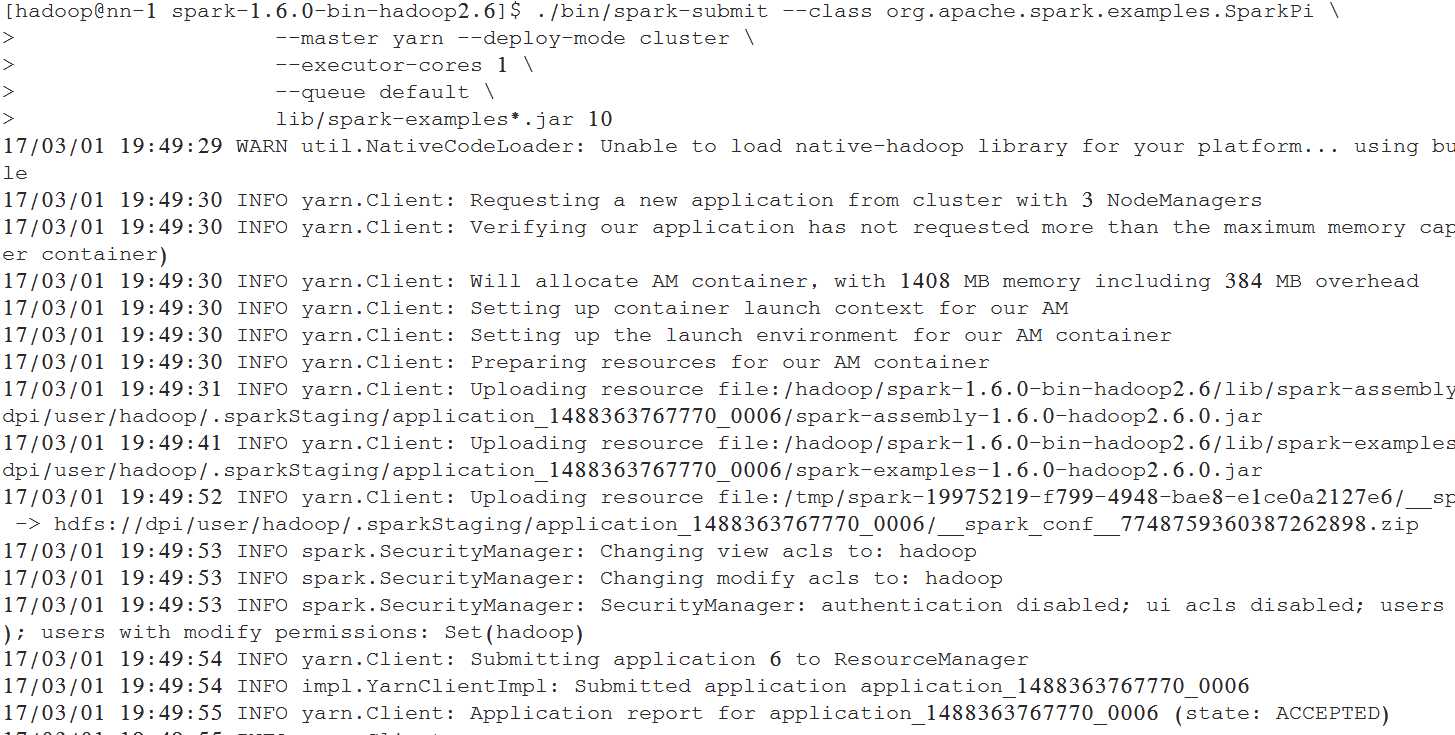

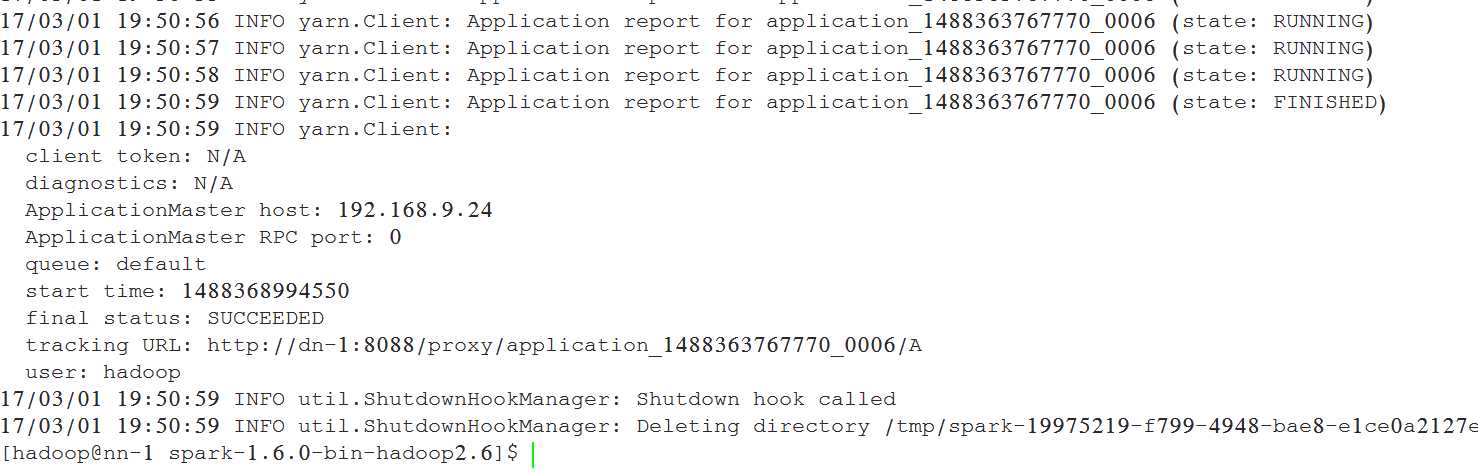

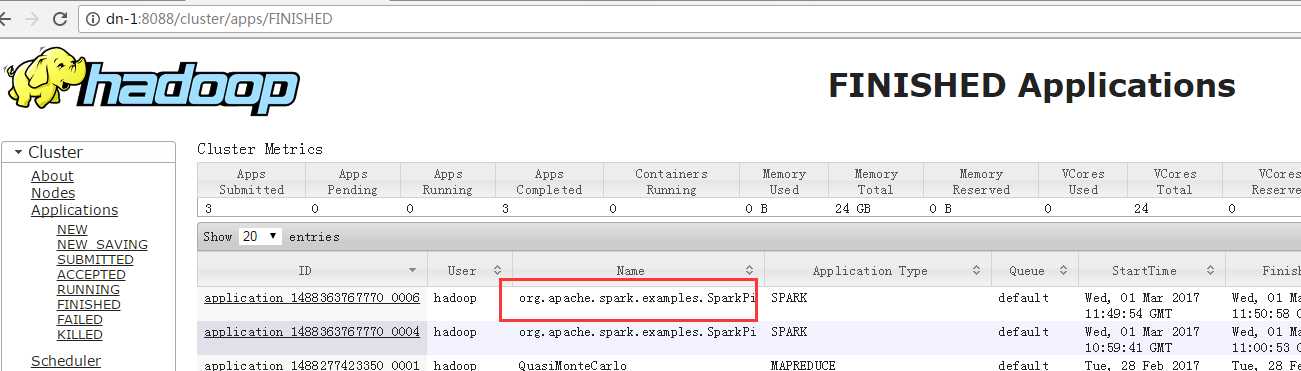

    ./bin/spark-submit --class org.apache.spark.examples.SparkPi \
        --master yarn --deploy-mode cluster \
        --driver-memory 100M \
        --executor-memory 200M \
        --executor-cores 1 \
        --queue default \
        lib/spark-examples*.jar 10

Or:

    ./bin/spark-submit --class org.apache.spark.examples.SparkPi \
        --master yarn --deploy-mode cluster \
        --executor-cores 1 \
        --queue default \
        lib/spark-examples*.jar 10

core-site.xml:

    <property>
      <name>topology.script.file.name</name>
      <value>/hadoop/hadoop/etc/hadoop/RackAware.py</value>
    </property>

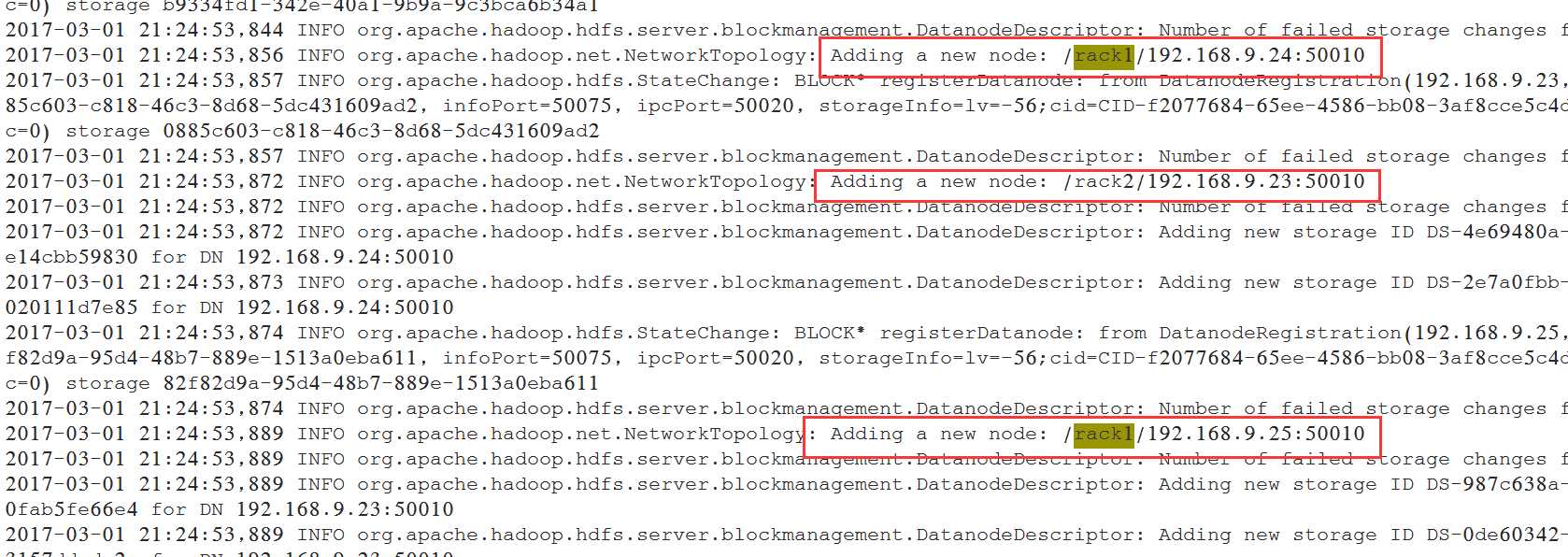

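RackAware.py:

    #!/usr/bin/python
    #-*-coding:UTF-8 -*-
    import sys

    # Map each DataNode hostname and IP address to its rack.
    rack = {
        "dn-1": "rack2",
        "dn-2": "rack1",
        "dn-3": "rack1",
        "192.168.9.23": "rack2",
        "192.168.9.24": "rack1",
        "192.168.9.25": "rack1",
    }

    if __name__ == "__main__":
        # Unknown hosts fall back to rack0.
        print("/" + rack.get(sys.argv[1], "rack0"))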
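Hadoop can pass several hostnames or IP addresses to the topology script in a single call and expects one rack path back per argument, whereas the script above only answers for the first argument. The variant below is a sketch that resolves every argument; it reuses the same mapping, and `resolve` and `DEFAULT_RACK` are names introduced here for illustration.

```python
#!/usr/bin/python
import sys

# Same host-to-rack mapping as RackAware.py above.
RACKS = {
    "dn-1": "rack2",
    "dn-2": "rack1",
    "dn-3": "rack1",
    "192.168.9.23": "rack2",
    "192.168.9.24": "rack1",
    "192.168.9.25": "rack1",
}
DEFAULT_RACK = "rack0"  # fallback for hosts missing from the mapping


def resolve(hosts):
    """Return one rack path per host, in the order the hosts were given."""
    return ["/" + RACKS.get(host, DEFAULT_RACK) for host in hosts]


if __name__ == "__main__":
    # One rack per argument, separated by spaces.
    print(" ".join(resolve(sys.argv[1:])))
```

The script can be tried by hand before restarting the NameNode, for example `./RackAware.py dn-1 192.168.9.24`, to confirm that every known host resolves to the intended rack.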

    [root@nn-1 hadoop]# chmod +x RackAware.py
    [root@nn-1 hadoop]# ll RackAware.py
    -rwxr-xr-x 1 hadoop hadoop 294 Mar  1 21:24 RackAware.py

    [hadoop@nn-1 ~]$ hadoop-daemon.sh stop namenode
    stopping namenode
    [hadoop@nn-1 ~]$ hadoop-daemon.sh start namenode
    starting namenode, logging to /hadoop/hadoop/logs/hadoop-hadoop-namenode-nn-1.out

    [root@nn-1 logs]# pwd
    /hadoop/hadoop/logs
    [root@nn-1 logs]# vim hadoop-hadoop-namenode-nn-1.log
