OpenCV moving-object detection against a dynamic background: FAST + SURF + FLANN registration and frame differencing (pictures updated 17/12/13)

Posted BHY_


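Detecting keypoints with FAST and describing them with SURF is much faster than letting SURF detect keypoints across multiple scales and then describe them.

I compared the two approaches on my own machine; the table below shows the results reported by the Visual Studio performance profiler.

Setup: Intel i5 2.7 GHz, x64, VS2012, OpenCV 2.4.9.

The Otsu binarization in the code could instead use OpenCV's built-in Otsu thresholding (the `THRESH_OTSU` flag of `threshold`, which expects an 8-bit single-channel image).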

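| Stage | SURF detection + SURF description | FAST detection + SURF description |
| --- | --- | --- |
| Keypoint detection | 24.2% | 0.9% |
| Keypoint description | 25% | 14.7% |
| Keypoint matching | 12.2% | 8.9% |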

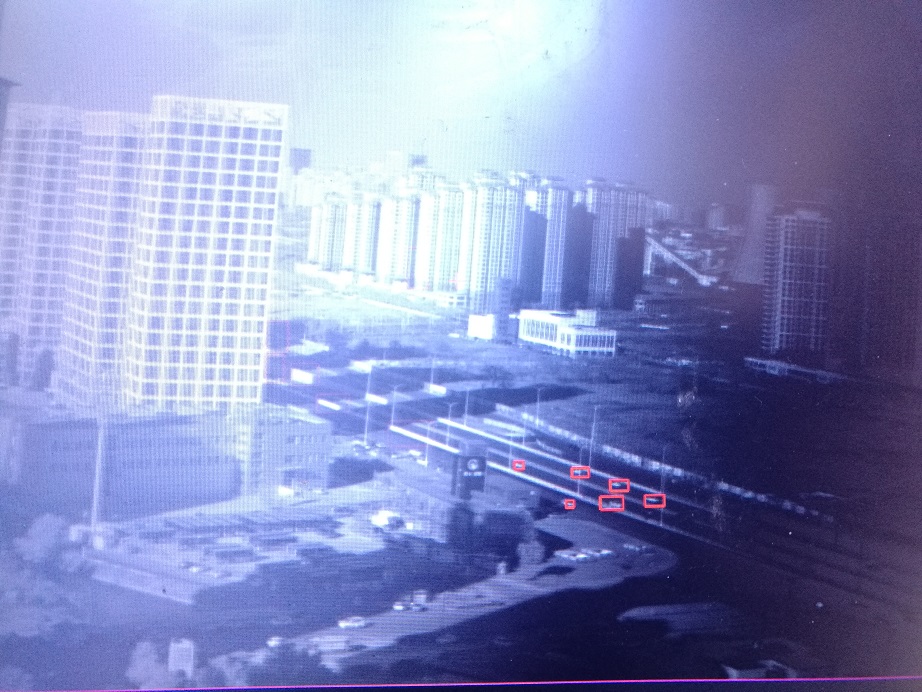

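Detection result:

Original software interface:

My own fault: I should not have used Chinese names in the project, because they cause a lot of problems when debugging.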

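// 动态背景目标探测Dlg.cpp : implementation file
//

#include "stdafx.h"
#include "动态背景目标探测.h"
#include "动态背景目标探测Dlg.h"
#include "afxdialogex.h"
#include <opencv2/opencv.hpp>
#include <opencv2/nonfree/nonfree.hpp>

using namespace cv;
using namespace std;

CString strFilePath;        // path of the video file
VideoCapture capture;       // video source
Mat image01, image02;       // previous frame and current frame
bool bExit, bGetTemplat;    // stop flag; set by a left click once a target has been picked
bool bFirst = true;         // true until the first frame pair has been processed
Point g_pt(-1, -1);         // last clicked point, in image coordinates
C动态背景目标探测Dlg *dlg;   // main dialog, used by the mouse callback
int picSize = 912;          // side length of the square region cropped from each frame

#ifdef _DEBUG
#define new DEBUG_NEW
#endif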

// C动态背景目标探测Dlg dialog

C动态背景目标探测Dlg::C动态背景目标探测Dlg(CWnd* pParent /*=NULL*/)
    : CDialogEx(C动态背景目标探测Dlg::IDD, pParent)
{
    m_hIcon = AfxGetApp()->LoadIcon(IDR_MAINFRAME);
}

void C动态背景目标探测Dlg::DoDataExchange(CDataExchange* pDX)
{
    CDialogEx::DoDataExchange(pDX);
}

BEGIN_MESSAGE_MAP(C动态背景目标探测Dlg, CDialogEx)

ON_WM_PAINT()

ON_WM_QUERYDRAGICON()

ON_BN_CLICKED(IDC_CHOSEFILE, &C动态背景目标探测Dlg::OnBnClickedChosefile)

ON_BN_CLICKED(IDC_TARGET, &C动态背景目标探测Dlg::OnBnClickedTarget)

ON_BN_CLICKED(IDC_STOP, &C动态背景目标探测Dlg::OnBnClickedStop)

END_MESSAGE_MAP()

// C动态背景目标探测Dlg message handlers

BOOL C动态背景目标探测Dlg::OnInitDialog()
{
    CDialogEx::OnInitDialog();

    // Set the icon for this dialog. The framework does this automatically
    // when the application's main window is not a dialog.
    SetIcon(m_hIcon, TRUE);   // set the big icon
    SetIcon(m_hIcon, FALSE);  // set the small icon

    // TODO: add extra initialization here
    HWND hWnd;
    HWND hParent;

    // Embed the HighGUI window "pic" in the IDC_PIC control and hide its original frame
    namedWindow("pic", WINDOW_AUTOSIZE);
    hWnd = (HWND)cvGetWindowHandle("pic");
    hParent = ::GetParent(hWnd);
    ::SetParent(hWnd, GetDlgItem(IDC_PIC)->m_hWnd);
    ::ShowWindow(hParent, SW_HIDE);

    // Same for the "diff" window and the IDC_DIFF control
    namedWindow("diff", WINDOW_AUTOSIZE);
    hWnd = (HWND)cvGetWindowHandle("diff");
    hParent = ::GetParent(hWnd);
    ::SetParent(hWnd, GetDlgItem(IDC_DIFF)->m_hWnd);
    ::ShowWindow(hParent, SW_HIDE);

    SetDlgItemInt(IDC_THRESHOLD, 21);  // default dilation kernel size, read back in ThreadFunc
    dlg = (C动态背景目标探测Dlg*)theApp.m_pMainWnd;
    bExit = false;
    bGetTemplat = false;

    return TRUE;  // return TRUE unless you set the focus to a control
}

// If you add a minimize button to the dialog, you need the code below
// to draw the icon. For MFC applications using the document/view model,
// the framework does this automatically.

void C动态背景目标探测Dlg::OnPaint()
{
    if (IsIconic())
    {
        CPaintDC dc(this);  // device context for painting

        SendMessage(WM_ICONERASEBKGND, reinterpret_cast<WPARAM>(dc.GetSafeHdc()), 0);

        // Center the icon in the client rectangle
        int cxIcon = GetSystemMetrics(SM_CXICON);
        int cyIcon = GetSystemMetrics(SM_CYICON);
        CRect rect;
        GetClientRect(&rect);
        int x = (rect.Width() - cxIcon + 1) / 2;
        int y = (rect.Height() - cyIcon + 1) / 2;

        // Draw the icon
        dc.DrawIcon(x, y, m_hIcon);
    }
    else
    {
        CDialogEx::OnPaint();
    }
}

// The system calls this function to obtain the cursor to display
// while the user drags the minimized window.
HCURSOR C动态背景目标探测Dlg::OnQueryDragIcon()
{
    return static_cast<HCURSOR>(m_hIcon);
}

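void C动态背景目标探测Dlg::OnBnClickedChosefile()
{
    // File-type filter (format: description|pattern|...||, with no leading '|')
    TCHAR szFilter[] = _T("All Files (*.*)|*.*||");
    // Construct the open-file dialog
    CFileDialog fileDlg(TRUE, _T(""), NULL, 0, szFilter, this);
    // Show the open-file dialog
    if (IDOK == fileDlg.DoModal())
    {
        // "Open" was clicked: remember the selected path and open it as the video source
        strFilePath = fileDlg.GetPathName();
        capture.open(string(strFilePath));  // CString -> std::string compiles only in an MBCS (non-Unicode) build
        bExit = false;
        bFirst = true;
    }
}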

// Comparator for sorting contours by area in descending order
bool biggerSort(const vector<Point>& v1, const vector<Point>& v2)
{
    return contourArea(v1) > contourArea(v2);
}
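The contour sort is commented out in `ThreadFunc` because it was too slow. One likely contributor is that a comparator recomputes both areas on every comparison. The sketch below is one way to avoid that: compute each area once, then sort indices by the cached value. It deliberately avoids OpenCV so it can be compiled on its own; `Pt`, `Contour`, `polygonArea` and `orderByAreaDesc` are hypothetical names, and the shoelace formula stands in for `contourArea`.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct Pt { int x; int y; };          // hypothetical stand-in for cv::Point
typedef std::vector<Pt> Contour;      // hypothetical stand-in for vector<Point>

// Area of a closed polygon by the shoelace formula.
double polygonArea(const Contour& c)
{
    double twice = 0.0;
    for (std::size_t i = 0; i < c.size(); ++i) {
        const Pt& a = c[i];
        const Pt& b = c[(i + 1) % c.size()];
        twice += static_cast<double>(a.x) * b.y - static_cast<double>(b.x) * a.y;
    }
    return std::fabs(twice) / 2.0;
}

// Returns contour indices ordered by descending area.
// Each area is computed exactly once and cached next to its index.
std::vector<std::size_t> orderByAreaDesc(const std::vector<Contour>& contours)
{
    typedef std::pair<double, std::size_t> Keyed;
    std::vector<Keyed> keyed;
    keyed.reserve(contours.size());
    for (std::size_t i = 0; i < contours.size(); ++i)
        keyed.push_back(Keyed(polygonArea(contours[i]), i));

    // stable_sort keeps the original order of contours with equal area
    std::stable_sort(keyed.begin(), keyed.end(),
                     [](const Keyed& l, const Keyed& r) { return l.first > r.first; });

    std::vector<std::size_t> order;
    order.reserve(keyed.size());
    for (std::size_t i = 0; i < keyed.size(); ++i)
        order.push_back(keyed[i].second);
    return order;
}
```

If only the largest contour is needed, as the commented-out `contourArea(contours[0])/10` test suggests, a single linear scan for the maximum area would be cheaper still.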

// Mouse callback of the "pic" window
void on_MouseHandle(int event, int x, int y, int flags, void* param)
{
    Mat& image = *(cv::Mat*) param;
    switch (event)
    {
    // Left button pressed: convert the click to image coordinates and select a target
    case EVENT_LBUTTONDOWN:
        {
            CString str;
            CRect rect;
            dlg->GetDlgItem(IDC_PIC)->GetClientRect(&rect);
            // Scale from the displayed size to the picSize x picSize image
            g_pt.x = int(x * picSize / rect.right);
            g_pt.y = int(y * picSize / rect.bottom);
            str.Format("%d,%d", g_pt.x, g_pt.y);
            dlg->SetDlgItemText(IDC_POS, str);
            bGetTemplat = true;
        }
        break;
    // Right button pressed: go back to detection
    case EVENT_RBUTTONDOWN:
        bGetTemplat = false;
        break;
    default:
        break;
    }
}
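The mouse handler rescales the click from the size of the display control to the size of the processed image. A minimal standalone sketch of that mapping follows; `ImagePoint` and `mapClickToImage` are hypothetical names, and the image width and height are passed separately as an assumption, whereas the handler uses `picSize` for both axes.

```cpp
#include <cassert>

struct ImagePoint { int x; int y; };   // hypothetical stand-in for cv::Point

// Map a click at (x, y) inside a viewW x viewH display area to the pixel
// coordinates of an imgW x imgH image that is shown stretched over that area.
ImagePoint mapClickToImage(int x, int y, int viewW, int viewH, int imgW, int imgH)
{
    assert(viewW > 0 && viewH > 0);    // a zero-sized control would divide by zero
    ImagePoint p;
    p.x = x * imgW / viewW;            // integer division truncates toward zero
    p.y = y * imgH / viewH;
    return p;
}
```

Passing the real image dimensions matters when a frame is too small to be cropped: `ThreadFunc` then keeps the whole frame, which is generally not `picSize` x `picSize`, so scaling by `picSize` would place the click at the wrong pixel.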

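// Otsu's method: returns the threshold that maximizes the between-class variance.
// Assumes img is an 8-bit, 3-channel BGR image.
int otsuThreshold(IplImage* img)
{
    int T = 0;                       // threshold
    int height = img->height;
    int width = img->width;
    int step = img->widthStep;
    int channels = img->nChannels;
    uchar* data = (uchar*)img->imageData;
    double gSum0;                    // gray-level sum of class 0 (levels <= i)
    double gSum1;                    // gray-level sum of class 1 (levels > i)
    double N0 = 0;                   // pixel count of class 0
    double N1 = 0;                   // pixel count of class 1
    double u0 = 0;                   // mean gray level of class 0
    double u1 = 0;                   // mean gray level of class 1
    double w0 = 0;                   // fraction of all pixels in class 0
    double w1 = 0;                   // fraction of all pixels in class 1
    double u = 0;                    // overall mean gray level
    double tempg = -1;               // best between-class variance so far
    double g = -1;                   // between-class variance
    double Histogram[256] = {0};     // gray-level histogram
    double N = width * height;       // total pixel count

    // Build the histogram
    for (int i = 0; i < height; i++)
    {
        for (int j = 0; j < width; j++)
        {
            double temp = data[i*step + j*3] * 0.114 + data[i*step + j*3 + 1] * 0.587 + data[i*step + j*3 + 2] * 0.299;
            temp = temp < 0 ? 0 : temp;
            temp = temp > 255 ? 255 : temp;
            Histogram[(int)temp]++;
        }
    }

    // Find the threshold
    for (int i = 0; i < 256; i++)
    {
        gSum0 = 0;
        gSum1 = 0;
        N0 += Histogram[i];
        N1 = N - N0;
        if (0 == N1) break;          // class 1 is empty: stop
        if (0 == N0) continue;       // class 0 is still empty: nothing to evaluate
        w0 = N0 / N;
        w1 = 1 - w0;
        for (int j = 0; j <= i; j++)
        {
            gSum0 += j * Histogram[j];
        }
        u0 = gSum0 / N0;
        for (int k = i + 1; k < 256; k++)
        {
            gSum1 += k * Histogram[k];
        }
        u1 = gSum1 / N1;
        //u = w0*u0 + w1*u1;
        g = w0 * w1 * (u0 - u1) * (u0 - u1);
        if (tempg < g)
        {
            tempg = g;
            T = i;
        }
    }
    return T;
}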
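`otsuThreshold` rebuilds both class sums from scratch for every candidate threshold. The same search can be done in a single pass by keeping running sums. The sketch below assumes the 256-bin histogram has already been computed; `otsuFromHistogram` is a hypothetical name, not part of the original program or of OpenCV.

```cpp
#include <cassert>

// Otsu threshold from a 256-bin gray-level histogram, in one pass.
// Class 0 holds levels <= T, class 1 holds levels > T. On ties the
// lowest threshold wins, matching the strict comparison in otsuThreshold.
int otsuFromHistogram(const double hist[256])
{
    double total = 0.0;          // total pixel count
    double weightedTotal = 0.0;  // sum of level * count over all levels
    for (int i = 0; i < 256; ++i) {
        total += hist[i];
        weightedTotal += i * hist[i];
    }
    assert(total > 0.0);         // an empty histogram has no threshold

    double n0 = 0.0;             // running pixel count of class 0
    double sum0 = 0.0;           // running gray-level sum of class 0
    double best = -1.0;          // best between-class variance so far
    int T = 0;
    for (int i = 0; i < 256; ++i) {
        n0 += hist[i];
        sum0 += i * hist[i];
        const double n1 = total - n0;
        if (n0 == 0.0) continue; // class 0 still empty
        if (n1 == 0.0) break;    // class 1 empty from here on
        const double u0 = sum0 / n0;
        const double u1 = (weightedTotal - sum0) / n1;
        const double w0 = n0 / total;
        const double w1 = n1 / total;
        const double g = w0 * w1 * (u0 - u1) * (u0 - u1);
        if (g > best) {
            best = g;
            T = i;
        }
    }
    return T;
}
```

For a 256-level histogram the saving is small in absolute terms, so this is mainly a readability choice; the histogram loop over all pixels dominates the cost either way.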

// Worker thread: detection and click selection
UINT C动态背景目标探测Dlg::ThreadFunc(LPVOID pParam)
{
    C动态背景目标探测Dlg *dlg = (C动态背景目标探测Dlg*)pParam;
    Mat image1, image2;
    Mat imagetemp1, imagetemp2;
    Mat temp, image02temp;
    vector<Rect> target;
    Mat imageGray1, imageGray2;
    namedWindow("pic");
    setMouseCallback("pic", on_MouseHandle, (void*)&image02temp);
    FastFeatureDetector fast(130);             // FAST keypoint detector
    //SurfFeatureDetector surfDetector(4000);  // SURF keypoint detector
    vector<KeyPoint> keyPoint1, keyPoint2;     // keypoints detected in the two frames
    SurfDescriptorExtractor SurfDescriptor;    // SURF descriptor extractor
    Mat imageDesc1, imageDesc2;

    while (!bExit)
    {
        if (!bGetTemplat)
        {
            target.clear();
            if (bFirst)  // first pass: both frames have to be processed
            {
                // Previous frame
                capture >> imagetemp1;
                if (imagetemp1.empty())
                {
                    bExit = true;
                    bFirst = true;
                    bGetTemplat = false;
                    break;
                }
                // For my own needs, crop a picSize*picSize region out of the original video
                if (imagetemp1.cols > picSize + 200 && imagetemp1.rows > picSize)
                    image01 = imagetemp1(Rect(200, 0, picSize, picSize)).clone();  // clone so the crop owns its pixels
                else
                    image01 = imagetemp1.clone();

                // Next frame (three reads, so two frames are skipped)
                capture >> imagetemp2;
                capture >> imagetemp2;
                capture >> imagetemp2;
                if (imagetemp2.empty())
                {
                    bExit = true;
                    bFirst = true;
                    bGetTemplat = false;
                    break;
                }
                // For my own needs, crop a picSize*picSize region out of the original video
                if (imagetemp2.cols > picSize + 200 && imagetemp2.rows > picSize)
                    image02 = imagetemp2(Rect(200, 0, picSize, picSize)).clone();
                else
                    image02 = imagetemp2.clone();

                // Convert to grayscale (VideoCapture delivers BGR frames)
                cvtColor(image01, image1, CV_BGR2GRAY);
                cvtColor(image02, image2, CV_BGR2GRAY);
                // Detect keypoints
                fast.detect(image1, keyPoint1);  // FAST keypoint detection
                fast.detect(image2, keyPoint2);  // FAST keypoint detection
                //surfDetector.detect(image1,keyPoint1);  // SURF keypoint detection
                //surfDetector.detect(image2,keyPoint2);  // SURF keypoint detection
                // Describe the keypoints in preparation for matching
                SurfDescriptor.compute(image1, keyPoint1, imageDesc1);  // SURF description
                SurfDescriptor.compute(image2, keyPoint2, imageDesc2);  // SURF description
                bFirst = false;
            }
            else  // later passes: only one new frame needs keypoints; the previous result becomes the first frame
            {
                image01 = image02.clone();
                imageDesc1 = imageDesc2.clone();
                keyPoint1 = keyPoint2;

                // Next frame (three reads, so two frames are skipped)
                capture >> imagetemp2;
                capture >> imagetemp2;
                capture >> imagetemp2;
                if (imagetemp2.empty())
                {
                    bExit = true;
                    bFirst = true;
                    bGetTemplat = false;
                    break;
                }
                // For my own needs, crop a picSize*picSize region out of the original video
                if (imagetemp2.cols > picSize + 200 && imagetemp2.rows > picSize)
                    image02 = imagetemp2(Rect(200, 0, picSize, picSize)).clone();
                else
                    image02 = imagetemp2.clone();
                // Convert to grayscale
                cvtColor(image02, image2, CV_BGR2GRAY);
            }

            double time0 = static_cast<double>(getTickCount());  // start timing; what is timed is the FAST-SURF registration
            // Detect keypoints
            fast.detect(image2, keyPoint2);
            //surfDetector.detect(image2,keyPoint2);
            // Describe the keypoints in preparation for matching
            SurfDescriptor.compute(image2, keyPoint2, imageDesc2);

            // Match the keypoints and keep the best pairs
            FlannBasedMatcher matcher;
            vector<DMatch> matchePoints;
            matcher.match(imageDesc1, imageDesc2, matchePoints, Mat());
            sort(matchePoints.begin(), matchePoints.end());  // sort matches by ascending distance
            vector<Point2f> imagePoints1, imagePoints2;
            if (matchePoints.size() < 50)  // require a minimum number of matches
                continue;
            // Discard likely mismatches: keep only the better half
            for (int i = 0; i < matchePoints.size() * 0.5; i++)
            {
                imagePoints1.push_back(keyPoint1[matchePoints[i].queryIdx].pt);
                imagePoints2.push_back(keyPoint2[matchePoints[i].trainIdx].pt);
            }

            // Homography (3*3 projective mapping) from image 1 to image 2
            Mat homo = findHomography(imagePoints1, imagePoints2, CV_RANSAC);
            // getPerspectiveTransform can also produce a perspective matrix, but it
            // takes exactly 4 point pairs and gives slightly worse results
            //Mat homo=getPerspectiveTransform(imagePoints1,imagePoints2);
            //cout<<"Homography:\\n"<<homo<<endl<<endl;  // print the mapping matrix

            // Image registration
            Mat imageTransform1, imgpeizhun, imgerzhi;
            warpPerspective(image01, imageTransform1, homo, Size(image02.cols, image02.rows));
            //imshow("after perspective warp",imageTransform1);
            absdiff(image02, imageTransform1, imgpeizhun);
            //imshow("registered diff", imgpeizhun);
            IplImage iplDiff = imgpeizhun;    // header only, no copy; avoids taking the address of a temporary
            int t = otsuThreshold(&iplDiff);  // Otsu threshold for binarizing the difference image
            threshold(imgpeizhun, imgerzhi, t, 255.0, CV_THRESH_BINARY);
            //imshow("registered binary", imgerzhi);
            image02temp = image02.clone();
            cvtColor(imgerzhi, temp, CV_BGR2GRAY);

            // Find connected regions
            Mat se = getStructuringElement(MORPH_RECT, Size(3,3));
            morphologyEx(temp, temp, MORPH_OPEN, se);
            int dialate_size = dlg->GetDlgItemInt(IDC_THRESHOLD);
            Mat se2 = getStructuringElement(MORPH_RECT, Size(dialate_size,dialate_size));
            morphologyEx(temp, temp, MORPH_DILATE, se2);
            vector<vector<Point>> contours;
            findContours(temp, contours, RETR_EXTERNAL, CHAIN_APPROX_NONE);
            // Skip the frame if there are no contours
            if (contours.size() < 1)
                continue;
            //std::sort(contours.begin(), contours.end(), biggerSort);  // sorting by contour size, commented out because it is costly

            // Registration leaves the frame borders inconsistent, so targets are only
            // accepted between (1 - m_BiLi) and m_BiLi of the width and height
            float m_BiLi = 0.8f;
            for (int k = 0; k < contours.size(); k++)
            {
                Rect bomen = boundingRect(contours[k]);
                // Ignore invalid border information introduced by registration
                if (bomen.x > image02temp.cols * (1 - m_BiLi) && bomen.y > image02temp.rows * (1 - m_BiLi)
                    && bomen.x + bomen.width < image02temp.cols * m_BiLi && bomen.y + bomen.height < image02temp.rows * m_BiLi
                    /*&& contourArea(contours[k]) > contourArea(contours[0])/10*/
                    && contourArea(contours[k]) > 900 && contourArea(contours[k]) < 30000)
                {
                    rectangle(image02temp, bomen, Scalar(0,0,255), 4, 8, 0);
                    target.push_back(bomen);
                }
            }

            // Output the frame rate
            time0 = ((double)getTickCount() - time0) / getTickFrequency();
            dlg->SetDlgItemInt(IDC_EDIT_FRE, (int)(1/time0), 1);

            // Show the images
            CRect rect;
            dlg->GetDlgItem(IDC_DIFF)->GetClientRect(&rect);
            resize(temp, temp, cv::Size(rect.Width(), rect.Height()));
            imshow("diff", temp);
            dlg->GetDlgItem(IDC_PIC)->GetClientRect(&rect);
            resize(image02temp, image02temp, cv::Size(rect.Width(), rect.Height()));
            imshow("pic", image02temp);
            waitKey(20);
        }
        else  // mouse click: select a target, which could then be tracked (tracking is not implemented here, only selection)
        {
            int minIndex = -1;  // stays -1 when no target was detected
            int minDis = 9999999;
            int aimDis;
            // Find the target whose center is closest to the clicked point
            for (int i = 0; i < target.size(); i++)
            {
                int dx = target[i].x + target[i].width/2 - g_pt.x;
                int dy = target[i].y + target[i].height/2 - g_pt.y;
                aimDis = (int)sqrt((double)(dx*dx + dy*dy));
                if (aimDis < minDis)
                {
                    minDis = aimDis;
                    minIndex = i;
                }
            }
            // Draw the selected target
            if (minIndex >= 0)
                rectangle(image02, target[minIndex], Scalar(0,255,255), 4, 8, 0);

            // Show the selected target
            CRect rect;
            Mat image02show;
            dlg->GetDlgItem(IDC_PIC)->GetClientRect(&rect);
            resize(image02, image02show, cv::Size(rect.Width(), rect.Height()));
            imshow("pic", image02show);
            waitKey(20);
        }
    }
    return 0;
}
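The click-selection branch of `ThreadFunc` picks the detected box whose center is nearest to the clicked point. A minimal standalone sketch of that search follows, returning -1 when nothing has been detected, so the caller can skip drawing instead of indexing into an empty list. `Box` and `nearestBox` are hypothetical names, and comparing squared distances instead of calling `sqrt` is a substitution made here, not the original code.

```cpp
#include <cstddef>
#include <vector>

struct Box { int x, y, width, height; };   // hypothetical stand-in for cv::Rect

// Index of the box whose center is closest to (px, py), or -1 if boxes is empty.
// Squared distances are compared, which gives the same ordering as true distances.
int nearestBox(const std::vector<Box>& boxes, int px, int py)
{
    int best = -1;
    long long bestDist2 = 0;
    for (std::size_t i = 0; i < boxes.size(); ++i) {
        const long long dx = boxes[i].x + boxes[i].width / 2 - px;
        const long long dy = boxes[i].y + boxes[i].height / 2 - py;
        const long long dist2 = dx * dx + dy * dy;
        if (best < 0 || dist2 < bestDist2) {   // strict '<' keeps the first box on ties
            best = static_cast<int>(i);
            bestDist2 = dist2;
        }
    }
    return best;
}
```

A caller would test the result before drawing, for example `int k = nearestBox(boxes, clickX, clickY); if (k >= 0) { /* highlight boxes[k] */ }`.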

void C动态背景目标探测Dlg::OnBnClickedTarget()
{
    AfxBeginThread(ThreadFunc, this);  // start the worker thread
}

void C动态背景目标探测Dlg::OnBnClickedStop()
{
    capture.release();
    bExit = true;
    bGetTemplat = false;
}

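// Variant of the detection calls in ThreadFunc: read the FAST threshold from the
// IDC_EDIT_FAST edit box instead of using the detector with the hard-coded threshold
FAST(image1, keyPoint1, dlg->GetDlgItemInt(IDC_EDIT_FAST));
FAST(image2, keyPoint2, dlg->GetDlgItemInt(IDC_EDIT_FAST));
//fast.detect(image1,keyPoint1);  // FAST keypoint detection
//fast.detect(image2,keyPoint2);  // FAST keypoint detection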
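The candidate test on `bomen` inside `ThreadFunc` combines a border check with an area check. With `m_BiLi = 0.8` the box must lie between 20% and 80% of the width and height, which is the central 60% of the frame on each axis. The sketch below restates that test as a standalone predicate; `Box` and `isCandidate` are hypothetical names, and the contour area is passed in as a plain number because the sketch does not use OpenCV.

```cpp
struct Box { int x, y, width, height; };   // hypothetical stand-in for cv::Rect

// True when the box lies strictly inside the band (1 - keep) .. keep of the
// image on both axes and its contour area lies strictly between the limits.
bool isCandidate(const Box& b, int imgW, int imgH, double keep,
                 double area, double minArea, double maxArea)
{
    const bool insideX = b.x > imgW * (1.0 - keep) && b.x + b.width < imgW * keep;
    const bool insideY = b.y > imgH * (1.0 - keep) && b.y + b.height < imgH * keep;
    const bool areaOk = area > minArea && area < maxArea;
    return insideX && insideY && areaOk;
}
```

With the values from the listing (a 912 x 912 frame, `keep` of 0.8, area limits 900 and 30000), a box has to start after pixel 182 and end before pixel 730 on each axis to be kept.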
