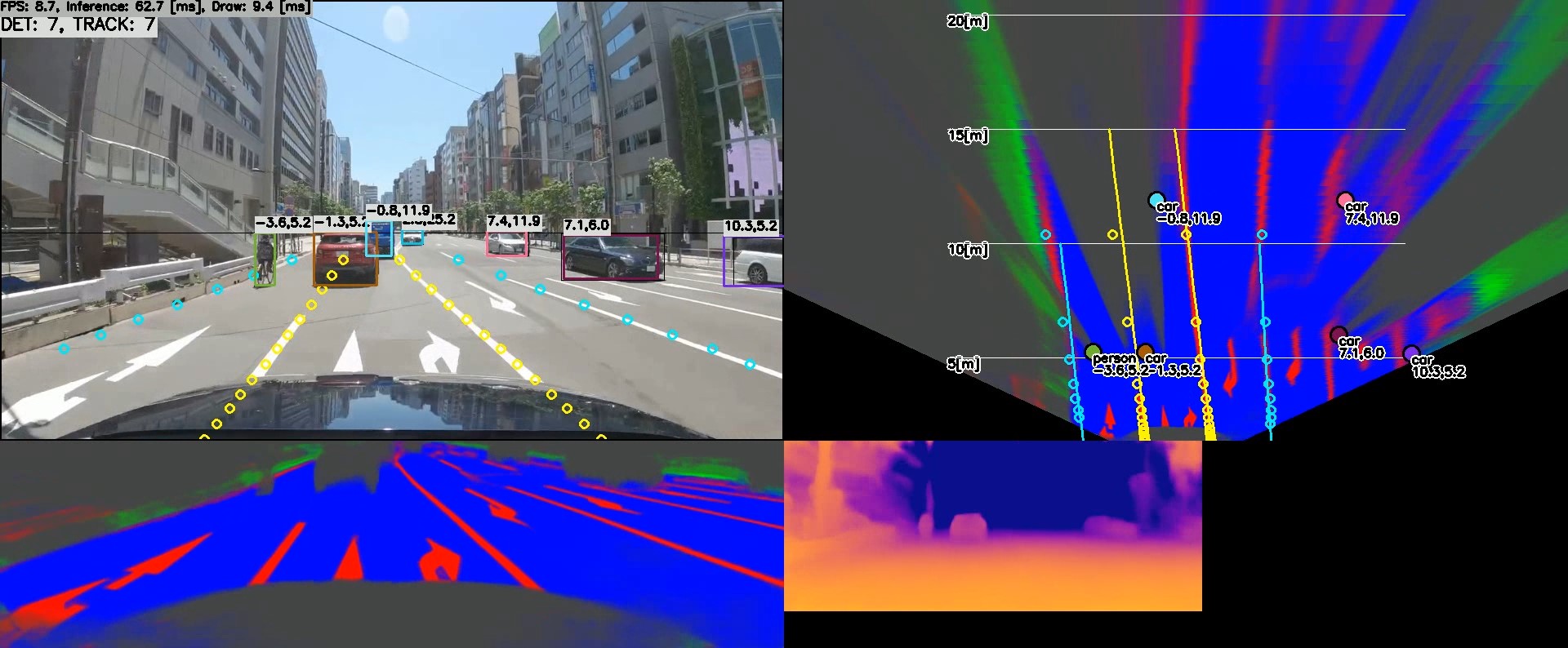

全景驾驶感知网络YOLOP部署与实现(交通目标检测可驾驶区域分割车道线检测)

Posted 踟蹰横渡口,彳亍上滩舟。

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了全景驾驶感知网络YOLOP部署与实现(交通目标检测可驾驶区域分割车道线检测)相关的知识,希望对你有一定的参考价值。

全景驾驶感知网络YOLOP部署与实现(交通目标检测、可驾驶区域分割、车道线检测)

项目下载地址

包含C++和Python两种版本的程序实现:下载地址

YOLOP开源项目:

https://github.com/hustvl/YOLOP

ONNX导出:

import torch

import torch.nn as nn

from lib.models.common import Conv, SPP, Bottleneck, BottleneckCSP, Focus, Concat, Detect, SharpenConv

from torch.nn import Upsample

import cv2

# The lane line and the driving area segment branches without share information with each other and without link

YOLOP = [

[24, 33, 42], # Det_out_idx, Da_Segout_idx, LL_Segout_idx

[-1, Focus, [3, 32, 3]], # 0

[-1, Conv, [32, 64, 3, 2]], # 1

[-1, BottleneckCSP, [64, 64, 1]], # 2

[-1, Conv, [64, 128, 3, 2]], # 3

[-1, BottleneckCSP, [128, 128, 3]], # 4

[-1, Conv, [128, 256, 3, 2]], # 5

[-1, BottleneckCSP, [256, 256, 3]], # 6

[-1, Conv, [256, 512, 3, 2]], # 7

[-1, SPP, [512, 512, [5, 9, 13]]], # 8

[-1, BottleneckCSP, [512, 512, 1, False]], # 9

[-1, Conv, [512, 256, 1, 1]], # 10

[-1, Upsample, [None, 2, 'nearest']], # 11

[[-1, 6], Concat, [1]], # 12

[-1, BottleneckCSP, [512, 256, 1, False]], # 13

[-1, Conv, [256, 128, 1, 1]], # 14

[-1, Upsample, [None, 2, 'nearest']], # 15

[[-1, 4], Concat, [1]], # 16 #Encoder

[-1, BottleneckCSP, [256, 128, 1, False]], # 17

[-1, Conv, [128, 128, 3, 2]], # 18

[[-1, 14], Concat, [1]], # 19

[-1, BottleneckCSP, [256, 256, 1, False]], # 20

[-1, Conv, [256, 256, 3, 2]], # 21

[[-1, 10], Concat, [1]], # 22

[-1, BottleneckCSP, [512, 512, 1, False]], # 23

[[17, 20, 23], Detect,

[1, [[3, 9, 5, 11, 4, 20], [7, 18, 6, 39, 12, 31], [19, 50, 38, 81, 68, 157]], [128, 256, 512]]],

# Detection head 24

[16, Conv, [256, 128, 3, 1]], # 25

[-1, Upsample, [None, 2, 'nearest']], # 26

[-1, BottleneckCSP, [128, 64, 1, False]], # 27

[-1, Conv, [64, 32, 3, 1]], # 28

[-1, Upsample, [None, 2, 'nearest']], # 29

[-1, Conv, [32, 16, 3, 1]], # 30

[-1, BottleneckCSP, [16, 8, 1, False]], # 31

[-1, Upsample, [None, 2, 'nearest']], # 32

[-1, Conv, [8, 2, 3, 1]], # 33 Driving area segmentation head

[16, Conv, [256, 128, 3, 1]], # 34

[-1, Upsample, [None, 2, 'nearest']], # 35

[-1, BottleneckCSP, [128, 64, 1, False]], # 36

[-1, Conv, [64, 32, 3, 1]], # 37

[-1, Upsample, [None, 2, 'nearest']], # 38

[-1, Conv, [32, 16, 3, 1]], # 39

[-1, BottleneckCSP, [16, 8, 1, False]], # 40

[-1, Upsample, [None, 2, 'nearest']], # 41

[-1, Conv, [8, 2, 3, 1]] # 42 Lane line segmentation head

]

class MCnet(nn.Module):

def __init__(self, block_cfg):

super(MCnet, self).__init__()

layers, save = [], []

self.nc = 1

self.detector_index = -1

self.det_out_idx = block_cfg[0][0]

self.seg_out_idx = block_cfg[0][1:]

self.num_anchors = 3

self.num_outchannel = 5 + self.nc

# Build model

for i, (from_, block, args) in enumerate(block_cfg[1:]):

block = eval(block) if isinstance(block, str) else block # eval strings

if block is Detect:

self.detector_index = i

block_ = block(*args)

block_.index, block_.from_ = i, from_

layers.append(block_)

save.extend(x % i for x in ([from_] if isinstance(from_, int) else from_) if x != -1) # append to savelist

assert self.detector_index == block_cfg[0][0]

self.model, self.save = nn.Sequential(*layers), sorted(save)

self.names = [str(i) for i in range(self.nc)]

# set stride、anchor for detector

# Detector = self.model[self.detector_index] # detector

# if isinstance(Detector, Detect):

# s = 128 # 2x min stride

# # for x in self.forward(torch.zeros(1, 3, s, s)):

# # print (x.shape)

# with torch.no_grad():

# model_out = self.forward(torch.zeros(1, 3, s, s))

# detects, _, _ = model_out

# Detector.stride = torch.tensor([s / x.shape[-2] for x in detects]) # forward

# # print("stride"+str(Detector.stride ))

# Detector.anchors /= Detector.stride.view(-1, 1, 1) # Set the anchors for the corresponding scale

# check_anchor_order(Detector)

# self.stride = Detector.stride

def forward(self, x):

cache = []

out = []

det_out = None

for i, block in enumerate(self.model):

if block.from_ != -1:

x = cache[block.from_] if isinstance(block.from_, int) else [x if j == -1 else cache[j] for j in block.from_] # calculate concat detect

x = block(x)

if i in self.seg_out_idx: # save driving area segment result

# m = nn.Sigmoid()

# out.append(m(x))

out.append(torch.sigmoid(x))

if i == self.detector_index:

det_out = x

cache.append(x if block.index in self.save else None)

out[0] = out[0].view(2, 640, 640)

out[1] = out[1].view(2, 640, 640)

return det_out, out[0], out[1]

if __name__ == "__main__":

device = 'cuda' if torch.cuda.is_available() else 'cpu'

model = MCnet(YOLOP)

checkpoint = torch.load('weights/End-to-end.pth', map_location=device)

model.load_state_dict(checkpoint['state_dict'])

model.eval()

output_onnx = 'yolop.onnx'

inputs = torch.randn(1, 3, 640, 640)

# with torch.no_grad():

# output = model(inputs)

# print(output)

torch.onnx.export(model, inputs, output_onnx, verbose=False, opset_version=12, input_names=['images'], output_names=['det_out', 'drive_area_seg', 'lane_line_seg'])

print('convert', output_onnx, 'to onnx finish!!!')

try:

dnnnet = cv2.dnn.readNet(output_onnx)

print('read sucess')

except:

print('read failed')

以上是关于全景驾驶感知网络YOLOP部署与实现(交通目标检测可驾驶区域分割车道线检测)的主要内容,如果未能解决你的问题,请参考以下文章