pytorch.optimizer 优化算法

Posted 东东就是我

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了pytorch.optimizer 优化算法相关的知识,希望对你有一定的参考价值。

https://zhuanlan.zhihu.com/p/346205754

https://blog.csdn.net/google19890102/article/details/69942970

https://zhuanlan.zhihu.com/p/32626442

https://zhuanlan.zhihu.com/p/32230623

文章目录

1.优化器optimizer

import torch

import numpy as np

import warnings

warnings.filterwarnings('ignore') #ignore warnings

x = torch.linspace(-np.pi, np.pi, 2000)

y = torch.sin(x)

p = torch.tensor([1, 2, 3])

xx = x.unsqueeze(-1).pow(p)

model = torch.nn.Sequential(

torch.nn.Linear(3, 1),

torch.nn.Flatten(0, 1)

)

loss_fn = torch.nn.MSELoss(reduction='sum')

learning_rate = 1e-3

optimizer = torch.optim.RMSprop(model.parameters(), lr=learning_rate)

for t in range(1, 1001):

y_pred = model(xx)

loss = loss_fn(y_pred, y)

if t % 100 == 0:

print('No.: 5d, loss: :.6f'.format(t, loss.item()))

optimizer.zero_grad() # 梯度清零

loss.backward() # 反向传播计算梯度

optimizer.step() # 梯度下降法更新参数

1.1step才是更新参数

def step(self, closure=None):

"""Performs a single optimization step.

Arguments:

closure (callable, optional): A closure that reevaluates the model

and returns the loss.

"""

loss = None

if closure is not None:

with torch.enable_grad():

loss = closure()

for group in self.param_groups:

weight_decay = group['weight_decay']

momentum = group['momentum']

dampening = group['dampening']

nesterov = group['nesterov']

for p in group['params']:

if p.grad is None:

continue

d_p = p.grad #获取参数梯度

if weight_decay != 0:

d_p = d_p.add(p, alpha=weight_decay)

if momentum != 0:

param_state = self.state[p]

if 'momentum_buffer' not in param_state:

buf = param_state['momentum_buffer'] = torch.clone(d_p).detach()

else:

buf = param_state['momentum_buffer']

buf.mul_(momentum).add_(d_p, alpha=1 - dampening)

if nesterov:

d_p = d_p.add(buf, alpha=momentum)

else:

d_p = buf

p.add_(d_p, alpha=-group['lr']) #更新参数

return loss

1.0 常见优化器变量定义

模型参数

θ

\\theta

θ ,

目标函数

J

(

θ

)

J(\\theta)

J(θ)

每一个时刻t(假设是一个batch)的梯度

g

t

=

▽

θ

J

(

θ

)

g_t=\\bigtriangledown _\\thetaJ(\\theta)

gt=▽θJ(θ)

学习率为

η

\\eta

η

根据历史梯度的一阶动量

m

t

=

ϕ

(

g

1

,

g

2

,

.

.

.

g

t

)

m_t=\\phi (g_1,g_2,...g_t)

mt=ϕ(g1,g2,...gt)

根据历史梯度的二阶动量

v

t

=

ψ

(

g

1

,

g

2

,

.

.

.

g

t

)

v_t=\\psi (g_1,g_2,...g_t)

vt=ψ(g1,g2,...gt)

更新模型参数

θ

t

+

1

=

θ

t

−

1

v

t

+

ϵ

m

t

\\theta_t+1=\\theta_t-\\frac1\\sqrtv_t+\\epsilon m_t

θt+1=θt−vt+ϵ1mt ,

平滑项,防止分母为0

1.1 SGD

m

t

=

η

∗

g

t

m_t=\\eta*g_t

mt=η∗gt

v

t

=

I

2

v_t=I^2

vt=I2

SGD 的缺点在于收敛速度慢,可能在鞍点处震荡。并且,如何合理的选择学习率是 SGD 的一大难点。

1.2 Momentum 动量

m

t

=

γ

∗

m

t

−

1

+

η

∗

g

t

m_t=\\gamma*m_t-1+\\eta*g_t

mt=γ∗mt−1+η∗gt

也就是按照一定比例的前一次的变化方向和大小,一般比例是0.9

1.3SGD-M

带一阶动量的SGD

m

t

=

γ

∗

m

t

−

1

+

η

∗

g

t

m_t=\\gamma*m_t-1+\\eta*g_t

mt=γ∗mt−1+η∗gt

v

t

=

I

2

v_t=I^2

vt=I2

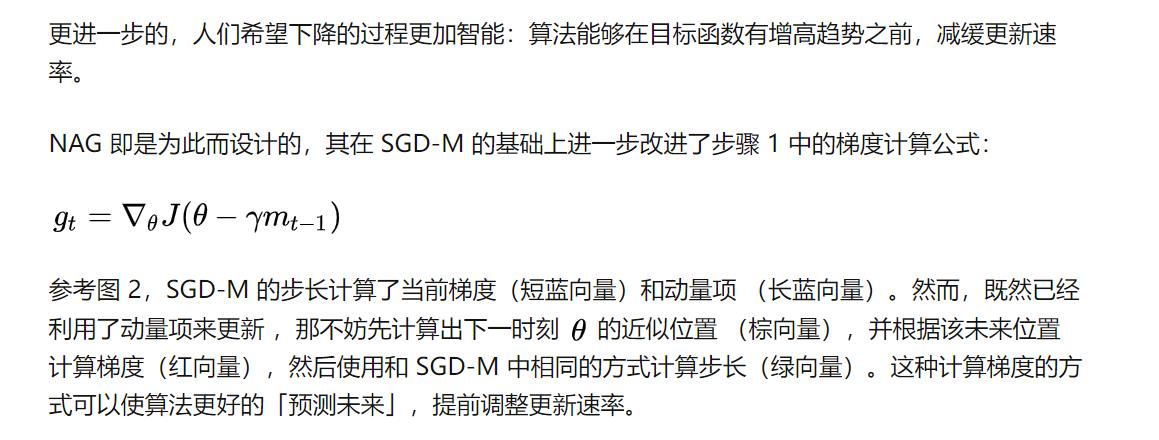

1.4Nesterov Accelerated Gradient

也就是在求解梯度的时候把参数改成参数经过动量变换后的参数的梯度

g

t

=

▽

θ

J

(

θ

−

γ

∗

m

t

−

1

)

g_t=\\bigtriangledown _\\thetaJ(\\theta-\\gamma*m_t-1)

gt=▽θJ(θ−γ∗mt−1)

m

t

=

γ

∗

m

t

−

1

+

η

∗

g

t

m_t=\\gamma*m_t-1+\\eta*g_t

mt=γ∗mt−1+η∗gt

v

t

=

I

2

v_t=I^2

vt=I2

1.5Adagrad

也就是根据参数改变的频率修改相应参数变化的快慢

v

t

=

∑

g

t

2

v_t=\\sum g_t^2

vt=∑gt2

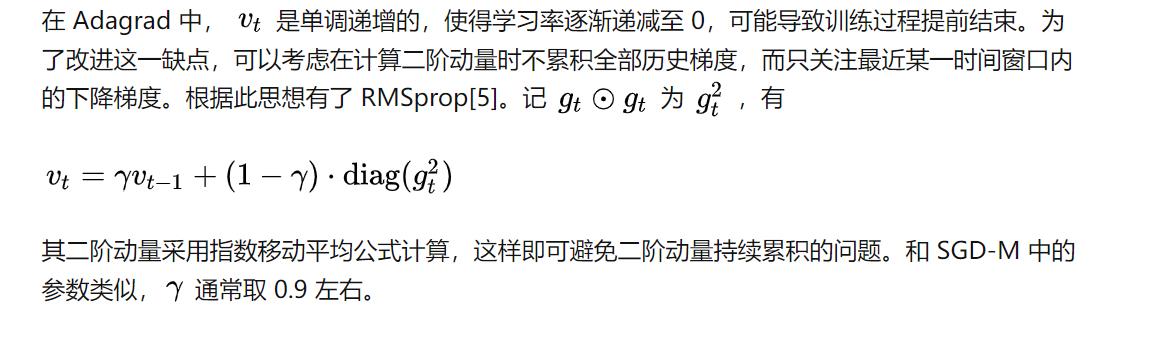

1.6RMSprop

v

t

=

γ

∗

v

t

−

1

+

(

1

−

γ

)

∗

g

t

2

v_t=\\gamma*v_t-1+(1-\\gamma)*g_t^2

vt=γ∗vt−1+以上是关于pytorch.optimizer 优化算法的主要内容,如果未能解决你的问题,请参考以下文章