Migrating Hive SQL to Spark 3.0: fixing Spark SQL errors such as "Cannot safely cast 'column': StringType to IntegerType"

Posted 宋朝林

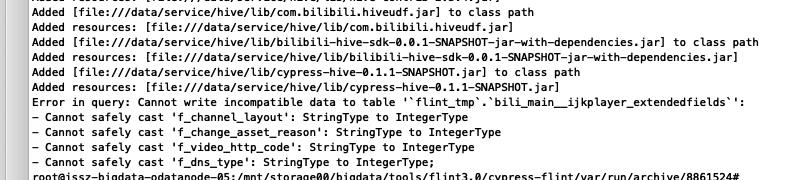

1. Problem

The statement runs fine as Hive SQL, but on Spark 3.0 it fails with the error shown in the screenshot above.

Looking at the Spark 3.0 source code, a new configuration entry has been added:

    val STORE_ASSIGNMENT_POLICY =
      buildConf("spark.sql.storeAssignmentPolicy")
        .doc("When inserting a value into a column with different data type, Spark will perform " +
          "type coercion. Currently, we support 3 policies for the type coercion rules: ANSI, " +
          "legacy and strict. With ANSI policy, Spark performs the type coercion as per ANSI SQL. " +
          "In practice, the behavior is mostly the same as PostgreSQL. " +
          "It disallows certain unreasonable type conversions such as converting " +
          "`string` to `int` or `double` to `boolean`. " +
          "With legacy policy, Spark allows the type coercion as long as it is a valid `Cast`, " +
          "which is very loose. e.g. converting `string` to `int` or `double` to `boolean` is " +
          "allowed. It is also the only behavior in Spark 2.x and it is compatible with Hive. " +
          "With strict policy, Spark doesn't allow any possible precision loss or data truncation " +
          "in type coercion, e.g. converting `double` to `int` or `decimal` to `double` is " +
          "not allowed."
        )
        .stringConf
        .transform(_.toUpperCase(Locale.ROOT))
        .checkValues(StoreAssignmentPolicy.values.map(_.toString))
        .createWithDefault(StoreAssignmentPolicy.ANSI.toString)

The setting accepts three values:

    object StoreAssignmentPolicy extends Enumeration {
      val ANSI, LEGACY, STRICT = Value
    }

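With the ANSI policy (the default in Spark 3.0), Spark performs type coercion as specified by ANSI SQL. In practice the behavior is mostly the same as PostgreSQL.

It disallows certain unreasonable type conversions, such as `string` to `int` or `double` to `boolean`. This is why inserting a string value into an integer column now fails.

With the LEGACY policy, Spark allows the type coercion as long as it is a valid `Cast`, which is very loose. This is the only behavior in Spark 2.x, and it is compatible with Hive.

With the STRICT policy, Spark does not allow any possible precision loss or data truncation, for example `double` to `int` or `decimal` to `double`.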
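To see the difference between the policies, the following is a minimal sketch rather than the original author's code. It assumes a local Spark 3.x session with the Spark SQL dependency on the classpath; the table name `demo_target` and the object and method names are hypothetical. It tries the same string-to-int insert under ANSI and LEGACY and reports whether Spark accepts it.

```scala
import org.apache.spark.sql.{AnalysisException, SparkSession}

object StoreAssignmentPolicyDemo {
  // Tries to insert a STRING value into an INT column under the given policy.
  // Returns true if Spark accepts the insert, false if analysis rejects it.
  def insertStringIntoInt(spark: SparkSession, policy: String): Boolean = {
    spark.conf.set("spark.sql.storeAssignmentPolicy", policy)
    spark.sql("DROP TABLE IF EXISTS demo_target") // hypothetical table name
    spark.sql("CREATE TABLE demo_target (id INT) USING parquet")
    try {
      spark.sql("INSERT INTO demo_target SELECT '42' AS id")
      true
    } catch {
      case e: AnalysisException =>
        // Under ANSI this is where the "Cannot safely cast" error surfaces.
        println(s"[$policy] rejected: ${e.getMessage}")
        false
    }
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("store-assignment-policy-demo")
      .master("local[*]") // assumption: local run for illustration only
      .getOrCreate()

    Seq("ANSI", "LEGACY").foreach { policy =>
      println(s"$policy accepted: ${insertStringIntoInt(spark, policy)}")
    }

    spark.sql("DROP TABLE IF EXISTS demo_target") // clean up the demo table
    spark.stop()
  }
}
```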

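So we add the following setting to restore the Spark 2.x / Hive-compatible behavior:

spark.sql.storeAssignmentPolicy=LEGACY

After that, the job runs normally.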
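One way to apply this setting from application code is sketched below. This is an illustration under assumptions, not the only option: the application name, the object name `LegacyPolicySession`, and the `local[*]` master are hypothetical. It sets the policy when the session is built and shows that the same key can also be set per session with a SQL `SET` statement.

```scala
import org.apache.spark.sql.SparkSession

object LegacyPolicySession {
  // Builds a session with the legacy store-assignment policy set at startup.
  def build(): SparkSession =
    SparkSession.builder()
      .appName("hive-sql-migration") // hypothetical application name
      .master("local[*]")            // assumption: local run for illustration only
      .config("spark.sql.storeAssignmentPolicy", "LEGACY")
      .getOrCreate()

  def main(args: Array[String]): Unit = {
    val spark = build()

    // The same key can be changed for the current session from SQL.
    spark.sql("SET spark.sql.storeAssignmentPolicy=LEGACY")

    // Print the effective value to confirm the setting took effect.
    println(spark.conf.get("spark.sql.storeAssignmentPolicy"))

    spark.stop()
  }
}
```

When the job is launched from the command line, the same key can be passed as `--conf spark.sql.storeAssignmentPolicy=LEGACY` to `spark-submit` or `spark-sql`.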
