Environment Series: Installing and Deploying DolphinScheduler 1.2.0-release

1 Configure the JDK

JDK on Baidu Netdisk: https://pan.baidu.com/s/1og3mfefJrwl1QGZGZDZ8Sw Extraction code: t6l1

# Check for installed Java packages

rpm -qa | grep java

# Remove an existing package (replace xxx with a package name from the list above)

rpm -e --nodeps xxx


Upload oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm to every node and install it

rpm -ivh oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm

Edit the profile

vim /etc/profile

# Add the following lines:

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

Reload the profile

source /etc/profile

Verify

java -version

javac -version
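To verify from a script instead of by eye, the sketch below parses the first line that `java -version` prints (to stderr) and accepts only Java 8. `java_major_version` and `require_java8` are hypothetical helper names, not part of the JDK or DolphinScheduler.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the major version for a Java version string,
# handling the old scheme ("1.8.0_181" -> 8) and the new one ("11.0.2" -> 11).
java_major_version() {
  local v="$1"
  case "$v" in
    1.*) v="${v#1.}"; echo "${v%%.*}" ;;
    *)   echo "${v%%[._]*}" ;;
  esac
}

# Hypothetical helper: read `java -version` output on stdin and succeed only
# if the first line reports Java 8, e.g.: java version "1.8.0_181"
require_java8() {
  local line ver
  read -r line
  ver="${line#*\"}"   # strip up to and including the first double quote
  ver="${ver%%\"*}"   # strip from the closing double quote onwards
  [ "$(java_major_version "$ver")" = "8" ]
}

# Usage (java -version writes to stderr, hence 2>&1):
#   java -version 2>&1 | require_java8 && echo "JDK 8 OK"
```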

2 Create the deployment user

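# Create the deployment user (userdel -r first removes any existing user of that name, including its home directory)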

userdel -r dolphinscheduler

useradd dolphinscheduler && echo dolphinscheduler | passwd --stdin dolphinscheduler

# Grant sudo privileges

chmod 640 /etc/sudoers

vim /etc/sudoers

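# Around line 100, below the root entry, add the following line (replace dolphinscheduler if your deployment user has a different name)

dolphinscheduler ALL=(ALL) NOPASSWD: ALL

# Also comment out the Defaults requiretty line if it exists; if it does not, skip this step

#Defaults requiretty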
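Instead of editing /etc/sudoers in place, a common alternative is a drop-in file under /etc/sudoers.d that is syntax-checked with `visudo -cf` before it takes effect. This is a sketch that assumes your /etc/sudoers contains `#includedir /etc/sudoers.d` (the CentOS 7 default); `sudoers_rule` and `install_sudoers_rule` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the sudoers rule that gives a user
# passwordless sudo (the corrected form of the rule above).
sudoers_rule() {
  local user="$1"
  if [ -z "$user" ]; then
    echo "usage: sudoers_rule <user>" >&2
    return 2
  fi
  printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$user"
}

# Hypothetical helper (run as root): write the rule to a temporary file,
# check its syntax with visudo, and only then install it under
# /etc/sudoers.d with the usual 0440 mode.
install_sudoers_rule() {
  local user="$1" tmp rc=0
  tmp="$(mktemp)" || return 1
  sudoers_rule "$user" > "$tmp" || { rm -f "$tmp"; return 2; }
  if visudo -cf "$tmp"; then
    install -m 0440 "$tmp" "/etc/sudoers.d/$user" || rc=1
  else
    echo "sudoers syntax check failed; nothing installed" >&2
    rc=1
  fi
  rm -f "$tmp"
  return "$rc"
}

# Usage: install_sudoers_rule dolphinscheduler
```

Because visudo validates the file before it is installed, a typo cannot lock the deployment user (or root) out of sudo.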

3 Configure passwordless SSH for the deployment user

On all nodes:

su dolphinscheduler

# Generate a key pair (public and private key); press Enter three times to accept the defaults

ssh-keygen -t rsa

# View the public key

cat ~/.ssh/id_rsa.pub

# Write the public key to ~/.ssh/authorized_keys

cat ~/.ssh/id_rsa.pub > ~/.ssh/authorized_keys

chmod 600 ~/.ssh/authorized_keys

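On the master node:

# On the master node, fetch each slave node's public key and append it to authorized_keys (you will be asked for the password; check the file afterwards)

ssh <slave_node_ip> 'cat ~/.ssh/id_rsa.pub' >> ~/.ssh/authorized_keys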

cat ~/.ssh/authorized_keys

# Copy the combined authorized_keys from the master node to each slave node

scp ~/.ssh/authorized_keys dolphinscheduler@<slave_node_ip>:~/.ssh/authorized_keys

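SSH from every node to every other node once to confirm that no password is requested (this also records each host key)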

ssh dolphinscheduler@172.xx.xx.xxx

ssh dolphinscheduler@172.xx.xx.xxx
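Once the host keys have been accepted by the manual logins above, the check can be repeated non-interactively. The sketch below relies on ssh's `BatchMode=yes`, which makes ssh fail instead of prompting for a password (it also refuses unknown host keys). `verify_passwordless_ssh` and the `SSH_CMD` override are hypothetical, added only so the helper can be exercised without real hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm the current user reaches every host without
# a password prompt. BatchMode=yes turns a missing key into an error
# instead of an interactive prompt. SSH_CMD defaults to ssh.
verify_passwordless_ssh() {
  local user="$1" host failed=0
  shift
  for host in "$@"; do
    if "${SSH_CMD:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$user@$host" true; then
      echo "OK   $host"
    else
      echo "FAIL $host" >&2
      failed=$((failed + 1))
    fi
  done
  [ "$failed" -eq 0 ]
}

# Usage, with the real node addresses:
#   verify_passwordless_ssh dolphinscheduler <node1_ip> <node2_ip>
```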

4 Install pip and kazoo

yum -y install epel-release

yum -y install python-pip

yum -y install pip

pip --version

pip install kazoo

# Open a Python shell

# import kazoo; if no error is raised, the installation succeeded
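The interactive import test can also be run as a one-liner, which is handy when repeating it on every node. A minimal sketch: `check_python_module` is a hypothetical helper, and it assumes `python` is the interpreter that pip installed kazoo for (override it with the `PYTHON` variable if not).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given module imports cleanly.
# PYTHON defaults to "python", the interpreter used in the steps above.
check_python_module() {
  local module="$1"
  if "${PYTHON:-python}" -c "import ${module}" >/dev/null 2>&1; then
    echo "${module}: import OK"
  else
    echo "${module}: import FAILED" >&2
    return 1
  fi
}

# Usage: check_python_module kazoo
```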

5 Download the installation packages

- Baidu Netdisk: https://pan.baidu.com/s/1lWI6k-XgbUhmBSwX-SmThA Extraction code: c2ig

- GitHub releases: https://github.com/apache/incubator-dolphinscheduler/releases

# Create the installation directory

sudo mkdir /opt/DolphinScheduler && sudo chown -R dolphinscheduler:dolphinscheduler /opt/DolphinScheduler

6 Extract the DolphinScheduler packages (requires the deployment user's permissions)

# Switch to the deployment user and upload the tar packages

su dolphinscheduler

cd /opt/DolphinScheduler && mkdir dolphinScheduler-backend

tar -zxvf apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin.tar.gz -C dolphinScheduler-backend

cd /opt/DolphinScheduler && mkdir dolphinScheduler-ui

tar -zxvf apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-front-bin.tar.gz -C dolphinScheduler-ui

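7 Create the MySQL database and user

# Set the password of database user dolphinscheduler to dolphinscheduler, with no restriction on the client IP

# In a test environment, access is open to all hosts; in production you can restrict it to a subnet, e.g. '192.168.1.%'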

CREATE DATABASE dolphinscheduler DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

GRANT ALL PRIVILEGES ON dolphinscheduler.* TO 'dolphinscheduler'@'%' IDENTIFIED BY 'dolphinscheduler';

GRANT ALL PRIVILEGES ON dolphinscheduler.* TO 'dolphinscheduler'@'localhost' IDENTIFIED BY 'dolphinscheduler';

flush privileges;
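`GRANT ... IDENTIFIED BY`, as used above, works on MySQL 5.x but was removed in MySQL 8.0, where the account has to be created with `CREATE USER` first. The sketch below prints equivalent statements in the CREATE USER + GRANT form (accepted by MySQL 5.7 and 8.0) and takes the host patterns as arguments, so a production install can pass a subnet such as '192.168.1.%'. `ds_mysql_sql` is a hypothetical helper whose output is meant to be piped into the mysql client.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print SQL that creates the database and grants one
# user access from each given host pattern ('%', 'localhost', '192.168.1.%').
# Uses CREATE USER + GRANT, which MySQL 5.7 and 8.0 both accept.
# Note: quotes inside the password are not escaped.
ds_mysql_sql() {
  if [ "$#" -lt 4 ]; then
    echo "usage: ds_mysql_sql <db> <user> <password> <host>..." >&2
    return 2
  fi
  local db="$1" user="$2" pass="$3" host
  shift 3
  printf 'CREATE DATABASE IF NOT EXISTS %s DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;\n' "$db"
  for host in "$@"; do
    printf "CREATE USER IF NOT EXISTS '%s'@'%s' IDENTIFIED BY '%s';\n" "$user" "$host" "$pass"
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s';\n" "$db" "$user" "$host"
  done
  printf 'FLUSH PRIVILEGES;\n'
}

# Usage (mysql prompts for the root password):
#   ds_mysql_sql dolphinscheduler dolphinscheduler dolphinscheduler '%' localhost | mysql -uroot -p
```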

8 Create the tables and import the base data: first modify the following properties in application-dao.properties

vim /opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/conf/application-dao.properties

# Comment out the PostgreSQL connection and enable the MySQL connection

>>>>

spring.datasource.url=jdbc:mysql://10.xx.xx.xx:3306/dolphinscheduler?useUnicode=true&characterEncoding=UTF-8

spring.datasource.username=dolphinscheduler

spring.datasource.password=dolphinscheduler

# Run the script that creates the tables and imports the base data (1.2.0 does not ship the MySQL JDBC driver, so first copy mysql-connector-java-5.1.34.jar into lib)

cd /opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin

sh script/create-dolphinscheduler.sh
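Since the script fails without the MySQL driver, a quick pre-check avoids a wasted run. This sketch assumes the driver jar keeps the usual `mysql-connector-java-<version>.jar` name and takes the backend directory as its argument; `has_mysql_driver` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if <backend_dir>/lib contains a MySQL
# Connector/J jar named mysql-connector-java-*.jar.
has_mysql_driver() {
  local lib="$1/lib" jar
  for jar in "$lib"/mysql-connector-java-*.jar; do
    # If nothing matches, the glob stays literal and -f fails.
    if [ -f "$jar" ]; then
      echo "found $jar"
      return 0
    fi
  done
  echo "no mysql-connector-java-*.jar in $lib" >&2
  return 1
}

# Usage, from the backend directory:
#   has_mysql_driver . && sh script/create-dolphinscheduler.sh
```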

9 Modify the backend configuration files

9.1 dolphinscheduler_env.sh

vim /opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/conf/env/.dolphinscheduler_env.sh

>>>>

export HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop

export HADOOP_CONF_DIR=/etc/hadoop/conf

export SPARK_HOME1=/opt/cloudera/parcels/CDH/lib/spark

#export SPARK_HOME2=/opt/soft/spark2

export PYTHON_HOME=/usr/bin/python

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export HIVE_HOME=/opt/cloudera/parcels/CDH/lib/hive

#export FLINK_HOME=/opt/soft/flink

#export PATH=$HADOOP_HOME/bin:$SPARK_HOME1/bin:$SPARK_HOME2/bin:$PYTHON_HOME:$JAVA_HOME/bin:$HIVE_HOME/bin:$PATH

export PATH=$HADOOP_HOME/bin:$SPARK_HOME1/bin:$PYTHON_HOME:$JAVA_HOME/bin:$HIVE_HOME/bin:$PATH
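Every path exported above has to exist on each node, because install.sh's shellEnvPath points tasks at this file. The sketch below reports missing paths; note that in this file PYTHON_HOME is the python binary itself, so files are accepted as well as directories. `check_paths_exist` is a hypothetical helper, and the relative path in the usage comment assumes you are in the backend directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the given paths do not exist.
# An empty argument (an unset variable) is reported as missing too.
# Returns 1 if anything is missing.
check_paths_exist() {
  local p missing=0
  for p in "$@"; do
    if [ ! -e "$p" ]; then
      echo "missing: $p" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   . conf/env/.dolphinscheduler_env.sh
#   check_paths_exist "$HADOOP_HOME" "$HADOOP_CONF_DIR" "$SPARK_HOME1" \
#     "$PYTHON_HOME" "$JAVA_HOME" "$HIVE_HOME"
```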

9.2 install.sh

vim /opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/install.sh

>>>>

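# 1. MySQL configuration

# After installation, the following settings are located in $installPath/conf/quartz.properties

# MySQL address and port; database name; username; password (note: escape any special characters with a backslash)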

# for example postgresql or mysql ...

#dbtype="postgresql"

dbtype="mysql"

# db config

# db address and port

dbhost="10.xx.xx.xx:3306"

# db name

dbname="dolphinscheduler"

# db username

username="dolphinscheduler"

# db password

# Note: if there are special characters, escape them with a backslash

passowrd="dolphinscheduler"

# 2. Cluster architecture configuration

# 2.1 Cluster deployment environment

# After installation, the following settings are located in $installPath/conf/config/install_config.conf

# conf/config/install_config.conf config

# Note: the installation path is not the same as the current path (pwd)

# DolphinScheduler cluster installation directory (must not be the same as the current path)

installPath="/opt/DolphinScheduler/dolphinscheduler"

# deployment user

# Note: the deployment user needs sudo privileges and permission to operate HDFS. If HDFS is enabled, the root directory must be created manually

deployUser="dolphinscheduler"

# ZooKeeper cluster

zkQuorum="172.xx.xx.xx:2181,172.xx.xx.xx:2181,172.xx.xx.xx:2181"

# install hosts

# Note: list of machines to install DolphinScheduler on. For a single (pseudo-distributed) machine, list just that one, e.g. ips="xx.xx.xx.xx"

ips="xx.xx.xx.232,xx.xx.xx.233"

# 2.2 Services on each node (must be hostnames; for a single machine, list just one)

# After installation, the following settings are located in $installPath/conf/config/run_config.conf

# conf/config/run_config.conf config

# run master machine

# Note: hostnames of the machines that run the Master

masters="ds1.com,ds2.com"

# run worker machine

# Note: hostnames of the machines that run the Worker

workers="ds1.com,ds2.com"

# run alert machine

# Note: hostnames of the machines that run the Alert server (provides the alerting interfaces)

alertServer="ds1.com,ds2.com"

# run api machine

# Note: hostnames of the machines that run the API server (the API layer, which mainly handles requests from the frontend UI)

apiServers="ds1.com,ds2.com"

# 3. Alert configuration

# After installation, the following settings are located in $installPath/conf/alert.properties

# alert config

# mail protocol

mailProtocol="SMTP"

# mail server host

mailServerHost="smtp.qq.com"

# mail server port

mailServerPort="587"

# sender

mailSender="xxx@qq.com"

# user

mailUser="xxx@qq.com"

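# sender password (when using port 25 on an internal network, this is the mailbox password; otherwise it is the authorization code generated when the SMTP service is enabled)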

mailPassword="xxxxx"

# TLS mail protocol support

starttlsEnable="true"

# host trusted for SSL/TLS connections (authentication)

sslTrust="smtp.qq.com"

# SSL mail protocol support

# Note: SSL is enabled by default; only one of TLS and SSL can be true

sslEnable="false"

# path for downloaded Excel files

xlsFilePath="/opt/DolphinScheduler/xls"

# WeChat Work (Enterprise WeChat) corp ID

enterpriseWechatCorpId="xxxxxxxxxx"

# WeChat Work application Secret

enterpriseWechatSecret="xxxxxxxxxx"

# WeChat Work application AgentId

enterpriseWechatAgentId="xxxxxxxxxx"

# WeChat Work users; separate multiple users with commas

enterpriseWechatUsers="xxxxx,xxxxx"

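# 4. Enable the self-start monitoring script

# Whether to run the monitoring script (it watches master and worker status and restarts them automatically if they go offline)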

#monitorServerState="false"

monitorServerState="true"

# 5. Resource center configuration

# After installation, the following settings are located in $installPath/conf/common/*

# 5.1 Hadoop configuration

# resource center upload storage type: HDFS, S3, NONE

resUploadStartupType="HDFS"

# If resUploadStartupType is HDFS, set defaultFS to the NameNode address. HA is supported: put core-site.xml and hdfs-site.xml into the conf directory

# If S3, set the S3 address, for example s3a://dolphinscheduler

# Note: for S3, be sure to create the root directory /dolphinscheduler

defaultFS="hdfs://xx.xx.xx.xx:8020"

# if S3 is configured, the following settings are required

#s3Endpoint="http://192.168.199.91:9010"

#s3AccessKey="A3DXS30FO22544RE"

#s3SecretKey="OloCLq3n+8+sdPHUhJ21XrSxTC+JK"

# 5.2 YARN configuration

# ResourceManager HA: list the ResourceManager IPs here. With a single ResourceManager, set yarnHaIps="" and fill in singleYarnIp below

#yarnHaIps=""

yarnHaIps="xx.xx.xx.xx,xx.xx.xx.xx"

# With a single ResourceManager, configure only its host here; with ResourceManager HA, leave this at the default

#singleYarnIp="xx.xx.xx.xx"

# 5.3 HDFS root path and permissions

# HDFS root path; its owner must be the deployment user

# Versions before 1.1.0 do not create the HDFS root directory automatically; create it yourself

hdfsPath="/dolphinscheduler"

# user with permission to create directories under the HDFS root path (configuring a superuser here is not recommended; create the root directory yourself and adjust its permissions)

# Note: if Kerberos is enabled, hdfsRootUser="" can be used directly

hdfsRootUser="dolphinscheduler"

# 6. Common configuration (defaults)

# After installation, the following settings are located in $installPath/conf/common/common.properties

# program path

programPath="/tmp/dolphinscheduler"

# download path

downloadPath="/tmp/dolphinscheduler/download"

# task execution path

execPath="/tmp/dolphinscheduler/exec"

# path of the shell environment variable file

shellEnvPath="$installPath/conf/env/.dolphinscheduler_env.sh"

# resource file suffixes

resSuffixs="txt,log,sh,conf,cfg,py,java,sql,hql,xml"

# development mode: if true, the wrapped shell scripts can be inspected under execPath; if false, they are deleted as soon as execution finishes

devState="true"

# Kerberos configuration

# whether Kerberos is enabled

kerberosStartUp="false"

# path to the KDC krb5 configuration file

krb5ConfPath="$installPath/conf/krb5.conf"

# keytab user name

keytabUserName="hdfs-mycluster@ESZ.COM"

# user keytab path

keytabPath="$installPath/conf/hdfs.headless.keytab"

# 7. ZooKeeper configuration (defaults)

# After installation, the following settings are located in $installPath/conf/zookeeper.properties

# zk root directory

zkRoot="/dolphinscheduler"

# zk directory that records dead servers

zkDeadServers="$zkRoot/dead-servers"

# masters directory

zkMasters="$zkRoot/masters"

# workers directory

zkWorkers="$zkRoot/workers"

# zk master distributed lock

mastersLock="$zkRoot/lock/masters"

# zk worker distributed lock

workersLock="$zkRoot/lock/workers"

# zk master failover distributed lock

mastersFailover="$zkRoot/lock/failover/masters"

# zk worker failover distributed lock

workersFailover="$zkRoot/lock/failover/workers"

# zk master startup failover distributed lock

mastersStartupFailover="$zkRoot/lock/failover/startup-masters"

# zk session timeout

zkSessionTimeout="300"

# zk connection timeout

zkConnectionTimeout="300"

# zk retry interval

zkRetrySleep="100"

# zk maximum number of retries

zkRetryMaxtime="5"

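# 8. Master configuration (defaults)

# After installation, the following settings are located in $installPath/conf/master.properties

# maximum number of master execution threads (maximum parallelism of process instances)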

masterExecThreads="100"

# maximum number of master task execution threads (maximum parallelism within each process instance)

masterExecTaskNum="20"

# master heartbeat interval

masterHeartbeatInterval="10"

# master task commit retry count

masterTaskCommitRetryTimes="5"

# master task commit retry interval

masterTaskCommitInterval="100"

# master maximum average CPU load, used to decide whether the master can still take work

masterMaxCpuLoadAvg="10"

# master reserved memory, used to decide whether the master can still take work

masterReservedMemory="1"

# 9. Worker configuration (defaults)

# After installation, the following settings are located in $installPath/conf/worker.properties

# worker execution threads

workerExecThreads="100"

# worker heartbeat interval

workerHeartbeatInterval="10"

# number of tasks a worker fetches at a time

workerFetchTaskNum="3"

# worker maximum average CPU load, used to decide whether the worker can still take work; keep the system default, which is twice the number of CPU cores

#workerMaxCupLoadAvg="10"

# worker reserved memory, used to decide whether the worker can still take work

workerReservedMemory="1"

# 10. API configuration (defaults)

# After installation, the following settings are located in $installPath/conf/application.properties

# API server port

apiServerPort="12345"

# API session timeout

apiServerSessionTimeout="7200"

# API context path

apiServerContextPath="/dolphinscheduler/"

# Spring maximum file size

springMaxFileSize="1024MB"

# Spring maximum request size

springMaxRequestSize="1024MB"

# API maximum POST request size

apiMaxHttpPostSize="5000000"
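Two of the install.sh values above are easy to get wrong and cheap to check before running the installer: the ZooKeeper quorum and the mail security flags. The sketch below is a hypothetical pre-flight helper set, not part of install.sh. `check_zk_quorum` sends ZooKeeper's `ruok` four-letter command with `nc` (a healthy server replies `imok`; on ZooKeeper 3.5+ the command must be allowed via `4lw.commands.whitelist`), and `check_mail_security` enforces the rule stated above that starttlsEnable and sslEnable are not both true.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the install.sh values above.

# Split a zkQuorum string ("h1:2181,h2:2181") into "host port" lines;
# an entry without a port falls back to 2181.
zk_quorum_hosts() {
  local entry IFS=','
  for entry in $1; do
    case "$entry" in
      *:*) echo "${entry%:*} ${entry##*:}" ;;
      *)   echo "$entry 2181" ;;
    esac
  done
}

# Ask every quorum member "ruok"; a healthy server answers "imok".
# Requires nc on the PATH. Returns 1 if any member does not answer.
check_zk_quorum() {
  zk_quorum_hosts "$1" | (
    failed=0
    while read -r host port; do
      reply="$(printf 'ruok' | nc -w 3 "$host" "$port" 2>/dev/null)"
      if [ "$reply" = "imok" ]; then
        echo "OK   $host:$port"
      else
        echo "FAIL $host:$port" >&2
        failed=1
      fi
    done
    exit "$failed"
  )
}

# At most one of starttlsEnable and sslEnable may be "true".
check_mail_security() {
  if [ "$1" = "true" ] && [ "$2" = "true" ]; then
    echo "starttlsEnable and sslEnable cannot both be true" >&2
    return 1
  fi
  return 0
}

# Usage, with zkQuorum, starttlsEnable and sslEnable set in the shell
# to the same values as in install.sh:
#   check_zk_quorum "$zkQuorum"
#   check_mail_security "$starttlsEnable" "$sslEnable"
```

STARTTLS is normally used on port 587 and implicit SSL on port 465, which is consistent with mailServerPort="587" and starttlsEnable="true" above.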

9.3 Add the Hadoop configuration files

# If resUploadStartupType in install.sh is HDFS and HA is configured, copy the Hadoop configuration files into the conf directory

cp /etc/hadoop/conf.cloudera.yarn/hdfs-site.xml /opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/conf/

cp /etc/hadoop/conf.cloudera.yarn/core-site.xml /opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/conf/

# For an HA deployment, every machine needs these files

scp /etc/hadoop/conf.cloudera.yarn/hdfs-site.xml dolphinscheduler@xx.xx.xx.232:/opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/conf/

scp /etc/hadoop/conf.cloudera.yarn/core-site.xml dolphinscheduler@xx.xx.xx.232:/opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/conf/

9.4 Create the HDFS root directory

# To remove an existing directory first: sudo -u hdfs hadoop fs -rm -r /dolphinscheduler

sudo -u hdfs hadoop fs -mkdir /dolphinscheduler

sudo -u hdfs hadoop fs -chown dolphinscheduler:dolphinscheduler /dolphinscheduler

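9.5 One-click deployment (run it twice, because some files have not been created yet during the first run)

# If the log reports that java cannot be found, set $JAVA_HOME in this script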

vim /opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/bin/dolphinscheduler-daemon.sh

# Enter the script directory and run the installer

cd /opt/DolphinScheduler/dolphinScheduler-backend/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-backend-bin/

sh install.sh

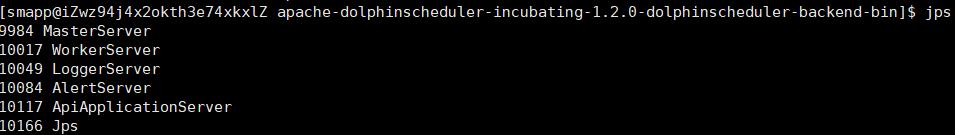

9.6 Check the Java processes with jps
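Running `jps` on each node should list the DolphinScheduler services. The sketch below reads jps output and reports which services are missing. The five default process names are an assumption based on the 1.2 server main classes (MasterServer, WorkerServer, LoggerServer, ApiApplicationServer, AlertServer); since this guide runs every role on both nodes, all five are expected by default, and other names can be passed as arguments. `check_ds_processes` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output ("<pid> <name>" per line) on stdin
# and report which expected process names are present or missing.
# With no arguments, the five assumed DolphinScheduler 1.2 services are checked.
check_ds_processes() {
  local jps_out name missing=0
  [ "$#" -gt 0 ] || set -- MasterServer WorkerServer LoggerServer ApiApplicationServer AlertServer
  jps_out="$(cat)"
  for name in "$@"; do
    # awk exits 0 only if some line's second field equals the name
    if printf '%s\n' "$jps_out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "running: $name"
    else
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: jps | check_ds_processes
```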

10 Frontend deployment

10.1 Install nginx

yum -y install nginx

sudo vim /etc/nginx/nginx.conf

>>>> Replace the server block inside http with:

upstream dolphinsch {

server xx.xx.xx.232:12345;

server xx.xx.xx.233:12345;

}

server {

listen 8899; # access port

server_name localhost;

#charset koi8-r;

#access_log /var/log/nginx/host.access.log main;

location / {

root /opt/DolphinScheduler/dolphinScheduler-ui/apache-dolphinscheduler-incubating-1.2.0-dolphinscheduler-front-bin/dist; # static files directory

index index.html index.htm;

}

location /dolphinscheduler {

proxy_pass http://dolphinsch; # API address (adjust as needed)

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header x_real_ipP $remote_addr;

proxy_set_header remote_addr $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_http_version 1.1;

proxy_connect_timeout 4s;

proxy_read_timeout 30s;

proxy_send_timeout 12s;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

>>>> Add inside http:

client_max_body_size 1024m; # upload file size limit

# Restart the nginx service

systemctl restart nginx
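Restarting nginx with a broken configuration leaves it stopped, so it is safer to validate first with `nginx -t`. The sketch below does that and then probes the UI through the 8899 listener with curl. Both helpers are hypothetical, and `<nginx_host>` is a placeholder for your nginx machine.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate the nginx configuration, then restart.
# nginx -t fails on syntax errors, so a typo never reaches the live server.
restart_nginx_safely() {
  sudo nginx -t && sudo systemctl restart nginx
}

# Hypothetical helper: succeed if the URL answers with HTTP 200.
# curl prints "000" as the status code when the connection fails.
http_ok() {
  local code
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1")"
  [ "$code" = "200" ]
}

# Usage:
#   restart_nginx_safely && http_ok "http://<nginx_host>:8899/" && echo "UI reachable"
```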

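10.2 Access

Open the UI through nginx on port 8899 and log in with the default account:

Username: admin

Password: dolphinscheduler123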