Redis Cluster Deployment

Posted by 认真对待世界的小白


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers Redis cluster deployment, and we hope you find it useful.

Setting Up a Redis Cluster on Windows

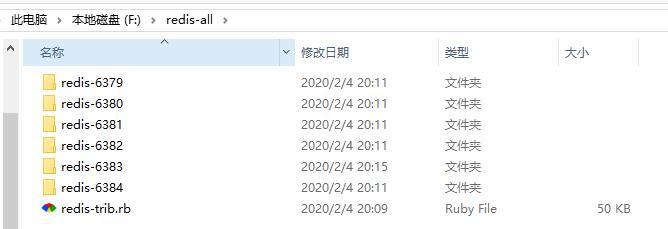

Step 1: Create the cluster node directories.

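(A cluster needs at least three master nodes to operate normally. When you first try out cluster mode, however, six nodes are strongly recommended: three masters and three replicas, with one replica per master. If a master crashes, its replica is promoted to master and takes over the crashed node's work. When the crashed master recovers, it rejoins the cluster as a replica.)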
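
The six-node layout described above can be sketched in Ruby. This snippet is only an illustration and rests on several assumptions. It uses the ports 6379 to 6384 from this article and treats the first three as masters. It splits the 16384 hash slots among those masters with plain integer division, then pairs each remaining port with one master. When the cluster is actually created, redis-trib.rb chooses the slot boundaries and the master/replica pairing, and its choices may differ from this sketch.

```ruby
# Sketch: plan a 3-master / 3-replica layout over the 16384 hash slots.
# Assumptions: ports 6379..6384, the first three ports are masters, and
# slots are split with integer arithmetic (redis-trib.rb may split differently).
HASH_SLOTS = 16384

def plan_cluster(ports, masters_count)
  masters  = ports.first(masters_count)
  replicas = ports.drop(masters_count)

  # Each master owns one contiguous range of slots.
  plan = masters.each_with_index.map do |port, i|
    first = i * HASH_SLOTS / masters_count
    last  = (i + 1) * HASH_SLOTS / masters_count - 1
    { port: port, role: :master, slots: first..last }
  end

  # Pair the remaining ports with masters round-robin.
  replicas.each_with_index do |port, i|
    plan << { port: port, role: :replica, master: masters[i % masters_count] }
  end
  plan
end

plan_cluster((6379..6384).to_a, 3).each do |node|
  if node[:role] == :master
    puts "#{node[:port]} master slots #{node[:slots].first}-#{node[:slots].last}"
  else
    puts "#{node[:port]} replica of #{node[:master]}"
  end
end
```

With these assumptions, the masters get 0-5460, 5461-10921 and 10922-16383, and 6382, 6383 and 6384 replicate 6379, 6380 and 6381 respectively.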

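Copy the directory you extracted from the Redis download earlier, once per node, and rename each copy. For example, you can name each copy after the port its Redis instance will use, as follows: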

The folders 6379, 6380, 6381, 6382, 6383 and 6384 correspond to the ports on which Redis will later be started for each node.
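
The copy-and-rename step can also be scripted. This Ruby sketch uses FileUtils.cp_r to copy one extracted Redis directory into a separate folder per port, and skips any folder that already exists. The helper name make_node_dirs is hypothetical. The demo paths are also hypothetical: a temporary directory containing a stand-in redis-src folder. Point the script at your real download directory instead.

```ruby
require "fileutils"
require "tmpdir"

# Sketch: copy one extracted Redis directory into one folder per port.
# source_dir and target_root are placeholder paths; adjust them.
def make_node_dirs(source_dir, target_root, ports)
  ports.map do |port|
    dest = File.join(target_root, port.to_s)
    # cp_r creates dest as a copy of source_dir when dest does not exist yet.
    FileUtils.cp_r(source_dir, dest) unless Dir.exist?(dest)
    dest
  end
end

# Demo in a throwaway directory with a fake "extracted" Redis folder.
Dir.mktmpdir do |root|
  src = File.join(root, "redis-src")
  FileUtils.mkdir_p(src)
  File.write(File.join(src, "redis.windows.conf"), "port 6379\n")
  dirs = make_node_dirs(src, root, 6379..6384)
  puts dirs.map { |d| File.basename(d) }.join(", ")
end
```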

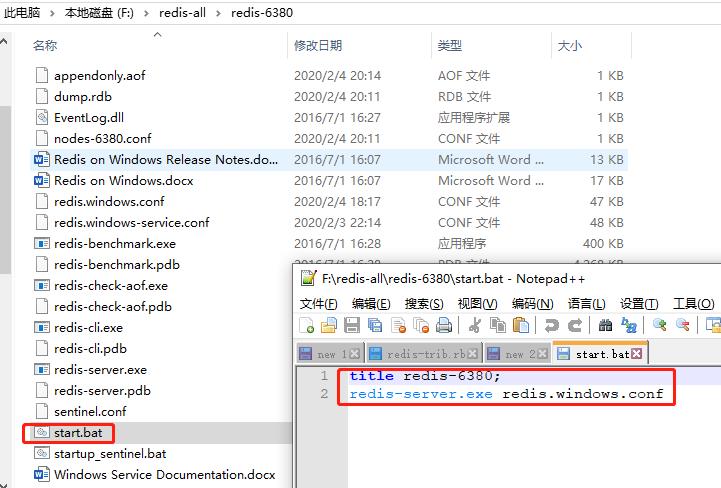

In each node directory, create a new file with the following content (using the 6380 folder as an example):

title redis-6380

redis-server.exe redis.windows.conf

Save the file as start.bat. From then on, run this script whenever you want to start the node.
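
Writing start.bat six times by hand is tedious, so the Ruby sketch below generates one per port. The two commands follow the example above, with the window title set per port. The script assumes the one-folder-per-port layout from step 1, and the helper names are hypothetical. The file is written in binary mode with CRLF line endings, so it looks like an ordinary Windows batch file wherever the script runs.

```ruby
require "fileutils"
require "tmpdir"

# Sketch: contents of start.bat for one node (CRLF endings for cmd.exe).
def start_bat(port)
  ["title redis-#{port}",
   "redis-server.exe redis.windows.conf"].join("\r\n") + "\r\n"
end

# Write start.bat into root/port/ for every port (hypothetical layout).
def write_start_scripts(root, ports)
  ports.each do |port|
    dir = File.join(root, port.to_s)
    FileUtils.mkdir_p(dir)
    # binwrite avoids newline translation, so CRLF is written exactly once.
    File.binwrite(File.join(dir, "start.bat"), start_bat(port))
  end
end

Dir.mktmpdir do |root|
  write_start_scripts(root, 6379..6384)
  print File.binread(File.join(root, "6380", "start.bat"))
end
```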

Next, open redis.windows.conf in each folder and change the following settings (using redis.windows.conf in the 6380 folder as an example):

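port 6380 //set this to the port number that matches the current folder name

appendonly yes //whether to log every write operation (AOF persistence). By default Redis writes data to disk asynchronously, so without AOF a power failure can lose the writes made during a recent window of time. yes selects AOF mode: write operations are recorded in a log file

cluster-enabled yes //enable cluster mode

cluster-config-file nodes-6380.conf //file where the node saves its cluster configuration; Redis creates and updates it automatically (including the port number in the name is recommended)

cluster-node-timeout 15000 //cluster node timeout in milliseconds; a node that does not respond within this time is considered down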
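
Editing six copies of redis.windows.conf by hand is error-prone. The Ruby sketch below takes the text of a config file and sets the five directives listed above for a given port. An existing line for a directive is rewritten, later duplicates of it are dropped, and missing directives are appended at the end. Commented-out lines are left alone. The helper names and the duplicate-handling rule are assumptions of this sketch, not behavior of Redis itself. Directives are written without leading spaces or inline annotations.

```ruby
# Sketch: the cluster-related settings for one node (values from the article).
def cluster_settings(port)
  {
    "port"                 => port.to_s,
    "appendonly"           => "yes",
    "cluster-enabled"      => "yes",
    "cluster-config-file"  => "nodes-#{port}.conf",
    "cluster-node-timeout" => "15000"
  }
end

# Rewrite or append those settings in the given config text.
def patch_config(text, port)
  settings = cluster_settings(port)
  seen = {}
  lines = text.lines.map(&:chomp).map do |line|
    key = line.strip.split(/\s+/, 2).first&.downcase  # "#" for comment lines
    if key && settings.key?(key)
      next nil if seen[key]          # drop duplicate occurrences
      seen[key] = true
      "#{key} #{settings[key]}"      # no leading whitespace, no annotation
    else
      line
    end
  end.compact
  settings.each { |k, v| lines << "#{k} #{v}" unless seen[k] }
  lines.join("\n") + "\n"
end

sample = "bind 127.0.0.1\nport 6379\nappendonly no\n# cluster-enabled yes\n"
puts patch_config(sample, 6380)
```

For the sample input, port and appendonly are rewritten in place, the commented line is kept, and the three cluster-* directives are appended.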

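Note: when editing these settings, do not put any spaces before a directive, otherwise Redis reports an error at startup (see below). Also, the // annotations above are explanations only. Redis config files do not support inline comments, so copy only the directive and its value. A comment must go on its own line starting with #.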
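
A quick check before starting a node can catch the two mistakes mentioned in this note: a directive preceded by whitespace, and an explanatory // annotation accidentally copied into the file. The Ruby sketch below reports both. It is a simple heuristic written for illustration, not a check that Redis ships. It flags leading whitespace only because the author reports that it caused startup errors.

```ruby
# Sketch: flag config lines that hit the pitfalls described above.
# Heuristic only; blank lines and # comment lines are skipped.
def lint_config(text)
  problems = []
  text.each_line.with_index(1) do |line, lineno|
    body = line.chomp
    next if body.strip.empty? || body.strip.start_with?("#")
    problems << "line #{lineno}: leading whitespace" if body =~ /\A\s/
    problems << "line #{lineno}: inline // annotation" if body.include?("//")
  end
  problems
end

conf = "port 6380 //same as folder name\n cluster-enabled yes\nappendonly yes\n"
puts lint_config(conf)
```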

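Apply the corresponding settings to the other node folders (6379 to 6384) and create their startup scripts in the same way (details omitted).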

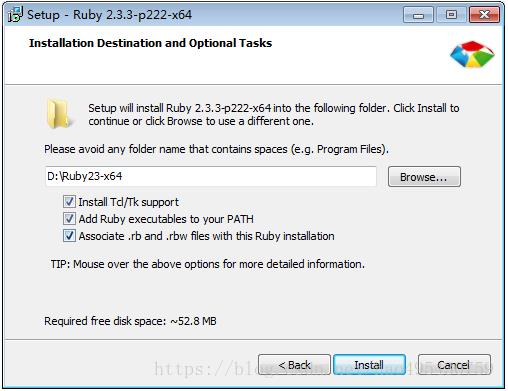

Step 2: Download and install Ruby

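Once the download finishes, run the installer and click Next at each step until installation is complete (check all three options during installation).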

Then configure Ruby:

The cluster creation script: redis-trib.rb

You can open https://raw.githubusercontent.com/MSOpenTech/redis/3.0/src/redis-trib.rb, copy its contents into a local file, and save that file as redis-trib.rb:

    #!/usr/bin/env ruby

    # TODO (temporary here, we'll move this into the Github issues once
    # redis-trib initial implementation is completed).
    #
    # - Make sure that if the rehashing fails in the middle redis-trib will try
    #   to recover.
    # - When redis-trib performs a cluster check, if it detects a slot move in
    #   progress it should prompt the user to continue the move from where it
    #   stopped.
    # - Gracefully handle Ctrl+C in move_slot to prompt the user if really stop
    #   while rehashing, and performing the best cleanup possible if the user
    #   forces the quit.
    # - When doing "fix" set a global Fix to true, and prompt the user to
    #   fix the problem if automatically fixable every time there is something
    #   to fix. For instance:
    #   1) If there is a node that pretend to receive a slot, or to migrate a
    #      slot, but has no entries in that slot, fix it.
    #   2) If there is a node having keys in slots that are not owned by it
    #      fix this condition moving the entries in the same node.
    #   3) Perform more possibly slow tests about the state of the cluster.
    #   4) When aborted slot migration is detected, fix it.

    require 'rubygems'
    require 'redis'

    ClusterHashSlots = 16384

    def xputs(s)
      case s[0..2]
      when ">>>"
        color="29;1"
      when "[ER"
        color="31;1"
      when "[OK"
        color="32"
      when "[FA","***"
        color="33"
      else
        color=nil
      end

      color = nil if ENV['TERM'] != "xterm"

      print "\033[#{color}m" if color
      print s
      print "\033[0m" if color
      print "\n"
    end

    class ClusterNode
      def initialize(addr)
        s = addr.split(":")
        if s.length < 2
          puts "Invalid IP or Port (given as #{addr}) - use IP:Port format"
          exit 1
        end
        port = s.pop # removes port from split array
        ip = s.join(":") # if s.length > 1 here, it's IPv6, so restore address
        @r = nil
        @info = {}
        @info[:host] = ip
        @info[:port] = port
        @info[:slots] = {}
        @info[:migrating] = {}
        @info[:importing] = {}
        @info[:replicate] = false
        @dirty = false # True if we need to flush slots info into node.
        @friends = []
      end

      def friends
        @friends
      end

      def slots
        @info[:slots]
      end

      def has_flag?(flag)
        @info[:flags].index(flag)
      end

      def to_s
        "#{@info[:host]}:#{@info[:port]}"
      end

      def connect(o={})
        return if @r
        print "Connecting to node #{self}: "
        STDOUT.flush
        begin
          @r = Redis.new(:host => @info[:host], :port => @info[:port], :timeout => 60)
          @r.ping
        rescue
          xputs "[ERR] Sorry, can't connect to node #{self}"
          exit 1 if o[:abort]
          @r = nil
        end
        xputs "OK"
      end

      def assert_cluster
        info = @r.info
        if !info["cluster_enabled"] || info["cluster_enabled"].to_i == 0
          xputs "[ERR] Node #{self} is not configured as a cluster node."
          exit 1
        end
      end

      def assert_empty
        if !(@r.cluster("info").split("\r\n").index("cluster_known_nodes:1")) || (@r.info['db0'])
          xputs "[ERR] Node #{self} is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0."
          exit 1
        end
      end

      def load_info(o={})
        self.connect
        nodes = @r.cluster("nodes").split("\n")
        nodes.each{|n|
          # name addr flags role ping_sent ping_recv link_status slots
          split = n.split
          name,addr,flags,master_id,ping_sent,ping_recv,config_epoch,link_status = split[0..6]
          slots = split[8..-1]
          info = {
            :name => name,
            :addr => addr,
            :flags => flags.split(","),
            :replicate => master_id,
            :ping_sent => ping_sent.to_i,
            :ping_recv => ping_recv.to_i,
            :link_status => link_status
          }
          info[:replicate] = false if master_id == "-"

          if info[:flags].index("myself")
            @info = @info.merge(info)
            @info[:slots] = {}
            slots.each{|s|
              if s[0..0] == '['
                if s.index("->-") # Migrating
                  slot,dst = s[1..-1].split("->-")
                  @info[:migrating][slot.to_i] = dst
                elsif s.index("-<-") # Importing
                  slot,src = s[1..-1].split("-<-")
                  @info[:importing][slot.to_i] = src
                end
              elsif s.index("-")
                start,stop = s.split("-")
                self.add_slots((start.to_i)..(stop.to_i))
              else
                self.add_slots((s.to_i)..(s.to_i))
              end
            } if slots
            @dirty = false
            @r.cluster("info").split("\n").each{|e|
              k,v=e.split(":")
              k = k.to_sym
              v.chop!
              if k != :cluster_state
                @info[k] = v.to_i
              else
                @info[k] = v
              end
            }
          elsif o[:getfriends]
            @friends << info
          end
        }
      end

      def add_slots(slots)
        slots.each{|s|
          @info[:slots][s] = :new
        }
        @dirty = true
      end

      def set_as_replica(node_id)
        @info[:replicate] = node_id
        @dirty = true
      end

      def flush_node_config
        return if !@dirty
        if @info[:replicate]
          begin
            @r.cluster("replicate",@info[:replicate])
          rescue
            # If the cluster did not already joined it is possible that
            # the slave does not know the master node yet. So on errors
            # we return ASAP leaving the dirty flag set, to flush the
            # config later.
            return
          end
        else
          new = []
          @info[:slots].each{|s,val|
            if val == :new
              new << s
              @info[:slots][s] = true
            end
          }
          @r.cluster("addslots",*new)
        end
        @dirty = false
      end

      def info_string
        # We want to display the hash slots assigned to this node
        # as ranges, like in: "1-5,8-9,20-25,30"
        #
        # Note: this could be easily written without side effects,
        # we use 'slots' just to split the computation into steps.

        # First step: we want an increasing array of integers
        # for instance: [1,2,3,4,5,8,9,20,21,22,23,24,25,30]
        slots = @info[:slots].keys.sort

        # As we want to aggregate adjacent slots we convert all the
        # slot integers into ranges (with just one element)
        # So we have something like [1..1,2..2, ... and so forth.
        slots.map!{|x| x..x}

        # Finally we group ranges with adjacent elements.
        slots = slots.reduce([]) {|a,b|
          if !a.empty? && b.first == (a[-1].last)+1
            a[0..-2] + [(a[-1].first)..(b.last)]
          else
            a + [b]
          end
        }

        # Now our task is easy, we just convert ranges with just one
        # element into a number, and a real range into a start-end format.
        # Finally we join the array using the comma as separator.
        slots = slots.map{|x|
          x.count == 1 ? x.first.to_s : "#{x.first}-#{x.last}"
        }.join(",")

        role = self.has_flag?("master") ? "M" : "S"

        if self.info[:replicate] and @dirty
          is = "S: #{self.info[:name]} #{self.to_s}"
        else
          is = "#{role}: #{self.info[:name]} #{self.to_s}\n"+
          " slots:#{slots} (#{self.slots.length} slots) "+
          "#{(self.info[:flags]-["myself"]).join(",")}"
        end
        if self.info[:replicate]
          is += "\n replicates #{info[:replicate]}"
        elsif self.has_flag?("master") && self.info[:replicas]
          is += "\n #{info[:replicas].length} additional replica(s)"
        end
        is
      end

      # Return a single string representing nodes and associated slots.
      # TODO: remove slaves from config when slaves will be handled
      # by Redis Cluster.
      def get_config_signature
        config = []
        @r.cluster("nodes").each_line{|l|
          s = l.split
          slots = s[8..-1].select {|x| x[0..0] != "["}
          next if slots.length == 0
          config << s[0]+":"+(slots.sort.join(","))
        }
        config.sort.join("|")
      end

      def info
        @info
      end

      def is_dirty?
        @dirty
      end

      def r
        @r
      end
    end

    class RedisTrib
      def initialize
        @nodes = []
        @fix = false
        @errors = []
      end

      def check_arity(req_args, num_args)
        if ((req_args > 0 and num_args != req_args) || (req_args < 0 and num_args < req_args.abs))
          xputs "[ERR] Wrong number of arguments for specified sub command"
          exit 1
        end
      end

      def add_node(node)
        @nodes << node
      end

      def cluster_error(msg)
        @errors << msg
        xputs msg
      end

      def get_node_by_name(name)
        @nodes.each{|n|
          return n if n.info[:name] == name.downcase
        }
        return nil
      end

      # This function returns the master that has the least number of replicas
      # in the cluster. If there are multiple masters with the same smaller
      # number of replicas, one at random is returned.
      def get_master_with_least_replicas
        masters = @nodes.select{|n| n.has_flag? "master"}
        sorted = masters.sort{|a,b|
          a.info[:replicas].length <=> b.info[:replicas].length
        }
        sorted[0]
      end

      def check_cluster
        xputs ">>> Performing Cluster Check (using node #{@nodes[0]})"
        show_nodes
        check_config_consistency
        check_open_slots
        check_slots_coverage
      end

      # Merge slots of every known node. If the resulting slots are equal
      # to ClusterHashSlots, then all slots are served.
      def covered_slots
        slots = {}
        @nodes.each{|n|
          slots = slots.merge(n.slots)
        }
        slots
      end

      def check_slots_coverage
        xputs ">>> Check slots coverage..."
        slots = covered_slots
        if slots.length == ClusterHashSlots
          xputs "[OK] All #{ClusterHashSlots} slots covered."
        else
          cluster_error \
            "[ERR] Not all #{ClusterHashSlots} slots are covered by nodes."
          fix_slots_coverage if @fix
        end
      end

      def check_open_slots
        xputs ">>> Check for open slots..."
        open_slots = []
        @nodes.each{|n|
          if n.info[:migrating].size > 0
            cluster_error \
              "[WARNING] Node #{n} has slots in migrating state (#{n.info[:migrating].keys.join(",")})."
            open_slots += n.info[:migrating].keys
          elsif n.info[:importing].size > 0
            cluster_error \
              "[WARNING] Node #{n} has slots in importing state (#{n.info[:importing].keys.join(",")})."
            open_slots += n.info[:importing].keys
          end
        }
        open_slots.uniq!
        if open_slots.length > 0
          xputs "[WARNING] The following slots are open: #{open_slots.join(",")}"
        end
        if @fix
          open_slots.each{|slot| fix_open_slot slot}
        end
      end

      def nodes_with_keys_in_slot(slot)
        nodes = []
        @nodes.each{|n|
          nodes << n if n.r.cluster("getkeysinslot",slot,1).length > 0
        }
        nodes
      end

      def fix_slots_coverage
        not_covered = (0...ClusterHashSlots).to_a - covered_slots.keys
        xputs ">>> Fixing slots coverage..."
        xputs "List of not covered slots: " + not_covered.join(",")

        # For every slot, take action depending on the actual condition:
        # 1) No node has keys for this slot.
        # 2) A single node has keys for this slot.
        # 3) Multiple nodes have keys for this slot.
        slots = {}
        not_covered.each{|slot|
          nodes = nodes_with_keys_in_slot(slot)
          slots[slot] = nodes
          xputs "Slot #{slot} has keys in #{nodes.length} nodes: #{nodes.join}"
        }

        none = slots.select {|k,v| v.length == 0}
        single = slots.select {|k,v| v.length == 1}
        multi = slots.select {|k,v| v.length > 1}

        # Handle case "1": keys in no node.
        if none.length > 0
          xputs "The folowing uncovered slots have no keys across the cluster:"
          xputs none.keys.join(",")
          yes_or_die "Fix these slots by covering with a random node?"
          none.each{|slot,nodes|
            node = @nodes.sample
            xputs ">>> Covering slot #{slot} with #{node}"
            node.r.cluster("addslots",slot)
          }
        end

        # Handle case "2": keys only in one node.
        if single.length > 0
          xputs "The folowing uncovered slots have keys in just one node:"
          puts single.keys.join(",")
          yes_or_die "Fix these slots by covering with those nodes?"
          single.each{|slot,nodes|
            xputs ">>> Covering slot #{slot} with #{nodes[0]}"
            nodes[0].r.cluster("addslots",slot)
          }
        end

        # Handle case "3": keys in multiple nodes.
        if multi.length > 0
          xputs "The folowing uncovered slots have keys in multiple nodes:"
          xputs multi.keys.join(",")
          yes_or_die "Fix these slots by moving keys into a single node?"
          multi.each{|slot,nodes|
            xputs ">>> Covering slot #{slot} moving keys to #{nodes[0]}"
            # TODO
            # 1) Set all nodes as "MIGRATING" for this slot, so that we
            # can access keys in the hash slot using ASKING.
            # 2) Move everything to node[0]
            # 3) Clear MIGRATING from nodes, and ADDSLOTS the slot to
            # node[0].
            raise "TODO: Work in progress"
          }
        end
      end

      # Return the owner of the specified slot
      def get_slot_owner(slot)
        @nodes.each{|n|
          n.slots.each{|s,_|
            return n if s == slot
          }
        }
        nil
      end

      # Slot 'slot' was found to be in importing or migrating state in one or
      # more nodes. This function fixes this condition by migrating keys where
      # it seems more sensible.
      def fix_open_slot(slot)
        puts ">>> Fixing open slot #{slot}"

        # Try to obtain the current slot owner, according to the current
        # nodes configuration.
        owner = get_slot_owner(slot)

        # If there is no slot owner, set as owner the slot with the biggest
        # number of keys, among the set of migrating / importing nodes.
        if !owner
          xputs "*** Fix me, some work to do here."
          # Select owner...
          # Use ADDSLOTS to assign the slot.
          exit 1
        end

        migrating = []
        importing = []
        @nodes.each{|n|
          next if n.has_flag? "slave"
          if n.info[:migrating][slot]
            migrating << n
          elsif n.info[:importing][slot]
            importing << n
          elsif n.r.cluster("countkeysinslot",slot) > 0 && n != owner
            xputs "*** Found keys about slot #{slot} in node #{n}!"
            importing << n
          end
        }
        puts "Set as migrating in: #{migrating.join(",")}"
        puts "Set as importing in: #{importing.join(",")}"

        # Case 1: The slot is in migrating state in one slot, and in
        # importing state in 1 slot. That's trivial to address.
        if migrating.length == 1 && importing.length == 1
          move_slot(migrating[0],importing[0],slot,:verbose=>true,:fix=>true)
        # Case 2: There are multiple nodes that claim the slot as importing,
        # they probably got keys about the slot after a restart so opened
        # the slot. In this case we just move all the keys to the owner
        # according to the configuration.
        elsif migrating.length == 0 && importing.length > 0
          xputs ">>> Moving all the #{slot} slot keys to its owner #{owner}"
          importing.each {|node|
            next if node == owner
            move_slot(node,owner,slot,:verbose=>true,:fix=>true,:cold=>true)
            xputs ">>> Setting #{slot} as STABLE in #{node}"
            node.r.cluster("setslot",slot,"stable")
          }
        # Case 3: There are no slots claiming to be in importing state, but
        # there is a migrating node that actually don't have any key. We
        # can just close the slot, probably a reshard interrupted in the middle.
        elsif importing.length == 0 && migrating.length == 1 && migrating[0].r.cluster("getkeysinslot",slot,10).length == 0
          migrating[0].r.cluster("setslot",slot,"stable")
        else
          xputs "[ERR] Sorry, Redis-trib can't fix this slot yet (work in progress). Slot is set as migrating in #{migrating.join(",")}, as importing in #{importing.join(",")}, owner is #{owner}"
        end
      end

      # Check if all the nodes agree about the cluster configuration
      def check_config_consistency
        if !is_config_consistent?
          cluster_error "[ERR] Nodes don't agree about configuration!"
        else
          xputs "[OK] All nodes agree about slots configuration."
        end
      end

      def is_config_consistent?
        signatures=[]
        @nodes.each{|n|
          signatures << n.get_config_signature
        }
        return signatures.uniq.length == 1
      end

      def wait_cluster_join
        print "Waiting for the cluster to join"
        while !is_config_consistent?
          print "."
          STDOUT.flush
          sleep 1
        end
        print "\n"
      end

      def alloc_slots
        nodes_count = @nodes.length
        masters_count = @nodes.length / (@replicas+1)
        masters = []
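
The script hard-codes ClusterHashSlots = 16384 because Redis Cluster divides the key space into 16384 hash slots. A key's slot is CRC16(key) mod 16384, using the XMODEM variant of CRC16. If the key contains a non-empty {...} hash tag, only the tag is hashed, so related keys land in the same slot. The standalone Ruby sketch below computes a key's slot without a Redis connection. The helper names are hypothetical, and on a running cluster the CLUSTER KEYSLOT command gives the authoritative answer.

```ruby
# Sketch: compute the Redis Cluster hash slot of a key in pure Ruby.
# CRC16 here is the XMODEM variant (polynomial 0x1021, initial value 0).
CLUSTER_HASH_SLOTS = 16384

def crc16(data)
  crc = 0
  data.each_byte do |byte|
    crc ^= byte << 8
    8.times do
      crc = (crc & 0x8000).zero? ? (crc << 1) : ((crc << 1) ^ 0x1021)
      crc &= 0xFFFF  # keep the register at 16 bits
    end
  end
  crc
end

def key_hash_slot(key)
  lbrace = key.index("{")
  if lbrace
    rbrace = key.index("}", lbrace + 1)
    # Hash only a non-empty {...} tag so related keys share a slot.
    key = key[(lbrace + 1)...rbrace] if rbrace && rbrace > lbrace + 1
  end
  crc16(key) % CLUSTER_HASH_SLOTS
end

puts format("0x%04X", crc16("123456789"))  # standard CRC16/XMODEM check value
puts key_hash_slot("{user1000}.following") == key_hash_slot("{user1000}.followers")
```

The first line prints 0x31C3, the published check value for CRC16/XMODEM. The second prints true, because both keys hash only the tag user1000.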