Installing Spark 3.0.0-preview2-bin-hadoop3.2 on CentOS 7

Posted by PHPdragon


Version information

CentOS: Linux localhost.localdomain 3.10.0-862.el7.x86_64 #1 SMP Fri Apr 20 16:44:24 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

JDK: Oracle jdk1.8.0_241, https://www.oracle.com/java/technologies/javase-jdk8-downloads.html

Hadoop: hadoop-3.2.1.tar.gz

Scala: scala-2.11.8.tgz, https://www.scala-lang.org/download/2.11.8.html

Spark: spark-3.0.0-preview2-bin-hadoop3.2.tgz, http://spark.apache.org/downloads.html

Set up passwordless SSH login to localhost

    # ssh-keygen
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    1b:e4:ff:13:55:69:6a:2f:46:10:b0:ec:42:fe:5b:80 root@localhost.localdomain
    The key's randomart image is:
    +--[ RSA 2048]----+
    | .... .|
    | . .. o.|
    | ..o . o. |
    | ooo +. |
    | ESo o.. |
    | o+. .o . |
    | ....... |
    | o.. |
    | . .. |
    +-----------------+

    # cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    # chmod 600 ~/.ssh/authorized_keys
    # ssh root@localhost
    Last login: Sun Mar 29 15:00:23 2020
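If the login above still prompts for a password, file permissions are a common cause: sshd typically ignores authorized_keys when it or the .ssh directory is too permissive. The helper below is a minimal sketch for checking that; the function name check_ssh_perms and the expected modes 700/600 are assumptions made for this example, not something the original setup mandates.

```shell
# Report whether the SSH directory and authorized_keys file have the
# restrictive modes assumed here (700 for the directory, 600 for the file).
# Usage: check_ssh_perms [ssh_dir]   (ssh_dir defaults to ~/.ssh)
check_ssh_perms() {
    ssh_dir="${1:-$HOME/.ssh}"
    keys="$ssh_dir/authorized_keys"
    [ -f "$keys" ] || { echo "missing: $keys"; return 1; }
    # stat -c %a prints the octal mode (GNU coreutils, as shipped with CentOS)
    dir_mode=$(stat -c %a "$ssh_dir")
    key_mode=$(stat -c %a "$keys")
    if [ "$dir_mode" = "700" ] && [ "$key_mode" = "600" ]; then
        echo "ok"
    else
        echo "fix modes: $ssh_dir=$dir_mode $keys=$key_mode"
        return 1
    fi
}
```

Once the modes are right, `ssh -o BatchMode=yes root@localhost true` should return without prompting; BatchMode makes ssh fail instead of asking for a password.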

Disable SELinux and the firewall

a. Permanently

In /etc/selinux/config, change SELINUX=enforcing to SELINUX=disabled, then reboot.

b. Temporarily (until the next reboot)

    # setenforce 0

Stop the firewall and keep it from starting at boot (CentOS 7 uses firewalld by default):

    # systemctl stop firewalld.service
    # systemctl disable firewalld.service
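The permanent SELinux change can also be scripted rather than edited by hand. The following is a small sketch; the function name disable_selinux_config is made up for this example, and it keeps a .bak copy of the file in case the edit needs to be reverted.

```shell
# Set SELINUX=disabled in an SELinux config file, keeping a .bak backup.
# Usage: disable_selinux_config [file]   (defaults to /etc/selinux/config)
disable_selinux_config() {
    cfg="${1:-/etc/selinux/config}"
    # Only the SELINUX= line is rewritten; SELINUXTYPE= is left untouched.
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    # Show the resulting setting so the change can be confirmed.
    grep '^SELINUX=' "$cfg"
}
```

The change only takes effect after a reboot, as with the manual edit.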

Install the JDK

Download the Java SDK from https://www.oracle.com/java/technologies/javase-jdk8-downloads.html

    mkdir -p /data/server/spark/
    cd /data/server/spark/
    rz    # select the downloaded file and upload it to the current directory
    tar zxvf jdk-8u241-linux-x64.tar.gz

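Install Scala

    cd /data/server/spark
    wget https://downloads.lightbend.com/scala/2.11.8/scala-2.11.8.tgz
    tar zxvf scala-2.11.8.tgz && rm -f scala-2.11.8.tgz

Note that Spark 3.0 is built against Scala 2.12 and ships its own Scala libraries, so this standalone Scala 2.11.8 installation is not used by Spark itself.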

安装Spark

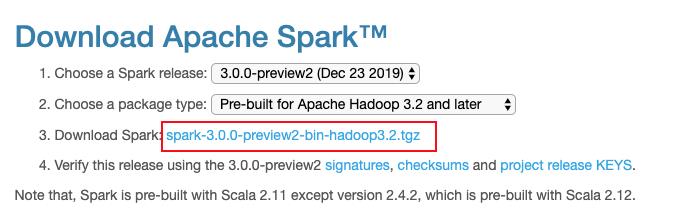

1. Go to http://spark.apache.org/downloads.html and download the matching version:

2. Download and extract it into /data/server/spark, then rename the directory

    cd /data/server/spark
    wget https://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-3.0.0-preview2/spark-3.0.0-preview2-bin-hadoop3.2.tgz
    tar zxvf spark-3.0.0-preview2-bin-hadoop3.2.tgz
    mv spark-3.0.0-preview2-bin-hadoop3.2/ 3.0.0-preview2-bin-hadoop3.2
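Because the archive comes from a mirror, it may be worth checking it against the SHA-512 checksum that Apache projects generally publish next to each release before extracting it. The sketch below is one way to do that; the function name verify_sha512 is hypothetical, and the expected digest has to be copied from the official download page (it is deliberately not filled in here).

```shell
# Compare a file's SHA-512 digest with an expected hex string.
# Usage: verify_sha512 <file> <expected_hex_digest>
verify_sha512() {
    file="$1"
    # Normalise the expected digest: strip spaces/newlines, lower-case it.
    expected=$(printf '%s' "$2" | tr -d ' \n' | tr 'A-F' 'a-f')
    # sha512sum prints "<digest>  <filename>"; keep only the digest.
    actual=$(sha512sum "$file" | cut -d ' ' -f 1)
    if [ "$actual" = "$expected" ]; then
        echo "checksum ok"
    else
        echo "checksum MISMATCH for $file"
        return 1
    fi
}
```

For example, `verify_sha512 spark-3.0.0-preview2-bin-hadoop3.2.tgz "<digest from the download page>"` prints "checksum ok" only when the two digests match.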

3. Edit /etc/profile and append the following at the end of the file:

    # Spark
    export SPARK_HOME=/data/server/spark/3.0.0-preview2-bin-hadoop3.2
    export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

Reload the environment variables

    source /etc/profile
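When this step is scripted or repeated, appending blindly leaves duplicate export lines in /etc/profile. A minimal sketch of an idempotent append follows; append_once is a helper name invented for this example.

```shell
# Append a line to a file only if that exact line is not already present.
# Usage: append_once <file> <line>
append_once() {
    file="$1"
    line="$2"
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (run as root); single quotes keep $PATH and $SPARK_HOME literal:
#   append_once /etc/profile 'export SPARK_HOME=/data/server/spark/3.0.0-preview2-bin-hadoop3.2'
#   append_once /etc/profile 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin'
```

Running the two example calls a second time leaves the file unchanged.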

4. Edit 3.0.0-preview2-bin-hadoop3.2/conf/spark-env.sh (create it from conf/spark-env.sh.template if it does not exist yet) and append the following:

    # Use a dedicated Java installation instead of the system Java
    export JAVA_HOME=/data/server/spark/jdk1.8.0_241
    export JRE_HOME=${JAVA_HOME}/jre
    # Hadoop, independent of any Hadoop settings in /etc/profile
    export HADOOP_HOME=/data/server/hadoop/3.2.1
    export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
    export YARN_CONF_DIR=$HADOOP_CONF_DIR
    # Spark
    export SPARK_MASTER_HOST=172.16.1.126
    # Default port: 7077
    export SPARK_MASTER_PORT=7077
    # Default port: 8080
    export SPARK_MASTER_WEBUI_PORT=8080
    export SPARK_WORKER_PORT=7078
    # Default port: 8081. It probably counts up from SPARK_MASTER_WEBUI_PORT,
    # so with several workers it likely does not need to be set (unverified).
    export SPARK_WORKER_WEBUI_PORT=8081
    export SPARK_WORKER_CORES=1
    #export SPARK_EXECUTOR_MEMORY=256M
    #export SPARK_DRIVER_MEMORY=256M
    #export SPARK_WORKER_MEMORY=256M

These settings can also be placed in /etc/profile instead.
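A misspelled variable name in spark-env.sh is silently ignored, so the daemon just falls back to its default. The sketch below lists exported names that are not in an expected set; both the function name unknown_exports and the EXPECTED_VARS list are assumptions for this example (the list covers only the variables used in this guide, not everything Spark reads).

```shell
# Names this guide expects to find in spark-env.sh (partial, illustrative list).
EXPECTED_VARS="JAVA_HOME JRE_HOME HADOOP_HOME HADOOP_CONF_DIR YARN_CONF_DIR
SPARK_MASTER_HOST SPARK_MASTER_PORT SPARK_MASTER_WEBUI_PORT
SPARK_WORKER_PORT SPARK_WORKER_WEBUI_PORT SPARK_WORKER_CORES
SPARK_WORKER_MEMORY SPARK_EXECUTOR_MEMORY SPARK_DRIVER_MEMORY"

# Print every exported name in the given file that is not in EXPECTED_VARS.
# Usage: unknown_exports <path/to/spark-env.sh>
unknown_exports() {
    # Extract NAME from lines of the form "export NAME=value";
    # commented-out lines do not match and are skipped.
    sed -n 's/^[[:space:]]*export[[:space:]]\{1,\}\([A-Za-z_][A-Za-z0-9_]*\)=.*/\1/p' "$1" |
    while read -r name; do
        # The unquoted echo collapses the list onto one space-separated line.
        case " $(echo $EXPECTED_VARS) " in
            *" $name "*) ;;          # expected name, nothing to report
            *) echo "$name" ;;       # not in the list: possible typo
        esac
    done
}
```

Empty output means every exported name is in the list; anything printed deserves a second look before starting the daemons.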

Start Spark

1. Rename the start and stop scripts so they do not clash with Hadoop's scripts of the same name

    cd /data/server/spark/3.0.0-preview2-bin-hadoop3.2/
    mv ./sbin/start-all.sh ./sbin/start-spark-all.sh
    mv ./sbin/stop-all.sh ./sbin/stop-spark-all.sh

2. Start Spark

    start-spark-all.sh

Master web UI (port set by SPARK_MASTER_WEBUI_PORT): http://172.16.1.126:8080

Worker web UI (port 8081 by default): http://172.16.1.126:8081
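The web UI address follows from the host and port configured in spark-env.sh. As a rough sketch, the helper below derives the master UI URL from that file; master_ui_url is a made-up name, and falling back to localhost and to 8080 (Spark's default master UI port) when a variable is unset is an assumption of this example. Note that it sources the file, so any commands in it are executed.

```shell
# Print the master web UI URL implied by a spark-env.sh file.
# Usage: master_ui_url <path/to/spark-env.sh>
master_ui_url() {
    (
        # Subshell: variables from the sourced file do not leak to the caller.
        unset SPARK_MASTER_HOST SPARK_MASTER_WEBUI_PORT
        . "$1"
        echo "http://${SPARK_MASTER_HOST:-localhost}:${SPARK_MASTER_WEBUI_PORT:-8080}"
    )
}
```

The printed URL can then be opened in a browser or passed to a tool such as curl to confirm that the master is up.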

PS:

https://blog.csdn.net/boling_cavalry/article/details/86747258
