使用scrapy框架爬取某商城部分数据并存入MongoDB

Posted 星辰

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了使用scrapy框架爬取某商城部分数据并存入MongoDB相关的知识,希望对你有一定的参考价值。

爬取电商网站的商品信息: URL为: https://www.zhe800.com/ju_type/baoyou 抓取不同分类下的商品数据 抓取内容为商品的名称, 价格数字, 商品图片 将商品图片二进制流, 商品名称和价格数字一同存储于MongoDB数据库 存储数据结构为: { ‘name’: ‘懒人神奇, 看电影必备’, ‘price’: ‘5.5’, ‘img’: …., “category”: ‘家纺’ }

这里抓包就不说了,很简单,利用xpath进行解析

- by.py

-

# -*- coding: utf-8 -*- import scrapy from ..items import BywItem class BySpider(scrapy.Spider): name = \'by\' # allowed_domains = [\'baidu.com\'] start_urls = [\'https://www.zhe800.com/ju_type/baoyou\']

def img_parse(self,response): item = BywItem() item[\'name\'] = response.meta[\'name\'] # print(name) item[\'cate\'] = response.meta[\'cate\'] # print(cate) item[\'price\'] = response.meta[\'price\'] item[\'img\'] = response.body yield item #详情 def xq_parse(self,response): cate = response.meta[\'cate\'] print(cate) xq_list = response.xpath(\'//div[@class="con "]\') print(xq_list) for xq in xq_list: name = xq.xpath(\'./h3/a/@title\').extract_first() print(name) price = xq.xpath(\'./h4/em/text()\').extract_first() print(price) img_link = \'https:\' +xq.xpath(\'.//a/img/@data-original\').extract_first() print(img_link) meta = { \'name\':name, \'price\':price, \'cate\':cate } yield scrapy.Request(url=img_link,callback=self.img_parse,meta=meta) def parse(self, response): a_list = response.xpath(\'//div[@class="area"]/a[position()>1]\') for a in a_list: cate = a.xpath(\'./em/text()\').extract_first() # print(cate) cate_link = \'https:\' +a.xpath(\'./@href\').extract_first() # print(cate_link) yield scrapy.Request(url=cate_link,callback=self.xq_parse,meta={\'cate\':cate})

-

- items.py

-

import scrapy class BywItem(scrapy.Item): # define the fields for your item here like: name = scrapy.Field() cate = scrapy.Field() price = scrapy.Field() img = scrapy.Field()

-

- pipelines.py

-

import pymongo conn = pymongo.MongoClient() #连接 db = conn.byw #创建数据库 table = db.by #创建表 class BywPipeline: def process_item(self, item, spider): table.insert_one(dict(item)) #插入数据 return item

-

- settings.py

-

#ua USER_AGENT = \'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (Khtml, like Gecko) Chrome/81.0.4044.92 Safari/537.36\' #robots协议 ROBOTSTXT_OBEY = False #管道 ITEM_PIPELINES = { \'byw.pipelines.BywPipeline\': 300, }

-

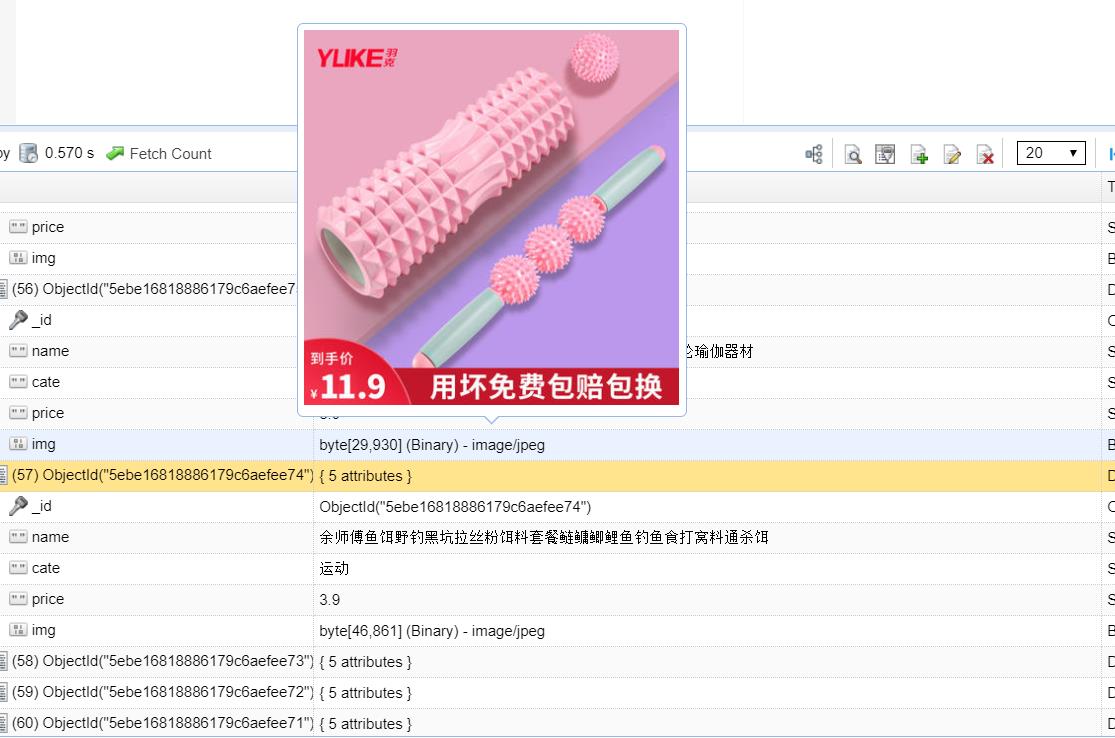

- 效果

以上是关于使用scrapy框架爬取某商城部分数据并存入MongoDB的主要内容,如果未能解决你的问题,请参考以下文章