Distributed crawling of the China agricultural products information website with scrapy-redis

Posted bkwxx



A distributed crawler based on scrapy_redis and MongoDB

Project requirements:

1: Automatically crawl the detailed data of every agricultural product listing

2: Store the crawled data

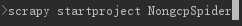

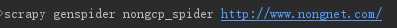

Step 1:

Create the Scrapy project

Create the spider file

Define the data to be scraped in items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class NongcpspiderItem(scrapy.Item):
        # Supply or demand marker
        supply = scrapy.Field()
        # Title
        title = scrapy.Field()
        # Publication time
        create_time = scrapy.Field()
        # Publishing organization
        unit = scrapy.Field()
        # Contact person
        contact = scrapy.Field()
        # Mobile phone number
        phone_number = scrapy.Field()
        # Address
        address = scrapy.Field()
        # Detailed address
        detail_address = scrapy.Field()
        # Time the product comes to market
        market_time = scrapy.Field()
        # Price
        price = scrapy.Field()

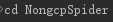

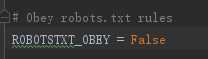

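In settings.py, change the setting shown in the screenshots above to False (most likely ROBOTSTXT_OBEY, which the Scrapy project template sets to True by default).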

Write the spider file nongcp_spider.py. The fields are parsed with XPath and regular expressions.

    # -*- coding: utf-8 -*-
    import re

    import scrapy

    from ..items import NongcpspiderItem


    class NongcpSpiderSpider(scrapy.Spider):
        name = 'nongcp_spider'
        # allowed_domains takes bare domain names, not URLs
        allowed_domains = ['www.nongnet.com']
        start_urls = ['http://www.nongnet.com/']

        def parse(self, response):
            """Extract the detail-page URLs and the next-page URL from a listing page."""
            detail_urls = response.xpath("//li[@class='lileft']/a/@href").extract()
            for detail_url in detail_urls:
                yield scrapy.Request(url=response.urljoin(detail_url), callback=self.detail_parse)

            next_url = response.xpath("//span[@id='ContentMain_lblPage']/a/@href").extract()
            # The second-to-last pager link is treated as "next page"
            if len(next_url) >= 2:
                yield scrapy.Request(url=response.urljoin(next_url[-2]), callback=self.parse)

        def detail_parse(self, response):
            """Parse the fields of a single listing."""
            items = NongcpspiderItem()

            title_result = response.xpath('//h1[@class="h1class"]/text()').extract_first()
            if title_result:
                title_result = title_result.strip()
                # Character 1 is the supply/demand marker; the title starts at character 3
                items['supply'] = title_result[1:2]
                items['title'] = title_result[3:]

            create_time = re.findall(r"<font color='999999'>时间:(\d+/\d+/\d+ \d+:\d+)", response.text)
            if create_time:
                items['create_time'] = create_time[0]

            unit = re.findall(r"发布单位</div><div class='xinxisxr'><a href='.*?\.aspx'>(.*?)</a>", response.text)
            if unit:
                items['unit'] = unit[0]

            price = response.xpath('//div[@class="scdbj1"]//text()').extract()
            if price:
                items['price'] = ''.join(price)

            yield items
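The slicing and regular-expression steps in the spider can be tried without running a crawl. The following is a minimal sketch using only the standard library. The `SAMPLE_HTML` fragment and the helper names (`split_title`, `extract_create_time`, `extract_unit`) are made up for illustration and only imitate the markup the spider appears to expect; the real pages may differ.

```python
import re

# Hypothetical fragment imitating the detail-page markup (an assumption, not real page data).
SAMPLE_HTML = (
    "<h1 class=\"h1class\"> [供]山东红富士苹果 </h1>"
    "<font color='999999'>时间:2019/3/15 10:30&nbsp;&nbsp;</font>"
    "<div>发布单位</div><div class='xinxisxr'>"
    "<a href='/user/123.aspx'>某某合作社</a></div>"
)

TIME_RE = re.compile(r"<font color='999999'>时间:(\d+/\d+/\d+ \d+:\d+)")
UNIT_RE = re.compile(r"发布单位</div><div class='xinxisxr'><a href='.*?\.aspx'>(.*?)</a>")


def split_title(raw_title):
    """Split a heading such as '[供]Title' into (supply marker, title)."""
    text = raw_title.strip()
    return text[1:2], text[3:]


def extract_create_time(html):
    """Return the publication time string, or None if the pattern is absent."""
    match = TIME_RE.search(html)
    return match.group(1) if match else None


def extract_unit(html):
    """Return the publishing organization, or None if the pattern is absent."""
    match = UNIT_RE.search(html)
    return match.group(1) if match else None


if __name__ == "__main__":
    print(split_title(" [供]山东红富士苹果 "))
    print(extract_create_time(SAMPLE_HTML))
    print(extract_unit(SAMPLE_HTML))
```

Returning None when a pattern is missing mirrors the `if ...:` guards in the spider, so a page with a missing field still yields an item.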

Write pipelines.py to store the data in MongoDB

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import pymongo


    class NongcpspiderPipeline(object):
        def process_item(self, item, spider):
            return item


    class MongoPipeline(object):
        def __init__(self):
            # Credentials go to MongoClient; Database.authenticate() was removed in PyMongo 4
            client = pymongo.MongoClient(
                host='127.0.0.1',
                port=27017,
                username='baikai',
                password='8Wxx.ypa',
                authSource='nong',
            )
            db = client['nong']
            self.connection = db['Info']

        def process_item(self, item, spider):
            # insert_one() replaces the removed Collection.save()
            self.connection.insert_one(dict(item))
            return item
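The pipeline only runs once it is registered in settings.py, and the scrapy-redis components named in the title are switched on in the same file. The post does not show these settings, so the fragment below is only a sketch: the module path `nongcpspider.pipelines` is guessed from the item class name, the Redis address assumes a local instance, and the priority number is arbitrary.

```python
# settings.py fragment (a sketch; project module name and Redis address are assumptions)

# Register the MongoDB pipeline; lower numbers run earlier.
ITEM_PIPELINES = {
    "nongcpspider.pipelines.MongoPipeline": 300,
}

# Let scrapy-redis schedule requests through a shared Redis queue,
# so several crawler processes can work on the same crawl.
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Deduplicate requests across all processes using a Redis-backed filter.
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Keep the queue and dedup set in Redis when a crawler stops, so a crawl can resume.
SCHEDULER_PERSIST = True

# Assumed local Redis instance; point every worker at the same server.
REDIS_URL = "redis://127.0.0.1:6379"
```

With scrapy-redis, a spider is usually also changed to subclass `scrapy_redis.spiders.RedisSpider` and to read its start URLs from a Redis key rather than from `start_urls`; whether the original project did this is not stated in the post.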

