Hive学习之二Hive SQL

Posted cac2020

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Hive学习之二Hive SQL相关的知识,希望对你有一定的参考价值。

环境

虚拟机:VMware 10

Linux版本:CentOS-6.5-x86_64

客户端:Xshell4

FTP:Xftp4

jdk8

hadoop-3.1.1

apache-hive-3.1.1

参考:官网hive操作手册

一、DDL

1、数据类型

data_type : primitive_type | array_type | map_type | struct_type | union_type -- (Note: Available in Hive 0.7.0 and later) primitive_type : TINYINT | SMALLINT | INT | BIGINT | BOOLEAN | FLOAT | DOUBLE | DOUBLE PRECISION -- (Note: Available in Hive 2.2.0 and later) | STRING | BINARY -- (Note: Available in Hive 0.8.0 and later) | TIMESTAMP -- (Note: Available in Hive 0.8.0 and later) | DECIMAL -- (Note: Available in Hive 0.11.0 and later) | DECIMAL(precision, scale) -- (Note: Available in Hive 0.13.0 and later) | DATE -- (Note: Available in Hive 0.12.0 and later) | VARCHAR -- (Note: Available in Hive 0.12.0 and later) | CHAR -- (Note: Available in Hive 0.13.0 and later) array_type : ARRAY < data_type > map_type : MAP < primitive_type, data_type > struct_type : STRUCT < col_name : data_type [COMMENT col_comment], ...> union_type : UNIONTYPE < data_type, data_type, ... > -- (Note: Available in Hive 0.7.0 and later)

2、数据库的创建、删除、修改;

3、表的创建、删除、修改;

举例:创建表

hive>CREATE TABLE person( id INT, name STRING, age INT, likes ARRAY<STRING>, address MAP<STRING,STRING> ) ROW FORMAT DELIMITED FIELDS TERMINATED BY \',\' COLLECTION ITEMS TERMINATED BY \'-\' MAP KEYS TERMINATED BY \':\' LINES TERMINATED BY \'\\n\';

查看表结构:

hive> desc person; OK id int name string age int likes array<string> address map<string,string> Time taken: 0.095 seconds, Fetched: 5 row(s) hive> desc formatted person; OK # col_name data_type comment id int name string age int likes array<string> address map<string,string> # Detailed Table Information Database: default OwnerType: USER Owner: root CreateTime: Tue Jan 29 11:41:12 CST 2019 LastAccessTime: UNKNOWN Retention: 0 Location: hdfs://PCS102:9820/root/hive_remote/warehouse/person Table Type: MANAGED_TABLE Table Parameters: COLUMN_STATS_ACCURATE {\\"BASIC_STATS\\":\\"true\\",\\"COLUMN_STATS\\":{\\"address\\":\\"true\\",\\"age\\":\\"true\\",\\"id\\":\\"true\\",\\"likes\\":\\"true\\",\\"name\\":\\"true\\"}} bucketing_version 2 numFiles 0 numRows 0 rawDataSize 0 totalSize 0 transient_lastDdlTime 1548733272 # Storage Information SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe InputFormat: org.apache.hadoop.mapred.TextInputFormat OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat Compressed: No Num Buckets: -1 Bucket Columns: [] Sort Columns: [] Storage Desc Params: collection.delim - field.delim , line.delim \\n mapkey.delim : serialization.format , Time taken: 0.157 seconds, Fetched: 39 row(s)

向表内加载数据:

data: 1,小明1,18,lol-book-movie,beijing:shangxuetang-shanghai:pudong 2,小明2,20,lol-book-movie,beijing:shangxuetang-shanghai:pudong 3,小明3,21,lol-book-movie,beijing:shangxuetang-shanghai:pudong 4,小明4,21,lol-book-movie,beijing:shangxuetang-shanghai:pudong 5,小明5,21,lol-book-movie,beijing:shangxuetang-shanghai:pudong 6,小明6,21,lol-book-movie,beijing:shangxuetang-shanghai:pudong

hive> LOAD DATA LOCAL INPATH \'/root/data\' INTO TABLE person; Loading data to table default.person OK Time taken: 0.185 seconds hive> select * from person; OK 1 小明1 18 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 2 小明2 20 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 3 小明3 21 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 4 小明4 21 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 5 小明5 21 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 6 小明6 21 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} Time taken: 0.126 seconds, Fetched: 6 row(s) hive>

备注:向表导入数据最好按照表定义的结构来安排数据,如果不按照这个格式,文件也能上传到HDFS,这是通过hive select查看的时候查不出来,无法格式化输出。

struct类型:

数据 /root/data:

1,xiaoming:12 2,xiaohong:11

建表 从linux本地文件系统导入数据:

hive> create table student( > id int, > info STRUCT <name:string,age:int> > ) > ROW FORMAT DELIMITED > FIELDS TERMINATED BY \',\' > COLLECTION ITEMS TERMINATED BY \':\' > ; OK Time taken: 0.712 seconds hive> show tables; OK logtbl person person3 psn2 psn3 psn4 student test01 Time taken: 0.1 seconds, Fetched: 8 row(s) hive> load data local inpath \'/root/data\' into table student; Loading data to table default.student OK Time taken: 0.365 seconds hive> select * from student; OK 1 {"name":"xiaoming","age":12} 2 {"name":"xiaohong","age":11} Time taken: 1.601 seconds, Fetched: 2 row(s) hive>

对比从hdfs导入数据:

先上传文件到hdfs 根目录:

[root@PCS102 ~]# hdfs dfs -put data /

[root@PCS102 ~]#

去掉 local:

hive> load data inpath \'/data\' into table student; Loading data to table default.student OK Time taken: 0.161 seconds hive> select * from student; OK 1 {"name":"xiaoming","age":12} 2 {"name":"xiaohong","age":11} 1 {"name":"xiaoming","age":12} 2 {"name":"xiaohong","age":11} Time taken: 0.118 seconds, Fetched: 4 row(s) hive>

导入之后,hdfs根目录下data文件被移动(注意不是复制)到student下面:

Hive 内部表:CREATE TABLE [IF NOT EXISTS] table_name,删除表时,元数据与数据都会被删除

Hive 外部表:CREATE EXTERNAL TABLE [IF NOT EXISTS] table_name LOCATION hdfs_path,删除外部表只删除metastore的元数据,不删除hdfs中的表数据

举例:

CREATE EXTERNAL TABLE person3( id INT, name STRING, age INT, likes ARRAY<STRING>, address MAP<STRING,STRING> ) ROW FORMAT DELIMITED FIELDS TERMINATED BY \',\' COLLECTION ITEMS TERMINATED BY \'-\' MAP KEYS TERMINATED BY \':\' LINES TERMINATED BY \'\\n\' LOCATION \'/usr/\';

Hive 建表

Create Table Like:

CREATE TABLE empty_key_value_store LIKE key_value_store;

Create Table As Select (CTAS):

CREATE TABLE new_key_value_store

AS

SELECT columA, columB FROM key_value_store;

4、分区 提高查询效率,根据需求确定分区

(1)创建分区(分区字段不能再表的列中)

举例:

CREATE TABLE psn2( id INT, name STRING, likes ARRAY<STRING>, address MAP<STRING,STRING> ) PARTITIONED BY (age int) ROW FORMAT DELIMITED FIELDS TERMINATED BY \',\' COLLECTION ITEMS TERMINATED BY \'-\' MAP KEYS TERMINATED BY \':\' LINES TERMINATED BY \'\\n\'; 否则报错: FAILED: SemanticException [Error 10035]: Column repeated in partitioning columns

hive> CREATE TABLE psn2( > id INT, > name STRING, > likes ARRAY<STRING>, > address MAP<STRING,STRING> > ) > PARTITIONED BY (age int) > ROW FORMAT DELIMITED > FIELDS TERMINATED BY \',\' > COLLECTION ITEMS TERMINATED BY \'-\' > MAP KEYS TERMINATED BY \':\' > LINES TERMINATED BY \'\\n\'; OK Time taken: 0.167 seconds hive> desc psn2; OK id int name string likes array<string> address map<string,string> age int # Partition Information # col_name data_type comment age int Time taken: 0.221 seconds, Fetched: 9 row(s) hive>

导入数据: hive> LOAD DATA LOCAL INPATH \'/root/data1\' INTO TABLE psn2 partition (age=10); Loading data to table default.psn2 partition (age=10) OK Time taken: 0.678 seconds hive> select * from psn2; OK 1 小明1 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10 2 小明2 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10 3 小明3 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10 4 小明4 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10 5 小明5 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10 6 小明6 ["lol","book","movie"] {"beijing":"shangxuetang","shanghai":"pudong"} 10 Time taken: 1.663 seconds, Fetched: 6 row(s) hive>

hive> LOAD DATA LOCAL INPATH \'/root/data1\' INTO TABLE psn2 partition (age=20); Loading data to table default.psn2 partition (age=20) OK Time taken: 0.36 seconds hive>

(2)修改分区

创建分区:

hive> CREATE TABLE psn3( > id INT, > name STRING, > likes ARRAY<STRING>, > address MAP<STRING,STRING> > ) > PARTITIONED BY (age int,sex string) > ROW FORMAT DELIMITED > FIELDS TERMINATED BY \',\' > COLLECTION ITEMS TERMINATED BY \'-\' > MAP KEYS TERMINATED BY \':\' > LINES TERMINATED BY \'\\n\'; OK Time taken: 0.061 seconds

导入数据:

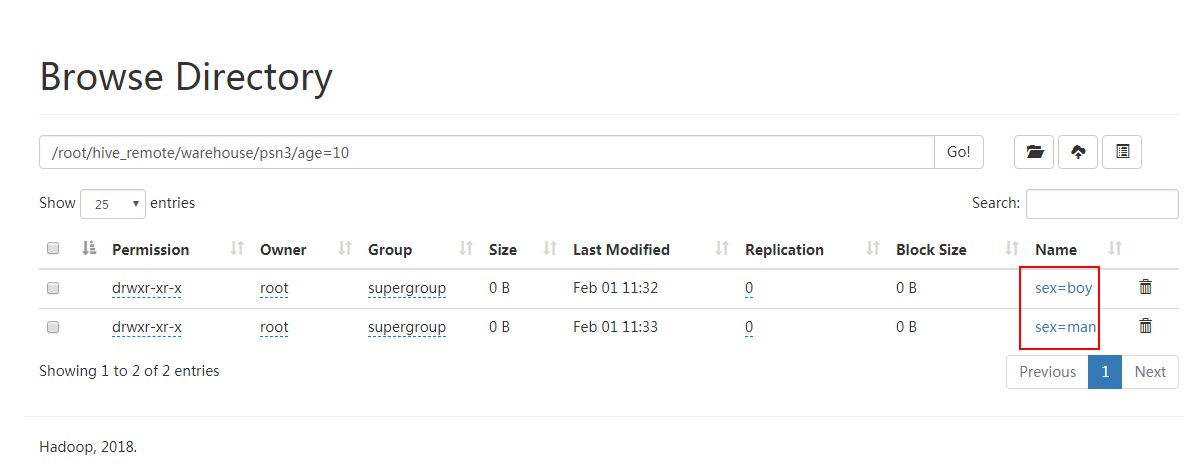

hive> LOAD DATA LOCAL INPATH \'/root/data1\' INTO TABLE psn3 partition (age=10,sex=\'boy\'); Loading data to table default.psn3 partition (age=10, sex=boy) OK Time taken: 0.351 seconds hive> LOAD DATA LOCAL INPATH \'/root/data1\' INTO TABLE psn3 partition (age=20,sex=\'boy\'); Loading data to table default.psn3 partition (age=20, sex=boy) OK Time taken: 0.339 seconds

增加分区:

hive> alter table psn3 add partition (age=10,sex=\'man\'); OK Time taken: 0.1 seconds hive> alter table psn3 add partition (age=20,sex=\'man\'); OK Time taken: 0.067 seconds

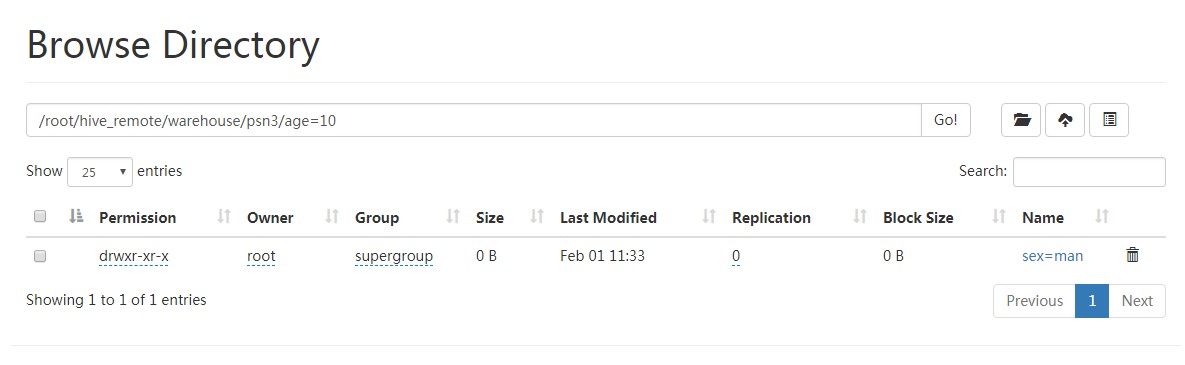

删除分区:

hive> alter table psn3 drop partition (sex=\'boy\'); Dropped the partition age=10/sex=boy Dropped the partition age=20/sex=boy OK Time taken: 0.472 seconds hive>

二、DML

导入数据

1、load 其实就是hdfs dfs -put 上传文件

2、insert 插入数据,作用:(1)复制表;(2)中间表;(3)向不同表插入不同数据

CREATE TABLE psn4( id INT, name STRING, likes ARRAY<STRING> ) ROW FORMAT DELIMITED FIELDS TERMINATED BY \',\' COLLECTION ITEMS TERMINATED BY \'-\' LINES TERMINATED BY \'\\n\'; from psn3 insert overwrite table psn4 select id,name,likes;

或者

from psn3 insert overwrite table psn4 select id,name,likes insert overwrite table psn5 select id,name;

三、Hive SerDe - Serializer and Deserializer

SerDe 用于做序列化和反序列化。

构建在数据存储和执行引擎之间,对两者实现解耦。

Hive通过ROW FORMAT DELIMITED以及SERDE进行内容的读写。

row_format

: DELIMITED

[FIELDS TERMINATED BY char [ESCAPED BY char]]

[COLLECTION ITEMS TERMINATED BY char]

[MAP KEYS TERMINATED BY char]

[LINES TERMINATED BY char]

: SERDE serde_name [WITH SERDEPROPERTIES (property_name=property_value, property_name=property_value, ...)]

建表:

hive> CREATE TABLE logtbl ( > host STRING, > identity STRING, > t_user STRING, > a_time STRING, > request STRING, > referer STRING, > agent STRING) > ROW FORMAT SERDE \'org.apache.hadoop.hive.serde2.RegexSerDe\' > WITH SERDEPROPERTIES ( > "input.regex" = "([^ ]*) ([^ ]*) ([^ ]*) \\\\[(.*)\\\\] \\"(.*)\\" (-|[0-9]*) (-|[0-9]*)" > ) > STORED AS TEXTFILE; OK Time taken: 0.059 seconds

数据:

192.168.57.4 - - [29/Feb/2016:18:14:35 +0800] "GET /bg-upper.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:35 +0800] "GET /bg-nav.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:35 +0800] "GET /asf-logo.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:35 +0800] "GET /bg-button.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:35 +0800] "GET /bg-middle.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET / HTTP/1.1" 200 11217 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET / HTTP/1.1" 200 11217 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /tomcat.css HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /tomcat.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /asf-logo.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /bg-middle.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /bg-button.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /bg-nav.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /bg-upper.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET / HTTP/1.1" 200 11217 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /tomcat.css HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /tomcat.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET / HTTP/1.1" 200 11217 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /tomcat.css HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /tomcat.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /bg-button.png HTTP/1.1" 304 - 192.168.57.4 - - [29/Feb/2016:18:14:36 +0800] "GET /bg-upper.png HTTP/1.1" 304 -

导入数据:

hive> load data local inpath \'/root/log\' into table logtbl; Loading data to table default.logtbl OK Time taken: 0.137 seconds

查询数据:

hive> select * from logtbl; OK 192.168.57.4 - - 29/Feb/2016:18:14:35 +0800 GET /bg-upper.png HTTP/1.1 304 - 192.168.57.4 - -