Distributed crawler: redis + mongodb + scrapy

Posted by 青春叛逆者

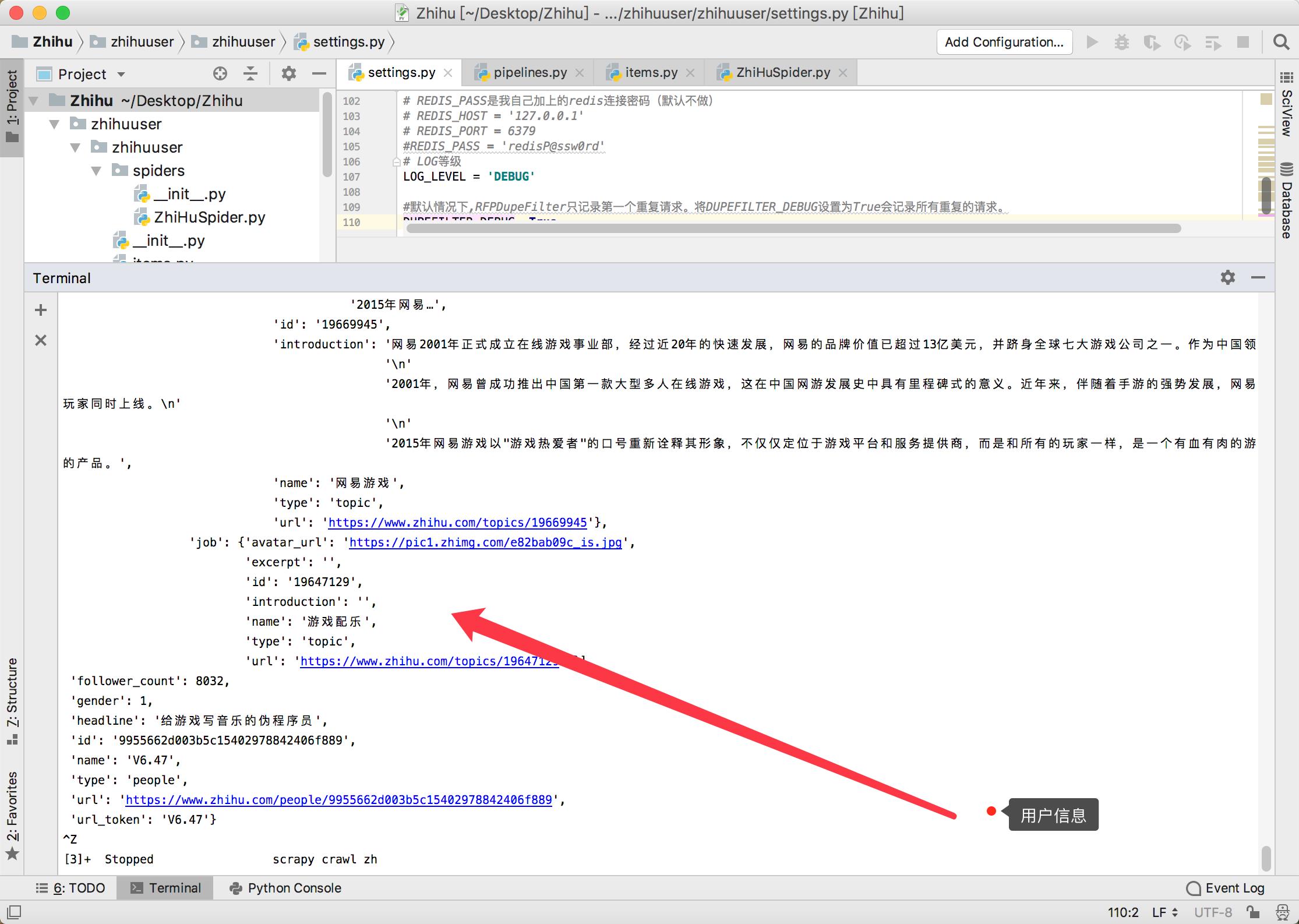

zhihuspider.py

    # -*- coding: utf-8 -*-
    import json

    import scrapy
    from scrapy import Request

    from zhihuuser.items import ZhihuuserItem


    class ZhihuspiderSpider(scrapy.Spider):
        name = 'zh'
        # url='https://www.zhihu.com/api/v4/members/letitiachow?include=allow_message%2Cis_followed%2Cis_following%2Cis_org%2Cis_blocking%2Cemployments%2Canswer_count%2Cfollower_count%2Carticles_count%2Cgender%2Cbadge%5B%3F(type%3Dbest_answerer)%5D.topics'
        allowed_domains = ["www.zhihu.com"]
        start_user = 'excited-vczh'

        # User detail endpoint
        user_url = 'https://www.zhihu.com/api/v4/members/{user}?include={include}'
        user_query = 'allow_message,is_followed,is_following,is_org,is_blocking,employments,answer_count,follower_count,articles_count,gender,badge[?(type=best_answerer)].topics'

        # Followee list (users this user follows)
        # follows_url='https://www.zhihu.com/api/v4/members/excited-vczh/followees?include='
        follows_url = 'https://www.zhihu.com/api/v4/members/{user}/followees?include={include}&offset={offset}&limit={limit}'
        follows_query = 'data[*].answer_count,articles_count,gender,follower_count,is_followed,is_following,badge[?(type=best_answerer)].topics'

        # Follower list
        followers_url = 'https://www.zhihu.com/api/v4/members/{user}/followers?include={include}&offset={offset}&limit={limit}'
        followers_query = 'data[*].answer_count,articles_count,gender,follower_count,is_followed,is_following,badge[?(type=best_answerer)].topics'

        def start_requests(self):
            # The start user's own profile
            yield Request(self.user_url.format(user=self.start_user, include=self.user_query),
                          self.parse_user)
            # The start user's followee list
            yield Request(self.follows_url.format(user=self.start_user, include=self.follows_query,
                                                  limit=20, offset=0),
                          self.parse_follows)
            # The start user's follower list
            yield Request(self.followers_url.format(user=self.start_user, include=self.followers_query,
                                                    limit=20, offset=0),
                          self.parse_followers)

        def parse_user(self, response):
            """Parse a user profile, yield it as an item, then queue that
            user's followee and follower lists.

            :param response: JSON response from the user detail endpoint
            :return: one ZhihuuserItem followed by two list Requests
            """
            # print(response.text)
            result = json.loads(response.text)
            item = ZhihuuserItem()
            for field in item.fields:
                # Copy only the keys that are declared as item fields
                if field in result.keys():
                    item[field] = result.get(field)
            yield item

            yield Request(
                self.follows_url.format(user=result.get('url_token'), include=self.follows_query,
                                        limit=20, offset=0),
                self.parse_follows)
            yield Request(
                self.followers_url.format(user=result.get('url_token'), include=self.followers_query,
                                          limit=20, offset=0),
                self.parse_followers)

        def parse_follows(self, response):
            # print(response.text)
            results = json.loads(response.text)
            # Request the profile of every user on this page
            if 'data' in results.keys():
                for result in results.get('data'):
                    yield Request(self.user_url.format(user=result.get('url_token'),
                                                       include=self.user_query),
                                  self.parse_user)
            # Check whether pagination has ended; if not, follow the next page
            if 'paging' in results.keys() and results.get('paging').get('is_end') is False:
                next_page = results.get('paging').get('next')
                yield Request(next_page, self.parse_follows)

        def parse_followers(self, response):
            results = json.loads(response.text)
            # Request the profile of every user on this page
            if 'data' in results.keys():
                for result in results.get('data'):
                    yield Request(self.user_url.format(user=result.get('url_token'),
                                                       include=self.user_query),
                                  self.parse_user)
            # Check whether pagination has ended; if not, follow the next page
            if 'paging' in results.keys() and results.get('paging').get('is_end') is False:
                next_page = results.get('paging').get('next')
                yield Request(next_page, self.parse_followers)
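The pagination check in `parse_follows` and `parse_followers` can be tried without any network access. The following is a minimal sketch, not part of the original project: `split_page` is a hypothetical helper, and both sample payloads (including the `example.invalid` URLs) are invented for illustration. It assumes the list endpoints return a `data` array plus a `paging` object with `is_end` and `next`, which is what the spider code relies on.

```python
import json


def split_page(body):
    """Return (url_tokens, next_page_url) for one followees/followers page.

    next_page_url is None when the payload has no 'paging' entry or when
    paging.is_end is not False, mirroring the spider's stop condition.
    """
    results = json.loads(body)
    tokens = [user.get('url_token') for user in results.get('data', [])]
    paging = results.get('paging') or {}
    next_page = paging.get('next') if paging.get('is_end') is False else None
    return tokens, next_page


# Hypothetical payloads, invented for illustration only.
FIRST_PAGE = json.dumps({
    'data': [{'url_token': 'user-a'}, {'url_token': 'user-b'}],
    'paging': {'is_end': False,
               'next': 'https://example.invalid/followees?offset=20&limit=20'},
})
LAST_PAGE = json.dumps({
    'data': [{'url_token': 'user-c'}],
    'paging': {'is_end': True,
               'next': 'https://example.invalid/followees?offset=40&limit=20'},
})

print(split_page(FIRST_PAGE))
print(split_page(LAST_PAGE))
```

In the spider, each token would become a `parse_user` request and a non-empty `next_page_url` would be requested with the same list callback.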

items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy
    from scrapy import Field


    class ZhihuuserItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        id = Field()
        name = Field()
        avatar_url = Field()
        headline = Field()
        description = Field()
        url = Field()
        url_token = Field()
        gender = Field()
        cover_url = Field()
        type = Field()
        badge = Field()

        answer_count = Field()
        articles_count = Field()
        commercial_question_count = Field()
        favorite_count = Field()
        favorited_count = Field()
        follower_count = Field()
        following_columns_count = Field()
        following_count = Field()
        pins_count = Field()
        question_count = Field()
        thank_from_count = Field()
        thank_to_count = Field()
        thanked_count = Field()
        vote_from_count = Field()
        vote_to_count = Field()
        voteup_count = Field()
        following_favlists_count = Field()
        following_question_count = Field()
        following_topic_count = Field()
        marked_answers_count = Field()
        mutual_followees_count = Field()
        hosted_live_count = Field()
        participated_live_count = Field()

        locations = Field()
        educations = Field()
        employments = Field()
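The item class acts as a whitelist: `parse_user` copies a key from the API result only when that key is a declared field. A rough sketch of that filtering with plain dictionaries follows; `DECLARED_FIELDS` is a hand-picked subset of the real field list, and `pick_declared` and the sample result are hypothetical, introduced only for illustration.

```python
# A small, hand-picked subset of the fields declared on ZhihuuserItem.
DECLARED_FIELDS = ('id', 'name', 'url_token', 'gender', 'follower_count')


def pick_declared(result, fields=DECLARED_FIELDS):
    """Keep only the keys of `result` that are declared item fields."""
    return {field: result[field] for field in fields if field in result}


# Hypothetical API result; 'is_followed' is not declared, so it is dropped.
sample = {'name': 'Example User', 'url_token': 'example-user',
          'follower_count': 12, 'is_followed': False}
print(pick_declared(sample))
```

Fields that are declared but absent from the result (`id` and `gender` here) are simply left unset, which matches how the spider leaves missing item fields unpopulated.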

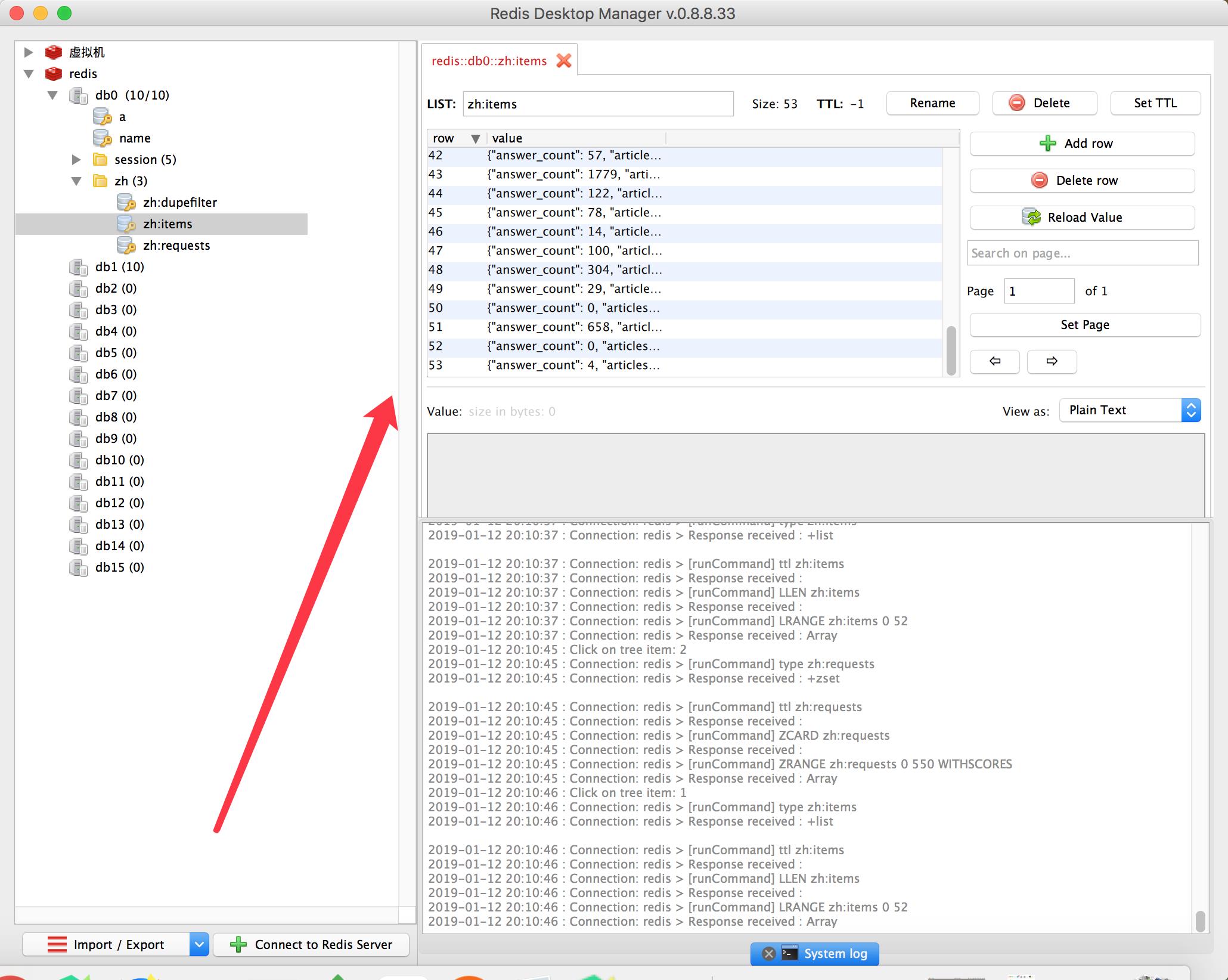

pipelines.py

    import pymongo


    class MongoPipeline(object):
        def __init__(self, mongo_uri, mongo_db):
            self.mongo_uri = mongo_uri
            self.mongo_db = mongo_db

        @classmethod
        def from_crawler(cls, crawler):
            # Read the connection settings from settings.py
            return cls(
                mongo_uri=crawler.settings.get('MONGO_URI'),
                mongo_db=crawler.settings.get('MONGO_DATABASE')
            )

        def open_spider(self, spider):
            self.client = pymongo.MongoClient(self.mongo_uri)
            self.db = self.client[self.mongo_db]

        def close_spider(self, spider):
            self.client.close()

        def process_item(self, item, spider):
            # Upsert keyed on url_token, so a user crawled twice is updated
            # rather than inserted again. update_one replaces the legacy
            # Collection.update call, which PyMongo 4 removed.
            self.db['user'].update_one({'url_token': item['url_token']},
                                       {'$set': dict(item)},
                                       upsert=True)
            return item
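The `$set` update with the upsert flag in `MongoPipeline.process_item` is what keeps the `user` collection free of duplicates when the same profile is reached through several followee and follower lists. The sketch below only emulates that behaviour with a plain dict standing in for the collection; it does not talk to MongoDB, and `upsert_user` and the sample records are hypothetical.

```python
def upsert_user(collection, item):
    """Emulate an update with {'$set': item} and upsert enabled.

    `collection` is a plain dict keyed by url_token, standing in for the
    MongoDB 'user' collection.
    """
    # Insert an empty document if this url_token has not been stored yet.
    document = collection.setdefault(item['url_token'], {})
    # '$set' overwrites the given fields and keeps every other stored field.
    document.update(item)
    return document


users = {}
upsert_user(users, {'url_token': 'example-user', 'name': 'Example', 'follower_count': 10})
upsert_user(users, {'url_token': 'example-user', 'follower_count': 11})
print(len(users), users['example-user'])
```

The second call updates `follower_count` in place and leaves `name` untouched, so a recrawl refreshes a record instead of adding a second one.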

settings.py

    # -*- coding: utf-8 -*-

    # Scrapy settings for zhihuuser project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://doc.scrapy.org/en/latest/topics/settings.html
    #     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = 'zhihuuser'

    SPIDER_MODULES = ['zhihuuser.spiders']
    NEWSPIDER_MODULE = 'zhihuuser.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'zhihuuser (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    DEFAULT_REQUEST_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language': 'en',
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36'
    }

    # Enable or disable spider middlewares
    # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'zhihuuser.middlewares.ZhihuuserSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'zhihuuser.middlewares.ZhihuuserDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://doc.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'zhihuuser.pipelines.MongoPipeline': 300,
        'scrapy_redis.pipelines.RedisPipeline': 400
    }

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

    # MongoDB connection used by MongoPipeline
    MONGO_URI = 'localhost'
    MONGO_DATABASE = 'Zhihu'

    # Use the scrapy_redis scheduler and duplicate filter so that all
    # workers share one request queue and one set of seen requests
    SCHEDULER = "scrapy_redis.scheduler.Scheduler"
    DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

    # Replace password, ip and port with the values of your own Redis server
    REDIS_URL = 'redis://root:password@ip:port'

    # Connection parameters for the Redis database
    # REDIS_PASS is a Redis connection password I added myself
    # REDIS_HOST = '127.0.0.1'
    # REDIS_PORT = 6379
    #REDIS_PASS = 'redisP@ssw0rd'

    # Log level
    LOG_LEVEL = 'DEBUG'

    # By default, RFPDupeFilter logs only the first duplicate request.
    # Setting DUPEFILTER_DEBUG to True logs all duplicate requests.
    DUPEFILTER_DEBUG = True
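The `SCHEDULER` and `DUPEFILTER_CLASS` settings are what make the crawl distributed: every worker consults the same store of request fingerprints in Redis, so a profile URL queued by one machine is skipped by the others. The sketch below illustrates the idea only. It is a simplification, not scrapy_redis's actual code: the fingerprint here hashes just the method and URL, a Python `set` stands in for the shared Redis set, and `fingerprint` and `SharedDupeFilter` are hypothetical names.

```python
import hashlib


def fingerprint(method, url):
    """Simplified request fingerprint: SHA-1 over the method and the URL."""
    return hashlib.sha1(f'{method.upper()} {url}'.encode('utf-8')).hexdigest()


class SharedDupeFilter:
    """Stand-in for the fingerprint set that all workers share via Redis."""

    def __init__(self):
        self.seen = set()

    def request_seen(self, method, url):
        """Return True if the request was seen before, else record it."""
        fp = fingerprint(method, url)
        if fp in self.seen:
            return True
        self.seen.add(fp)
        return False


# Two workers share one filter; the URL is one the spider would build.
shared = SharedDupeFilter()
url = 'https://www.zhihu.com/api/v4/members/excited-vczh?include=gender'
first_worker_skips = shared.request_seen('GET', url)
second_worker_skips = shared.request_seen('GET', url)
print(first_worker_skips, second_worker_skips)
```

Because the set lives outside any single process, adding more workers against the same Redis instance raises throughput without any of them fetching the same user twice.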
