Hadoop (21) - Data Cleaning (ELT), Simple Version

Posted by duoduotouhenying

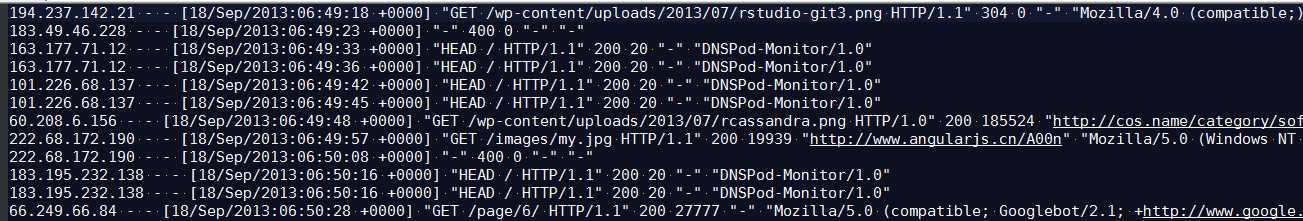

Suppose we have a log file like the one shown above.

The goal is to drop every record that has too few fields or whose HTTP status code indicates an error (400 or above).

LogBean (class Log)

    package com.nty.elt;

    /**
     * author nty
     * date time 2018-12-14 15:27
     */
    public class Log {

        private String remote_addr;      // client IP address
        private String remote_user;      // client user name; "-" means not set
        private String time_local;       // access time and time zone
        private String request;          // requested URL and HTTP protocol
        private String status;           // response status; 200 means success
        private String body_bytes_sent;  // size of the response body sent to the client
        private String http_referer;     // page the request was linked from
        private String http_user_agent;  // client browser information
        private boolean valid = true;    // whether the record is valid

        // Setters return this so that calls can be chained.
        public String getRemote_addr() { return remote_addr; }
        public Log setRemote_addr(String remote_addr) { this.remote_addr = remote_addr; return this; }

        public String getRemote_user() { return remote_user; }
        public Log setRemote_user(String remote_user) { this.remote_user = remote_user; return this; }

        public String getTime_local() { return time_local; }
        public Log setTime_local(String time_local) { this.time_local = time_local; return this; }

        public String getRequest() { return request; }
        public Log setRequest(String request) { this.request = request; return this; }

        public String getStatus() { return status; }
        public Log setStatus(String status) { this.status = status; return this; }

        public String getBody_bytes_sent() { return body_bytes_sent; }
        public Log setBody_bytes_sent(String body_bytes_sent) { this.body_bytes_sent = body_bytes_sent; return this; }

        public String getHttp_referer() { return http_referer; }
        public Log setHttp_referer(String http_referer) { this.http_referer = http_referer; return this; }

        public String getHttp_user_agent() { return http_user_agent; }
        public Log setHttp_user_agent(String http_user_agent) { this.http_user_agent = http_user_agent; return this; }

        public boolean isValid() { return valid; }
        public Log setValid(boolean valid) { this.valid = valid; return this; }

        @Override
        public String toString() {
            // Join all fields with the control character "\001" (U+0001)
            StringBuilder sb = new StringBuilder();
            sb.append(this.valid);
            sb.append("\001").append(this.remote_addr);
            sb.append("\001").append(this.remote_user);
            sb.append("\001").append(this.time_local);
            sb.append("\001").append(this.request);
            sb.append("\001").append(this.status);
            sb.append("\001").append(this.body_bytes_sent);
            sb.append("\001").append(this.http_referer);
            sb.append("\001").append(this.http_user_agent);
            return sb.toString();
        }
    }
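The separator used in toString() appears to be the control character U+0001, written as the octal escape "\001" in Java source. This is also the default field delimiter for Hive text tables, which is a likely reason for the choice. The sketch below shows how such a record is joined and split again. The class DelimiterDemo and its sample values are hypothetical and not part of the original project.

```java
import java.util.Arrays;

// Hypothetical helper, not part of the original project.
final class DelimiterDemo {

    // "\001" is an octal escape for the single character U+0001 (Ctrl-A).
    static final String SEP = "\001";

    // Join column values the same way Log.toString() does.
    static String join(String... values) {
        return String.join(SEP, values);
    }

    // Split a cleaned record back into its columns.
    // U+0001 is not a regex metacharacter; the limit -1 keeps trailing empty columns.
    static String[] split(String record) {
        return record.split(SEP, -1);
    }

    public static void main(String[] args) {
        // Illustrative values only.
        String record = join("true", "192.0.2.1", "-", "200");
        System.out.println(Arrays.toString(split(record)));
    }
}
```

Because U+0001 practically never occurs in URLs or user-agent strings, the columns can be split again without any quoting or escaping.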

Mapper class

    package com.nty.elt;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    /**
     * author nty
     * date time 2018-12-14 15:28
     */
    public class LogMapper extends Mapper<LongWritable, Text, Text, NullWritable> {

        private Text logKey = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Split one line into space-separated fields
            String[] fields = value.toString().split(" ");

            Log result = parseLog(fields);

            // Skip invalid records
            if (!result.isValid()) {
                return;
            }

            logKey.set(result.toString());

            // Emit the cleaned record
            context.write(logKey, NullWritable.get());
        }

        private Log parseLog(String[] fields) {
            Log log = new Log();

            if (fields.length > 11) {
                log.setRemote_addr(fields[0])
                        .setRemote_user(fields[1])
                        .setTime_local(fields[3].substring(1))
                        .setRequest(fields[6])
                        .setStatus(fields[8])
                        .setBody_bytes_sent(fields[9])
                        .setHttp_referer(fields[10]);

                if (fields.length > 12) {
                    log.setHttp_user_agent(fields[11] + " " + fields[12]);
                } else {
                    log.setHttp_user_agent(fields[11]);
                }

                // A status of 400 or above is an HTTP error.
                // A non-numeric status is also treated as invalid instead of failing the task.
                try {
                    if (Integer.parseInt(log.getStatus()) >= 400) {
                        log.setValid(false);
                    }
                } catch (NumberFormatException e) {
                    log.setValid(false);
                }
            } else {
                // Too few fields
                log.setValid(false);
            }
            return log;
        }
    }
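The field indices used in parseLog (0, 1, 3, 6, 8, 9, 10, 11) are easier to follow against a concrete line. The sketch below runs without Hadoop and assumes the input follows the nginx "combined" access log layout, which the field names suggest but the post does not state. The class FieldIndexDemo and the sample line are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-alone demo, not part of the original project.
final class FieldIndexDemo {

    // Made-up sample line in the assumed nginx "combined" layout.
    static final String SAMPLE = "192.0.2.10 - - [14/Dec/2018:15:27:00 +0800] "
            + "\"GET /index.html HTTP/1.1\" 200 612 \"-\" \"Mozilla/5.0 (X11)\"";

    // Pick out a few columns using the same indices as parseLog.
    static Map<String, String> extract(String line) {
        String[] f = line.split(" ");
        Map<String, String> out = new LinkedHashMap<>();
        if (f.length <= 11) {
            return out; // too few fields: the mapper would drop this line
        }
        out.put("remote_addr", f[0]);
        out.put("time_local", f[3].substring(1)); // strip the leading '['
        out.put("request", f[6]);                 // URL only; f[5] is the method
        out.put("status", f[8]);
        out.put("body_bytes_sent", f[9]);
        return out;
    }

    public static void main(String[] args) {
        extract(SAMPLE).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

Splitting on single spaces breaks the bracketed timestamp and the quoted request into several pieces, which is why the time starts at index 3 and the URL sits at index 6. A user agent with more than two space-separated words is cut off after fields[12] by the mapper above.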

Driver

    package com.nty.elt;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    /**
     * author nty
     * date time 2018-12-14 15:40
     */
    public class LogDriver {

        public static void main(String[] args) throws Exception {
            // 1 Get the job instance
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);

            // 2 Locate the jar by the driver class
            job.setJarByClass(LogDriver.class);

            // 3 Register the mapper
            job.setMapperClass(LogMapper.class);

            // 4 Set the final output key/value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(NullWritable.class);

            // 5 Set the input and output paths (local Windows paths)
            FileInputFormat.setInputPaths(job, new Path("d:\\Hadoop_test"));
            FileOutputFormat.setOutputPath(job, new Path("d:\\Hadoop_test_out"));

            // 6 Submit the job and exit with a status reflecting success or failure
            boolean ok = job.waitForCompletion(true);
            System.exit(ok ? 0 : 1);
        }
    }

Result
