Installing Oracle 11gR2 Database on AIX

Posted wandering-mind


1. Prerequisites

1.1 Certified operating systems

Certification Information for Oracle Database on IBM AIX on Power systems(Doc ID 1307544.1)

Certification Information for Oracle Database on IBM Linux on System z(Doc ID 1309988.1)

AIX 5L V5.3 TL 09 SP1 ("5300-09-01") or higher, 64 bit kernel (Part Number E10854-01)

AIX 6.1 TL 02 SP1 ("6100-02-01") or higher, 64-bit kernel

AIX 7.1 TL 00 SP1 ("7100-00-01") or higher, 64-bit kernel

AIX 7.2 TL 0 SP 1 ("7200-00-01") or higher, 64-bit kernel (11.2.0.4 only)

-- Check the operating system level

# oslevel -s

-- Check the kernel bit mode

# bootinfo -K
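The minimum levels listed above can be compared against the output of oslevel -s with a small helper. This is only a sketch and not part of any Oracle or IBM tooling: the function name oslevel_ok is hypothetical, and it assumes the level string has the form release-TL-SP-build (the sample values in the usage line are illustrative).

```shell
# Hypothetical helper: succeeds when an `oslevel -s` style string meets
# the minimum TL/SP listed in section 1.1 for its AIX release.
oslevel_ok() {
    level=$1
    case "$level" in
        5300-*) min_tl=9; min_sp=1 ;;   # 5300-09-01
        6100-*) min_tl=2; min_sp=1 ;;   # 6100-02-01
        7100-*) min_tl=0; min_sp=1 ;;   # 7100-00-01
        7200-*) min_tl=0; min_sp=1 ;;   # 7200-00-01 (11.2.0.4 only)
        *) return 1 ;;                  # release not in the list
    esac
    tl=$(printf '%s\n' "$level" | cut -d- -f2)
    sp=$(printf '%s\n' "$level" | cut -d- -f3)
    # Strip one leading zero so "09" compares as 9; default to 0.
    tl=${tl#0}; tl=${tl:-0}
    sp=${sp#0}; sp=${sp:-0}
    if [ "$tl" -gt "$min_tl" ]; then
        return 0
    fi
    [ "$tl" -eq "$min_tl" ] && [ "$sp" -ge "$min_sp" ]
}
```

On a live system it could be called as oslevel_ok "$(oslevel -s)" && echo "OS level OK".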

1.2 Hardware environment checks

1) At least 4 GB of physical memory

# lsattr -El sys0 -a realmem

2) Swap space requirements

RAM between 1 GB and 2 GB: swap of 1.5 times the RAM

RAM between 2 GB and 16 GB: swap equal to the RAM

RAM of more than 16 GB: 16 GB of swap

# lsps -a

If swap is insufficient, extend it with smit chps.
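The swap sizing rule above can be written as a small function. This is a sketch under stated assumptions: the name swap_required_mb is hypothetical, sizes are in MB, and RAM below 1 GB (which the rule does not cover) is treated like the 1 GB to 2 GB tier.

```shell
# Hypothetical helper: print the required swap in MB for a given RAM
# size in MB, following the three tiers listed above.
swap_required_mb() {
    ram_mb=$1
    if [ "$ram_mb" -le 2048 ]; then
        # Up to 2 GB: 1.5 times RAM
        echo $(( ram_mb * 3 / 2 ))
    elif [ "$ram_mb" -le 16384 ]; then
        # 2 GB to 16 GB: same as RAM
        echo "$ram_mb"
    else
        # More than 16 GB: fixed 16 GB
        echo 16384
    fi
}
```

Note that lsattr reports realmem in KB, so the value has to be divided by 1024 before it is passed in.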

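3) At least 1 GB in /tmp

If /tmp is too small, extend it, for example with chfs -a size=5G /tmp.

4) 80 GB is recommended for the Oracle software directory

5) System architecture: 64-bit hardware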

# getconf HARDWARE_BITMODE

6) Required packages and patches

<1> AIX 5.3 required packages:

bos.adt.base

bos.adt.lib

bos.adt.libm

bos.perf.libperfstat 5.3.9.0 or later

bos.perf.perfstat

bos.perf.proctools

rsct.basic.rte (For RAC configurations only)

rsct.compat.clients.rte (For RAC configurations only)

xlC.aix50.rte:10.1.0.0 or later

xlC.rte.10.1.0.0 or later

gpfs.base 3.2.1.8 or later (Only for RAC systems that will use GPFS cluster filesystems)

<2> AIX 6.1 required packages:

bos.adt.base

bos.adt.lib

bos.adt.libm

bos.perf.libperfstat 6.1.2.1 or later

bos.perf.perfstat

bos.perf.proctools

rsct.basic.rte (For RAC configurations only)

rsct.compat.clients.rte (For RAC configurations only)

xlC.aix61.rte:10.1.0.0 or later

xlC.rte.10.1.0.0 or later

gpfs.base 3.2.1.8 or later (Only for RAC systems that will use GPFS cluster filesystems)

<3> AIX 7.1 required packages:

bos.adt.base

bos.adt.lib

bos.adt.libm

bos.perf.libperfstat

bos.perf.perfstat

bos.perf.proctools

xlC.rte.11.1.0.2 or later

gpfs.base 3.3.0.11 or later (Only for RAC systems that will use GPFS cluster filesystems)

<i> Authorized Problem Analysis Reports (APARs) for AIX 5.3:

IZ42940

IZ49516

IZ52331

IY84780

See Note:1379908.1 for other AIX 5.3 patches that may be required

<ii> APARs for AIX 6.1:

IZ41855

IZ51456

IZ52319

IZ97457

IZ89165

IY84780

See Note:1264074.1 and Note:1379908.1 for other AIX 6.1 patches that may be required

<iii> APARs for AIX 7.1:

IZ87216

IZ87564

IZ89165

IZ97035

IY84780 <<< On AIX 5.3, apply APAR IY84780 to fix a known kernel issue with the per-CPU free lists

See Note:1264074.1 and Note:1379908.1 for other AIX 7.1 patches that may be required

-- Use lslpp -l xxx to confirm that a package is installed

-- AIX 7.1

lslpp -l bos.adt.base bos.adt.lib bos.adt.libm bos.perf.libperfstat bos.perf.perfstat bos.perf.proctools xlC.rte gpfs.base

/usr/sbin/instfix -i -k "IZ87216 IZ87564 IZ89165 IZ97035"
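Several of the filesets above carry an "or later" level (for example bos.perf.libperfstat 6.1.2.1 or xlC.rte 11.1.0.2). Comparing dotted levels by eye is error-prone, so a helper along the following lines may be useful. It is a sketch: version_ge is a hypothetical name, it compares at most four numeric fields, and obtaining the installed level from lslpp output is left out.

```shell
# Hypothetical helper: succeeds when dotted level $1 is at least $2.
# Missing fields count as 0, so 3.2.1 is treated as 3.2.1.0.
version_ge() {
    h=$(printf '%s' "$1" | tr . ' ')
    w=$(printf '%s' "$2" | tr . ' ')
    # Split the installed level into four fields.
    set -- $h
    h1=${1:-0}; h2=${2:-0}; h3=${3:-0}; h4=${4:-0}
    # Split the wanted level and line both up as $1..$4 and $5..$8.
    set -- $w
    set -- "$h1" "$h2" "$h3" "$h4" "${1:-0}" "${2:-0}" "${3:-0}" "${4:-0}"
    for field in 1 2 3 4; do
        if [ "$1" -gt "$5" ]; then return 0; fi
        if [ "$1" -lt "$5" ]; then return 1; fi
        shift   # move on to the next pair of fields
    done
    return 0    # all four fields are equal
}
```

For example, version_ge 6.1.2.1 6.1.2.1 succeeds, while version_ge 5.3.8.9 5.3.9.0 fails.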

1.3 Create users and groups and grant capabilities

-- Create the groups

# mkgroup -'A' id='1000' adms='root' oinstall

# mkgroup -'A' id='1100' adms='root' asmadmin

# mkgroup -'A' id='1200' adms='root' dba

# mkgroup -'A' id='1300' adms='root' asmdba

# mkgroup -'A' id='1301' adms='root' asmoper

-- Create the users

# mkuser id='1100' pgrp='oinstall' groups='asmadmin,asmdba,asmoper' home='/home/grid' grid

# mkuser id='1101' pgrp='oinstall' groups='dba,asmdba' home='/home/oracle' oracle

-- Show the users' capabilities

# lsuser -a capabilities grid

# lsuser -a capabilities oracle

-- Set the users' capabilities

# chuser capabilities=CAP_NUMA_ATTACH,CAP_BYPASS_RAC_VMM,CAP_PROPAGATE grid

# chuser capabilities=CAP_NUMA_ATTACH,CAP_BYPASS_RAC_VMM,CAP_PROPAGATE oracle

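-- Meaning of the capabilities

CAP_BYPASS_RAC_VMM: the process can bypass restrictions on VMM resource usage.

CAP_NUMA_ATTACH: the process can bind to specific resources.

CAP_PROPAGATE: child processes inherit all capabilities.

Note: In 11g, the GI and Oracle owner accounts must have the CAP_NUMA_ATTACH, CAP_BYPASS_RAC_VMM, and CAP_PROPAGATE capabilities. If any of them is missing, root.sh fails with the following error: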

Creating trace directory

User oracle is missing the following capabilities required to run CSSD in realtime:

CAP_NUMA_ATTACH,CAP_BYPASS_RAC_VMM,CAP_PROPAGATE

To add the required capabilities, please run:

/usr/bin/chuser capabilities=CAP_NUMA_ATTACH,CAP_BYPASS_RAC_VMM,CAP_PROPAGATE oracle

CSS cannot be run in realtime mode at /grid/crs/install/crsconfig_lib.pm line 8119.
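To catch this before running root.sh, the output of lsuser -a capabilities can be checked for the three required capabilities. The following is a sketch only: missing_caps is a hypothetical name, and it assumes the lsuser output has the form "user capabilities=A,B,C" on a single line.

```shell
# Hypothetical helper: given one line of `lsuser -a capabilities USER`
# output, print a comma-separated list of the required capabilities
# that are missing (empty output means all three are present).
missing_caps() {
    # Keep only the value after "capabilities=" and wrap it in commas
    # so that every capability can be matched as ",NAME,".
    have=",$(printf '%s' "$1" | sed 's/.*capabilities=//'),"
    missing=""
    for cap in CAP_NUMA_ATTACH CAP_BYPASS_RAC_VMM CAP_PROPAGATE; do
        case "$have" in
            *",$cap,"*) ;;
            *) missing="${missing:+$missing,}$cap" ;;
        esac
    done
    printf '%s\n' "$missing"
}
```

It could be used as missing_caps "$(lsuser -a capabilities oracle)", and any names it prints can then be added with chuser as shown earlier.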

-- Set the passwords for grid and oracle

# passwd oracle

# passwd grid

If logging in then fails with the following message:

[compat]: 3004-610 You are required to change your password.

Please choose a new one.

run these commands:

# pwdadm -f NOCHECK oracle

# pwdadm -f NOCHECK grid

1.4 Set environment variables for the grid and oracle users

su - grid

vi ~/.profile

export ORACLE_SID=+ASM1

export ORACLE_BASE=/oracle/app/grid

export ORACLE_HOME=/oracle/app/11.2.0/grid

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH:$HOME/bin

su - oracle

vi ~/.profile

export ORACLE_SID=orcl1

export ORACLE_BASE=/oracle/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export ORA_CRS_HOME=/oracle/app/11.2.0/grid

export LIBPATH=$ORACLE_HOME/lib

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:/bin:/usr/bin:/usr/sbin:/usr/local/bin:/usr/X11R6/bin:${HOME}/dba:$PATH

umask 022

export TNS_ADMIN=$ORA_CRS_HOME/network/admin

Configure the other nodes in the same way.

1.5 Create the software installation directories

mkdir -p /oracle/app/grid

mkdir -p /oracle/app/11.2.0/grid

chown -R grid:oinstall /oracle

mkdir -p /oracle/app/oracle

mkdir -p /oracle/app/oracle/product/11.2.0/db_1

chown -R oracle:oinstall /oracle/app/oracle

chmod -R 775 /oracle

1.6 Adjust shell limits

vi /etc/security/limits

default:

fsize = -1

core = -1

cpu = -1

data = -1

rss = -1

stack_hard = -1

stack = -1

nofiles = 65536

1.7 Check and configure kernel parameters

-- Show the maximum number of processes per user:

lsattr -El sys0 |grep maxuproc

lsattr -E -l sys0 -a maxuproc

-- Set the maximum number of processes per user to 16384:

smitty chgsys

Maximum number of PROCESSES allowed per user [16384]

or

chdev -l sys0 -a maxuproc=16384

-- Check ncargs, the ARG/ENV list size in 4 KB blocks (at least 128)

# lsattr -E -l sys0 -a ncargs

-- Set ncargs to 256

# chdev -l sys0 -a ncargs=256

-- Show the current minpout and maxpout values:

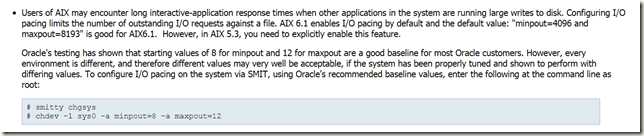

lsattr -E -l sys0 -a minpout

lsattr -E -l sys0 -a maxpout

-- Change the values

# smitty chgsys

# chdev -l sys0 -a minpout=8 -a maxpout=12

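Oracle testing indicates that starting values of minpout=8 and maxpout=12 are a good baseline for most Oracle customers.

1.8 Configure AIO

-- On AIX 6.1 and later, AIO is enabled automatically and needs no configuration. The recommended aio_maxreqs value is 64K (65536).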

# ioo -a | more

# ioo -o aio_maxreqs

# lsattr -El aio0 -a maxreqs #<< AIX 5.3

1.9 Tune memory parameters

-- Show the memory parameters

vmo -aF

-- Change the memory parameters:

vmo -p -o minperm%=3

vmo -p -o maxclient%=15

vmo -p -o maxperm%=15

vmo -p -o strict_maxclient=1

vmo -p -o strict_maxperm=1 <<< Doc ID 1526555.1 uses 0 for this value

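vmo -r -o page_steal_method=1 <<< takes effect after a reboot

vmo -p -o lru_file_repage=0 <<< the default on AIX 7.1 is 0

Note: lru_file_repage should be set to 0, which makes the VMM steal only file buffer cache pages and preserve computational memory (the SGA). The lru_file_repage parameter is only available on AIX 5.2 ML04 or later and AIX 5.3 ML01 or later.

-- Setting vmm_klock_mode=2 ensures that AIX kernel memory is pinned in memory (this is the default on AIX 7.1). On AIX 6.1, this option requires AIX 6.1 TL06 or later.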

-- Check the current setting:

# vmo -L vmm_klock_mode

-- Set the parameter:

# vmo -r -o vmm_klock_mode=2

-- AIX: database performance gets slower the longer the database runs (Doc ID 316533.1)

strict_maxperm = 0 (default)

strict_maxclient = 1 (default)

lru_file_repage = 0

maxperm% = 90 (IBM recommends not lowering the maxpin% value)

minperm% = 5 (physical RAM < 32 GB)

minperm% = 10 (physical RAM > 32 GB but < 64 GB)

minperm% = 20 (physical RAM > 64 GB)

v_pinshm = 1

maxpin% = ((SGA size / physical memory size) * 100) + 3
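As a worked example of the maxpin% formula and the minperm% tiers above, the two values can be computed with integer shell arithmetic. This is a sketch: the function names are hypothetical, the SGA and RAM sizes must use the same unit, the percentage is rounded up (the source does not say how to round), and RAM of exactly 32 GB or 64 GB is placed in the higher tier because the list above leaves those boundaries open.

```shell
# maxpin% = ((SGA size / physical memory size) * 100) + 3
# The SGA share is rounded up to the next whole percent.
maxpin_pct() {
    sga=$1
    ram=$2
    echo $(( (sga * 100 + ram - 1) / ram + 3 ))
}

# minperm% tier for a given amount of physical RAM in GB.
minperm_pct() {
    ram_gb=$1
    if [ "$ram_gb" -lt 32 ]; then
        echo 5
    elif [ "$ram_gb" -lt 64 ]; then
        echo 10
    else
        echo 20
    fi
}
```

With a 16 GB SGA on a 64 GB host, maxpin_pct 16384 65536 gives 28 (25% for the SGA plus 3).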

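1.10 Configure network parameters

-- Check

# lsattr -El sys0 -a pre520tune

pre520tune disable Pre-520 tuning compatibility mode True << compatibility mode is disabled, so the settings below need to be changed

# /usr/sbin/no -a | more

-- Run the following commands to change the settings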

/usr/sbin/no -r -o ipqmaxlen=512

/usr/sbin/no -p -o rfc1323=1

/usr/sbin/no -p -o sb_max=4194304

/usr/sbin/no -p -o tcp_recvspace=65536

/usr/sbin/no -p -o tcp_sendspace=65536

/usr/sbin/no -p -o udp_recvspace=655360 <<< 10 times udp_sendspace, but must be less than sb_max

/usr/sbin/no -p -o udp_sendspace=65536 <<< ((DB_BLOCK_SIZE * DB_MULTIBLOCK_READ_COUNT) + 4 KB) but no lower than 65536

/usr/sbin/no -p -o tcp_ephemeral_low=9000

/usr/sbin/no -p -o udp_ephemeral_low=9000

/usr/sbin/no -p -o tcp_ephemeral_high=65500

/usr/sbin/no -p -o udp_ephemeral_high=65500
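The two UDP buffer rules noted above (udp_sendspace derived from the database block size and multiblock read count, udp_recvspace at ten times that and below sb_max) can be checked with a short calculation. This is a sketch with hypothetical function names; all sizes are in bytes.

```shell
# udp_sendspace = (DB_BLOCK_SIZE * DB_MULTIBLOCK_READ_COUNT) + 4 KB,
# but never lower than 65536.
udp_sendspace() {
    size=$(( $1 * $2 + 4096 ))
    if [ "$size" -lt 65536 ]; then
        size=65536
    fi
    echo "$size"
}

# udp_recvspace = 10 * udp_sendspace; fails when the result is not
# below sb_max (second argument).
udp_recvspace() {
    recv=$(( $1 * 10 ))
    if [ "$recv" -ge "$2" ]; then
        echo "udp_recvspace $recv is not below sb_max $2" >&2
        return 1
    fi
    echo "$recv"
}
```

For an 8 KB block size and a multiblock read count of 16, udp_sendspace 8192 16 yields 135168, so the 65536 used above would be too small for that configuration.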

Note: For GI 11.2.0.2 installations, failing to set udp_sendspace causes root.sh to fail.

See MOS: 11.2.0.2 Grid Infrastructure Upgrade/Install on More Than One Node Cluster Fails With "gipchaLowerProcessNode: no valid interfaces found to node" in crsd.log (Doc ID 1280234.1)

If the installer's check of these parameters still fails, you have hit bug 13077654 (Doc ID 1373242.1), which can be worked around as follows:

1) vi /etc/rc.net

if [ -f /usr/sbin/no ]; then

/usr/sbin/no -r -o ipqmaxlen=512

/usr/sbin/no -p -o rfc1323=1

/usr/sbin/no -p -o sb_max=4194304

/usr/sbin/no -p -o tcp_recvspace=65536

/usr/sbin/no -p -o tcp_sendspace=65536

/usr/sbin/no -p -o udp_recvspace=655360

/usr/sbin/no -p -o udp_sendspace=65536

/usr/sbin/no -p -o tcp_ephemeral_low=9000

/usr/sbin/no -p -o udp_ephemeral_low=9000

/usr/sbin/no -p -o tcp_ephemeral_high=65500

/usr/sbin/no -p -o udp_ephemeral_high=65500

fi

2) As root, create a symbolic link

ln -s /usr/sbin/no /etc/no

1.11 NTP configuration

1) The time zone is typically Asia/Shanghai

-- Check the time zone of the operating system:

grep TZ /etc/environment

TZ=Asia/Shanghai

-- Check the time zone of the Oracle cluster:

grep TZ $GRID_HOME/crs/install/s_crsconfig_$(hostname)_env.txt

TZ=Asia/Shanghai

2) NTP synchronization settings: edit /etc/ntp.conf so that it contains the following:

#broadcastclient

server 127.127.0.1

driftfile /etc/ntp.drift

tracefile /etc/ntp.trace

slewalways yes

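Note: slewalways controls slewing. Its default is no, which means that unless you set it, NTP may adjust the clock by up to 1000 seconds in one step. According to IBM's documentation, slewthreshold defaults to 0.128 seconds when it is not specified. To use a different value, set slewthreshold explicitly; the unit is seconds.

3) Enable the slewing option for network time synchronization

-- In /etc/rc.tcpip, change the line start /usr/sbin/xntpd "$src_running" to: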

start /usr/sbin/xntpd "$src_running" "-x"

4) Start the xntpd daemon on the NTP client

# startsrc -s xntpd -a "-x"

5) Check the xntpd status

# lssrc -ls xntpd

-- While sys peer shows "insane", xntpd has not finished synchronizing. After a few minutes, sys peer shows the NTP server address (127.127.0.1), which means the client has synchronized with the server.

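6) Use ntpdate -d to see the time offset between the client and the time server

# ntpdate -d 127.127.0.1

offset: should be no more than a few seconds

Sign of the offset: a positive value means the NTP server is ahead of the NTP client; a negative value means the NTP server is behind it.

PS: If the server and client clocks differ by more than 1000 s, set the client's time manually first so that the difference is within 1000 s before synchronizing.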
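The interpretation of the offset described above (sign and the 1000-second limit) can be captured in a small function. This is a sketch: classify_offset and its labels are hypothetical, the offset is taken in seconds as a decimal number, and extracting the value from the ntpdate -d output is left out.

```shell
# Hypothetical helper: classify an NTP offset given in seconds.
# awk is used because the offset is normally a decimal fraction.
classify_offset() {
    awk -v off="$1" 'BEGIN {
        abs = (off < 0) ? -off : off
        if (abs > 1000)   print "set-clock-manually"  # beyond the 1000 s limit
        else if (off > 0) print "server-ahead"        # server is ahead of the client
        else if (off < 0) print "server-behind"       # server is behind the client
        else              print "in-sync"
    }'
}
```

For example, classify_offset -3.5 reports that the server is behind the client.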

1.12 Edit /etc/hosts

cp /etc/hosts /etc/hosts_$(date +%Y%m%d)

cat >> /etc/hosts <<EOF

127.0.0.1 loopback localhost

::1 loopback localhost

# Public Network - (bond0)

192.168.8.145 orcl1

192.168.8.146 orcl2

# Private Interconnect - (bond1)

192.168.168.145 orcl1-priv

192.168.168.146 orcl2-priv

# Public Virtual IP (VIP) addresses - (bond0:X)

192.168.8.147 orcl1-vip

192.168.8.148 orcl2-vip

# SCAN IP - (bond0:X)

192.168.8.149 orcl-scan

EOF

Note: The official documentation states: "The host name of each node must conform to the RFC 952 standard, which permits alphanumeric characters. Host names using underscores ("_") are not allowed." In other words, 11g host names must not contain an underscore.
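A quick check for offending host names can be done in the shell before editing /etc/hosts. This is a simplified sketch, not a full RFC 952 validator: valid_hostname is a hypothetical name, and it only checks that the short host name consists of letters, digits, and hyphens and does not start or end with a hyphen.

```shell
# Hypothetical helper: succeeds when the short host name uses only
# letters, digits and hyphens, with no leading or trailing hyphen.
valid_hostname() {
    case "$1" in
        "" | -* | *- | *[!A-Za-z0-9-]*) return 1 ;;
        *) return 0 ;;
    esac
}
```

For example, valid_hostname orcl1-priv succeeds, while valid_hostname orcl_1 fails because of the underscore.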

1.13 Configure SSH

# su - grid

$ mkdir ~/.ssh

$ chmod 700 ~/.ssh

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

On node 1:

-- Append the generated RSA and DSA public keys to authorized_keys

$ cat ~/.ssh/*.pub >> ~/.ssh/authorized_keys

-- Append the keys generated on node 2 to authorized_keys on node 1; with more nodes, repeat this for each of them

$ ssh node2 "cat ~/.ssh/*.pub" >> ~/.ssh/authorized_keys

-- Copy the final authorized_keys file to all other nodes

$ scp ~/.ssh/authorized_keys node2:~/.ssh/authorized_keys

-- Set the file permissions

$ chmod 600 ~/.ssh/authorized_keys

-- Repeat the steps above for the oracle user

-- Verify SSH user equivalence (as both oracle and grid) on all nodes

su - grid

export SSH='ssh -o ConnectTimeout=3 -o ConnectionAttempts=5 -o PasswordAuthentication=no -o StrictHostKeyChecking=no'

$ ${SSH} rac1 date

$ ${SSH} rac1-priv date

$ ${SSH} rac2 date

$ ${SSH} rac2-priv date
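With more than a couple of nodes, the verification above is easier to run as a loop. The following is a sketch: check_ssh is a hypothetical name, and it relies on the SSH variable exported above (falling back to plain ssh when it is unset).

```shell
# Hypothetical helper: run `date` on every host given as an argument
# and report which hosts could be reached without a password prompt.
# $SSH is left unquoted on purpose so that its options are split into
# separate words, as in the manual commands above.
check_ssh() {
    failed=0
    for host in "$@"; do
        if ${SSH:-ssh} "$host" date >/dev/null 2>&1; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}
```

It would be called with the public and private host names of every node, once as grid and once as oracle, and returns non-zero if any host fails.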

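1.14 Shared storage configuration

-- Capacity planning

1) OCR and voting disks: three 2 GB LUNs, forming one ASM disk group with normal redundancy

2) Control files: three 2 GB LUNs, forming three ASM disk groups

3) Redo: two 64 GB LUNs (RAID 1+0 recommended; avoid RAID 5 where possible), forming two ASM disk groups

4) Data files: LUNs of at most 500 GB each, forming one ASM disk group; keep a single disk group under 10 TB

-- Set the disk attributes (RAC: the storage devices must allow concurrent reads and writes)

Error ORA-27091, ORA-27072 When Mounting Diskgroup (Doc ID 422075.1)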

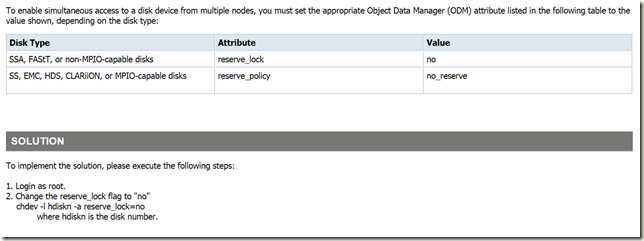

To enable simultaneous access to a disk device from multiple nodes, you must set the appropriate Object Data Manager (ODM) attribute listed in the following table to the value shown, depending on the disk type:

Disk Type                                        Attribute        Value

SSA, FAStT, or non-MPIO-capable disks            reserve_lock     no

ESS, EMC, HDS, CLARiiON, or MPIO-capable disks   reserve_policy   no_reserve

To determine whether the attribute has the correct value, enter a command similar to the following on all cluster nodes for each disk device that you want to use:

# /usr/sbin/lsattr -E -l hdiskn

If the required attribute is not set to the correct value on any node, then enter a command similar to one of the following on that node:

■ SSA and FAStT devices

# /usr/sbin/chdev -l hdiskn -a reserve_lock=no

■ ESS, EMC, HDS, CLARiiON, and MPIO-capable devices

# /usr/sbin/chdev -l hdiskn -a reserve_policy=no_reserve

-- Show the disk reservation attribute

lsattr -El hdisk4 | grep reserve

-- Change the disk attributes

-- node1

chdev -l hdisk4 -a reserve_policy=no_reserve

chdev -l hdisk5 -a reserve_policy=no_reserve

chdev -l hdisk6 -a reserve_policy=no_reserve

chdev -l hdisk7 -a reserve_policy=no_reserve

chdev -l hdisk8 -a reserve_policy=no_reserve

chdev -l hdisk9 -a reserve_policy=no_reserve

chdev -l hdisk10 -a reserve_policy=no_reserve

chdev -l hdisk11 -a reserve_policy=no_reserve

-- node2

chdev -l hdisk2 -a reserve_policy=no_reserve

chdev -l hdisk3 -a reserve_policy=no_reserve

chdev -l hdisk4 -a reserve_policy=no_reserve

chdev -l hdisk5 -a reserve_policy=no_reserve

chdev -l hdisk6 -a reserve_policy=no_reserve

chdev -l hdisk7 -a reserve_policy=no_reserve

chdev -l hdisk8 -a reserve_policy=no_reserve

chdev -l hdisk9 -a reserve_policy=no_reserve

-- Disk ownership and permissions

-- Show the current permissions

ls -l /dev/rhdisk*

-- Change the ownership and permissions (node1)

chown grid:asmadmin /dev/rhdisk4

chown grid:asmadmin /dev/rhdisk5

chown grid:asmadmin /dev/rhdisk6

chown grid:asmadmin /dev/rhdisk7

chown grid:asmadmin /dev/rhdisk8

chown grid:asmadmin /dev/rhdisk9

chown grid:asmadmin /dev/rhdisk10

chown grid:asmadmin /dev/rhdisk11

chmod 775 /dev/rhdisk4

chmod 775 /dev/rhdisk5

chmod 775 /dev/rhdisk6

chmod 775 /dev/rhdisk7

chmod 775 /dev/rhdisk8

chmod 775 /dev/rhdisk9

chmod 775 /dev/rhdisk10

chmod 775 /dev/rhdisk11

-- node2

chown grid:asmadmin /dev/rhdisk2

chown grid:asmadmin /dev/rhdisk3

chown grid:asmadmin /dev/rhdisk4

chown grid:asmadmin /dev/rhdisk5

chown grid:asmadmin /dev/rhdisk6

chown grid:asmadmin /dev/rhdisk7

chown grid:asmadmin /dev/rhdisk8

chown grid:asmadmin /dev/rhdisk9

chmod 775 /dev/rhdisk2

chmod 775 /dev/rhdisk3

chmod 775 /dev/rhdisk4

chmod 775 /dev/rhdisk5

chmod 775 /dev/rhdisk6

chmod 775 /dev/rhdisk7

chmod 775 /dev/rhdisk8

chmod 775 /dev/rhdisk9
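The per-disk commands above differ only in the hdisk number, so they can be generated with a loop. The following sketch prints the commands instead of running them, so the list can be reviewed first; disk_cmds is a hypothetical name, and it assumes the shared disks on a node are numbered consecutively.

```shell
# Hypothetical helper: print the chdev/chown/chmod commands used above
# for every hdisk number from $1 to $2 (inclusive). Nothing is executed.
disk_cmds() {
    n=$1
    while [ "$n" -le "$2" ]; do
        echo "chdev -l hdisk$n -a reserve_policy=no_reserve"
        echo "chown grid:asmadmin /dev/rhdisk$n"
        echo "chmod 775 /dev/rhdisk$n"
        n=$(( n + 1 ))
    done
}
```

For the layout used here that would be disk_cmds 4 11 on node1 and disk_cmds 2 9 on node2; once the output looks right, it can be piped to sh as root.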

-- Create symbolic links to give the disks stable names (optional)

-- node1

mkdir /sharedisk

chown grid:oinstall /sharedisk

chmod 775 /sharedisk

su - grid

ln -s /dev/rhdisk4 /sharedisk/asm_data1

ln -s /dev/rhdisk5 /sharedisk/asm_data2

ln -s /dev/rhdisk6 /sharedisk/asm_data3

ln -s /dev/rhdisk7 /sharedisk/asm_data4

ln -s /dev/rhdisk8 /sharedisk/asm_data5

ln -s /dev/rhdisk9 /sharedisk/asm_grid1

ln -s /dev/rhdisk10 /sharedisk/asm_grid2

ln -s /dev/rhdisk11 /sharedisk/asm_grid3

-- node2

mkdir /sharedisk

chown grid:oinstall /sharedisk

chmod 775 /sharedisk

su - grid

ln -s /dev/rhdisk2 /sharedisk/asm_data1

ln -s /dev/rhdisk3 /sharedisk/asm_data2

ln -s /dev/rhdisk4 /sharedisk/asm_data3

ln -s /dev/rhdisk5 /sharedisk/asm_data4

ln -s /dev/rhdisk6 /sharedisk/asm_data5

ln -s /dev/rhdisk7 /sharedisk/asm_grid1

ln -s /dev/rhdisk8 /sharedisk/asm_grid2

ln -s /dev/rhdisk9 /sharedisk/asm_grid3

2. Graphical installation

2.1 Pre-installation checks

su - grid

export AIXTHREAD_SCOPE=S << only needed on AIX 5L; S is the default on AIX 6.1 and later

./runcluvfy.sh stage -pre crsinst -n node1,node2 -fixup -verbose

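2.2 Install the GI software with the graphical installer

Log in as the grid user and change to the directory where the installation files were extracted.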

./runInstaller

-- The remaining steps in the graphical installer are omitted
