mariadb审计日志导入 hive

Posted 运维从入门到放弃

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了mariadb审计日志导入 hive相关的知识,希望对你有一定的参考价值。

我们使用的 mariadb, 用的这个审计工具 https://mariadb.com/kb/en/library/mariadb-audit-plugin/

这个工具一点都不考虑后期对数据的处理, 因为他的日志是这样的

20180727 11:40:17,aaa-main-mariadb-bjc-001,user,10.1.111.11,3125928,6493942844,QUERY,account,\'select

id, company_id, user_id, department, title, role, create_time, update_time, status,

is_del, receive_email, contact

from company

WHERE ( user_id = 101

and is_del = 0 )\',0

所以需要上 logstash 格式化一下

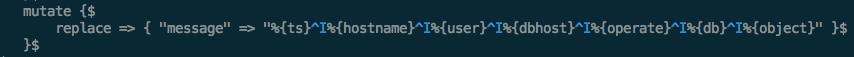

input { file { path => ["/data/logs/mariadb/server_audit.log"] start_position => "end" codec => multiline { charset => "ISO-8859-1" pattern => "^[0-9]{8}" negate => true what => "previous" } } } filter { if "quartz" in [message] { drop {} } mutate { gsub => [ "message", "\\s", " ", "message", "\\s+", " " ] } dissect { mapping => { "message" => "%{ts} %{+ts},%{hostname},%{user},%{dbhost},%{connid},%{queryid},%{operate},%{db},%{object}" } } mutate { replace => { "message" => "%{ts} %{hostname} %{user} %{dbhost} %{operate} %{db} %{object}" } } } output { file { path => "/data/logs/mariadb/%{host}_%{+YYYY-MM-dd_HH}.gz" gzip => true codec => line { format => "%{message}" } } }

注意 !!!

replace 那个地方各个字段之间我用的 tab 隔开的, 如果用 vim 一定不能写\\t, 这在hive中不识别的, 要在 vim 中先按 ctrl+v, 再按 tab

在 vim 中 set list 如下显示才对

mariadb 的审计日志不能按小时切割,上面 logstash 我把日志按小时生成 gz 文件了,后面就是推到 hdfs 中了, 期间试了各种方法

logstash的 output [webhdfs] 效率不行还丢数据

syslog-ng

rsyslog

统统不好用,最终直接使用 hdfs cli简直完美

我把 logstash 的配置和推送到 hdfs 的命令都打到 rpm 里,下面贴一下 rpm SPEC 文件 也记录一下吧

Name: logstash Version: 1.0.0 Release: 1%{?dist} Summary: specialize to mysql audit log collection License: GPL AutoReqProv: no %define __os_install_post %{nil} %description %prep %build %install rm -rf $RPM_BUILD_ROOT mkdir -p %{buildroot}/{apps,etc,usr,var/lib/logstash,var/log/logstash} cp -r %{_builddir}/etc/* %{buildroot}/etc/ cp -r %{_builddir}/usr/* %{buildroot}/usr/ cp -r %{_builddir}/apps/* %{buildroot}/apps/ %post chown -R root:root /usr/share/logstash chown -R root /var/log/logstash chown -R root:root /var/lib/logstash chown -R root:root /apps/hadoop-2.6.0 /usr/share/logstash/bin/system-install /etc/logstash/startup.options cat >> /etc/hosts <<EOF # for logstash push msyql audit to HDFS 这里填上 hdfs namenode和 datanode 的 hosts EOF echo "$(shuf -i 3-15 -n 1) * * * *" \'source /etc/profile;/apps/hadoop-2.6.0/bin/hdfs dfs -copyFromLocal /data/logs/mariadb/${HOSTNAME}_$(date -u +"\\%Y-\\%m-\\%d_\\%H" -d "last hour").gz hdfs://active_namenode/mysql_audit/$(date -u +"\\%Y-\\%m-\\%d")/ && rm -f /data/logs/mariadb/${HOSTNAME}_$(date -u +"\\%Y-\\%m-\\%d_\\%H" -d "last hour").gz\' >> /var/spool/cron/root initctl start logstash %files %defattr(-,root,root) /apps/hadoop-2.6.0 /etc/logstash /usr/share/logstash /var/lib/logstash /var/log/logstash %preun if [ $1 -eq 0 ]; then # Upstart if [ -r "/etc/init/logstash.conf" ]; then if [ -f "/sbin/stop" ]; then /sbin/stop logstash >/dev/null 2>&1 || true else /sbin/service logstash stop >/dev/null 2>&1 || true fi if [ -f "/etc/init/logstash.conf" ]; then rm /etc/init/logstash.conf fi # SYSV elif [ -r "/etc/init.d/logstash" ]; then /sbin/chkconfig --del logstash if [ -f "/etc/init.d/logstash" ]; then rm /etc/init.d/logstash fi # systemd else systemctl stop logstash >/dev/null 2>&1 || true if [ -f "/etc/systemd/system/logstash-prestart.sh" ]; then rm /etc/systemd/system/logstash-prestart.sh fi if [ -f "/etc/systemd/system/logstash.service" ]; then rm /etc/systemd/system/logstash.service fi fi if getent passwd logstash >/dev/null ; then userdel logstash fi if getent group logstash > /dev/null ; then groupdel logstash fi fi %postun %clean rm -rf $RPM_BUILD_ROOT

我把 hadoop 的程序也放进去了,方便

安装完 rpm 自动启动 logstash 省劲

现在日志已经按天写到 hdfs 中,下面再导入 hive 中

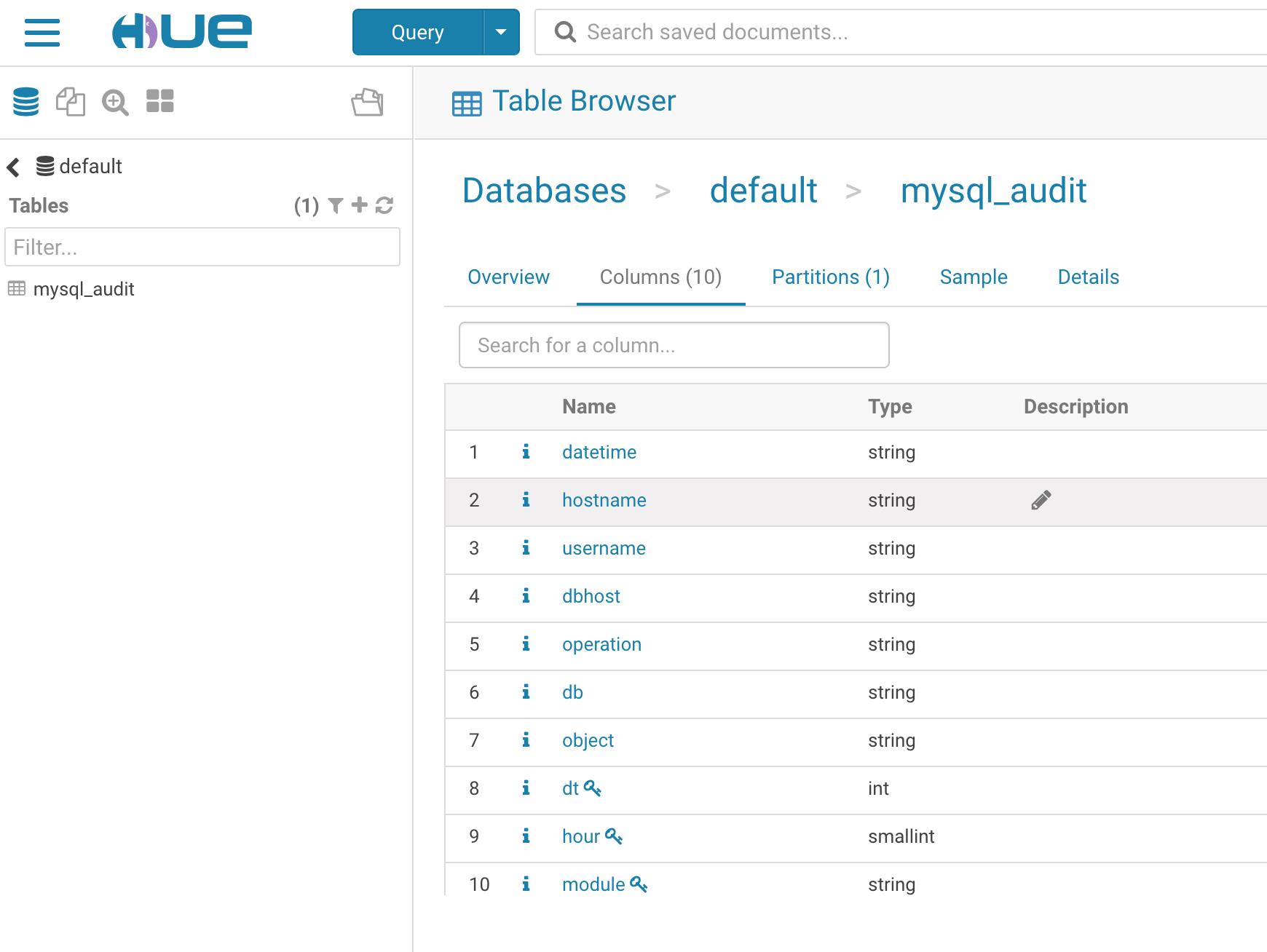

先创建 hive 表

create table mysql_audit(datetime string,hostname string,username string,dbhost string,operation string,db string,object string) partitioned by (dt int,hour smallint,module string) row format delimited fields terminated by \'\\t\';

分了3个 partition

load hdfs to hive

#!/bin/bash # Description: load hdfs mysql audit gz to hive # Author : quke # Date : 2018-07-27 source /root/.bash_profile cur_date=$(date -u +"%Y-%m-%d" -d "last hour") cur_date_short=$(date -u +"%Y%m%d" -d "last hour") cur_hour=$(date -u +"%H" -d "last hour") for fn in $(hdfs dfs -ls /mysql_audit/${cur_date}/*_${cur_hour}.gz|awk \'{print $NF}\');do host_name=$(echo $fn|awk -F [/_] \'{print $(NF-2)}\') module=${host_name%-bjc*} echo "load data inpath \'hdfs://ossmondb${fn}\' into table mysql_audit partition(dt=${cur_date_short},hour=${cur_hour},module=\'${module}\');" >> hive.sql done hive -f hive.sql && rm -f hive.sql

有任何疑问欢迎交流

以上是关于mariadb审计日志导入 hive的主要内容,如果未能解决你的问题,请参考以下文章