Integrating Kafka with Spring Boot in a project system: a business message consumption example


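pom.xml

<!-- The extracted source lists the same artifact twice with different versions; Maven expects a single declaration per artifact. -->
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>2.8.1</version>
</dependency>
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>0.8.2.0</version>
</dependency>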

application.yml configuration file

app:
  kafka:
    event-bus:
      cluster-name: teste_kcz8px7x01
      system-url: http://tk.kafkasit.loud.local:1080/mestService.pub
      topic: Test_COMMAND_BUS_PRODUCE
      product-topic-tokens: COMMAND_BUS_PRODUCE:test
      product-poolsize: 5
      consume-thread-count: 1
      consume-message-group-size: 20
      consume-topic-tokens: COMMAND_BUS_MANAGER:test01
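Spring Boot's relaxed binding is what lets kebab-case keys such as `product-poolsize` and `consume-thread-count` populate camelCase fields on a `@ConfigurationProperties` bean. The following is only a sketch of that name mapping in plain Java. The class `KebabToCamel` and its method are hypothetical names for illustration and are not Spring's actual implementation.

```java
/** Hypothetical helper that mimics how a kebab-case key lines up with a camelCase field name. */
final class KebabToCamel {

    private KebabToCamel() {
    }

    /** Converts e.g. "consume-thread-count" to "consumeThreadCount". */
    static String toCamel(String kebab) {
        StringBuilder out = new StringBuilder(kebab.length());
        boolean upperNext = false;
        for (char c : kebab.toCharArray()) {
            if (c == '-') {
                upperNext = true; // drop the dash and capitalize the next character
            } else if (upperNext) {
                out.append(Character.toUpperCase(c));
                upperNext = false;
            } else {
                out.append(c);
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        String[] keys = {"cluster-name", "product-poolsize", "consume-message-group-size"};
        for (String key : keys) {
            System.out.println(key + " -> " + toCamel(key));
        }
    }
}
```

Note that `product-poolsize` maps to `productPoolsize` (lowercase "s"), which matches the field name used in `EventBusKafkaConf`.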

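Startup class

QiDongManagerApp.java

// Bean definitions declared in the application's startup class.

// Binds the app.kafka.event-bus.* properties onto EventBusKafkaConf.
@Bean
@ConfigurationProperties(prefix = "app.kafka.event-bus")
public EventBusKafkaConf eventBusKafkaConf() {
    return new EventBusKafkaConf();
}

// Producer bean, created only when the "sit" or "prod" profile is active.
@Bean
@Profile({"sit", "prod"})
public TestCommandBusProduce TestCommandBusProduce(EventBusKafkaConf eventBusKafkaConf) {
    return new TestCommandBusProduceImpl(eventBusKafkaConf);
}

// Consumer bean, wired with the message listener; same profiles as the producer.
@Bean
@Profile({"sit", "prod"})
public TestCommandBusConsumer TestCommandBusConsumer(EventBusKafkaConf eventBusKafkaConf, IStringMessageConsumeListener ConsumeListener) {
    return new TestCommandBusConsumer(eventBusKafkaConf, ConsumeListener);
}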

Event listener configuration

EventBusKafkaConf.java

@Data
public class EventBusKafkaConf {

    /** Cluster name */
    private String clusterName;

    /** Service address */
    private String systemUrl;

    /** Command bus: topic */
    private String topic;

    private String productTopicTokens;

    /** Producer pool size */
    private int productPoolsize;

    private int consumeMessageGroupSize;

    private int consumeThreadCount;

    private String consumeTopicTokens;
}

TestCommandBusProduce.java

public interface TestCommandBusProduce extends InitializingBean, DisposableBean {

    /**
     * Sends a single message wrapped in an envelope.
     *
     * @param id      unique message id
     * @param type    message type
     * @param message message payload
     */
    void sendSingleString(String id, String type, String message);

    /**
     * Sends a message to a specific partition.
     *
     * @param id      unique message id
     * @param type    message type
     * @param key     partition key
     * @param message message payload
     */
    void sendSingleStringWithKey(String id, String type, String key, String message);

    /**
     * Sends a batch of messages.
     *
     * @param list message payloads
     */
    void batchSendString(String id, String type, List<String> list);

    void batchSendList(List<String> list);

    void sendSingleString(String message);
}

TestCommandBusProduceImpl.java

// IKafkaProducer, ProduceConfig, ProducerPool and KeyedString are not kafka-clients classes;
// they appear to come from an in-house Kafka wrapper library that the article does not show.
@Slf4j
public class TestCommandBusProduceImpl implements InitializingBean, DisposableBean, TestCommandBusProduce {

    private IKafkaProducer kafkaProducer;

    private EventBusKafkaConf kafkaConf;

    public TestCommandBusProduceImpl(EventBusKafkaConf kafkaConf) {
        this.kafkaConf = kafkaConf;
    }

    /** Builds the producer pool from the bound configuration once the bean is initialized. */
    @Override
    public void afterPropertiesSet() throws Exception {
        int poolSize = kafkaConf.getProductPoolsize();
        String systemUrl = kafkaConf.getSystemUrl();
        String clusterName = kafkaConf.getClusterName();
        String topicTokens = kafkaConf.getProductTopicTokens();
        ProduceConfig produceConfig = new ProduceConfig(poolSize, systemUrl, clusterName, topicTokens);
        kafkaProducer = new ProducerPool(produceConfig);
    }

    /**
     * Rebuilds the producer pool with different topic tokens.
     *
     * @param topicTokens topic tokens to use instead of the configured ones
     * @throws Exception if the pool cannot be created
     */
    public void setProperties(String topicTokens) throws Exception {
        int poolSize = kafkaConf.getProductPoolsize();
        String systemUrl = kafkaConf.getSystemUrl();
        String clusterName = kafkaConf.getClusterName();
        ProduceConfig produceConfig = new ProduceConfig(poolSize, systemUrl, clusterName, topicTokens);
        kafkaProducer = new ProducerPool(produceConfig);
    }

    @Override
    public void destroy() throws Exception {
        if (kafkaProducer != null) {
            kafkaProducer.close();
        }
    }

    /**
     * Wraps the payload in a JSON envelope (id, type, eventTime, data) and sends it to the configured topic.
     *
     * @param message message payload
     */
    @Override
    public void sendSingleString(String id, String type, String message) {
        JSONObject jsonObject = new JSONObject();
        jsonObject.put("id", id);
        jsonObject.put("type", type);
        jsonObject.put("eventTime", new Date());
        jsonObject.put("data", message);
        log.info("sendSingleString jsonObject={}", jsonObject);
        String topic = kafkaConf.getTopic();
        sendSingleString(topic, jsonObject.toString());
    }

    @Override
    public void sendSingleString(String message) {
        log.info("sendSingleString message={}", message);
        sendSingleString(kafkaConf.getTopic(), message);
    }

    private void sendSingleString(String topic, String message) {
        // kafkaProducer.sendString(topic, message);
        KeyedString keyedMessage;
        String batchId = getBatchId(message);
        if (StringUtils.isNotEmpty(batchId)) {
            keyedMessage = new KeyedString(batchId, message);
        } else {
            keyedMessage = new KeyedString("Test-event-stream", message);
        }
        // Keyed send to preserve message order
        kafkaProducer.sendKeyedString(topic, keyedMessage);
    }

    /**
     * Chooses a partition key per business scenario so that different scenarios can use different partitions.
     *
     * @param message JSON envelope produced by sendSingleString
     * @return the key for this message, or null if none applies or the message cannot be parsed
     */
    private String getBatchId(String message) {
        try {
            JSONObject msg = JSONObject.parseObject(message);
            String type = msg.getString("type");
            JSONObject data = JSONObject.parseObject(msg.getString("data"));
            // Business scenario: data check
            if (TaskInfoConstants.SEND_CONTACT_CHECK.equals(type)
                    || TaskInfoConstants.SEND_CONTACT_CHECK_RESULT.equals(type)) {
                return data.getString("batchId");
            }
            // Business scenario: calculation
            if (TaskInfoConstants.SEND_CONTACT_BILL.equals(type)
                    || TaskInfoConstants.SEND_CONTACT_BILL_RESULT.equals(type)
                    || TaskInfoConstants.SEND_BILL_CALCSTATUS.equals(type)) {
                return data.getString("batchId");
            }
            // Business scenario: calculation
            if (TaskInfoConstants.WITHHOLD_CONTACT.equals(type)
                    || TaskInfoConstants.WITHHOLD_CONTACT_RESULT.equals(type)
                    || TaskInfoConstants.WITHHOLD_REPORT_RESULT.equals(type)) {
                return data.getString("batchId");
            }
            // Business scenario
            if (TaskInfoConstants.ICS_CONTRACT.equals(type)) {
                return data.getString("contractNo");
            }
            // Business scenario: callback
            if (TaskInfoConstants.ZH_SALARY.equals(type)) {
                return data.getString("taskId");
            }
        } catch (Exception e) {
            log.error(e.getMessage(), e);
        }
        return null;
    }

    /**
     * Sends a batch of messages.
     *
     * @param list message payloads
     */
    @Override
    public void batchSendString(String id, String type, List<String> list) {
        log.info("batchSendString id={}, type={}, list={}", id, type, list);
        // Keyed send to preserve message order
        String topic = kafkaConf.getTopic();
        batchSendString(topic, list);
    }

    private void batchSendString(String topic, List<String> list) {
        // kafkaProducer.batchSendString(topic, list);
        // Every message gets the same key to preserve message order
        List<KeyedString> keyedStringList = Lists.newArrayList();
        for (String message : list) {
            keyedStringList.add(new KeyedString("Test-event-stream", message));
        }
        kafkaProducer.batchSendKeyedString(topic, keyedStringList);
    }

    /**
     * Sends a batch of messages.
     *
     * @param list message payloads
     */
    @Override
    public void batchSendList(List<String> list) {
        log.info("batchSendList list={}", list);
        // Unlike batchSendString above, this call passes the messages on without wrapping them in KeyedString.
        String topic = kafkaConf.getTopic();
        kafkaProducer.batchSendString(topic, list);
    }

    @Override
    public void sendSingleStringWithKey(String id, String type, String key, String message) {
        JSONObject jsonObject = new JSONObject();
        jsonObject.put("id", id);
        jsonObject.put("type", type);
        jsonObject.put("eventTime", new Date());
        jsonObject.put("data", message);
        log.info("sendSingleStringWithKey key={}, jsonObject={}", key, jsonObject);
        String topic = kafkaConf.getTopic();
        KeyedString keyedMessage = new KeyedString(key, jsonObject.toString());
        // Keyed send to preserve message order
        kafkaProducer.sendKeyedString(topic, keyedMessage);
    }
}
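The keyed sends in this class rely on a general Kafka property: records with the same key go to the same partition, and ordering is only guaranteed within a partition. A minimal sketch of that idea follows. It uses `String.hashCode()` as a stand-in hash (Kafka's default partitioner hashes the serialized key with murmur2), and the class name, partition count and sample keys are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical model of key-based partitioning; not the wrapper library's or Kafka's real code. */
final class KeyPartitionSketch {

    private KeyPartitionSketch() {
    }

    /** Maps a key to a partition index in [0, partitionCount) using a stand-in hash. */
    static int partitionFor(String key, int partitionCount) {
        return Math.floorMod(key.hashCode(), partitionCount);
    }

    /** Groups {key, message} pairs by partition, keeping send order inside each partition. */
    static Map<Integer, List<String>> route(List<String[]> keyedMessages, int partitionCount) {
        Map<Integer, List<String>> partitions = new TreeMap<>();
        for (String[] keyed : keyedMessages) {
            int partition = partitionFor(keyed[0], partitionCount);
            partitions.computeIfAbsent(partition, p -> new ArrayList<>()).add(keyed[1]);
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<String[]> sent = Arrays.asList(
                new String[] {"batch-1", "check"},
                new String[] {"batch-2", "check"},
                new String[] {"batch-1", "bill"});
        // Both "batch-1" messages end up in the same list, in the order they were sent.
        System.out.println(route(sent, 3));
    }
}
```

If the wrapper partitions by key the way plain Kafka does, one consequence follows for the code above: every message that falls back to the fixed key `Test-event-stream` shares a single partition, so those messages stay ordered relative to each other but cannot be consumed in parallel.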


TestCommandBusConsumer.java

public class TestCommandBusConsumer implements InitializingBean {

    private EventBusKafkaConf kafkaConf;

    private IStringMessageConsumeListener ConsumeListener;

    public TestCommandBusConsumer(EventBusKafkaConf kafkaConf, IStringMessageConsumeListener ConsumeListener) {
        super();
        this.kafkaConf = kafkaConf;
        this.ConsumeListener = ConsumeListener;
    }

    /** Registers the consumer once the bean is initialized. */
    @Override
    public void afterPropertiesSet() throws Exception {
        initKafkaConfig();
    }

    /** Builds the consume configuration and registers the listener for the configured topic. */
    public void initKafkaConfig() throws KafkaException {
        ConsumeOptionalConfig optionalConfig = new ConsumeOptionalConfig();
        optionalConfig.setMessageGroupSize(kafkaConf.getConsumeMessageGroupSize());
        optionalConfig.setAutoOffsetReset(AutoOffsetReset.NOW);
        ConsumeConfig pickupConsumeConfig = getConsumeConfig(kafkaConf.getTopic());
        KafkaConsumerRegister.registerStringConsumer(pickupConsumeConfig, ConsumeListener, optionalConfig);
    }

    private ConsumeConfig getConsumeConfig(String topicName) {
        return new ConsumeConfig(kafkaConf.getConsumeTopicTokens(), kafkaConf.getSystemUrl(),
                kafkaConf.getClusterName(), topicName, kafkaConf.getConsumeThreadCount());
    }
}
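The listener registered here receives its messages as a `List`, and the configuration carries a `consume-message-group-size` of 20. Assuming that setting caps how many messages the wrapper hands to the listener per call (the wrapper's real behavior is not shown in this article), the grouping could be sketched as below. `MessageGrouper` and `group` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: split polled messages into listener-sized groups. */
final class MessageGrouper {

    private MessageGrouper() {
    }

    /** Splits messages into consecutive groups of at most groupSize elements, preserving order. */
    static <T> List<List<T>> group(List<T> messages, int groupSize) {
        if (groupSize <= 0) {
            throw new IllegalArgumentException("groupSize must be positive");
        }
        List<List<T>> groups = new ArrayList<>();
        for (int from = 0; from < messages.size(); from += groupSize) {
            int to = Math.min(from + groupSize, messages.size());
            groups.add(new ArrayList<>(messages.subList(from, to)));
        }
        return groups;
    }

    public static void main(String[] args) {
        List<Integer> polled = new ArrayList<>();
        for (int i = 0; i < 45; i++) {
            polled.add(i);
        }
        for (List<Integer> oneCall : group(polled, 20)) {
            System.out.println("listener call with " + oneCall.size() + " messages");
        }
    }
}
```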

TestCommandBusListener.java

@Slf4j
@Component
public class TestCommandBusListener implements IStringMessageConsumeListener {

    @Resource
    private ContactAreaConfigService contactAreaConfigService;

    @Resource
    private IAgContactInfoSnapshotService iAgContactInfoSnapshotService;

    @Resource
    private BillDispatcherService billDispatcherService;

    @Resource
    private YtBillDispatcherService ytBillDispatcherService;

    @Resource
    private IFileSysDateService iFileSysDateService;

    @Autowired
    private ApplicationEventPublisher publisher;

    @Resource
    private IAgContactInfoBatchService iAgContactInfoBatchService;

    @Resource
    private ContactAreaJtInfoService contactAreaJtInfoService;

    @Resource
    private ExceptionNotifier exceptionNotifier;

    @Autowired
    private MessageReceiver messageReceiver;

    @Override
    public void onMessage(List list) throws KafkaConsumeRetryException {
        try {
            DataPermissionHodler.disablePermissionFilter();

            // Keep message logging separate from message processing
            for (int i = 0; i < list.size(); i++) {
                JSONObject jsonObject = JSON.parseObject(list.get(i).toString());
                publisher.publishEvent(new KafkaEvent(jsonObject.getString("id"), jsonObject.getString("refId"),
                        jsonObject.getString("type"), jsonObject.getDate("eventTime"),
                        jsonObject.getString("data")));
            }

            // Hand messages over for asynchronous processing
            for (int i = 0; i < list.size(); i++) {
                JSONObject jsonObject = JSON.parseObject(list.get(i).toString());
                Message msg = new KafkaEvent(jsonObject.getString("id"), jsonObject.getString("refId"),
                        jsonObject.getString("type"), jsonObject.getDate("eventTime"),
                        jsonObject.getString("data"));
                messageReceiver.pushMessage(msg);
            }

            // Dispatch by message type
            for (int i = 0; i < list.size(); i++) {
                JSONObject jsonObject = JSON.parseObject(list.get(i).toString());
                String type = jsonObject.getString("type");
                if (TaskInfoConstants.SEND_CONTACT_CHECK_RESULT.equals(type)) {
                    billDispatcherService.csAndJsCheck(jsonObject.getJSONObject("data"));
                }
                if (TaskInfoConstants.SEND_CONTACT_BILL_RESULT.equals(type)) {
                    billDispatcherService.csAndJsJs(jsonObject.getJSONObject("data"));
                }
                if (TaskInfoConstants.SEND_CONTRACT_CHANGED.equals(type)) {
                    contactAreaConfigService.sysnContractConf(jsonObject.getJSONObject("data"));
                    contactAreaJtInfoService.sysnContractConfJtInfo(jsonObject.getJSONObject("data"));
                }
                // if (TaskInfoConstants.RETURN_BILL_DATA.equals(type)) {
                //     iFileSysDateService.updatesysFileAttbill(jsonObject);
                // }
                if (TaskInfoConstants.WITHHOLD_CONTACT_RESULT.equals(type)) {
                    ytBillDispatcherService.csAndJsJs(jsonObject.getJSONObject("data"));
                }
                if (TaskInfoConstants.WITHHOLD_REPORT_RESULT.equals(type)) {
                    ytBillDispatcherService.csAndYtJsEndJs(jsonObject.getJSONObject("data"));
                }
                if (TaskInfoConstants.SEND_BILL_CALCULATE_INFO.equals(type)) {
                    BillCalculateParam billCalculateParam = JSON.parseObject(
                            JSON.toJSONString(jsonObject.getJSONObject("data")), BillCalculateParam.class);
                    iAgContactInfoBatchService.deleteBillCalculateData(billCalculateParam);
                }
            }
        } catch (Exception e) {
            // Exceptions are reported and logged here rather than rethrown as KafkaConsumeRetryException.
            exceptionNotifier.notify(e);
            log.info("ContactAccountListener list={}", JsonUtil.toJson(list));
            log.error(e.getMessage(), e);
        }
    }
}
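The chain of `if` checks on `type` in `onMessage` is a dispatch table written out by hand. As an alternative that is not part of the original code, the same routing could be expressed as a map from message type to handlers. The type strings and handler bodies below are placeholders, since the real values of `TaskInfoConstants` are not shown in the article.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical type-to-handler registry; an alternative design, not the article's implementation. */
final class TypeDispatcher {

    private final Map<String, List<Consumer<String>>> handlers = new HashMap<>();

    /** Registers a handler for a type; several handlers may share one type. */
    TypeDispatcher on(String type, Consumer<String> handler) {
        handlers.computeIfAbsent(type, t -> new ArrayList<>()).add(handler);
        return this;
    }

    /** Runs every handler registered for the type, in registration order; returns how many ran. */
    int dispatch(String type, String data) {
        List<Consumer<String>> matched = handlers.getOrDefault(type, Collections.emptyList());
        for (Consumer<String> handler : matched) {
            handler.accept(data);
        }
        return matched.size();
    }

    public static void main(String[] args) {
        TypeDispatcher dispatcher = new TypeDispatcher()
                .on("CHECK_RESULT", data -> System.out.println("check: " + data))
                .on("CONTRACT_CHANGED", data -> System.out.println("sync config: " + data))
                .on("CONTRACT_CHANGED", data -> System.out.println("sync jt info: " + data));
        dispatcher.dispatch("CONTRACT_CHANGED", "{\"contractNo\":\"C-1\"}");
        dispatcher.dispatch("UNKNOWN", "{}"); // no handler registered, nothing happens
    }
}
```

With this shape, adding a new message type means registering one more handler instead of editing the loop body, at the cost of one more level of indirection when reading the listener.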

Integrating the Kafka message middleware with Spring Boot

1. Create a Spring Boot project and add the following dependencies

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
    <optional>true</optional>
</dependency>
<!-- https://mvnrepository.com/artifact/org.springframework.kafka/spring-kafka -->
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
<!-- https://mvnrepository.com/artifact/org.apache.kafka/kafka-clients -->
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
</dependency>
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>fastjson</artifactId>
    <version>1.2.41</version>
</dependency>

2. Configuration file

server.port=4400
# Kafka configuration
#============== kafka ===================
# Kafka broker addresses; more than one may be listed, separated by commas
spring.kafka.bootstrap-servers=192.168.102.88:9092
# Default consumer group id
spring.kafka.consumer.group-id=jkafka.demo
# earliest: if a partition has a committed offset, consume from it; otherwise consume from the beginning
# latest: if a partition has a committed offset, consume from it; otherwise consume only records produced from now on
# none: if every partition has a committed offset, consume from those offsets; if any partition has none, throw an exception
spring.kafka.consumer.auto-offset-reset=latest
spring.kafka.consumer.enable-auto-commit=false
# Only takes effect when enable-auto-commit is true
spring.kafka.consumer.auto-commit-interval=100
# Deserializers for the consumer's message key and value
spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

3. Define the message entity

import java.io.Serializable;
import java.util.Date;

import lombok.Data;

@Data
public class Message implements Serializable {

    private static final long serialVersionUID = 2522280475099635810L;

    // Message id
    private String id;

    // Message content
    private String msg;

    // Time the message was sent
    private Date sendTime;
}

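4. Message producer class

import com.alibaba.fastjson.JSON;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Component;

@Component
public class KfkaProducer {

    private static final Logger logger = LoggerFactory.getLogger(KfkaProducer.class);

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    public void send(String topic, Message message) {
        String payload = JSON.toJSONString(message);
        try {
            logger.info("Sending message...");
            kafkaTemplate.send(topic, payload);
            // send() is asynchronous: reaching this line means the record was handed to the
            // producer, not that the broker has acknowledged it.
            logger.info("Message submitted ----->>>>> message = {}", payload);
        } catch (Exception e) {
            // The original called e.getMessage() and discarded the result; log the failure instead.
            logger.error("Failed to send message to topic {}", topic, e);
        }
    }
}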
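`KafkaTemplate.send` is asynchronous and returns a future (a `ListenableFuture` in spring-kafka 2.x, a `CompletableFuture` in 3.x), so a log line written right after the call cannot know whether the broker accepted the record. The sketch below shows the callback pattern using only a plain `CompletableFuture`. `fakeSend` is a stand-in invented for this example and is not a spring-kafka API.

```java
import java.util.concurrent.CompletableFuture;

/** Hypothetical model of reporting a send outcome from the future instead of right after the call. */
final class AsyncSendSketch {

    private AsyncSendSketch() {
    }

    /** Stand-in for an asynchronous send: fails for an empty topic, otherwise "acknowledges". */
    static CompletableFuture<String> fakeSend(String topic, String payload) {
        CompletableFuture<String> result = new CompletableFuture<>();
        if (topic == null || topic.isEmpty()) {
            result.completeExceptionally(new IllegalArgumentException("topic must not be empty"));
        } else {
            result.complete(topic + " <- " + payload);
        }
        return result;
    }

    /** Builds the log text only once the future has completed, successfully or not. */
    static String sendAndReport(String topic, String payload) {
        return fakeSend(topic, payload)
                .handle((ack, error) -> error == null
                        ? "sent: " + ack
                        : "failed: " + error.getMessage())
                .join(); // blocking here keeps the demo simple; real code would log inside the callback
    }

    public static void main(String[] args) {
        System.out.println(sendAndReport("hello", "{\"id\":\"1\"}"));
        System.out.println(sendAndReport("", "{\"id\":\"2\"}"));
    }
}
```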

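5. Message listener class

import java.util.Optional;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

@Component
public class KfkaListener {

    private static final Logger logger = LoggerFactory.getLogger(KfkaListener.class);

    // Consumes from the "hello" topic using the default group id from the configuration file.
    @KafkaListener(topics = {"hello"})
    public void listen(ConsumerRecord<?, ?> record) {
        Optional<?> kafkaMessage = Optional.ofNullable(record.value());
        if (kafkaMessage.isPresent()) {
            Object message = kafkaMessage.get();
            logger.info("Received ------------ record = {}", record);
            logger.info("Received ----------- message = {}", message);
        }
    }
}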
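Where this listener starts reading depends on the `auto-offset-reset` setting from the configuration file, which applies when the consumer group has no committed offset for a partition. A small model of that rule follows. `OffsetResetSketch` and its parameters are illustrative names, and the real decision is made inside the Kafka consumer.

```java
/** Hypothetical model of how auto-offset-reset picks a starting position for one partition. */
final class OffsetResetSketch {

    private OffsetResetSketch() {
    }

    /**
     * @param committed the group's committed offset for the partition, or null if there is none
     * @param policy    "earliest", "latest" or "none"
     * @param beginning first offset still available in the partition
     * @param end       offset the next produced record will receive
     * @return the offset consumption would start from
     */
    static long startingOffset(Long committed, String policy, long beginning, long end) {
        if (committed != null) {
            return committed; // a valid committed offset takes precedence over the policy
        }
        switch (policy) {
            case "earliest":
                return beginning;
            case "latest":
                return end;
            case "none":
                throw new IllegalStateException("no committed offset and policy is none");
            default:
                throw new IllegalArgumentException("unknown policy: " + policy);
        }
    }

    public static void main(String[] args) {
        System.out.println(startingOffset(42L, "latest", 0L, 100L));
        System.out.println(startingOffset(null, "earliest", 5L, 100L));
        System.out.println(startingOffset(null, "latest", 5L, 100L));
    }
}
```

With `latest`, as configured in this article, a brand-new consumer group skips everything already in the topic and sees only records produced after it starts.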

六.定时发送信息测试类

@EnableScheduling

@Component

public class PublisherController {

private static final Logger log = LoggerFactory.getLogger(PublisherController.class);

@Autowired

private KfkaProducer kfkaProducer;

@Scheduled(fixedRate = 5000)

public void pubMsg() {

Message msg=new Message();

msg.setId(UUID.randomUUID().toString());

msg.setMsg("发送这条消息给你,你好啊!!!!!!");

msg.setSendTime(new Date());

kfkaProducer.send("hello", msg);;

log.info("Publisher sendes Topic... ");

}

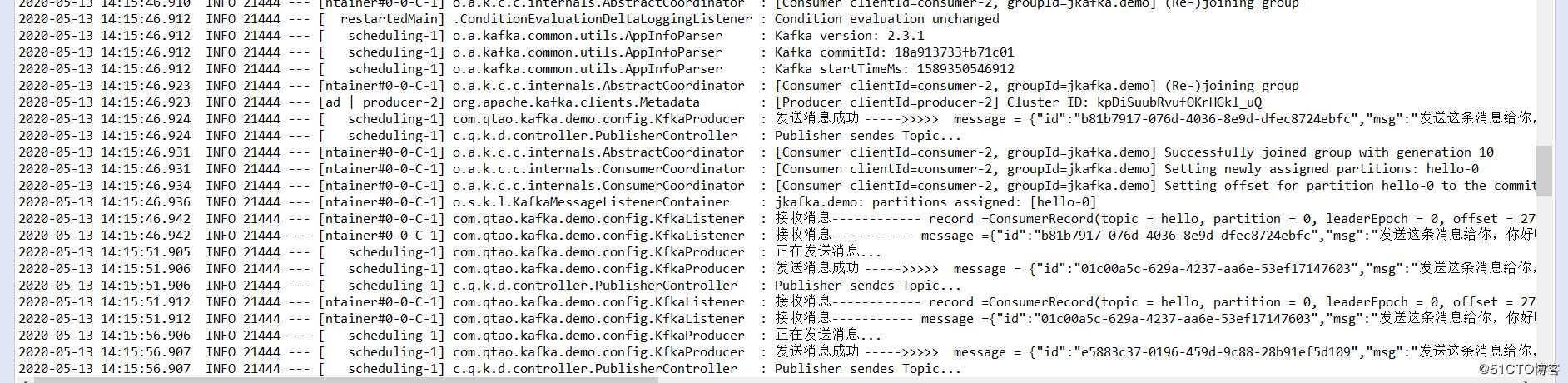

}七.测试结果
