Setting up a Hive test environment with Docker

Posted by 麒思妙想


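A few days ago a reader asked me to look into querying Hive through Calcite. Unfortunately, it turns out Calcite itself has a bug that currently prevents connecting to Hive through the Calcite JDBC driver. I don't have time to submit a PR to fix it yet, so for now I'm writing down how I set up the Hive environment.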

Environment setup

docker-compose.yml

```yaml
version: "3"

services:
  namenode:
    image: bde2020/hadoop-namenode:2.0.0-hadoop2.7.4-java8
    volumes:
      - namenode:/hadoop/dfs/name
    environment:
      - CLUSTER_NAME=test
    env_file:
      - ./hadoop-hive.env
    ports:
      - "20070:50070"   # NameNode web UI
  datanode:
    image: bde2020/hadoop-datanode:2.0.0-hadoop2.7.4-java8
    volumes:
      - datanode:/hadoop/dfs/data
    env_file:
      - ./hadoop-hive.env
    environment:
      SERVICE_PRECONDITION: "namenode:50070"
    ports:
      - "20075:50075"   # DataNode web UI
  hive-server:
    image: bde2020/hive:2.3.2-postgresql-metastore
    env_file:
      - ./hadoop-hive.env
    environment:
      HIVE_CORE_CONF_javax_jdo_option_ConnectionURL: "jdbc:postgresql://hive-metastore/metastore"
      SERVICE_PRECONDITION: "hive-metastore:9083"
    ports:
      - "10000:10000"   # HiveServer2 (JDBC)
  hive-metastore:
    image: bde2020/hive:2.3.2-postgresql-metastore
    env_file:
      - ./hadoop-hive.env
    command: /opt/hive/bin/hive --service metastore
    environment:
      SERVICE_PRECONDITION: "namenode:50070 datanode:50075 hive-metastore-postgresql:5432"
    ports:
      - "9083:9083"     # Hive metastore (Thrift)
  hive-metastore-postgresql:
    image: bde2020/hive-metastore-postgresql:2.3.0
  presto-coordinator:
    image: shawnzhu/prestodb:0.181
    ports:
      - "7080:8080"     # Presto coordinator HTTP port

volumes:
  namenode:
  datanode:

networks:
  # Not referenced by any service above; the overlay driver is only usable in swarm mode.
  common-network:
    driver: overlay
```
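Startup order in this compose file is driven by `SERVICE_PRECONDITION`. As far as we understand the bde2020 images, each container's entrypoint polls every space-separated `host:port` listed there (using `nc -z`) and starts its own service only once all of them accept TCP connections. So the datanode waits for the namenode, the metastore waits for HDFS and PostgreSQL, and hive-server waits for the metastore. These are container ports on the Compose network (50070, not the 20070 published to the host). The Java sketch below reproduces the idea. The class `PreconditionCheck`, its methods, and the retry budget are hypothetical and are not code shipped in the images.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the "wait for SERVICE_PRECONDITION" idea used by the
// bde2020 entrypoint scripts; it is not code shipped in the images.
public class PreconditionCheck {

    // Splits a value such as "namenode:50070 datanode:50075" into host/port pairs.
    static List<InetSocketAddress> parse(String precondition) {
        List<InetSocketAddress> targets = new ArrayList<>();
        for (String entry : precondition.trim().split("\\s+")) {
            if (entry.isEmpty()) {
                continue; // an empty input string yields a single empty token
            }
            int colon = entry.lastIndexOf(':');
            if (colon < 0) {
                throw new IllegalArgumentException("expected host:port but got " + entry);
            }
            String host = entry.substring(0, colon);
            int port = Integer.parseInt(entry.substring(colon + 1));
            // createUnresolved avoids a DNS lookup while parsing.
            targets.add(InetSocketAddress.createUnresolved(host, port));
        }
        return targets;
    }

    // True if a TCP connection to the target succeeds within timeoutMs.
    static boolean isReachable(InetSocketAddress target, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(target.getHostString(), target.getPort()), timeoutMs);
            return true;
        } catch (IOException e) { // refused, timed out, or unknown host
            return false;
        }
    }

    // Polls each target in order; gives up after maxTries failed attempts on any one of them.
    static boolean waitForAll(List<InetSocketAddress> targets, int maxTries, long delayMs)
            throws InterruptedException {
        for (InetSocketAddress target : targets) {
            String name = target.getHostString() + ":" + target.getPort();
            int attempt = 1;
            while (!isReachable(target, 1000)) {
                if (attempt == maxTries) {
                    System.out.println(name + " is still not available; giving up");
                    return false;
                }
                System.out.println("[" + attempt + "/" + maxTries + "] " + name + " not available yet");
                attempt++;
                Thread.sleep(delayMs);
            }
            System.out.println(name + " is available");
        }
        return true;
    }

    public static void main(String[] args) throws InterruptedException {
        // Default: the metastore and HiveServer2 ports published to the host machine.
        String precondition = args.length > 0 ? args[0] : "localhost:9083 localhost:10000";
        // Short, assumed retry budget; raise it for a cold start of the whole stack.
        boolean ok = waitForAll(parse(precondition), 3, 2000);
        System.out.println(ok ? "all services are up" : "some services are not up");
    }
}
```

After compiling, `java PreconditionCheck localhost:10000` can be run on the host to wait until HiveServer2 accepts connections before starting the test program.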

hadoop-hive.env

```
HIVE_SITE_CONF_javax_jdo_option_ConnectionURL=jdbc:postgresql://hive-metastore-postgresql/metastore
HIVE_SITE_CONF_javax_jdo_option_ConnectionDriverName=org.postgresql.Driver
HIVE_SITE_CONF_javax_jdo_option_ConnectionUserName=hive
HIVE_SITE_CONF_javax_jdo_option_ConnectionPassword=hive
HIVE_SITE_CONF_datanucleus_autoCreateSchema=false
HIVE_SITE_CONF_hive_metastore_uris=thrift://hive-metastore:9083
HDFS_CONF_dfs_namenode_datanode_registration_ip___hostname___check=false
HIVE_SITE_CONF_hive_server2_thrift_bind_host=0.0.0.0
HIVE_SITE_CONF_hive_server2_thrift_port=10000
HIVE_SITE_CONF_hive_metastore_event_db_notification_api_auth=false
CORE_CONF_fs_defaultFS=hdfs://namenode:8020
CORE_CONF_hadoop_http_staticuser_user=root
CORE_CONF_hadoop_proxyuser_hive_hosts=*
CORE_CONF_hadoop_proxyuser_hive_groups=*
CORE_CONF_hadoop_proxyuser_hue_hosts=*
CORE_CONF_hadoop_proxyuser_hue_groups=*
CORE_CONF_hadoop_proxyuser_root_hosts=*
CORE_CONF_hadoop_proxyuser_root_groups=*
HDFS_CONF_dfs_webhdfs_enabled=true
HDFS_CONF_dfs_permissions_enabled=false
YARN_CONF_yarn_log___aggregation___enable=true
YARN_CONF_yarn_resourcemanager_recovery_enabled=true
YARN_CONF_yarn_resourcemanager_store_class=org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore
YARN_CONF_yarn_resourcemanager_fs_state___store_uri=/rmstate
YARN_CONF_yarn_nodemanager_remote___app___log___dir=/app-logs
YARN_CONF_yarn_log_server_url=http://historyserver:8188/applicationhistory/logs/
YARN_CONF_yarn_timeline___service_enabled=true
YARN_CONF_yarn_timeline___service_generic___application___history_enabled=true
YARN_CONF_yarn_resourcemanager_system___metrics___publisher_enabled=true
YARN_CONF_yarn_resourcemanager_hostname=resourcemanager
YARN_CONF_yarn_timeline___service_hostname=historyserver
YARN_CONF_yarn_resourcemanager_address=resourcemanager:8032
YARN_CONF_yarn_resourcemanager_scheduler_address=resourcemanager:8030
YARN_CONF_yarn_resourcemanager_resource__tracker_address=resourcemanager:8031
```
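Each entry in `hadoop-hive.env` encodes one configuration property. As we understand the bde2020 convention, the prefix selects the file that the entrypoint writes to: `CORE_CONF` for `core-site.xml`, `HDFS_CONF` for `hdfs-site.xml`, `YARN_CONF` for `yarn-site.xml`, and `HIVE_SITE_CONF` for `hive-site.xml`. In the rest of the name, `___` stands for `-`, `__` for a literal `_`, and `_` for `.`. The hypothetical `EnvToProperty` helper below applies that rule. It is a quick way to check what a line such as `HDFS_CONF_dfs_namenode_datanode_registration_ip___hostname___check=false` actually sets: `dfs.namenode.datanode.registration.ip-hostname-check` in `hdfs-site.xml`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper illustrating how hadoop-hive.env variable names are assumed
// to map to configuration files and property names (bde2020 convention).
public class EnvToProperty {

    // Variable prefix -> configuration file the property is written into.
    static final Map<String, String> PREFIX_TO_FILE = new LinkedHashMap<>();
    static {
        PREFIX_TO_FILE.put("CORE_CONF_", "core-site.xml");
        PREFIX_TO_FILE.put("HDFS_CONF_", "hdfs-site.xml");
        PREFIX_TO_FILE.put("YARN_CONF_", "yarn-site.xml");
        PREFIX_TO_FILE.put("HIVE_SITE_CONF_", "hive-site.xml");
    }

    // Converts the part after the prefix: "___" -> "-", "__" -> "_", "_" -> ".".
    static String toPropertyName(String suffix) {
        return suffix
                .replace("___", "-")
                .replace("__", "@") // placeholder protects literal underscores
                .replace("_", ".")
                .replace("@", "_");
    }

    // Returns "file: property" for a known prefix, or null for any other variable.
    static String describe(String envName) {
        for (Map.Entry<String, String> e : PREFIX_TO_FILE.entrySet()) {
            if (envName.startsWith(e.getKey())) {
                return e.getValue() + ": " + toPropertyName(envName.substring(e.getKey().length()));
            }
        }
        return null;
    }

    public static void main(String[] args) {
        String[] names = {
            "CORE_CONF_fs_defaultFS",
            "HDFS_CONF_dfs_namenode_datanode_registration_ip___hostname___check",
            "HIVE_SITE_CONF_javax_jdo_option_ConnectionURL",
            "YARN_CONF_yarn_log___aggregation___enable"
        };
        for (String name : names) {
            System.out.println(name + " -> " + describe(name));
        }
    }
}
```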

Testing

Start the Docker environment from the directory that contains both files:

```shell
docker-compose up -d
```
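The test program below queries a table named `pokes`, which a freshly started metastore does not contain. The sketch below creates and loads it over JDBC. Its two statements are modeled on the example in the docker-hive project's README, which loads Hive's bundled sample file. It assumes the following:

- `hive-jdbc` is on the classpath.
- HiveServer2 is reachable at `localhost:10000` (the port published in `docker-compose.yml`; pass another host as the first argument if Docker runs elsewhere).
- The `bde2020/hive` image contains `/opt/hive/examples/files/kv1.txt`.

The class name `CreatePokes` and the helper `jdbcUrl` are hypothetical. HiveServer2 may take a minute after `docker-compose up -d` before it accepts connections.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper that creates and loads the "pokes" table queried by the test program.
public class CreatePokes {

    // LOAD DATA LOCAL reads the file on the HiveServer2 host, i.e. inside the
    // hive-server container (the path is assumed to exist in the image).
    static final List<String> SETUP_SQL = Arrays.asList(
            "CREATE TABLE IF NOT EXISTS pokes (foo INT, bar STRING)",
            "LOAD DATA LOCAL INPATH '/opt/hive/examples/files/kv1.txt' OVERWRITE INTO TABLE pokes");

    // Builds a HiveServer2 JDBC URL of the form jdbc:hive2://host:port/database.
    static String jdbcUrl(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    // Runs each setup statement in order on one Statement.
    static void createPokes(Connection con) throws SQLException {
        try (Statement stmt = con.createStatement()) {
            for (String sql : SETUP_SQL) {
                System.out.println("Running: " + sql);
                stmt.execute(sql);
            }
        }
    }

    public static void main(String[] args) {
        String url = jdbcUrl(args.length > 0 ? args[0] : "localhost", 10000, "default");
        try (Connection con = DriverManager.getConnection(url, "", "")) {
            createPokes(con);
        } catch (SQLException e) {
            // Typical causes: HiveServer2 still starting, or hive-jdbc missing from the classpath.
            System.err.println("Could not set up pokes via " + url + ": " + e.getMessage());
        }
    }
}
```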

Test code (requires the Hive JDBC driver, `org.apache.hive:hive-jdbc`, on the classpath):

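```java
package com.dafei1288;

import java.sql.*;

public class Test {

    public static void main(String[] args) {
        String driverName = "org.apache.hive.jdbc.HiveDriver";
        try {
            // Load the Hive JDBC driver (hive-jdbc must be on the classpath).
            Class.forName(driverName);
        } catch (ClassNotFoundException e) {
            e.printStackTrace();
            System.exit(1);
        }

        // Address of the Docker host in the author's setup; use localhost
        // (or your own Docker host's IP). HiveServer2 is published on port 10000.
        String url = "jdbc:hive2://172.30.214.156:10000";
        String sql = "select * from pokes";

        // try-with-resources closes the ResultSet, Statement, and Connection.
        try (Connection con = DriverManager.getConnection(url, "", "");
             Statement stmt = con.createStatement()) {
            System.out.println("Running: " + sql);
            try (ResultSet res = stmt.executeQuery(sql)) {
                while (res.next()) {
                    // Print the first column of each row.
                    System.out.println(res.getString(1));
                }
            }
        } catch (SQLException e) {
            e.printStackTrace();
        }
    }
}
```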

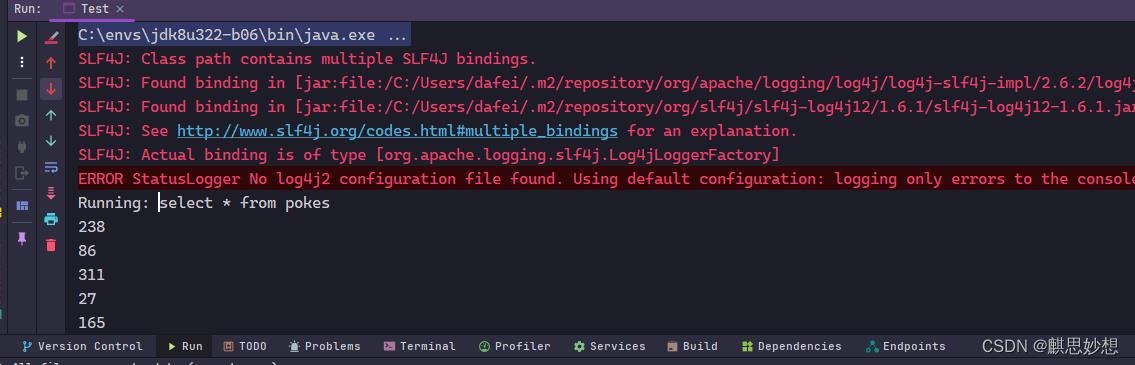

测试结果
