ELK Learning Lab 019: Using Redis as a Buffer in ELK

Posted 战五渣


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers ELK Learning Lab 019: using Redis as a buffer in ELK. We hope you find it useful.

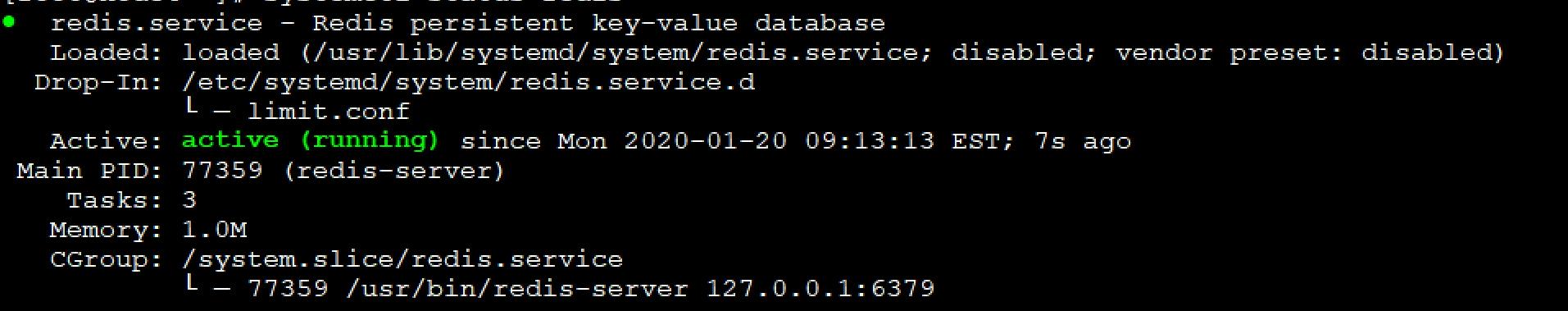

1 Install a Redis service

[root@node4 ~]# yum -y install redis

Start it directly:

[root@node4 ~]# systemctl restart redis

[root@node4 ~]# systemctl status redis

[root@node4 ~]# redis-cli -h 127.0.0.1
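The step above checks Redis with redis-cli. As a minimal sketch, assuming Python 3 is available on the host, the same check can be done over a raw socket: Redis clients send commands as a RESP array of bulk strings, and a healthy server answers `PING` with `+PONG`. The helper names `encode_command` and `ping` are hypothetical, not part of any library.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings (the wire format redis-cli uses)."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        out.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(out)


def ping(host: str = "127.0.0.1", port: int = 6379, timeout: float = 2.0) -> bool:
    """Send PING and report whether the server replied with +PONG."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("PING"))
        reply = sock.recv(64)
    return reply.startswith(b"+PONG")


if __name__ == "__main__":
    try:
        print("redis answered PONG:", ping())
    except OSError as exc:  # connection refused, timed out, unreachable, ...
        print("redis not reachable:", exc)
```

Only the encoder is pure logic; `ping` needs a running server, so it is wrapped in a try block when run directly.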

2 Configure Filebeat to send data to Redis

[root@node4 ~]# vim /etc/filebeat/filebeat.yml

filebeat.inputs:
#####################################################
## nginx log
#####################################################
- type: log
  enabled: true
  paths:
    - /usr/local/nginx/logs/access.log
  # NOTE: Filebeat's documented option is json.keys_under_root. "key_under_root"
  # as written here is not recognized, which is why the decoded nginx fields end
  # up under a "json" object in the sample events below. Use keys_under_root if
  # you want those fields at the top level of the event.
  json.key_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
#- type: log
#  enabled: true
#  paths:
#    - /usr/local/nginx/logs/error.log
#  tags: ["error"]
#####################################################
## tomcat log
#####################################################
- type: log
  enabled: true
  paths:
    - /var/log/tomcat/localhost_access_log.*.txt
  json.key_under_root: true
  json.overwrite_keys: true
  tags: ["tomcat"]
#####################################################
## java log
#####################################################
- type: log
  enabled: true
  paths:
    - /usr/local/elasticsearch/logs/my-elktest-cluster.log
  tags: ["es-java"]
  multiline.pattern: '^\['
  multiline.negate: true
  multiline.match: "after"
#####################################################
## docker log
#####################################################
- type: docker
  containers.ids:
    - '*'
  json.key_under_root: true
  json.overwrite_keys: true
  tags: ["docker"]
#####################################################
## output redis
#####################################################
output.redis:
  hosts: ["127.0.0.1"]
  key: "filebeat"
  db: 0
  timeout: 5
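The java-log input uses multiline settings so that an Elasticsearch stack trace is shipped as one event. With `negate: true` and `match: after`, every line that does not match `^\[` is appended to the preceding line that does. A minimal Python sketch of that grouping rule only (the function `group_multiline` and the sample log lines are hypothetical, and Filebeat's timeout and max_lines limits are not modelled):

```python
import re
from typing import Iterable, List


def group_multiline(lines: Iterable[str], pattern: str = r"^\[") -> List[str]:
    """Group lines like multiline.negate: true + multiline.match: "after".

    A line matching `pattern` starts a new event; every following line that
    does not match is appended to that event.
    """
    starts_event = re.compile(pattern)
    events: List[str] = []
    for line in lines:
        if starts_event.search(line) or not events:
            events.append(line)            # new event (or an unmatched first line)
        else:
            events[-1] += "\n" + line      # continuation, e.g. a stack-trace line
    return events


if __name__ == "__main__":
    # Hypothetical Elasticsearch log lines, for illustration only.
    sample = [
        "[2020-01-20T09:00:00,000][INFO ][o.e.n.Node] started",
        "[2020-01-20T09:00:01,000][WARN ][o.e.x.Example] something failed",
        "java.lang.IllegalStateException: example",
        "\tat org.example.Example.run(Example.java:42)",
    ]
    for event in group_multiline(sample):
        print(repr(event))
```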

[root@node4 ~]# systemctl restart filebeat

Access the site to generate some log entries.

3 Check Redis

127.0.0.1:6379> keys *
1) "filebeat"
127.0.0.1:6379>
127.0.0.1:6379> keys *
1) "filebeat"
127.0.0.1:6379> type filebeat        # check the data type
list
127.0.0.1:6379> llen filebeat        # check the list length
(integer) 22
127.0.0.1:6379> LRANGE filebeat 1 22
1) "{\"@timestamp\":\"2020-01-20T14:22:15.291Z\",\"@metadata\":{\"beat\":\"filebeat\",\"type\":\"_doc\",\"version\":\"7.4.2\"},\"agent\":{\"hostname\":\"node4\",\"id\":\"bb3818f9-66e2-4eb2-8f0c-3f35b543e025\",\"version\":\"7.4.2\",\"type\":\"filebeat\",\"ephemeral_id\":\"663027a7-1bdc-4a9f-b9d3-1297ef06c0b0\"},\"log\":{\"offset\":21185,\"file\":{\"path\":\"/usr/local/nginx/logs/error.log\"}},\"message\":\"2020/01/20 09:22:08 [error] 2790#0: *32 open() \\\"/usr/local/nginx/html/favicon.ico\\\" failed (2: No such file or directory), client: 192.168.132.1, server: localhost, request: \\\"GET /favicon.ico HTTP/1.1\\\", host: \\\"192.168.132.134\\\", referrer: \\\"http://192.168.132.134/\\\"\",\"tags\":[\"error\"],\"input\":{\"type\":\"log\"},\"ecs\":{\"version\":\"1.1.0\"},\"host\":{\"name\":\"node4\"}}"

Parsed and pretty-printed as JSON, an access-log entry from the list looks like this:

{
  "@timestamp": "2020-01-20T14:22:15.293Z",
  "@metadata": { "beat": "filebeat", "type": "_doc", "version": "7.4.2" },
  "log": {
    "offset": 21460,
    "file": { "path": "/usr/local/nginx/logs/access.log" }
  },
  "json": {
    "size": 612,
    "xff": "-",
    "upstreamhost": "-",
    "url": "/index.html",
    "domain": "192.168.132.134",
    "upstreamtime": "-",
    "@timestamp": "2020-01-20T09:22:08-05:00",
    "clientip": "192.168.132.1",
    "host": "192.168.132.134",
    "status": "200",
    "http_host": "192.168.132.134",
    "Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36",
    "responsetime": 0,
    "referer": "-"
  },
  "tags": ["access"],
  "input": { "type": "log" },
  "ecs": { "version": "1.1.0" },
  "host": { "name": "node4" },
  "agent": {
    "id": "bb3818f9-66e2-4eb2-8f0c-3f35b543e025",
    "version": "7.4.2",
    "type": "filebeat",
    "ephemeral_id": "663027a7-1bdc-4a9f-b9d3-1297ef06c0b0",
    "hostname": "node4"
  }
}
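Each list element is a plain JSON string, so any JSON library can inspect it. A minimal Python sketch, assuming you have copied one element out of `LRANGE`; the string below is an abbreviated version of the access-log entry above, and `summarize` is a hypothetical helper:

```python
import json

# One list element as returned by LRANGE (abbreviated from the access-log entry above).
RAW = (
    '{"@timestamp":"2020-01-20T14:22:15.293Z",'
    '"log":{"offset":21460,"file":{"path":"/usr/local/nginx/logs/access.log"}},'
    '"json":{"url":"/index.html","status":"200","clientip":"192.168.132.1","size":612},'
    '"tags":["access"]}'
)


def summarize(raw: str) -> dict:
    """Decode one Filebeat event string and pick out a few fields of interest."""
    event = json.loads(raw)
    nginx = event.get("json", {})  # the nginx fields sit under "json" in these samples
    return {
        "tags": event.get("tags", []),
        "path": event["log"]["file"]["path"],
        "status": nginx.get("status"),
        "url": nginx.get("url"),
    }


if __name__ == "__main__":
    print(summarize(RAW))
```

The `tags` value extracted here is what the Logstash output conditionals later in this lab route on.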

4 Use Logstash to consume the data in Redis

Install Logstash on node4:

[root@node4 ~]# wget https://artifacts.elastic.co/downloads/logstash/logstash-7.5.1.rpm

[root@node4 ~]# rpm -ivh logstash-7.5.1.rpm

[root@node4 ~]# vim /etc/logstash/conf.d/logsatsh.conf

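input {
  redis {
    host      => "127.0.0.1"
    port      => 6379
    db        => 0
    key       => "filebeat"
    data_type => "list"
  }
}
filter {
  mutate {
    # Convert timing fields to floats. NOTE: in the sample events above the nginx
    # timing fields are json.upstreamtime and json.responsetime, so these field
    # names must match your actual log format for the conversion to take effect.
    convert => {
      "upstream_time" => "float"
      "request_time"  => "float"
    }
  }
}
output {
  stdout {}
  elasticsearch {
    hosts           => "192.168.132.131:9200"
    manage_template => false
    index           => "nginx_access-%{+yyyy.MM.dd}"
  }
}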
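The index option `nginx_access-%{+yyyy.MM.dd}` is a sprintf date reference: Logstash formats the event's `@timestamp`, which is kept in UTC, not the nginx local time. That is why the Kibana document later in this lab, with `@timestamp` 2020-01-20T15:07:16.439Z, lands in `nginx_access-2020.01.20`. A minimal Python sketch of the naming rule (`index_name` is a hypothetical helper and only parses the `...Z` timestamp format seen in this article):

```python
from datetime import datetime, timezone


def index_name(prefix: str, timestamp: str) -> str:
    """Mimic `prefix-%{+yyyy.MM.dd}`: the date comes from @timestamp, which is UTC."""
    ts = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ").replace(tzinfo=timezone.utc)
    return f"{prefix}-{ts:%Y.%m.%d}"


if __name__ == "__main__":
    # @timestamp of the Kibana document shown later in this lab
    print(index_name("nginx_access", "2020-01-20T15:07:16.439Z"))
    # A request logged by nginx at 23:30 local time (-05:00) on Jan 20 has a UTC
    # @timestamp on Jan 21, so it is written to the next day's index.
    print(index_name("nginx_access", "2020-01-21T04:30:00.000Z"))
```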

[root@node4 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logsatsh.conf

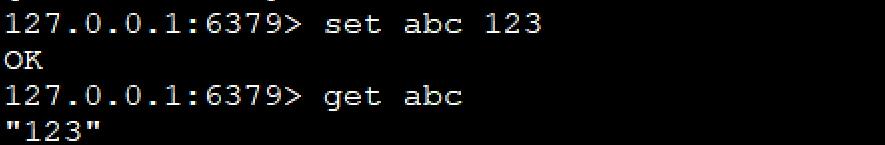

The index has already been created.

Generate traffic from node5:

[root@node5 ~]# ab -n 20000 -c 20 http://192.168.132.134/

Check Redis

127.0.0.1:6379> keys *
(empty list or set)
127.0.0.1:6379> keys *
1) "filebeat"
127.0.0.1:6379> LLEN filebeat
(integer) 16875
127.0.0.1:6379> LLEN filebeat
(integer) 16000
127.0.0.1:6379> LLEN filebeat
(integer) 15125
127.0.0.1:6379> LLEN filebeat
(integer) 14375
127.0.0.1:6379> LLEN filebeat
(integer) 13625
127.0.0.1:6379> LLEN filebeat
(integer) 13000
127.0.0.1:6379> LLEN filebeat
(integer) 12375
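The shrinking `LLEN` values show the list working as a queue: Filebeat's redis output appends events to the tail (it uses `RPUSH` for the `list` data type) while Logstash removes them from the head. Every length above is a multiple of 125, which fits Logstash's redis input taking events in batches (its `batch_count` option, which I believe defaults to 125). A minimal in-memory Python model of that behaviour, not a Redis client; `ListQueue` and its methods are hypothetical names, and `pop_batch` only approximates an `LRANGE key 0 n-1` followed by `LTRIM key n -1`:

```python
from collections import deque
from typing import Deque, List


class ListQueue:
    """In-memory stand-in for one Redis list used as a FIFO queue (not a Redis client)."""

    def __init__(self) -> None:
        self._items: Deque[str] = deque()

    def rpush(self, *values: str) -> int:
        """Append to the tail, like RPUSH; returns the new length."""
        self._items.extend(values)
        return len(self._items)

    def llen(self) -> int:
        """Current length, like LLEN."""
        return len(self._items)

    def pop_batch(self, count: int = 125) -> List[str]:
        """Take up to `count` items from the head (consumer side)."""
        n = min(count, len(self._items))
        return [self._items.popleft() for _ in range(n)]


if __name__ == "__main__":
    q = ListQueue()
    q.rpush(*(f"event-{i}" for i in range(20000)))  # e.g. 20000 access-log events
    for _ in range(25):                              # a few consumer polls
        q.pop_batch()
    print(q.llen())
```

Because removal always happens in whole batches, any snapshot taken after the producer has finished is a multiple of the batch size away from the starting length.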

View the data in Kibana:

{
  "_index": "nginx_access-2020.01.20",
  "_type": "_doc",
  "_id": "A1J-w28BOF7DoSFdyQr8",
  "_version": 1,
  "_score": null,
  "_source": {
    "host": { "name": "node4" },
    "tags": [ "access" ],
    "input": { "type": "log" },
    "ecs": { "version": "1.1.0" },
    "log": {
      "file": { "path": "/usr/local/nginx/logs/access.log" },
      "offset": 12386215
    },
    "json": {
      "host": "192.168.132.134",
      "upstreamtime": "-",
      "xff": "-",
      "status": "200",
      "referer": "-",
      "http_host": "192.168.132.134",
      "Agent": "ApacheBench/2.3",
      "url": "/index.html",
      "responsetime": 0,
      "domain": "192.168.132.134",
      "size": 612,
      "clientip": "192.168.132.135",
      "upstreamhost": "-",
      "@timestamp": "2020-01-20T10:07:11-05:00"
    },
    "agent": {
      "hostname": "node4",
      "id": "bb3818f9-66e2-4eb2-8f0c-3f35b543e025",
      "type": "filebeat",
      "ephemeral_id": "efddca40-1d19-4036-9724-410b1b6d4c8b",
      "version": "7.4.2"
    },
    "@version": "1",
    "@timestamp": "2020-01-20T15:07:16.439Z"
  },
  "fields": {
    "json.@timestamp": [ "2020-01-20T15:07:11.000Z" ],
    "@timestamp": [ "2020-01-20T15:07:16.439Z" ]
  },
  "sort": [ 1579532836439 ]
}

5 Add the nginx error log to Filebeat

- type: log
  enabled: true
  paths:
    - /usr/local/nginx/logs/error.log
  tags: ["error"]

output.redis:
  hosts: ["127.0.0.1"]
  keys:
    - key: "nginx_access"
      when.contains:
        tags: "access"
    - key: "nginx_error"
      when.contains:
        tags: "error"
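Filebeat evaluates the `keys` rules in order and uses the first one whose condition matches; if none match, it falls back to the plain `key` setting. A minimal Python model of that selection, where `select_key` and `RULES` are hypothetical names and `when.contains` on the `tags` array is approximated as a simple membership test:

```python
from typing import Dict, List, Optional

# Hypothetical in-code copy of the two `keys` rules above.
RULES: List[Dict[str, str]] = [
    {"key": "nginx_access", "tag": "access"},
    {"key": "nginx_error", "tag": "error"},
]


def select_key(tags: List[str], rules: List[Dict[str, str]] = RULES,
               default: Optional[str] = None) -> Optional[str]:
    """Return the key of the first rule whose tag appears in the event's tags.

    `default` plays the role of the plain `key` setting used when no rule matches.
    """
    for rule in rules:
        if rule["tag"] in tags:
            return rule["key"]
    return default


if __name__ == "__main__":
    for tags in (["access"], ["error"], ["tomcat"]):
        print(tags, "->", select_key(tags))
```

Since only the first match counts, an event carrying both tags would be pushed to `nginx_access` only.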

Generate error-log entries by requesting a page that does not exist:

[root@node5 ~]# ab -n 20000 -c 200 http://192.168.132.134/hehe

127.0.0.1:6379> keys *
1) "nginx_error"
2) "nginx_access"

6 There are now two keys; configure Logstash for both

[root@node4 ~]# cat /etc/logstash/conf.d/logsatsh.conf

input {
  redis {
    host      => "127.0.0.1"
    port      => 6379
    db        => 0
    key       => "nginx_access"
    data_type => "list"
  }
  redis {
    host      => "127.0.0.1"
    port      => 6379
    db        => 0
    key       => "nginx_error"
    data_type => "list"
  }
}
filter {
  mutate {
    convert => {
      "upstream_time" => "float"
      "request_time"  => "float"
    }
  }
}
output {
  stdout {}
  if "access" in [tags] {
    elasticsearch {
      hosts           => "192.168.132.131:9200"
      manage_template => false
      index           => "nginx_access-%{+yyyy.MM.dd}"
    }
  }
  if "error" in [tags] {
    elasticsearch {
      hosts           => "192.168.132.131:9200"
      manage_template => false
      index           => "nginx_error-%{+yyyy.MM.dd}"
    }
  }
}
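Both `if` blocks in the output section are evaluated for every event (and `stdout {}` always runs), so an event with neither tag only reaches stdout, and one carrying both tags would be indexed twice. A minimal Python model of the conditional part, where `route` is a hypothetical helper that returns the index prefixes an event is written to:

```python
from typing import List


def route(tags: List[str]) -> List[str]:
    """Mirror the two independent `if ... in [tags]` blocks in the output section.

    Because they are separate ifs (not if/else if), an event can match both or neither.
    """
    targets: List[str] = []
    if "access" in tags:
        targets.append("nginx_access")
    if "error" in tags:
        targets.append("nginx_error")
    return targets


if __name__ == "__main__":
    for tags in (["access"], ["error"], ["access", "error"], ["tomcat"]):
        print(tags, "->", route(tags))
```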

[root@node4 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logsatsh.conf

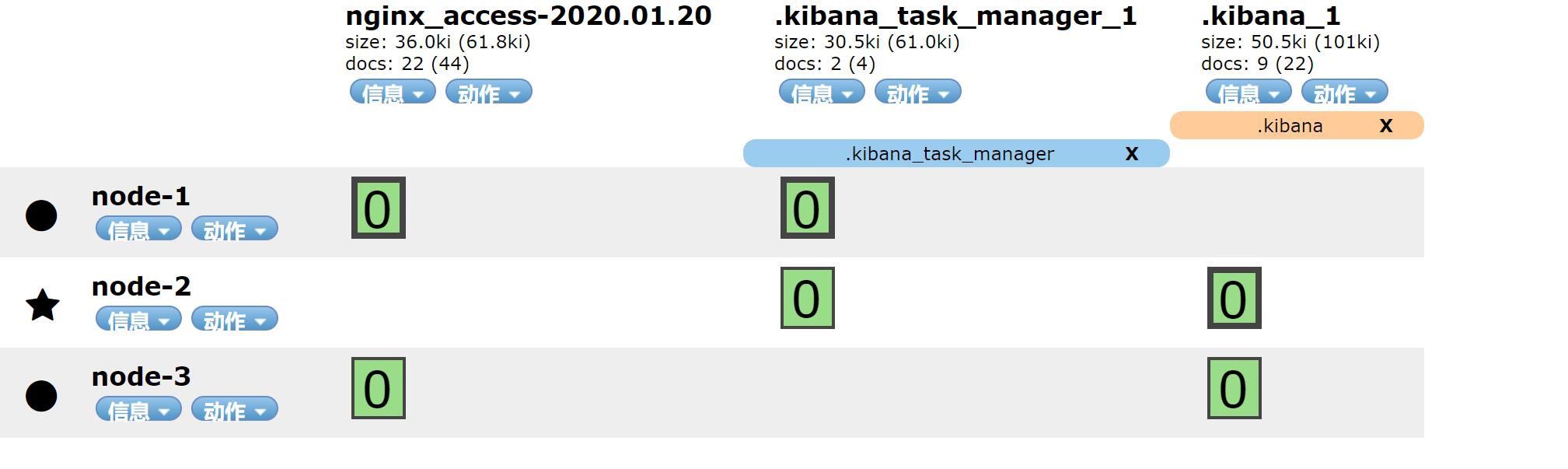

7 After Logstash starts, the Redis lists are consumed

127.0.0.1:6379> keys *
1) "nginx_error"
2) "nginx_access"
127.0.0.1:6379> LLEN nginx_error
(integer) 20000
127.0.0.1:6379> LLEN nginx_error
(integer) 20000
127.0.0.1:6379> LLEN nginx_error
(integer) 20000
127.0.0.1:6379> LLEN nginx_error
(integer) 20000
127.0.0.1:6379> LLEN nginx_error
(integer) 16125
127.0.0.1:6379> LLEN nginx_error
(integer) 15750
127.0.0.1:6379> LLEN nginx_access
(integer) 14625
127.0.0.1:6379> LLEN nginx_access
(integer) 14375
127.0.0.1:6379> LLEN nginx_error
(integer) 14000
127.0.0.1:6379> LLEN nginx_error

Check the indices

8 Optimize the configuration

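As the run above shows, Logstash tells the logs apart by their tags, not by the Redis key they came from. The Logstash input therefore does not need two keys; a single key is enough.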

[root@node4 ~]# vim /etc/logstash/conf.d/logsatsh.conf

input {
  redis {
    host      => "127.0.0.1"
    port      => 6379
    db        => 0
    key       => "nginx"
    data_type => "list"
  }
}
filter {
  mutate {
    convert => {
      "upstream_time" => "float"
      "request_time"  => "float"
    }
  }
}
output {
  stdout {}
  if "access" in [tags] {
    elasticsearch {
      hosts           => "192.168.132.131:9200"
      manage_template => false
      index           => "nginx_access-%{+yyyy.MM.dd}"
    }
  }
  if "error" in [tags] {
    elasticsearch {
      hosts           => "192.168.132.131:9200"
      manage_template => false
      index           => "nginx_error-%{+yyyy.MM.dd}"
    }
  }
}

Filebeat configuration:

#####################################################
## output redis
#####################################################
output.redis:
  hosts: ["127.0.0.1"]
  # A single list name uses "key"; "keys" expects a list of selector rules (step 5).
  key: "nginx"
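The reasoning behind this optimization can be checked with a small model: whether events arrive through two lists or one, Logstash's tag conditionals decide the index, so the per-index counts come out the same. A minimal Python sketch, assuming events are reduced to their tag lists, that Filebeat picks the first matching key as in step 5, and ignoring ordering and events whose tags match neither rule (all names here are hypothetical):

```python
from collections import Counter, deque
from typing import Deque, Iterable, List

Event = List[str]  # each event is reduced to its tags


def route(tags: Event) -> List[str]:
    """Index prefixes chosen by the Logstash output conditionals."""
    rules = (("access", "nginx_access"), ("error", "nginx_error"))
    return [prefix for tag, prefix in rules if tag in tags]


def drain(queues: Iterable[Deque[Event]]) -> Counter:
    """Consume every queued event and count how many reach each index."""
    counts: Counter = Counter()
    for queue in queues:
        while queue:
            counts.update(route(queue.popleft()))
    return counts


def same_result(events: List[Event]) -> bool:
    """Compare the two-key layout (step 6) with the single-key layout (step 8)."""
    # Two keys: Filebeat's first matching rule picks the list.
    access_list = deque(e for e in events if "access" in e)
    error_list = deque(e for e in events if "access" not in e and "error" in e)
    # One key: everything shares a single list.
    shared_list = deque(events)
    return drain([access_list, error_list]) == drain([shared_list])


if __name__ == "__main__":
    sample = [["access"], ["error"], ["access"], ["error"], ["access"]]
    print(same_result(sample), drain([deque(sample)]))
```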

[root@node4 ~]# systemctl restart filebeat

[root@node4 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logsatsh.conf

Send requests to generate logs:

[root@node5 ~]# ab -n 20000 -c 200 http://192.168.132.134/hehe

Check Redis

127.0.0.1:6379> keys *
1) "nginx"
127.0.0.1:6379> LLEN nginx
(integer) 20125
127.0.0.1:6379> LLEN nginx
(integer) 19625
127.0.0.1:6379> LLEN nginx
(integer) 18250
127.0.0.1:6379> LLEN nginx
(integer) 17500
127.0.0.1:6379> LLEN nginx
(integer) 16750
127.0.0.1:6379> LLEN nginx
(integer) 0

Check the indices

The indices were created, and the lab is complete.
