Prerequisite: a Hadoop cluster with HDFS, MapReduce, and YARN is already installed.

Upload the tar package and extract it to the target directory:

    tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /opt/ronnie
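The archive unpacks to a folder named `apache-hive-3.1.2-bin`, while every later path in this post uses `/opt/ronnie/hive-3.1.2`, so the folder was presumably renamed after extraction. A minimal sketch of extract-and-rename follows; `install_hive` is a hypothetical helper name, not part of Hive.

```shell
# Hypothetical helper: extract a Hive tarball into a parent directory and
# rename the unpacked folder. Arguments: tarball, parent dir, target name.
install_hive() {
    tarball=$1
    parent=$2
    target=$3
    # Assumption: the unpacked folder is named like the tarball minus ".tar.gz".
    unpacked=$(basename "$tarball" .tar.gz)
    mkdir -p "$parent" &&
    tar -zxf "$tarball" -C "$parent" &&
    mv "$parent/$unpacked" "$parent/$target"
}

# Example (paths from this post):
# install_hive apache-hive-3.1.2-bin.tar.gz /opt/ronnie hive-3.1.2
```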

Modify the Hive configuration files:

Create the warehouse directory, both locally and on HDFS

    mkdir /opt/ronnie/hive-3.1.2/warehouse
    hadoop fs -mkdir -p /opt/ronnie/hive-3.1.2/warehouse
    hadoop fs -chmod 777 /opt/ronnie/hive-3.1.2/warehouse
    hadoop fs -ls /opt/ronnie/hive-3.1.2/

Copy the configuration files from their templates

    cd /opt/ronnie/hive-3.1.2/conf
    cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties
    cp hive-log4j2.properties.template hive-log4j2.properties
    cp hive-default.xml.template hive-default.xml
    cp hive-default.xml.template hive-site.xml
    cp hive-env.sh.template hive-env.sh
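The same copies can be done in a loop. The sketch below is one way to do it, with `copy_templates` as a hypothetical helper name. It copies every `*.template` file in the directory, which is more than the four files listed here, and it never overwrites a file that already exists.

```shell
# Hypothetical helper: copy every *.template file in a conf directory to the
# same name without the ".template" suffix, skipping files that already exist.
copy_templates() {
    conf_dir=$1
    for tpl in "$conf_dir"/*.template; do
        [ -e "$tpl" ] || continue          # the glob matched nothing
        dest=${tpl%.template}
        [ -e "$dest" ] || cp "$tpl" "$dest"
    done
}

# Example (hive-site.xml still has to be copied from hive-default.xml.template by hand):
# copy_templates /opt/ronnie/hive-3.1.2/conf
# cp /opt/ronnie/hive-3.1.2/conf/hive-default.xml.template /opt/ronnie/hive-3.1.2/conf/hive-site.xml
```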


Edit the environment configuration file

    vim hive-env.sh

Set the following in it:

    HADOOP_HOME=/opt/ronnie/hadoop-3.1.2
    export HIVE_CONF_DIR=/opt/ronnie/hive-3.1.2/conf
    export HIVE_AUX_JARS_PATH=/opt/ronnie/hive-3.1.2/lib
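Later steps call `schematool` and `hive` by name and reference `$HIVE_HOME`, so the shell environment presumably has Hive on the `PATH`. A minimal sketch of the lines that would do this, for example in `~/.bashrc` (the file choice and the install path are assumptions based on the paths used in this post):

```shell
# Assumed install location, taken from the paths used in this post.
export HIVE_HOME=/opt/ronnie/hive-3.1.2
# Put the Hive launcher scripts (hive, schematool, beeline) on the PATH.
export PATH="$PATH:$HIVE_HOME/bin"
```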

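Edit the site configuration with vim hive-site.xml

First, a quick vim refresher (this file is very long...):

- gg: jump to the top of the file
- G: jump to the end of the file
- :22,6918d deletes lines 22 through 6918 (the line deletion performed here)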
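The same deletion can be done without opening an editor. The sketch below uses `sed` and keeps a backup; `delete_line_range` is a hypothetical helper name, and the line numbers 22 and 6918 apply only if the template matches the one used in this post.

```shell
# Hypothetical helper: delete lines START..END (inclusive) from FILE,
# keeping the original as FILE.bak.
delete_line_range() {
    file=$1
    start=$2
    end=$3
    cp "$file" "$file.bak" &&
    sed "${start},${end}d" "$file.bak" > "$file"
}

# Example mirroring the vim command (check the line numbers against your file first):
# delete_line_range hive-site.xml 22 6918
```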

Set the configuration parameters:

    <configuration>
      <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <!-- your MySQL password -->
        <value>xxxxxxx</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://192.168.180.130:3306/hive?allowMultiQueries=true&amp;useSSL=false&amp;verifyServerCertificate=false</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.jdbc.Driver</value>
      </property>
      <property>
        <name>datanucleus.readOnlyDatastore</name>
        <value>false</value>
      </property>
      <property>
        <name>datanucleus.fixedDatastore</name>
        <value>false</value>
      </property>
      <property>
        <name>datanucleus.autoCreateSchema</name>
        <value>true</value>
      </property>
      <property>
        <name>datanucleus.autoCreateTables</name>
        <value>true</value>
      </property>
      <property>
        <name>datanucleus.autoCreateColumns</name>
        <value>true</value>
      </property>
    </configuration>
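When a raw JDBC URL is pasted into hive-site.xml, each `&` in its query string needs to be written as `&amp;`, otherwise the XML is not well-formed. A small sketch of that escaping follows; `xml_escape_amp` is a hypothetical helper name, and it assumes the input has not been escaped already.

```shell
# Hypothetical helper: escape "&" as "&amp;" so a raw JDBC URL can be pasted
# into an XML <value>. Assumes the input is not already escaped.
xml_escape_amp() {
    printf '%s\n' "$1" | sed 's/&/\&amp;/g'
}

# Prints the URL from this post in a form that is safe inside <value>...</value>.
xml_escape_amp 'jdbc:mysql://192.168.180.130:3306/hive?allowMultiQueries=true&useSSL=false&verifyServerCertificate=false'
```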

Download the JDBC driver

    cd /home/ronnie/soft
    wget http://mirrors.163.com/mysql/Downloads/Connector-J/mysql-connector-java-5.1.48.tar.gz
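Hive can only load the driver if the jar is on its classpath, which usually means copying it into Hive's `lib` directory. The post does not show that step, so the sketch below is an assumption about how it might be done; `install_jdbc_driver` is a hypothetical helper name, and the jar name pattern is a guess at the archive layout.

```shell
# Hypothetical helper: unpack a Connector/J tarball into a scratch directory
# and copy the driver jar(s) into Hive's lib directory.
install_jdbc_driver() {
    tarball=$1
    hive_lib=$2
    workdir=$(mktemp -d) || return 1
    tar -zxf "$tarball" -C "$workdir" || return 1
    # Assumption: the driver jar is named mysql-connector-java-<version>*.jar.
    find "$workdir" -type f -name 'mysql-connector-java-*.jar' -exec cp {} "$hive_lib"/ \;
    rm -rf "$workdir"
}

# Example (paths from this post):
# install_jdbc_driver /home/ronnie/soft/mysql-connector-java-5.1.48.tar.gz /opt/ronnie/hive-3.1.2/lib
```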

MySQL setup

Install:

    sudo apt-get install mysql-server

Run mysql -uroot to enter the MySQL shell (on Ubuntu, MySQL starts on boot by default once installed; on CentOS you also need to run service mysqld start).

Change the password:

View the users and their passwords:

Older versions:

    use mysql;
    select host, user, password from mysql.user;

I'm on 5.7:

    use mysql;
    select user, host, authentication_string from user;

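Set a new password (in 5.7 the authentication_string column stores a password hash, so wrap the value in PASSWORD()):

    update mysql.user set authentication_string=PASSWORD('your_new_password') where user='root';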

There is a nasty pitfall here. Initializing the database failed with:

    org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.
    Underlying cause: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException : Communications link failure

That is a connection problem, but running grant all on hive.* to root@'%' identified by 'xxxxxx'; several times made no difference.

    mysql> select user, authentication_string, host from user;
    +------------------+-------------------------------------------+-----------+
    | user             | authentication_string                     | host      |
    +------------------+-------------------------------------------+-----------+
    | root             |                                           | localhost |
    | mysql.session    | *THISISNOTAVALIDPASSWORDTHATCANBEUSEDHERE | localhost |
    | mysql.sys        | *THISISNOTAVALIDPASSWORDTHATCANBEUSEDHERE | localhost |
    | debian-sys-maint | *19A653DDEEC19D326E8DFA1A3D00E26C16438DD8 | localhost |
    | root             | *A63376A449EDC1A66FEFBC77E645D70EF6941893 | %         |
    +------------------+-------------------------------------------+-----------+

There are two root users. Delete the one with host %, then change the host of the remaining root user to %:

    delete from user where host = '%';
    mysql> select user, authentication_string, host from user;
    +------------------+-------------------------------------------+-----------+
    | user             | authentication_string                     | host      |
    +------------------+-------------------------------------------+-----------+
    | root             |                                           | localhost |
    | mysql.session    | *THISISNOTAVALIDPASSWORDTHATCANBEUSEDHERE | localhost |
    | mysql.sys        | *THISISNOTAVALIDPASSWORDTHATCANBEUSEDHERE | localhost |
    | debian-sys-maint | *19A653DDEEC19D326E8DFA1A3D00E26C16438DD8 | localhost |
    +------------------+-------------------------------------------+-----------+
    mysql> update user set host='%' where user = 'root';
    Query OK, 1 row affected (0.00 sec)
    Rows matched: 1  Changed: 1  Warnings: 0
    mysql> flush privileges;
    Query OK, 0 rows affected (0.00 sec)
    mysql> select user, authentication_string, host from user;
    +------------------+-------------------------------------------+-----------+
    | user             | authentication_string                     | host      |
    +------------------+-------------------------------------------+-----------+
    | root             |                                           | %         |
    | mysql.session    | *THISISNOTAVALIDPASSWORDTHATCANBEUSEDHERE | localhost |
    | mysql.sys        | *THISISNOTAVALIDPASSWORDTHATCANBEUSEDHERE | localhost |
    | debian-sys-maint | *19A653DDEEC19D326E8DFA1A3D00E26C16438DD8 | localhost |
    +------------------+-------------------------------------------+-----------+

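Restart the service (on Ubuntu the service is named mysql; on CentOS it is mysqld):

    service mysql restart

Still failing... A remote connection test from Navicat could not connect either and reported error 1251.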

Edit /etc/mysql/mysql.conf.d/mysqld.cnf with vim and change bind-address to 0.0.0.0 (restart MySQL afterwards so the change takes effect).
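To confirm what the file actually sets before and after the edit, the value can be read back from the command line. A minimal sketch follows, with `get_bind_address` as a hypothetical helper name. It only looks at the one file it is given and ignores commented-out lines.

```shell
# Hypothetical helper: print the bind-address value from a MySQL config file,
# taking the last uncommented occurrence; prints nothing if it is not set.
get_bind_address() {
    awk -F= '
        /^[ \t]*bind-address[ \t]*=/ {
            gsub(/[ \t]/, "", $2)   # strip whitespace around the value
            value = $2
        }
        END { if (value != "") print value }
    ' "$1"
}

# Example:
# get_bind_address /etc/mysql/mysql.conf.d/mysqld.cnf
```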

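Still failing. The final solution was to switch root to the mysql_native_password plugin:

    ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY 'your_password';
    -- remember to apply the change
    FLUSH PRIVILEGES;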

After that, Navicat connected and the schema initialization succeeded:

    root@node02:~# schematool -initSchema -dbType mysql
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/ronnie/hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/ronnie/hadoop-3.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    Metastore connection URL:        jdbc:mysql://192.168.180.131:3306/hive?allowMultiQueries=true&useSSL=false&verifyServerCertificate=false
    Metastore Connection Driver :    com.mysql.jdbc.Driver
    Metastore connection User:       root
    Starting metastore schema initialization to 3.1.0
    Initialization script hive-schema-3.1.0.mysql.sql

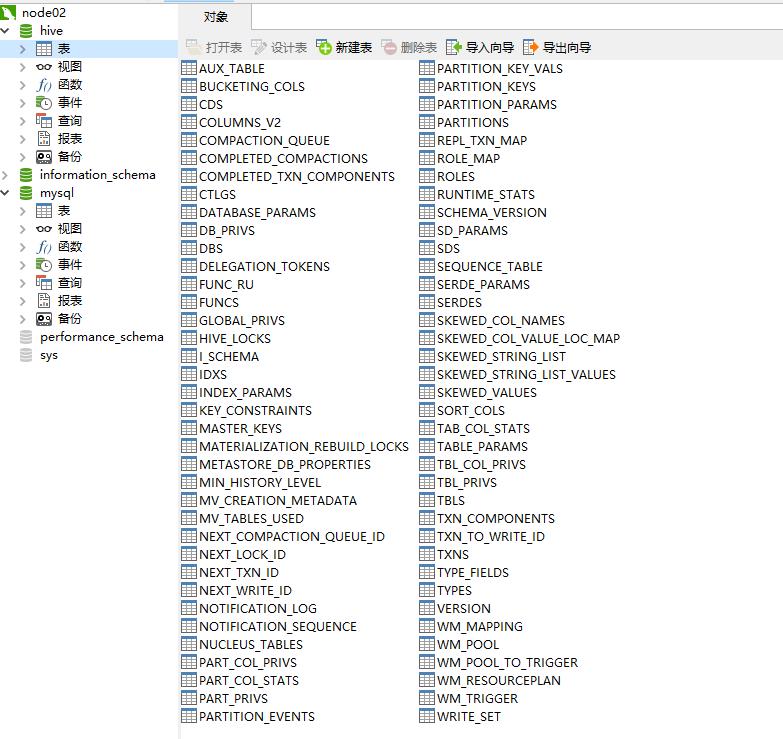

Checking MySQL shows that the tables were created successfully.

Start Hive

    root@node02:~# hive
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/ronnie/hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/ronnie/hadoop-3.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/ronnie/hbase-2.0.6/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/ronnie/hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/ronnie/hadoop-3.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    2019-12-02 12:34:18,689 WARN  [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/ronnie/hive-3.1.2/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/ronnie/hadoop-3.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    Hive Session ID = b34ea22b-d5d7-4c0a-b8de-4ff47f241e34
    Logging initialized using configuration in file:/opt/ronnie/hive-3.1.2/conf/hive-log4j2.properties Async: true
    Hive Session ID = 368bd863-0a45-4c46-94d6-df196a3b4d9b
    Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
    hive>

- From Hive 2 onward, Hive on MR is marked as deprecated and may be removed in a future version. These days Hive is more often paired with Spark or Tez.

- At the time of writing, most companies still run Hive 1.x in production and some are gradually moving toward 2.3, so 3.1.2 is still very new.


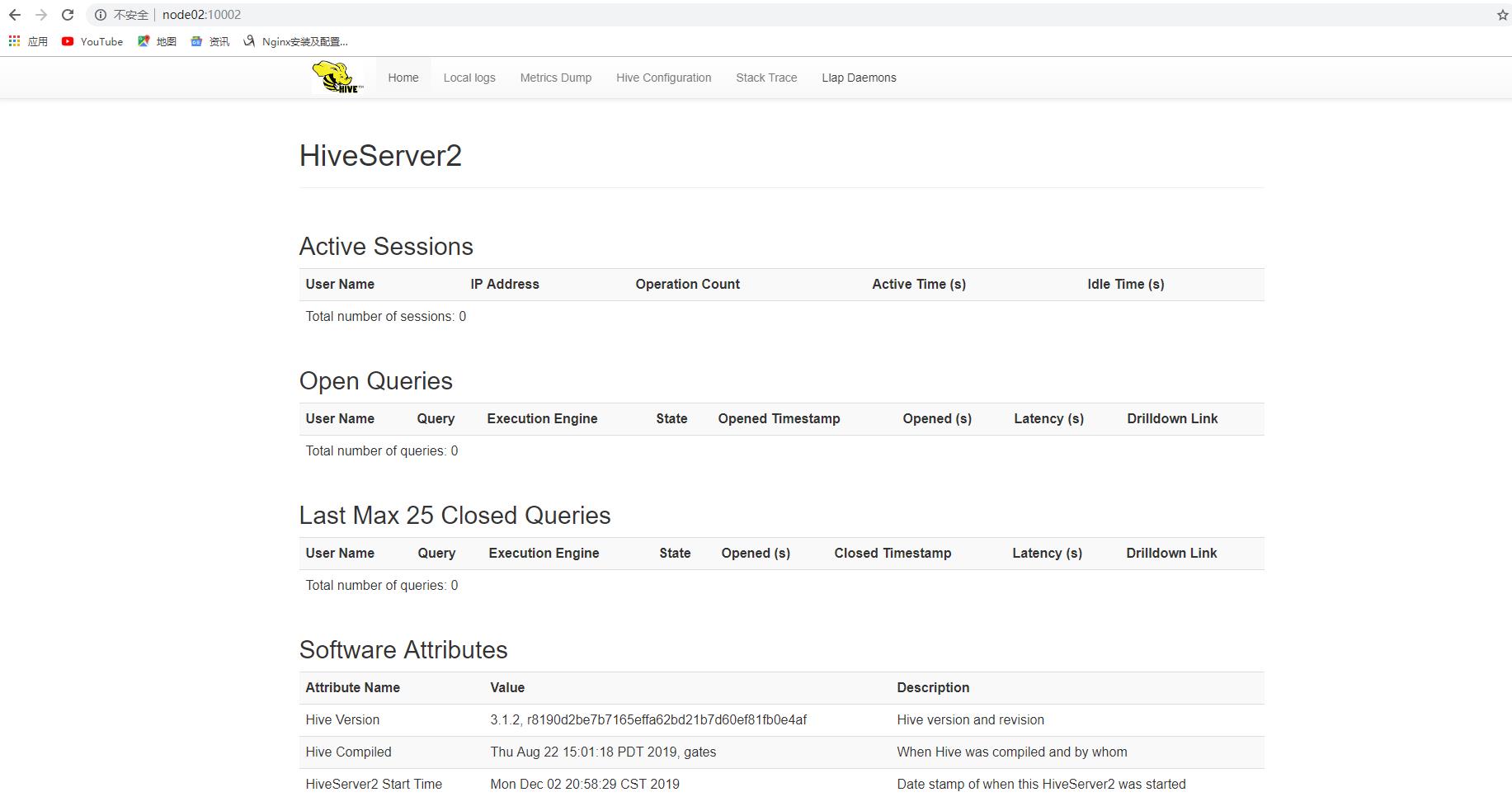

Start HiveServer2 (its service port defaults to 10000 and its Web UI port defaults to 10002)

    $HIVE_HOME/bin/hive --service hiveserver2
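Once HiveServer2 is up, a client such as `beeline` connects to it with a `jdbc:hive2://` URL on the service port. The sketch below only builds that URL; `hs2_jdbc_url` is a hypothetical helper name, and the host `node02` and user `root` in the example are assumptions taken from the shell prompts in this post.

```shell
# Hypothetical helper: build a HiveServer2 JDBC URL; the port defaults to 10000.
hs2_jdbc_url() {
    host=$1
    port=${2:-10000}
    printf 'jdbc:hive2://%s:%s\n' "$host" "$port"
}

# Example, assuming HiveServer2 is running on node02 and beeline is on the PATH:
# beeline -u "$(hs2_jdbc_url node02)" -n root
```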