1. Preparation:

CentOS 6.5

JDK 1.7

Java SE download page: http://www.oracle.com/technetwork/java/javase/downloads/java-archive-downloads-javase7-521261.html

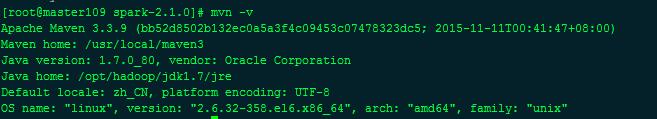

Maven 3.3.9

Maven 3.3.9 download page: https://mirrors.tuna.tsinghua.edu.cn/apache//maven/maven-3/3.3.9/binaries/

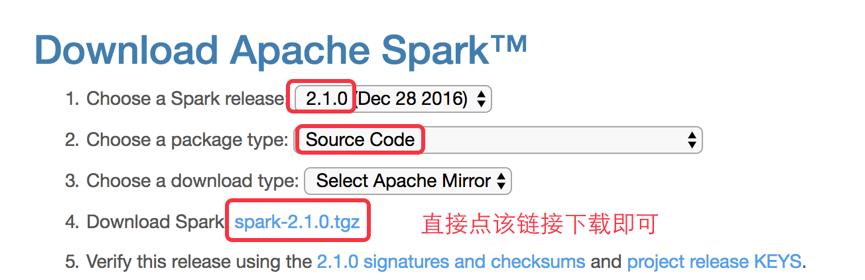

Spark 2.1.0 source package download:

http://spark.apache.org/downloads.html

File name after download:

*************************************** Divider: build starts ***************************************

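Upload the packages to the Linux machine.

Install Maven: extract the archive and configure the environment variables.

Details omitted here...

mvn -v

If this prints the Maven version, Maven is set up correctly.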
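The Spark 2.1 build documentation asks for Maven 3.3.9 or newer, so it can help to check the version mvn -v reports rather than just seeing that it runs. This is a minimal sketch that reads mvn -v output on stdin; the helper names version_ge and maven_version_ok are hypothetical, and it assumes the output contains a line starting with "Apache Maven X.Y.Z".

```shell
#!/bin/sh
# Sketch: check that the installed Maven is at least 3.3.9.
# version_ge and maven_version_ok are hypothetical helper names.

# version_ge A B: succeed if dotted version A >= B.
# Compares the first three numeric fields; missing fields count as 0.
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    split(a, x, "."); split(b, y, ".")
    for (i = 1; i <= 3; i++) {
      if (x[i] + 0 > y[i] + 0) exit 0
      if (x[i] + 0 < y[i] + 0) exit 1
    }
    exit 0
  }'
}

# maven_version_ok: read `mvn -v` output on stdin, print the version found,
# and succeed only if it is 3.3.9 or newer.
maven_version_ok() {
  mvn_ver=$(sed -n 's/^Apache Maven \([0-9][0-9.]*\).*/\1/p' | head -n 1)
  if [ -z "$mvn_ver" ]; then
    echo "could not find an 'Apache Maven X.Y.Z' line" >&2
    return 1
  fi
  echo "Maven $mvn_ver"
  version_ge "$mvn_ver" 3.3.9
}
```

Usage: mvn -v | maven_version_ok && echo "Maven is new enough"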

*************************************** Divider ***************************************

My Hadoop version is hadoop-2.6.0-cdh5.7.0:

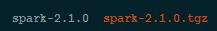

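Extract the Spark source package.

This gives the source directory.

Ignore the Spark package I had already built, which also shows up here.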

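First give Maven more memory, otherwise the build may fail with out-of-memory errors. A temporary setting for the current shell is enough: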

export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"

[root@master109 opt]# echo $MAVEN_OPTS
-Xmx2g -XX:ReservedCodeCacheSize=512m
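If you would rather not re-export MAVEN_OPTS in every new shell, the same line can be appended to a startup file once. This is a minimal sketch; persist_maven_opts is a hypothetical helper, and ~/.bashrc as the default target is an assumption (pass another file as the first argument). Running it twice does not add a duplicate line.

```shell
#!/bin/sh
# Sketch: persist the MAVEN_OPTS export in a shell startup file.
# persist_maven_opts is a hypothetical helper; ~/.bashrc is an assumed
# default target. Running it again does not add a duplicate line.
persist_maven_opts() {
  rc_file=${1:-$HOME/.bashrc}
  opts_line='export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"'
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  if ! grep -qxF -- "$opts_line" "$rc_file" 2>/dev/null; then
    printf '%s\n' "$opts_line" >> "$rc_file"
  fi
}
```

After calling it, run source ~/.bashrc (or open a new shell) so the variable is set.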

Go to the Spark source root directory and run:

./dev/make-distribution.sh --name 2.6.0-cdh5.7.0 --tgz -Phadoop-2.6 -Dhadoop.version=2.6.0-cdh5.7.0 -Phive -Phive-thriftserver -Pyarn
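The flags tie together as follows: --name labels the package, -Dhadoop.version picks the exact Hadoop artifacts, and -Phadoop-2.6 selects the matching Hadoop profile. This sketch builds the same command from a Hadoop version string. It assumes the profile is always hadoop-major.minor (true for the command above, but the profile must actually exist in Spark's pom.xml); build_spark_dist and the DRY_RUN switch are hypothetical.

```shell
#!/bin/sh
# Sketch: build the make-distribution.sh command from a Hadoop version.
# Assumption: the Maven profile is hadoop-<major>.<minor>, which matches the
# command in the text (2.6.0-cdh5.7.0 -> -Phadoop-2.6); the profile still has
# to exist in Spark's pom.xml. build_spark_dist and DRY_RUN are hypothetical.
build_spark_dist() {
  hadoop_version=$1
  # Keep the first two dot-separated fields: "2.6.0-cdh5.7.0" -> "2.6"
  hadoop_profile=hadoop-$(printf '%s\n' "$hadoop_version" | cut -d. -f1,2)
  set -- ./dev/make-distribution.sh --name "$hadoop_version" --tgz \
    "-P$hadoop_profile" "-Dhadoop.version=$hadoop_version" \
    -Phive -Phive-thriftserver -Pyarn
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"      # only print the command
  else
    "$@"           # run it; call this from the Spark source root
  fi
}
```

DRY_RUN=1 build_spark_dist 2.6.0-cdh5.7.0 prints the command without running it, which is a cheap way to double-check the flags before a long build.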

Result:

[INFO] BUILD FAILURE
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 9.810 s (Wall Clock)
[INFO] Finished at: 2017-10-13T15:52:09+08:00
[INFO] Final Memory: 67M/707M
[INFO] ------------------------------------------------------------------------
[ERROR] Failed to execute goal on project spark-launcher_2.11: Could not resolve dependencies for project org.apache.spark:spark-launcher_2.11:jar:2.1.0: Failure to find org.apache.hadoop:hadoop-client:jar:2.6.0-cdh5.7.0 in https://repo1.maven.org/maven2 was cached in the local repository, resolution will not be reattempted until the update interval of central has elapsed or updates are forced -> [Help 1]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException
[ERROR]
[ERROR] After correcting the problems, you can resume the build with the command
[ERROR] mvn <goals> -rf :spark-launcher_2.11

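The build failed because Maven could not find a dependency, org.apache.hadoop:hadoop-client:jar:2.6.0-cdh5.7.0. The repository is the problem: by default Maven only searches the Apache central repository (https://repo1.maven.org/maven2), which does not have this CDH build of Hadoop, so the Cloudera repository has to be added.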
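The log also says the failed lookup "was cached in the local repository, resolution will not be reattempted until the update interval of central has elapsed or updates are forced". If the error keeps coming back even after the repository fix, that cache may be the reason; running Maven with -U forces a fresh check, and deleting the cached failure markers is another option. This sketch assumes Maven's default local repository (~/.m2/repository), its groupId/artifactId/version directory layout, and that failures are recorded in *.lastUpdated files there; clear_failed_lookups is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: delete Maven's cached "not found" markers for one artifact so the
# next build asks the remote repositories again (running Maven with -U is
# the other way to force this). Assumes the default local repository
# ~/.m2/repository and its groupId/artifactId/version directory layout;
# clear_failed_lookups is a hypothetical helper.
clear_failed_lookups() {
  group_id=$1 artifact_id=$2 version=$3
  local_repo=${4:-$HOME/.m2/repository}
  # org.apache.hadoop -> org/apache/hadoop
  group_path=$(printf '%s' "$group_id" | tr . /)
  artifact_dir=$local_repo/$group_path/$artifact_id/$version
  [ -d "$artifact_dir" ] || return 0
  # Print each *.lastUpdated marker, then remove it
  find "$artifact_dir" -name '*.lastUpdated' -print -exec rm -f {} +
}
```

For the error above: clear_failed_lookups org.apache.hadoop hadoop-client 2.6.0-cdh5.7.0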

Edit pom.xml:

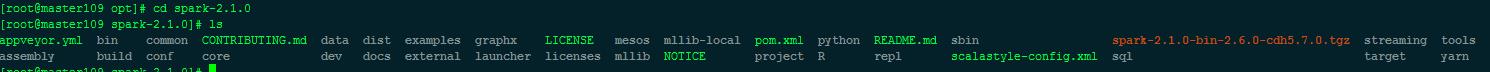

[root@master109 spark-2.1.0]# ls
appveyor.yml bin common CONTRIBUTING.md data docs external launcher licenses mllib NOTICE project R repl scalastyle-config.xml streaming tools assembly build conf core dev examples graphx LICENSE mesos mllib-local pom.xml python README.md sbin sql target yarn
[root@master109 spark-2.1.0]# vim pom.xml

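Insert the following at the position shown in the screenshot (the existing repositories section). The part between the two #--------------------------------------------- lines is the new content that changes the repository. Remember to delete those separator lines afterwards.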

<repositories>
  <repository>
    <id>central</id>
    <!-- This should be at top, it makes maven try the central repo first and then others and hence faster dep resolution -->
    <name>Maven Repository</name>
    <url>https://repo1.maven.org/maven2</url>
    <releases>
      <enabled>true</enabled>
    </releases>
    <snapshots>
      <enabled>false</enabled>
    </snapshots>
  </repository>
#---------------------------------------------
  <repository>
    <id>cloudera</id>
    <name>cloudera Repository</name>
    <url>https://repository.cloudera.com/artifactory/cloudera-repos</url>
  </repository>
#---------------------------------------------
</repositories>
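Before starting another long build, it is worth checking that the edit actually landed: the Cloudera URL should be in pom.xml and none of the #--- separator lines should be left behind. This is a minimal grep-based sketch (it does not validate the XML itself); check_pom is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: sanity-check the edited pom.xml before rebuilding. It confirms the
# Cloudera repository URL is present and that no "#---" separator lines were
# left in the file. check_pom is a hypothetical helper; it does not parse XML.
check_pom() {
  pom_file=${1:-pom.xml}
  pom_ok=0
  if ! grep -q 'https://repository.cloudera.com/artifactory/cloudera-repos' "$pom_file"; then
    echo "missing Cloudera repository in $pom_file" >&2
    pom_ok=1
  fi
  if grep -q '^[[:space:]]*#-' "$pom_file"; then
    echo "leftover #--- separator lines in $pom_file" >&2
    pom_ok=1
  fi
  return "$pom_ok"
}
```

Run it from the Spark source root as check_pom (or check_pom path/to/pom.xml); it stays silent and succeeds when both checks pass.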

Rebuild:

[root@master109 spark-2.1.0]# ./dev/make-distribution.sh --name 2.6.0-cdh5.7.0 --tgz -Phadoop-2.6 -Dhadoop.version=2.6.0-cdh5.7.0 -Phive -Phive-thriftserver -Pyarn

Wait for the build to finish (it took about 13 minutes here):

[INFO] Reactor Summary:
[INFO]
[INFO] Spark Project Parent POM ........................... SUCCESS [ 3.997 s]
[INFO] Spark Project Tags ................................. SUCCESS [ 3.394 s]
[INFO] Spark Project Sketch ............................... SUCCESS [ 14.061 s]
[INFO] Spark Project Networking ........................... SUCCESS [ 37.680 s]
[INFO] Spark Project Shuffle Streaming Service ............ SUCCESS [ 12.750 s]
[INFO] Spark Project Unsafe ............................... SUCCESS [ 33.158 s]
[INFO] Spark Project Launcher ............................. SUCCESS [ 50.148 s]
[INFO] Spark Project Core ................................. SUCCESS [04:16 min]
[INFO] Spark Project ML Local Library ..................... SUCCESS [ 45.832 s]
[INFO] Spark Project GraphX ............................... SUCCESS [ 26.712 s]
[INFO] Spark Project Streaming ............................ SUCCESS [ 58.080 s]
[INFO] Spark Project Catalyst ............................. SUCCESS [02:22 min]
[INFO] Spark Project SQL .................................. SUCCESS [03:02 min]
[INFO] Spark Project ML Library ........................... SUCCESS [02:16 min]
[INFO] Spark Project Tools ................................ SUCCESS [ 2.588 s]
[INFO] Spark Project Hive ................................. SUCCESS [01:19 min]
[INFO] Spark Project REPL ................................. SUCCESS [ 6.337 s]
[INFO] Spark Project YARN Shuffle Service ................. SUCCESS [ 13.252 s]
[INFO] Spark Project YARN ................................. SUCCESS [ 57.556 s]
[INFO] Spark Project Hive Thrift Server ................... SUCCESS [ 45.074 s]
[INFO] Spark Project Assembly ............................. SUCCESS [ 7.410 s]
[INFO] Spark Project External Flume Sink .................. SUCCESS [ 30.214 s]
[INFO] Spark Project External Flume ....................... SUCCESS [ 19.359 s]
[INFO] Spark Project External Flume Assembly .............. SUCCESS [ 6.082 s]
[INFO] Spark Integration for Kafka 0.8 .................... SUCCESS [ 30.266 s]
[INFO] Spark Project Examples ............................. SUCCESS [ 28.668 s]
[INFO] Spark Project External Kafka Assembly .............. SUCCESS [ 6.919 s]
[INFO] Spark Integration for Kafka 0.10 ................... SUCCESS [ 30.811 s]
[INFO] Spark Integration for Kafka 0.10 Assembly .......... SUCCESS [ 6.551 s]
[INFO] Kafka 0.10 Source for Structured Streaming ......... SUCCESS [ 17.707 s]
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 13:25 min (Wall Clock)
[INFO] Finished at: 2017-10-13T16:35:47+08:00
[INFO] Final Memory: 90M/979M
[INFO] ------------------------------------------------------------------------

Done!
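With --tgz, make-distribution.sh should leave an archive named after the Spark version and the --name value, here spark-2.1.0-bin-2.6.0-cdh5.7.0.tgz, in the Spark source root. This sketch checks for that file and unpacks it. The naming and location are assumptions based on the script's conventions, the /opt default prefix is an assumption too, and install_spark_dist is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: unpack the distribution produced by make-distribution.sh --tgz.
# Assumption: the archive is named spark-<spark version>-bin-<--name value>.tgz
# and sits in the Spark source root; the /opt default prefix is also an
# assumption. install_spark_dist is a hypothetical helper.
install_spark_dist() {
  src_dir=$1 dist_name=$2 spark_version=$3 prefix=${4:-/opt}
  tgz=$src_dir/spark-$spark_version-bin-$dist_name.tgz
  if [ ! -f "$tgz" ]; then
    echo "not found: $tgz" >&2
    return 1
  fi
  tar -xzf "$tgz" -C "$prefix"
  # The archive is expected to hold one top-level directory with the same base name
  echo "$prefix/spark-$spark_version-bin-$dist_name"
}
```

For example, with the source tree in a hypothetical /opt/spark-2.1.0: install_spark_dist /opt/spark-2.1.0 2.6.0-cdh5.7.0 2.1.0 /opt prints the directory the distribution was unpacked into.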