MongoDB: Replica Sets (rs) and Sharded Clusters

Posted by 运维人在路上


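I. MongoDB Replica Sets

1.1 Introduction to Replica Sets

1) A MongoDB replica set is a group of mongod processes that maintain the same data set. Replica sets provide data redundancy and high availability and are the basis of any production deployment. Because copies of the data are kept on different machines, a single point of failure does not cause data loss, and the deployment can absorb machine failures at any time.

2) Replication can also increase read capacity. If the read preference allows it, clients can read from secondaries while writes go to the primary, so different servers serve different users and the read load is spread out. Reads from secondaries may be slightly stale.

3) The mongod instances of a replica set manage the same data set and may run on different machines. One member is the primary, which accepts all client writes; the others are secondaries, which replicate from the primary and stay in sync with it. The primary records every change (write) in its operation log. Because the secondaries hold a copy of the primary's data, when the primary goes down one of the secondaries is elected as the new primary.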

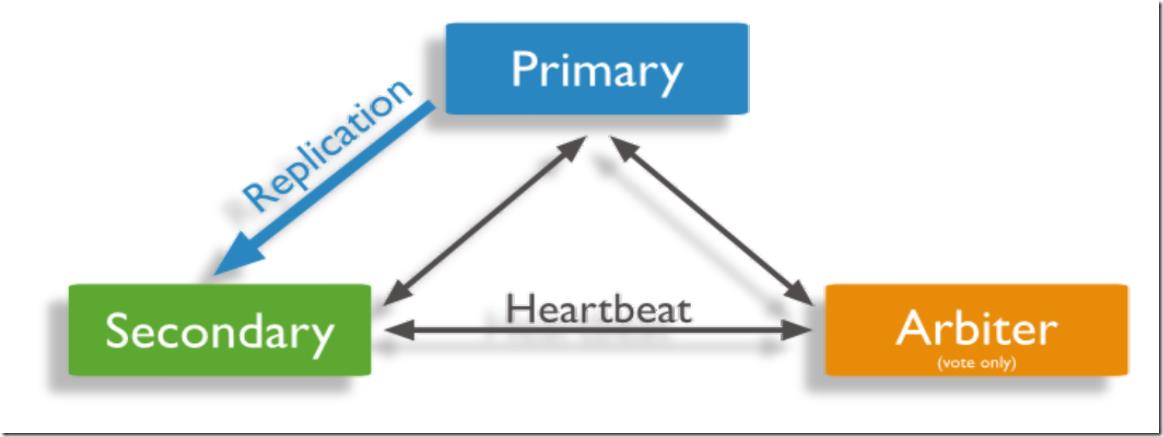

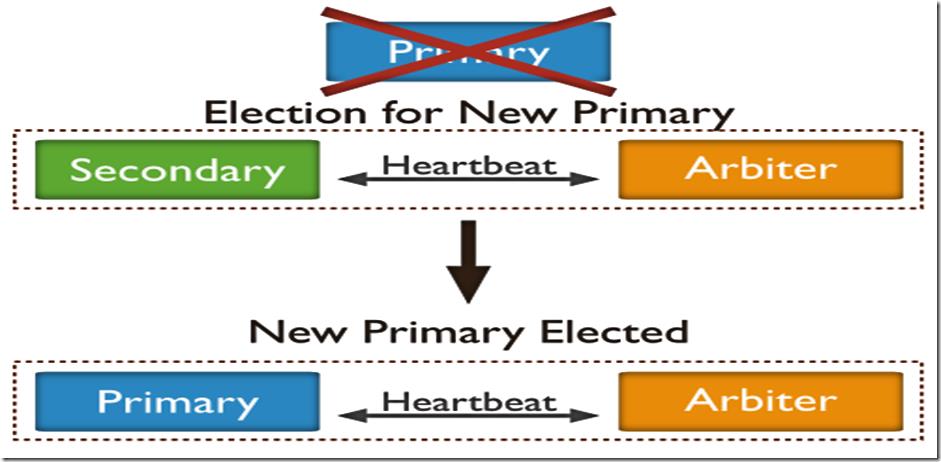

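4) A replica set may also include an arbiter. An arbiter stores no data; it exchanges heartbeats with the other members to track which of them are alive, and it casts a vote when a primary is elected.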

1.2、复制集基本原理

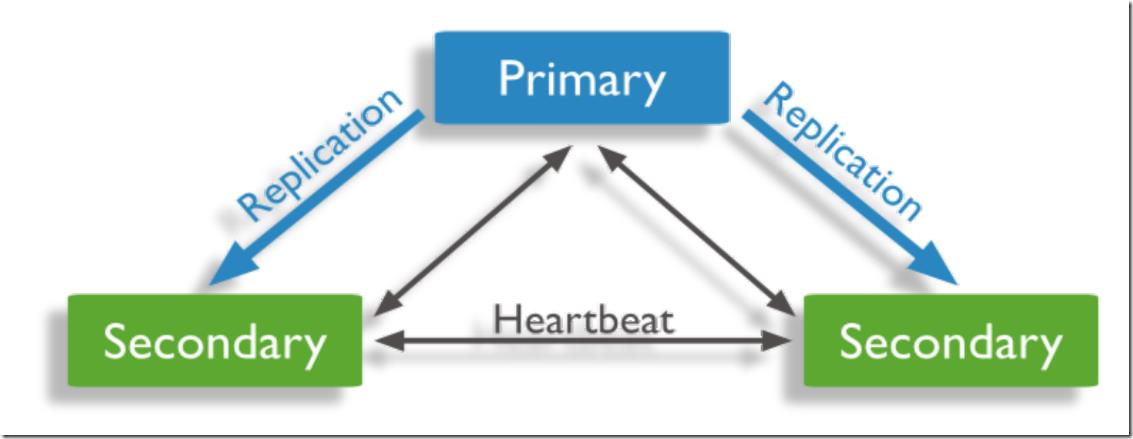

1、基本构成是1主2从的结构,自带互相监控投票机制(Raft(MongoDB),Paxos(mysql MGR 用的是变种))

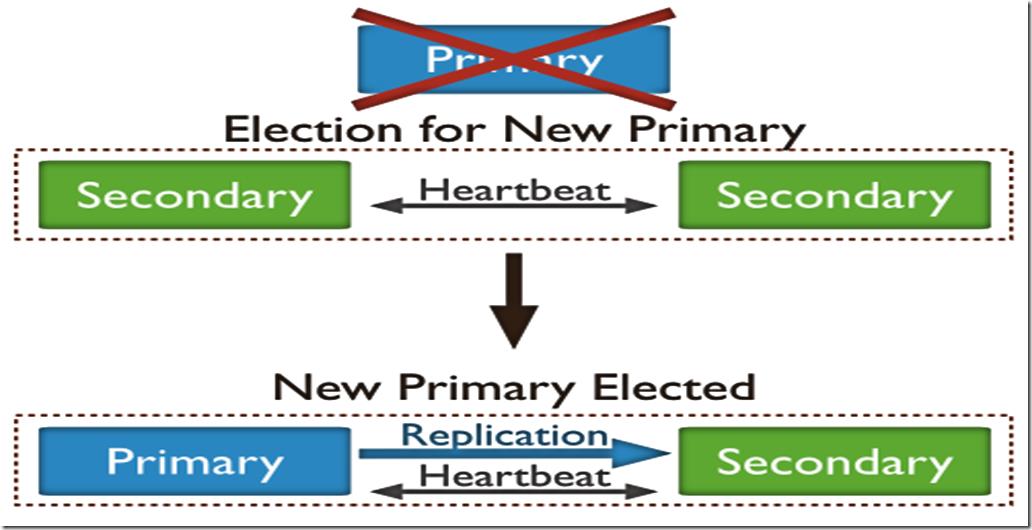

2、如果发生主库宕机,复制集内部会进行投票选举,选择一个新的主库替代原有主库对外提供服务。同时复制集会自动通知客户端程序,主库已经发生切换了。应用就会连接到新的主库。
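The voting rule behind the election can be sketched in a few lines. This is a minimal illustration, assuming the usual rule that a candidate needs a strict majority of the voting members; the function names `majority` and `faultTolerance` are made up for this example and are not part of the mongo shell.

```javascript
// Votes needed to win an election: a strict majority of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many voting members can be lost while a primary can still be elected.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [3, 4, 5]) {
  console.log(`${n} members: majority=${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
```

Under this rule a fourth member adds no extra fault tolerance over three, which is one reason replica sets are normally built with an odd number of voting members.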

1.3、复制集架构

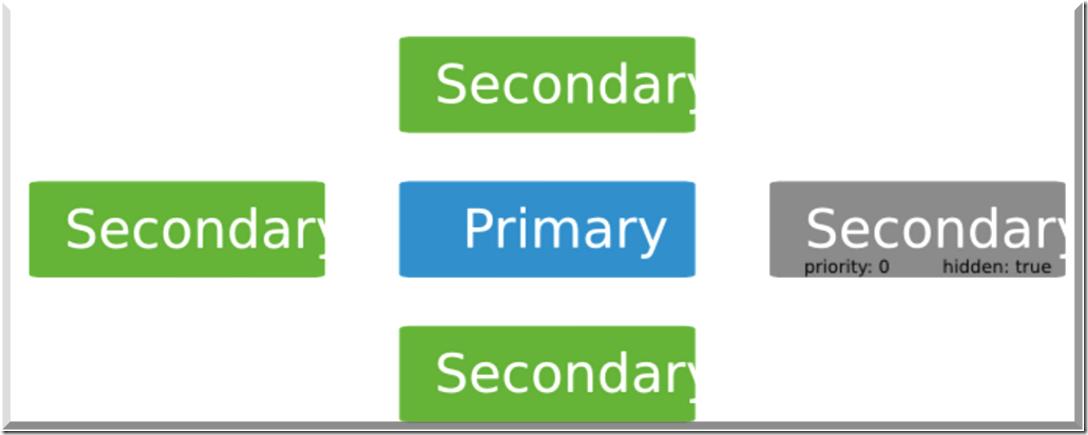

1.3.1、一主二从架构

一个包含3个mongod的复制集架构如下所示:

如果主服务器失效,会变成:

1.3.2、一主一从一仲裁

如果主服务器失效:

1.3.3、隐藏节点

客户端将不会把读请求分发到隐藏节点上,即使我们设定了 复制集读选项 。这些隐藏节点将不会收到来自应用程序的请求。我们可以将隐藏节点专用于报表节点或是备份节点。 延时节点也应该是一个隐藏节点。

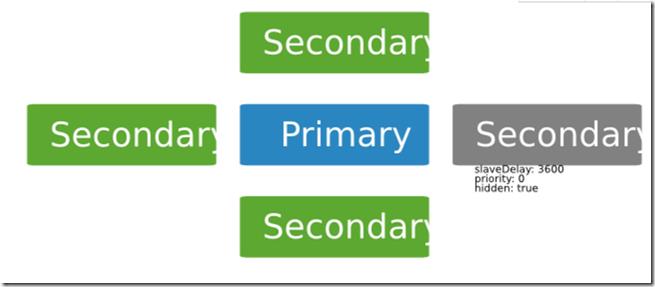

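1.3.4 Delayed Members

A delayed member holds a deliberately delayed copy of the data set, so it can help recover data after operator mistakes or other accidents. For example, after a failed application upgrade, or after a collection or database is dropped by mistake, the data can be recovered from the delayed member. Its member configuration looks like this:

{
  "_id" : <num>, "host" : <hostname:port>, "priority" : 0,
  "slaveDelay" : <seconds>, "hidden" : true
}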

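1.4 Building a Replica Set

1.4.1 Planning

1) Three or more MongoDB nodes (or multiple instances on one host)

2) Multiple instances:

- Separate ports: 28017, 28018, 28019, 28020

- Separate directory trees

1.4.2 Multi-Instance Setup

1) Create the directories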

su - mongod

mkdir -p /mongodb/28017/{conf,data,log}

mkdir -p /mongodb/28018/{conf,data,log}

mkdir -p /mongodb/28019/{conf,data,log}

mkdir -p /mongodb/28020/{conf,data,log}

2) Prepare one configuration file per instance

cat > /mongodb/28017/conf/mongod.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/28017/log/mongodb.log
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: /mongodb/28017/data
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
net:
  bindIp: 10.0.0.21,127.0.0.1
  port: 28017
replication:
  oplogSizeMB: 2048
  replSetName: my_repl   # replica set name
EOF

\cp /mongodb/28017/conf/mongod.conf /mongodb/28018/conf/
\cp /mongodb/28017/conf/mongod.conf /mongodb/28019/conf/
\cp /mongodb/28017/conf/mongod.conf /mongodb/28020/conf/

sed 's#28017#28018#g' /mongodb/28018/conf/mongod.conf -i
sed 's#28017#28019#g' /mongodb/28019/conf/mongod.conf -i
sed 's#28017#28020#g' /mongodb/28020/conf/mongod.conf -i

3) Start the instances

mongod -f /mongodb/28017/conf/mongod.conf
mongod -f /mongodb/28018/conf/mongod.conf
mongod -f /mongodb/28019/conf/mongod.conf
mongod -f /mongodb/28020/conf/mongod.conf

# Check the listening ports
[mongod@mongo ~]$ netstat -lntup|grep mongo
(Not all processes could be identified, non-owned process info will not be shown, you would have to be root to see it all.)
tcp 0 0 127.0.0.1:28017 0.0.0.0:* LISTEN 3490/mongod
tcp 0 0 10.0.0.21:28017 0.0.0.0:* LISTEN 3490/mongod
tcp 0 0 127.0.0.1:28018 0.0.0.0:* LISTEN 3519/mongod
tcp 0 0 10.0.0.21:28018 0.0.0.0:* LISTEN 3519/mongod
tcp 0 0 127.0.0.1:28019 0.0.0.0:* LISTEN 3548/mongod
tcp 0 0 10.0.0.21:28019 0.0.0.0:* LISTEN 3548/mongod
tcp 0 0 127.0.0.1:28020 0.0.0.0:* LISTEN 3577/mongod
tcp 0 0 10.0.0.21:28020 0.0.0.0:* LISTEN 3577/mongod

4) Configure the replica set (one primary and two ordinary secondaries)

Commands:

mongo --port 28017 admin

config = {_id: 'my_repl', members: [
    {_id: 0, host: '10.0.0.21:28017'},
    {_id: 1, host: '10.0.0.21:28018'},
    {_id: 2, host: '10.0.0.21:28019'}]
}

rs.initiate(config)   // initialize the replica set
rs.status();          // check the replica set status
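The configuration document passed to rs.initiate() is plain JavaScript, so it can be generated instead of typed by hand. The sketch below assumes the single-host, multi-port layout used in this walkthrough; `buildReplSetConfig` is a hypothetical helper written for this example, not a shell built-in.

```javascript
// Build a replica set config document for several instances on one host.
// arbiterPort is optional: the member on that port is marked arbiterOnly.
function buildReplSetConfig(name, host, ports, arbiterPort) {
  const members = ports.map((port, i) => {
    const member = { _id: i, host: `${host}:${port}` };
    if (port === arbiterPort) member.arbiterOnly = true;
    return member;
  });
  return { _id: name, members };
}

// One primary and two ordinary secondaries.
const plainConfig = buildReplSetConfig("my_repl", "10.0.0.21", [28017, 28018, 28019]);
// One primary, one secondary, one arbiter on port 28019.
const arbiterConfig = buildReplSetConfig("my_repl", "10.0.0.21", [28017, 28018, 28019], 28019);

console.log(JSON.stringify(plainConfig, null, 2));
console.log(JSON.stringify(arbiterConfig, null, 2));
```

Inside the mongo shell the resulting object would be handed to rs.initiate() exactly like the hand-written config above.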

Session transcript:

[mongod@mongo ~]$ mongo --port 28017 admin

MongoDB shell version v3.6.11-14-g48d999c

connecting to: mongodb://127.0.0.1:28017/admin?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("8f4e21fd-0722-4fbf-91a9-027e6a633e95") }

MongoDB server version: 3.6.11-14-g48d999c

Server has startup warnings:

2019-10-04T19:08:54.444+0800 I CONTROL [initandlisten]

2019-10-04T19:08:54.444+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2019-10-04T19:08:54.444+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2019-10-04T19:08:54.444+0800 I CONTROL [initandlisten]

> config = {_id: 'my_repl', members: [
... {_id: 0, host: '10.0.0.21:28017'},
... {_id: 1, host: '10.0.0.21:28018'},
... {_id: 2, host: '10.0.0.21:28019'}]
... }

{

"_id" : "my_repl",

"members" : [

{

"_id" : 0,

"host" : "10.0.0.21:28017"

},

{

"_id" : 1,

"host" : "10.0.0.21:28018"

},

{

"_id" : 2,

"host" : "10.0.0.21:28019"

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1570187674, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1570187674, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

my_repl:OTHER>

my_repl:SECONDARY> #an election takes place here

my_repl:SECONDARY>

my_repl:PRIMARY> rs.status()

{

"set" : "my_repl",

"date" : ISODate("2019-10-04T11:15:34.581Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "10.0.0.21:28017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 401,

"optime" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-10-04T11:15:26Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1570187685, 1),

"electionDate" : ISODate("2019-10-04T11:14:45Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "10.0.0.21:28018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 59,

"optime" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-10-04T11:15:26Z"),

"optimeDurableDate" : ISODate("2019-10-04T11:15:26Z"),

"lastHeartbeat" : ISODate("2019-10-04T11:15:33.089Z"),

"lastHeartbeatRecv" : ISODate("2019-10-04T11:15:33.986Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "10.0.0.21:28017",

"syncSourceHost" : "10.0.0.21:28017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "10.0.0.21:28019",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 59,

"optime" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1570187726, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-10-04T11:15:26Z"),

"optimeDurableDate" : ISODate("2019-10-04T11:15:26Z"),

"lastHeartbeat" : ISODate("2019-10-04T11:15:33.089Z"),

"lastHeartbeatRecv" : ISODate("2019-10-04T11:15:34Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "10.0.0.21:28017",

"syncSourceHost" : "10.0.0.21:28017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1570187726, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1570187726, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

my_repl:PRIMARY>

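5) Configure the replica set (one primary, one secondary, one arbiter)

Commands:

mongo --port 28017 admin

config = {_id: 'my_repl', members: [
    {_id: 0, host: '10.0.0.21:28017'},
    {_id: 1, host: '10.0.0.21:28018'},
    {_id: 2, host: '10.0.0.21:28019', "arbiterOnly": true}]
}

rs.initiate(config)

Procedure on the replica set that is already running:

First remove node 10.0.0.21:28019 from the set, restart that instance, and then add 10.0.0.21:28019 back as an arbiter.

#Remove the node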

my_repl:PRIMARY> rs.remove("10.0.0.21:28019")

{

"ok" : 1,

"operationTime" : Timestamp(1570188004, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1570188004, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

#Restart the instance

[mongod@mongo ~]$ mongod -f /mongodb/28019/conf/mongod.conf

#Log in and add the arbiter

[mongod@mongo ~]$ mongo --port 28017 admin

my_repl:PRIMARY> rs.addArb("10.0.0.21:28019")

{

"ok" : 1,

"operationTime" : Timestamp(1570188231, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1570188231, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

#Check the status

my_repl:PRIMARY> rs.status()

{

"set" : "my_repl",

"date" : ISODate("2019-10-04T11:23:55.430Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1570188231, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1570188231, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1570188234, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1570188234, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "10.0.0.21:28017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 902,

"optime" : {

"ts" : Timestamp(1570188234, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-10-04T11:23:54Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1570187685, 1),

"electionDate" : ISODate("2019-10-04T11:14:45Z"),

"configVersion" : 3,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "10.0.0.21:28018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 560,

"optime" : {

"ts" : Timestamp(1570188231, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1570188231, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2019-10-04T11:23:51Z"),

"optimeDurableDate" : ISODate("2019-10-04T11:23:51Z"),

"lastHeartbeat" : ISODate("2019-10-04T11:23:53.528Z"),

"lastHeartbeatRecv" : ISODate("2019-10-04T11:23:55.044Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 3

},

{

"_id" : 2,

"name" : "10.0.0.21:28019",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER", #the arbiter was added successfully

"uptime" : 1,

"lastHeartbeat" : ISODate("2019-10-04T11:23:53.529Z"),

"lastHeartbeatRecv" : ISODate("2019-10-04T11:23:54.541Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 3

}

],

"ok" : 1,

"operationTime" : Timestamp(1570188234, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1570188234, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

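}

1.5 Replica Set Administration

1.5.1 Checking Replica Set Status

rs.status();    // overall replica set status
rs.isMaster();  // whether the current node is the primary

1.5.2 Adding and Removing Members

rs.remove("ip:port");  // remove a member
rs.add("ip:port");     // add a secondary
rs.addArb("ip:port");  // add an arbiter

1.6 Special Members

1.6.1 How to Add Them

A special member can be set up in two ways:

1. Configure it as a special member while the replica set is being built.

2. Convert an ordinary secondary by changing the replica set configuration. First find the array index of the member you want to change.

1.6.2 Types of Special Members

arbiter: votes in elections, but stores no data and serves no client requests.

hidden: a hidden member can never become primary and serves no application traffic.

delay: a delayed member lags behind the primary by a set interval. Because its data is stale it should neither serve requests nor become primary, so it is usually combined with hidden.

In practice delay and hidden are normally configured together.

1.7 Configuring a Delayed Member

A delayed member is usually also configured as hidden.

Method: check the output of rs.conf() to find the member's array index; the index 3 used below is specific to this setup.

cfg=rs.conf()
cfg.members[3].priority=0      // priority 0: the member cannot become primary
cfg.members[3].hidden=true     // hidden member
cfg.members[3].slaveDelay=120  // delay in seconds
rs.reconfig(cfg)               // apply the new configuration

// To undo the settings above:
cfg=rs.conf()
cfg.members[3].priority=1
cfg.members[3].hidden=false
cfg.members[3].slaveDelay=0
rs.reconfig(cfg)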
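Hard-coding the array index is easy to get wrong, because the index is a position in the members array and not the member's _id. A minimal sketch of looking the member up by host instead is shown below; `memberIndexByHost` and `makeDelayedHidden` are hypothetical helpers, and the `sampleCfg` object only mimics the shape of the rs.conf() output so the code can run outside the shell.

```javascript
// Find the array position of a member by its host string.
function memberIndexByHost(cfg, host) {
  const index = cfg.members.findIndex((m) => m.host === host);
  if (index === -1) {
    throw new Error(`host ${host} is not in the replica set config`);
  }
  return index;
}

// Mark one member as a hidden, delayed, non-electable member (3.6-style field names).
function makeDelayedHidden(cfg, host, delaySeconds) {
  const i = memberIndexByHost(cfg, host);
  Object.assign(cfg.members[i], { priority: 0, hidden: true, slaveDelay: delaySeconds });
  return cfg;
}

// Stand-in for the document returned by rs.conf().
const sampleCfg = {
  _id: "my_repl",
  members: [
    { _id: 0, host: "10.0.0.21:28017", priority: 1, hidden: false, slaveDelay: 0 },
    { _id: 1, host: "10.0.0.21:28018", priority: 1, hidden: false, slaveDelay: 0 },
    { _id: 2, host: "10.0.0.21:28019", priority: 0, hidden: false, slaveDelay: 0 },
    { _id: 3, host: "10.0.0.21:28020", priority: 1, hidden: false, slaveDelay: 0 },
  ],
};

const updatedCfg = makeDelayedHidden(sampleCfg, "10.0.0.21:28020", 120);
console.log(updatedCfg.members[3]);
```

In the shell the same helper would take rs.conf() as its input, and the returned object would be passed to rs.reconfig().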

Session transcript:

#Add the node

my_repl:PRIMARY> rs.add("10.0.0.21:28020")

{

"ok" : 1,

"operationTime" : Timestamp(1570189737, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1570189737, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

my_repl:PRIMARY> cfg=rs.conf()

{

"_id" : "my_repl",

"version" : 8,

"protocolVersion" : NumberLong(1),

"members" : [

{

"_id" : 0,

"host" : "10.0.0.21:28017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "10.0.0.21:28018",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "10.0.0.21:28019",

"arbiterOnly" : true,

"buildIndexes" : true,

"hidden" : false,

"priority" : 0,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 3,

"host" : "10.0.0.21:28020",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5d97299a870e77ef769ee1de")

}

}

my_repl:PRIMARY> cfg.members[3].priority=0

0

my_repl:PRIMARY> cfg.members[3].hidden=true

true

my_repl:PRIMARY> cfg.members[3].slaveDelay=120

120

my_repl:PRIMARY> rs.reconfig(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1570189804, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1570189804, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

my_repl:PRIMARY>

#Verify the result

my_repl:PRIMARY> rs.conf()

{

"_id" : "my_repl",

"version" : 9,

"protocolVersion" : NumberLong(1),

"members" : [

{

"_id" : 0,

"host" : "10.0.0.21:28017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "10.0.0.21:28018",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "10.0.0.21:28019",

"arbiterOnly" : true,

"buildIndexes" : true,

"hidden" : false,

"priority" : 0,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 3,

"host" : "10.0.0.21:28020",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : true,

"priority" : 0,

"tags" : {

},

"slaveDelay" : NumberLong(120),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5d97299a870e77ef769ee1de")

}

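}

1.8 Command Summary

1) View the replica set configuration

rs.config()
rs.conf()

2) View the state of each member

rs.status()

3) Switch roles (do not do this casually)

rs.stepDown()
rs.freeze(300)  // freeze a secondary so that it will not become primary for 300 seconds

Note: both freeze() and stepDown() take their time arguments in seconds.

1.9 Allowing Reads on Secondaries

By default, a read issued on a secondary fails with an error: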

[mongod@mongo ~]$ mongo --port 28018 admin

my_repl:SECONDARY> show dbs

2019-10-04T19:59:20.967+0800 E QUERY [thread1] Error: listDatabases failed:{

"operationTime" : Timestamp(1570190357, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false", #the error message

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk",

"$clusterTime" : {

"clusterTime" : Timestamp(1570190357, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

Mongo.prototype.getDBs@src/mongo/shell/mongo.js:67:1

shellHelper.show@src/mongo/shell/utils.js:860:19

shellHelper@src/mongo/shell/utils.js:750:15

@(shellhelp2):1:1

my_repl:SECONDARY>

To allow reads on a secondary, run rs.slaveOk() on that secondary. The setting applies to the current shell connection only.

my_repl:SECONDARY> rs.slaveOk()
my_repl:SECONDARY> show dbs
admin 0.000GB
config 0.000GB
local 0.000GB
my_repl:SECONDARY>

1.10 Monitoring Replication Lag

[mongod@mongo ~]$ mongo --port 28017 admin
my_repl:PRIMARY> rs.printSlaveReplicationInfo()
source: 10.0.0.21:28018
syncedTo: Fri Oct 04 2019 20:02:17 GMT+0800 (CST)
0 secs (0 hrs) behind the primary
source: 10.0.0.21:28020
syncedTo: Fri Oct 04 2019 20:00:17 GMT+0800 (CST)
120 secs (0.03 hrs) behind the primary #delayed by 120s
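For scripted monitoring, the same lag figure can be derived from the optimeDate fields that rs.status() reports for each member. The sketch below is an assumption-laden illustration: `replicationLag` is a hypothetical helper, and `sampleStatus` is a hand-made object that only copies the relevant fields and timestamps from the output above.

```javascript
// Compute how far each secondary is behind the primary, in seconds,
// from an rs.status()-shaped document.
function replicationLag(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  if (!primary) throw new Error("no primary in status document");
  return status.members
    .filter((m) => m.stateStr === "SECONDARY")
    .map((m) => ({
      name: m.name,
      lagSeconds: (primary.optimeDate - m.optimeDate) / 1000,
    }));
}

// Hand-made stand-in for rs.status(); times are the UTC equivalents of the output above.
const sampleStatus = {
  members: [
    { name: "10.0.0.21:28017", stateStr: "PRIMARY", optimeDate: new Date("2019-10-04T12:02:17Z") },
    { name: "10.0.0.21:28018", stateStr: "SECONDARY", optimeDate: new Date("2019-10-04T12:02:17Z") },
    { name: "10.0.0.21:28019", stateStr: "ARBITER" },
    { name: "10.0.0.21:28020", stateStr: "SECONDARY", optimeDate: new Date("2019-10-04T12:00:17Z") },
  ],
};

const lags = replicationLag(sampleStatus);
console.log(lags);
```

A monitoring script could alert when lagSeconds for a non-delayed member exceeds a chosen threshold, while expecting the delayed member to sit near its configured delay.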

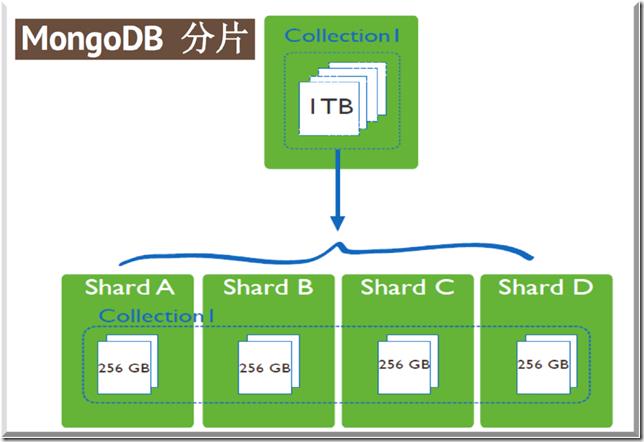

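II. Introduction to Sharded Clusters

2.1 Why Shard

Database applications with large data volumes and high throughput put heavy pressure on a single machine. A high query rate can exhaust the CPU, and a large data set strains storage until memory runs out and the load shifts to disk I/O.

There are two basic ways to address this: vertical scaling and horizontal scaling.

- Vertical scaling: add more CPU and storage resources to increase capacity.

- Horizontal scaling: distribute the data set across multiple servers. Horizontal scaling is sharding.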

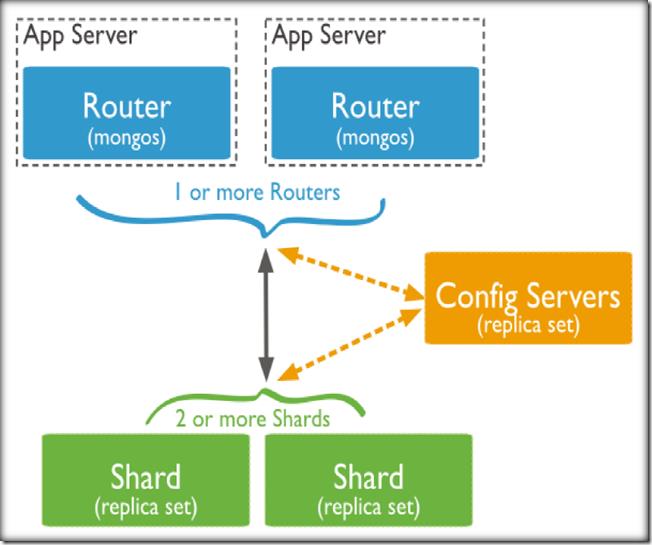

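2.2 Sharded Cluster Components

1) config server: stores the cluster metadata, that is, the list of shards and the routing information that maps data to shards. Three config server nodes are the usual deployment.

2) mongos: the access point for applications; all operations go through mongos. There are usually several mongos nodes. It routes requests and takes part in data migration and automatic balancing.

3) mongod: stores the application data. There are usually several mongod nodes so that the data can be split into shards.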

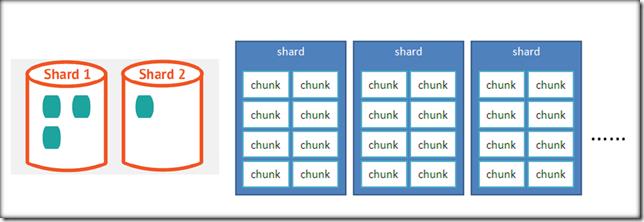

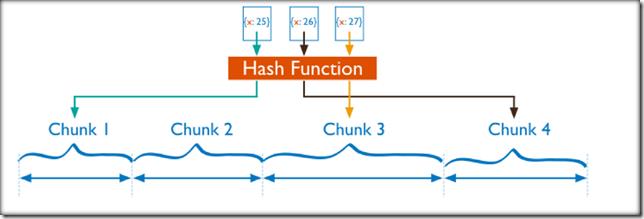

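2.3 Data Distribution (Chunks)

1) A sharded collection starts with one chunk

2) Default chunk size: 64 MB

3) MongoDB splits and migrates chunks automatically

2.3.1 Choosing a Chunk Size

1) The best chunk size is the one that fits the workload.

2) Splitting and migrating chunks is very I/O intensive.

3) Chunks split on inserts and updates; reads never trigger a split.

4) Trade-offs:

- Small chunk size: migrations during balancing are fast and the data is distributed more evenly, but splits are frequent and the routing nodes use more resources.

- Large chunk size: fewer splits, but each chunk move concentrates I/O load.

- Typically 100-200 MB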

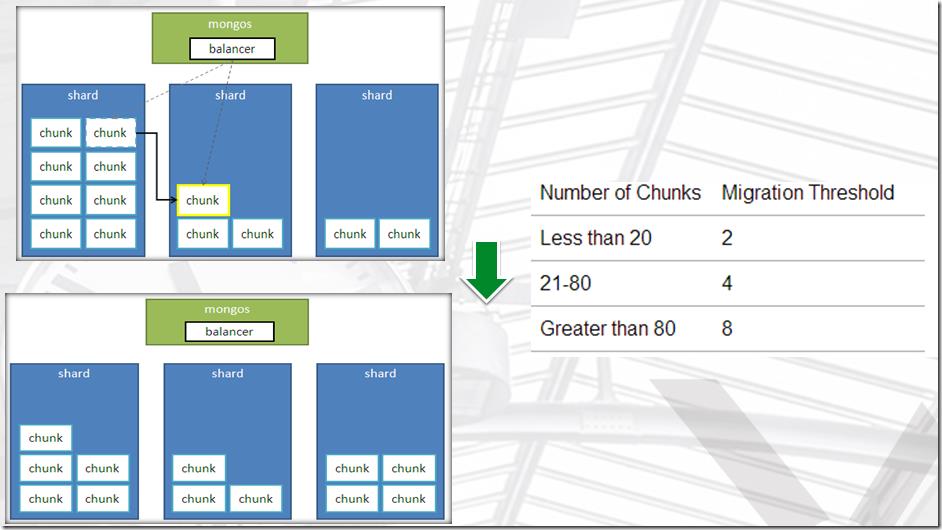

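2.3.2 Chunk Splitting and Migration

As data grows, a chunk whose size exceeds the configured chunk size (64 MB by default) is split into two, so the number of chunks keeps increasing. The chunk counts on the shards then become unbalanced, and the balancer automatically evens them out by moving chunks from the shard with the most chunks to the shard with the fewest. (Before MongoDB 3.4 the balancer was a mongos component; since 3.4 it runs on the primary of the config server replica set.)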
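The balancing idea can be illustrated with a toy simulation. This is only a sketch of "move one chunk at a time from the fullest shard to the emptiest shard"; the `threshold` parameter is an assumption made for this example, and MongoDB's real migration thresholds and scheduling are more involved.

```javascript
// Toy balancer: repeatedly move one chunk from the shard with the most chunks
// to the shard with the fewest, until the difference drops below the threshold.
function balance(chunkCounts, threshold = 2) {
  const limit = Math.max(2, threshold); // a limit below 2 would move chunks back and forth forever
  const counts = { ...chunkCounts };
  const moves = [];
  for (;;) {
    const shards = Object.keys(counts).sort((a, b) => counts[b] - counts[a]);
    const from = shards[0];
    const to = shards[shards.length - 1];
    if (counts[from] - counts[to] < limit) break;
    counts[from] -= 1;
    counts[to] += 1;
    moves.push({ from, to });
  }
  return { counts, moves };
}

const balanced = balance({ shard1: 8, shard2: 2 });
console.log(balanced.counts, `${balanced.moves.length} migrations`);
```

Each simulated move stands for one chunk migration, which is the expensive, I/O-heavy step that the chunk size discussion above is trying to keep under control.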

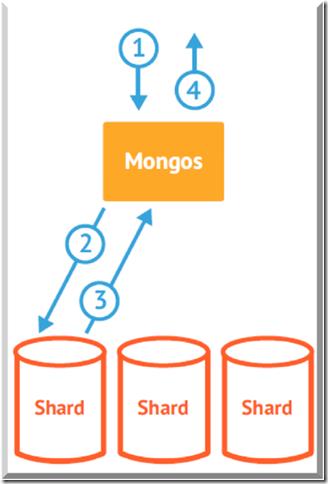

2.4、mongos路由功能

1)当数据写入时,MongoDB Cluster根据分片键设计写入数据。

2)当外部语句发起数据查询时,MongoDB根据数据分布自动路由至指定节点返回数据。

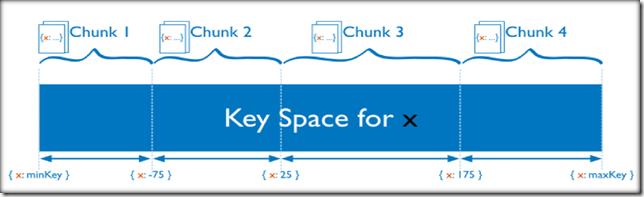

2.5、分片键shard key

1)必须为分片collection 定义分片键。

2)基于一个或多个列(类似一个索引)。

3)分片键定义数据空间。

4)想象key space 类似一条线上一个点数据。

5)一个key range 是一条线上一段数据。

6)我们可以按照分片键进行Range和Hash分片

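2.6 Range Sharding

A sharded cluster can spread the data of a single collection across multiple shards. The user chooses a field of the collection's documents as the shard key, and the documents are partitioned by ranges of that key (range sharding).

2.7 Hashed Sharding

Hashed sharding complements range sharding. It scatters documents pseudo-randomly across chunks, which scales write capacity well and makes up for the weakness of range sharding. It cannot serve range queries efficiently, however: every range query has to be sent to all shards to find the matching documents.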
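The difference between the two strategies shows up clearly with monotonically increasing keys. The sketch below routes the 1,000 most recent ids of an increasing sequence to two shards. It is a simulation under stated assumptions: FNV-1a is used as a stand-in hash (it is not the function MongoDB uses for hashed indexes), and the single range boundary at 5000 is invented for the example.

```javascript
// Stand-in 32-bit hash (FNV-1a). MongoDB's hashed index uses a different function.
function fnv1a(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0;
  }
  return h;
}

// Range sharding: boundaries are ascending split points; ids >= boundary go to the next shard.
function rangeShard(id, boundaries) {
  let i = 0;
  while (i < boundaries.length && id >= boundaries[i]) i++;
  return i;
}

// Hashed sharding: the shard is chosen from the hash of the key.
function hashShard(id, shardCount) {
  return fnv1a(String(id)) % shardCount;
}

function countByShard(ids, shardCount, pickShard) {
  const counts = new Array(shardCount).fill(0);
  for (const id of ids) counts[pickShard(id)] += 1;
  return counts;
}

// The most recent inserts of an increasing id sequence.
const recentIds = [];
for (let id = 9001; id <= 10000; id++) recentIds.push(id);

const rangeCounts = countByShard(recentIds, 2, (id) => rangeShard(id, [5000]));
const hashCounts = countByShard(recentIds, 2, (id) => hashShard(id, 2));
console.log("range:", rangeCounts);
console.log("hash: ", hashCounts);
```

With range sharding every recent insert lands on the last shard, which is the write hot spot; with hashing the same inserts are spread over both shards, at the price that a query such as "ids between 9001 and 9100" can no longer be answered by one shard.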

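2.8 Sharding Caveats

1) The shard key is immutable.

2) The shard key must be indexed.

3) A shard key value is limited to 512 bytes.

4) The shard key is used to route queries.

5) Once a collection is sharded, MongoDB rejects inserts of documents that lack the shard key (inserting an empty value is not supported either).

2.9 Choosing a Shard Key

1) Monotonically increasing shard key

- Little movement of data files. (advantage)

- Because key values only increase, all insert I/O lands permanently on the last shard, which becomes a write hot spot.

- As the last shard grows, chunks keep being migrated from it to the earlier shards.

2) Random shard key

- Data is evenly distributed and insert I/O is spread across the shards. (advantage)

- Heavy random I/O that can overwhelm the disks.

3) Hybrid key

- Roughly increasing at the coarse level.

- Randomly distributed within a small range.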

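III. Sharded Cluster Configuration

3.1 Cluster Plan

10 instances: 38017-38026

1) config server:

- a three-member replica set (one primary, two secondaries; arbiters are not supported) on 38018-38020 (replica set name configReplSet)

2) shard nodes

- sh1: 38021-38023 (three members: one primary, one secondary, one arbiter; replica set name sh1)

- sh2: 38024-38026 (three members: one primary, one secondary, one arbiter; replica set name sh2)

3) mongos node

- 38017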

3.2 Shard Replica Set Configuration

1) Create the directories

mkdir -p /mongodb/38021/conf /mongodb/38021/log /mongodb/38021/data
mkdir -p /mongodb/38022/conf /mongodb/38022/log /mongodb/38022/data
mkdir -p /mongodb/38023/conf /mongodb/38023/log /mongodb/38023/data
mkdir -p /mongodb/38024/conf /mongodb/38024/log /mongodb/38024/data
mkdir -p /mongodb/38025/conf /mongodb/38025/log /mongodb/38025/data
mkdir -p /mongodb/38026/conf /mongodb/38026/log /mongodb/38026/data

2) Write the configuration files

For shard1:

cat > /mongodb/38021/conf/mongodb.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/38021/log/mongodb.log
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: /mongodb/38021/data
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 10.0.0.21,127.0.0.1
  port: 38021
replication:
  oplogSizeMB: 2048
  replSetName: sh1
sharding:
  clusterRole: shardsvr
processManagement:
  fork: true
EOF

cp /mongodb/38021/conf/mongodb.conf /mongodb/38022/conf/
cp /mongodb/38021/conf/mongodb.conf /mongodb/38023/conf/

sed 's#38021#38022#g' /mongodb/38022/conf/mongodb.conf -i
sed 's#38021#38023#g' /mongodb/38023/conf/mongodb.conf -i

For shard2:

cat > /mongodb/38024/conf/mongodb.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/38024/log/mongodb.log
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: /mongodb/38024/data
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 10.0.0.21,127.0.0.1
  port: 38024
replication:
  oplogSizeMB: 2048
  replSetName: sh2
sharding:
  clusterRole: shardsvr
processManagement:
  fork: true
EOF

cp /mongodb/38024/conf/mongodb.conf /mongodb/38025/conf/
cp /mongodb/38024/conf/mongodb.conf /mongodb/38026/conf/

sed 's#38024#38025#g' /mongodb/38025/conf/mongodb.conf -i
sed 's#38024#38026#g' /mongodb/38026/conf/mongodb.conf -i

3) Start the shard nodes

mongod -f /mongodb/38021/conf/mongodb.conf
mongod -f /mongodb/38022/conf/mongodb.conf
mongod -f /mongodb/38023/conf/mongodb.conf
mongod -f /mongodb/38024/conf/mongodb.conf
mongod -f /mongodb/38025/conf/mongodb.conf
mongod -f /mongodb/38026/conf/mongodb.conf

[mongod@mongo ~]$ ps -ef|grep mongo
root 3386 3108 0 19:02 pts/2 00:00:00 su - mongod
mongod 3387 3386 0 19:02 pts/2 00:00:00 -bash
mongod 4836 1 5 20:43 ? 00:00:00 mongod -f /mongodb/38021/conf/mongodb.conf
mongod 4864 1 5 20:43 ? 00:00:00 mongod -f /mongodb/38022/conf/mongodb.conf
mongod 4892 1 5 20:43 ? 00:00:00 mongod -f /mongodb/38023/conf/mongodb.conf
mongod 4920 1 5 20:43 ? 00:00:00 mongod -f /mongodb/38024/conf/mongodb.conf
mongod 4948 1 6 20:43 ? 00:00:00 mongod -f /mongodb/38025/conf/mongodb.conf
mongod 4976 1 6 20:43 ? 00:00:00 mongod -f /mongodb/38026/conf/mongodb.conf
mongod 5002 3387 0 20:44 pts/2 00:00:00 ps -ef
mongod 5003 3387 0 20:44 pts/2 00:00:00 grep --color=auto mongo

4) Build the replica sets

On shard1:

mongo --port 38021
use admin
config = {_id: 'sh1', members: [
    {_id: 0, host: '10.0.0.21:38021'},
    {_id: 1, host: '10.0.0.21:38022'},
    {_id: 2, host: '10.0.0.21:38023', "arbiterOnly": true}]
}
rs.initiate(config)

On shard2:

mongo --port 38024
use admin
config = {_id: 'sh2', members: [
    {_id: 0, host: '10.0.0.21:38024'},
    {_id: 1, host: '10.0.0.21:38025'},
    {_id: 2, host: '10.0.0.21:38026', "arbiterOnly": true}]
}
rs.initiate(config)

3.3 Config Server Configuration

1) Create the directories

mkdir -p /mongodb/38018/conf /mongodb/38018/log /mongodb/38018/data
mkdir -p /mongodb/38019/conf /mongodb/38019/log /mongodb/38019/data
mkdir -p /mongodb/38020/conf /mongodb/38020/log /mongodb/38020/data

2) Write the configuration files

cat > /mongodb/38018/conf/mongodb.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/38018/log/mongodb.log
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: /mongodb/38018/data
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 10.0.0.21,127.0.0.1
  port: 38018
replication:
  oplogSizeMB: 2048
  replSetName: configReplSet
sharding:
  clusterRole: configsvr   # fixed value for config servers, do not change
processManagement:
  fork: true
EOF

cp /mongodb/38018/conf/mongodb.conf /mongodb/38019/conf/
cp /mongodb/38018/conf/mongodb.conf /mongodb/38020/conf/

sed 's#38018#38019#g' /mongodb/38019/conf/mongodb.conf -i
sed 's#38018#38020#g' /mongodb/38020/conf/mongodb.conf -i

3) Start the config server nodes

mongod -f /mongodb/38018/conf/mongodb.conf
mongod -f /mongodb/38019/conf/mongodb.conf
mongod -f /mongodb/38020/conf/mongodb.conf

4) Configure the replica set

mongo --port 38018
use admin
config = {_id: 'configReplSet', members: [
    {_id: 0, host: '10.0.0.21:38018'},
    {_id: 1, host: '10.0.0.21:38019'},
    {_id: 2, host: '10.0.0.21:38020'}]
}
rs.initiate(config)

Note: older versions allowed a single-node config server, although a replica set was the official recommendation. Since MongoDB 3.4 the config servers must be a replica set, and that replica set cannot contain an arbiter.

3.4 mongos Configuration

1) Create the directories

mkdir -p /mongodb/38017/conf /mongodb/38017/log

2) Write the configuration file

cat > /mongodb/38017/conf/mongos.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/38017/log/mongos.log
  logAppend: true
net:
  bindIp: 10.0.0.21,127.0.0.1
  port: 38017
sharding:
  configDB: configReplSet/10.0.0.21:38018,10.0.0.21:38019,10.0.0.21:38020   # config server replica set
processManagement:
  fork: true
EOF

3) Start mongos

mongos -f /mongodb/38017/conf/mongos.conf

4) Check all the nodes

[mongod@mongo ~]$ ps -ef|grep mongo
root 3386 3108 0 19:02 pts/2 00:00:00 su - mongod
mongod 3387 3386 0 19:02 pts/2 00:00:00 -bash
mongod 4836 1 0 20:43 ? 00:00:06 mongod -f /mongodb/38021/conf/mongodb.conf
mongod 4864 1 0 20:43 ? 00:00:06 mongod -f /mongodb/38022/conf/mongodb.conf
mongod 4892 1 0 20:43 ? 00:00:04 mongod -f /mongodb/38023/conf/mongodb.conf
mongod 4920 1 0 20:43 ? 00:00:06 mongod -f /mongodb/38024/conf/mongodb.conf
mongod 4948 1 0 20:43 ? 00:00:06 mongod -f /mongodb/38025/conf/mongodb.conf
mongod 4976 1 0 20:43 ? 00:00:04 mongod -f /mongodb/38026/conf/mongodb.conf
mongod 5292 1 1 20:50 ? 00:00:03 mongod -f /mongodb/38018/conf/mongodb.conf
mongod 5326 1 1 20:50 ? 00:00:03 mongod -f /mongodb/38019/conf/mongodb.conf
mongod 5360 1 1 20:50 ? 00:00:03 mongod -f /mongodb/38020/conf/mongodb.conf
mongod 5598 1 0 20:55 ? 00:00:00 mongos -f /mongodb/38017/conf/mongos.conf
mongod 5630 3387 0 20:55 pts/2 00:00:00 ps -ef
mongod 5631 3387 0 20:55 pts/2 00:00:00 grep --color=auto mongo

3.5 Sharded Cluster Operations

3.5.1 Connecting to the admin Database on mongos

# su - mongod
$ mongo 10.0.0.21:38017/admin
mongos>

3.5.2 Adding Shards

db.runCommand( { addshard : "sh1/10.0.0.21:38021,10.0.0.21:38022,10.0.0.21:38023",name:"shard1"} )

db.runCommand( { addshard : "sh2/10.0.0.21:38024,10.0.0.21:38025,10.0.0.21:38026",name:"shard2"} )

Session transcript:

mongos> db.runCommand( { addshard : "sh1/10.0.0.21:38021,10.0.0.21:38022,10.0.0.21:38023",name:"shard1"} )

{

"shardAdded" : "shard1",

"ok" : 1,

"operationTime" : Timestamp(1570194077, 7),

"$clusterTime" : {

"clusterTime" : Timestamp(1570194077, 7),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> db.runCommand( { addshard : "sh2/10.0.0.21:38024,10.0.0.21:38025,10.0.0.21:38026",name:"shard2"} )

{

"shardAdded" : "shard2",

"ok" : 1,

"operationTime" : Timestamp(1570194077, 11),

"$clusterTime" : {

"clusterTime" : Timestamp(1570194077, 11),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos>

3.5.3 Listing Shards

mongos> db.runCommand( { listshards : 1 } )

Session transcript:

mongos> db.runCommand( { listshards : 1 } )

{

"shards" : [

{

"_id" : "shard1",

"host" : "sh1/10.0.0.21:38021,10.0.0.21:38022",

"state" : 1

},

{

"_id" : "shard2",

"host" : "sh2/10.0.0.21:38024,10.0.0.21:38025",

"state" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1570194225, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1570194225, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

3.5.4 Checking Overall Status

sh.status();

Session transcript:

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5d97409fe2c89418706411cd")

}

shards:

{ "_id" : "shard1", "host" : "sh1/10.0.0.21:38021,10.0.0.21:38022", "state" : 1 }

{ "_id" : "shard2", "host" : "sh2/10.0.0.21:38024,10.0.0.21:38025", "state" : 1 }

active mongoses:

"3.6.11-14-g48d999c" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

mongos>

3.6 Range Sharding Configuration

Manually shard the large collection vast in the test database.

1) Enable sharding for the database

[mongod@mongo ~]$ mongo --port 38017 admin

mongos> db.runCommand( { enablesharding : "test" } )

{

"ok" : 1,

"operationTime" : Timestamp(1570195215, 8),

"$clusterTime" : {

"clusterTime" : Timestamp(1570195215, 8),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

2) Create an index on the range shard key that the collection will be sharded on

use test

db.vast.ensureIndex( { id: 1 } )

#---------------------------------------------------------

mongos> use test

switched to db test

mongos> db

test

mongos> db.vast.ensureIndex( { id: 1 } )

{

"raw" : {

"sh2/10.0.0.21:38024,10.0.0.21:38025" : {

"createdCollectionAutomatically" : true,

"numIndexesBefore" : 1,

"numIndexesAfter" : 2,

"ok" : 1

}

},

"ok" : 1,

"operationTime" : Timestamp(1570195422, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1570195422, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos>

3) Shard the collection

use admin

db.runCommand( { shardcollection : "test.vast",key : {id: 1} } )

#------------------------------------------------------------

mongos> use admin

switched to db admin

mongos> db

admin

mongos> db.runCommand( { shardcollection : "test.vast",key : {id: 1} } )

{

"collectionsharded" : "test.vast",

"collectionUUID" : UUID("fedf1f65-9215-4786-b54c-9449521abead"),

"ok" : 1,

"operationTime" : Timestamp(1570195519, 11),

"$clusterTime" : {

"clusterTime" : Timestamp(1570195519, 11),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

4) Load data to verify the sharded collection

mongos> use test

switched to db test

mongos> db

test

mongos> for(i=1;i<500000;i++){ db.vast.insert({"id":i,"name":"shenzheng","age":70,"date":new Date()}); }

mongos> db.vast.stats()

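5) Check the result on each shard

shard1:
mongo --port 38021
use test
db.vast.count();

shard2:
mongo --port 38024
use test
db.vast.count();

#Result: data first fills one shard (up to the 64 MB chunk size); once that is full, data goes to the other shard

3.7 Hashed Sharding

Shard the large collection vast in the oldboy database by hash.

1) Enable sharding for the oldboy database

mongo --port 38017 admin
use admin
db.runCommand( { enablesharding : "oldboy" } )

Session transcript: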

[mongod@mongo ~]$ mongo --port 38017 admin

mongos> use admin

switched to db admin

mongos> db.runCommand( { enablesharding : "oldboy" } )

{

"ok" : 1,

"operationTime" : Timestamp(1570196229, 277),

"$clusterTime" : {

"clusterTime" : Timestamp(1570196229, 283),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

2) Create a hashed index on the vast collection in the oldboy database

use oldboy

db.vast.ensureIndex( { id: "hashed" } )

Session transcript:

mongos> use oldboy

switched to db oldboy

mongos> db.vast.ensureIndex( { id: "hashed" } )

{

"raw" : {

"sh1/10.0.0.21:38021,10.0.0.21:38022" : {

"createdCollectionAutomatically" : true,

"numIndexesBefore" : 1,

"numIndexesAfter" : 2,

"ok" : 1

}

},

"ok" : 1,

"operationTime" : Timestamp(1570196318, 500),

"$clusterTime" : {

"clusterTime" : Timestamp(1570196318, 527),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

3) Shard the collection

use admin

sh.shardCollection( "oldboy.vast", { id: "hashed" } )

Session transcript:

mongos> use admin

switched to db admin

mongos> sh.shardCollection( "oldboy.vast", { id: "hashed" } )

{

"collectionsharded" : "oldboy.vast",

"collectionUUID" : UUID("f1152463-24dd-47f9-8107-fa981b556fc5"),

"ok" : 1,

"operationTime" : Timestamp(1570196393, 417),

"$clusterTime" : {

"clusterTime" : Timestamp(1570196393, 424),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

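}

4) Insert about 100,000 rows as a test (the counts below add up to 99,999 documents)

use oldboy

for(i=1;i<100000;i++){ db.vast.insert({"id":i,"name":"shenzheng","age":70,"date":new Date()}); }

5) Check the hashed sharding result

mongo --port 38021
use oldboy
db.vast.count();
sh1:PRIMARY> db.vast.count();
50393

mongo --port 38024
use oldboy
db.vast.count();
sh2:PRIMARY> db.vast.count();
49606

#The data is spread evenly over the two shards

3.8 Other Commands

1) Check whether you are connected to a sharded cluster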

db.runCommand({ isdbgrid : 1})

mongos> db.runCommand({ isdbgrid : 1})

{

"isdbgrid" : 1,

"hostname" : "mongo",

"ok" : 1,

"operationTime" : Timestamp(1570196870, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1570196870, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

2) List the databases that have sharding enabled

use config

db.databases.find( { "partitioned": true } )

Or:

db.databases.find() //list the sharding status of every database

mongos> use config

switched to db config

mongos> db.databases.find( { "partitioned": true } )

{ "_id" : "test", "primary" : "shard2", "partitioned" : true }

{ "_id" : "oldboy", "primary" : "shard1", "partitioned" : true }

mongos> db.databases.find()

{ "_id" : "test", "primary" : "shard2", "partitioned" : true }

{ "_id" : "oldboy", "primary" : "shard1", "partitioned" : true }

3) View the shard keys of the sharded collections

mongos> db.collections.find().pretty()

{

"_id" : "config.system.sessions",

"lastmodEpoch" : ObjectId("5d9743a2e2c8941870641fe4"),

"lastmod" : ISODate("1970-02-19T17:02:47.296Z"),

"dropped" : false,

"key" : {

"_id" : 1

},

"unique" : false,

"uuid" : UUID("74bb6ba3-d394-4dd4-ac65-fd8acb5008e3")

}

{

"_id" : "test.vast",

"lastmodEpoch" : ObjectId("5d97483fe2c8941870643ba4"),

"lastmod" : ISODate("1970-02-19T17:02:47.296Z"),

"dropped" : false,

"key" : {

"id" : 1

},

"unique" : false,

"uuid" : UUID("fedf1f65-9215-4786-b54c-9449521abead")

}

{

"_id" : "oldboy.vast",

"lastmodEpoch" : ObjectId("5d974ba9e2c8941870645292"),

"lastmod" : ISODate("1970-02-19T17:02:47.297Z"),

"dropped" : false,

"key" : {

"id" : "hashed"

},

"unique" : false,

"uuid" : UUID("f1152463-24dd-47f9-8107-fa981b556fc5")

}

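4) View detailed sharding information

db.printShardingStatus()   // or: sh.status()

5) Remove a shard (use with care)

#1. Check whether the balancer is enabled

sh.getBalancerState()

#2. Remove shard2 (use with care)

mongos> db.runCommand( { removeShard: "shard2" } )

#Note: removing a shard immediately triggers the balancer, which drains the shard's chunks to the remaining shards.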

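3.9 Balancer Operations

3.9.1 Overview

The balancer is an important part of a sharded cluster: it automatically inspects the chunk distribution across all shards and migrates chunks as needed.

3.9.2 When It Runs

1) It runs automatically and tries to migrate when the system is not busy.

2) When a shard is being removed, migration starts immediately.

3) If a balancing window is configured, the balancer runs only inside that window.

4) The balancer can be stopped and started when needed (for example, during backups).

mongos> sh.stopBalancer()
mongos> sh.startBalancer()

3.9.3 Scheduling the Balancing Window

Official documentation: https://docs.mongodb.com/manual/tutorial/manage-sharded-cluster-balancer/#schedule-the-balancing-window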

use config

sh.setBalancerState( true )

db.settings.update({ _id : "balancer" }, { $set : { activeWindow : { start : "3:00", stop : "5:00" } } }, true )

sh.getBalancerWindow()

sh.status()
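The effect of activeWindow can be pictured as a simple time-of-day check. The sketch below is an illustration only: `inBalancerWindow` is a hypothetical helper, it works on "H:MM" strings like the ones stored above, and treating the stop time as exclusive is an assumption of this example rather than documented behavior.

```javascript
// Convert "H:MM" or "HH:MM" to minutes since midnight.
function toMinutes(hhmm) {
  const [h, m] = hhmm.split(":").map(Number);
  return h * 60 + m;
}

// Is the given time of day inside the window? Handles windows that wrap past midnight.
function inBalancerWindow(now, window) {
  const t = toMinutes(now);
  const start = toMinutes(window.start);
  const stop = toMinutes(window.stop);
  if (start <= stop) return t >= start && t < stop;
  return t >= start || t < stop; // e.g. 23:00 -> 2:00
}

const nightly = { start: "3:00", stop: "5:00" };
console.log(inBalancerWindow("4:30", nightly));
console.log(inBalancerWindow("12:00", nightly));
console.log(inBalancerWindow("23:30", { start: "23:00", stop: "2:00" }));
```

A window of 3:00 to 5:00 confines chunk migrations to a low-traffic period, at the cost that a badly unbalanced cluster may need several nights to even out.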

Example session:

mongos> use config

switched to db config

mongos> sh.setBalancerState( true )

{

"ok" : 1,

"operationTime" : Timestamp(1570197638, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1570197638, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> db.settings.update({ _id : "balancer" }, { $set : { activeWindow : { start : "3:00", stop : "5:00" } } }, true )

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

mongos> sh.getBalancerWindow()

{ "start" : "3:00", "stop" : "5:00" }

3.9.4 Per-Collection Balancing

#Disable balancing for a collection

sh.disableBalancing("students.grades")

#Enable balancing for a collection

sh.enableBalancing("students.grades")

#Check whether balancing is enabled or disabled for a collection

db.getSiblingDB("config").collections.findOne({_id : "students.grades"}).noBalance;
