From NN to RNN to LSTM: Gated Recurrent Units

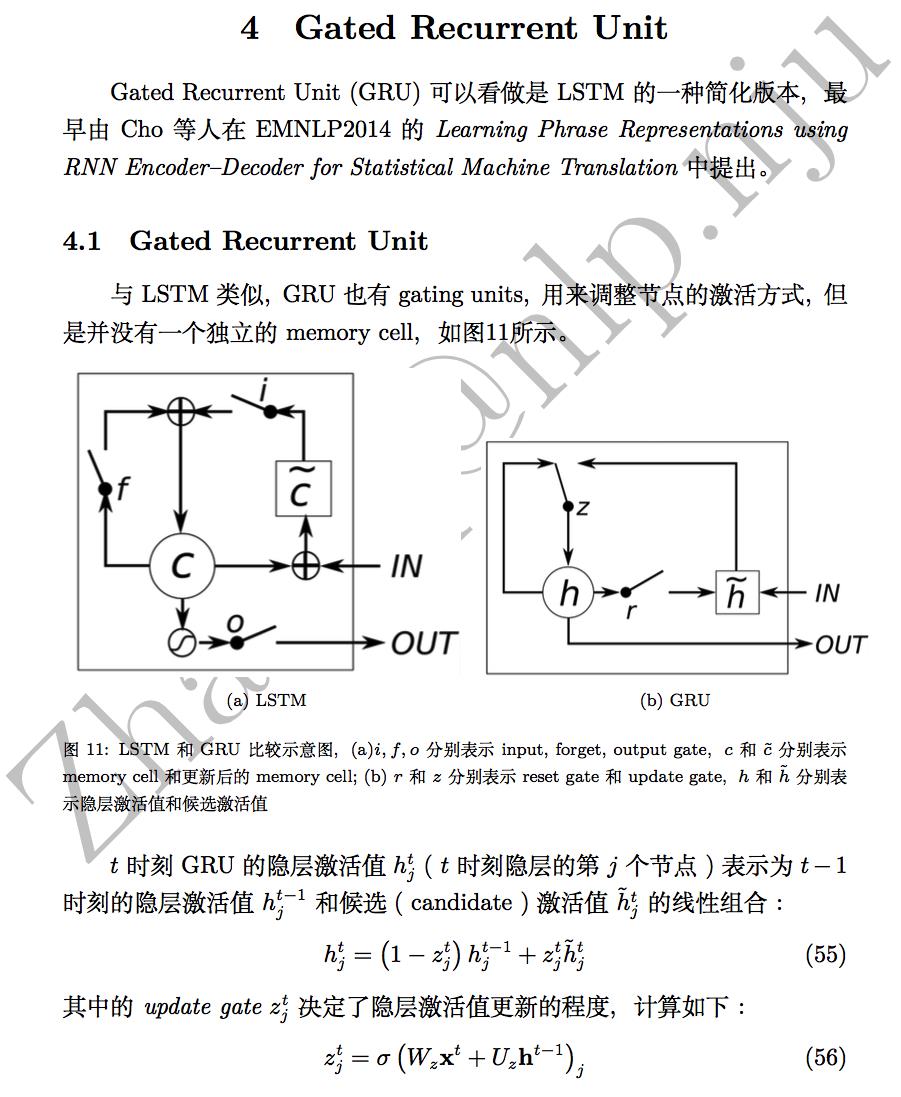

This post gives a brief introduction to the Gated Recurrent Unit (GRU).
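To make the GRU update concrete, here is a minimal pure-Python sketch of a single GRU step. It follows the formulation in Cho et al. (2014): reset gate `r = σ(W_r x + U_r h_{t-1})`, update gate `z = σ(W_z x + U_z h_{t-1})`, candidate state `h̃ = tanh(W x + U (r ⊙ h_{t-1}))`, and new state `h_t = z ⊙ h_{t-1} + (1 − z) ⊙ h̃`. Chung et al. (2014) write the last line with the roles of `z` and `1 − z` swapped, which is the same model under a relabelled gate. The function names `gru_step` and `init_params`, the weight-dictionary layout, the omission of biases, and the random initialisation are all illustrative assumptions, not code from the cited papers.

```python
import math
import random

# Hypothetical, minimal GRU step in the Cho et al. (2014) convention.
# Vectors are plain lists, matrices are lists of rows, biases are omitted.


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m, v):
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def vadd(a, b):
    return [x + y for x, y in zip(a, b)]


def gru_step(x, h_prev, p):
    """Return h_t from input x_t, previous state h_{t-1}, and weight dict p."""
    # Reset gate: r = sigmoid(W_r x + U_r h_prev)
    r = [sigmoid(v) for v in vadd(matvec(p["W_r"], x), matvec(p["U_r"], h_prev))]
    # Update gate: z = sigmoid(W_z x + U_z h_prev)
    z = [sigmoid(v) for v in vadd(matvec(p["W_z"], x), matvec(p["U_z"], h_prev))]
    # Candidate state: r scales how much of the previous state feeds into it
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    h_tilde = [math.tanh(v) for v in vadd(matvec(p["W"], x), matvec(p["U"], reset_h))]
    # Interpolation: z near 1 keeps the old state, z near 0 adopts the candidate
    return [zi * hp + (1.0 - zi) * ht for zi, hp, ht in zip(z, h_prev, h_tilde)]


def init_params(input_size, hidden_size, seed=0):
    """Small uniform random weights; the scheme is an arbitrary assumption."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for gate in ("r", "z"):
        p[f"W_{gate}"] = mat(hidden_size, input_size)
        p[f"U_{gate}"] = mat(hidden_size, hidden_size)
    p["W"] = mat(hidden_size, input_size)
    p["U"] = mat(hidden_size, hidden_size)
    return p


# Run a two-step sequence through the cell, starting from a zero state.
params = init_params(input_size=3, hidden_size=4)
h = [0.0] * 4
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h = gru_step(x, h, params)
print(h)
```

A quick sanity check: with every weight set to zero, both gates evaluate to 0.5 and the candidate is tanh(0) = 0, so one step simply halves the previous state. Because `h_t` is always a convex combination of the previous state and a tanh output, a state that starts at zero stays strictly inside (−1, 1).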

When reposting, please credit the source: http://blog.csdn.net/u011414416/article/details/51433875

This post draws on the following papers:

Kyunghyun Cho, Bart van Merriënboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. 2014. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1724-1734.

Junyoung Chung, Caglar Gulcehre, KyungHyun Cho, and Yoshua Bengio. 2014. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv:1412.3555.