Data Augmentation for Object Detection: The DOTA Dataset

Posted zstar-_


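Preface

I previously wrote up how to augment a YOLO dataset with XML annotations using imgaug. The DOTA dataset, however, stores rotated-box annotations in txt files, so that approach cannot be applied directly and a different route is needed.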

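Introduction to the DOTA Dataset

DOTA stands for: Dataset for Object deTection in Aerial images

DOTA v1.0 contains 2,806 images of up to roughly 4000 × 4000 pixels, with 188,282 annotated objects in total.

DOTA paper: https://arxiv.org/pdf/1711.10398.pdf

Dataset website: https://captain-whu.github.io/DOTA/dataset.html

DOTA has been released in three versions: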

DOTA v1.0

- Number of categories: 15

- Category names: plane, ship, storage tank, baseball diamond, tennis court, basketball court, ground track field, harbor, bridge, large vehicle, small vehicle, helicopter, roundabout, soccer ball field, swimming pool

DOTA v1.5

- Number of categories: 16

- Category names: plane, ship, storage tank, baseball diamond, tennis court, basketball court, ground track field, harbor, bridge, large vehicle, small vehicle, helicopter, roundabout, soccer ball field, swimming pool, container crane

DOTA v2.0

- Number of categories: 18

- Category names: plane, ship, storage tank, baseball diamond, tennis court, basketball court, ground track field, harbor, bridge, large vehicle, small vehicle, helicopter, roundabout, soccer ball field, swimming pool, container crane, airport, helipad

A backup of DOTA v2.0 is available on my GitHub:

https://github.com/zstar1003/Dataset

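This demonstration uses a single image from the DOTA dataset. The original image is shown below:

Its labels are as follows:

Each object is described by 10 values. The first 8 are the x and y coordinates of the four corners of the box, the 9th is the object category, and the 10th is the detection difficulty (0 for easy, 1 for difficult).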

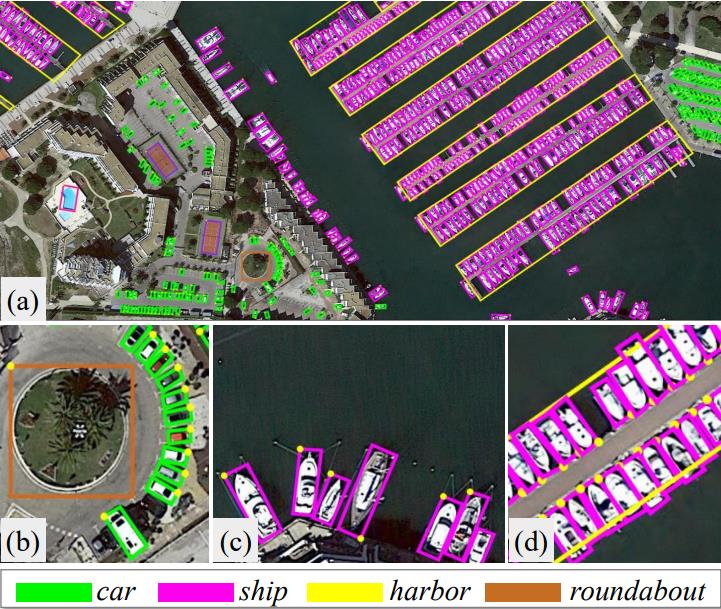
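To make the 10-field layout concrete, here is a minimal parsing sketch. The helper name `parse_dota_line`, the `DotaObject` type, and the sample line are hypothetical and only serve as an illustration; they are not part of the original scripts.

```python
from typing import List, NamedTuple, Tuple


class DotaObject(NamedTuple):
    corners: List[Tuple[float, float]]  # four (x, y) corner points
    category: str
    difficulty: int  # 0 = easy, 1 = difficult


def parse_dota_line(line: str) -> DotaObject:
    """Parse one 10-field annotation line (hypothetical helper)."""
    fields = line.split()
    if len(fields) != 10:
        raise ValueError(f"expected 10 fields, got {len(fields)}")
    coords = [float(v) for v in fields[:8]]
    # Pair up x1 y1 x2 y2 ... into (x, y) tuples
    corners = list(zip(coords[0::2], coords[1::2]))
    return DotaObject(corners, fields[8], int(fields[9]))


# Made-up sample line in the format described above
obj = parse_dota_line("2753 2408 2861 2385 2888 2468 2805 2502 plane 0")
print(obj.category, obj.difficulty, obj.corners)
```

Rejecting lines that do not have exactly 10 fields is a design choice of this sketch: it fails loudly on blank or metadata lines instead of silently misreading them.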

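The labels are visualized with visualization.py from JDet; the result is shown below:

Note: the visualization code requires integer coordinates, so the coordinates written out later are converted to integers, which loses some precision.

Data Augmentation and Visualization

The augmentation code is mainly based on this blog post: "目标识别小样本数据扩增" (data augmentation for small-sample object recognition)

Adjusting Brightness

Brightness is adjusted with skimage.exposure.adjust_gamma: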
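As a rough illustration of what gamma correction does to a single 8-bit intensity, the sketch below applies out = 255 * (in / 255) ** gamma by hand. The function name `gamma_pixel` is hypothetical, and the sketch assumes a gain of 1.

```python
def gamma_pixel(value: int, gamma: float) -> int:
    """Gamma-correct one 8-bit intensity: scale to [0, 1], raise to gamma, scale back."""
    return round(255 * (value / 255) ** gamma)


# gamma < 1 lifts mid-tones (brighter), gamma > 1 lowers them (darker);
# pure black and pure white stay where they are.
for gamma in (0.5, 1.0, 1.5):
    print(gamma, [gamma_pixel(v, gamma) for v in (0, 64, 128, 255)])
```

Because only pixel values change, the label file can be copied unchanged for this augmentation.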

    import math
    import os
    import random
    import shutil

    import cv2 as cv
    import numpy as np
    from skimage import exposure

    # Adjust brightness
    def changeLight(img, inputtxt, outputiamge, outputtxt):
        # random.seed(int(time.time()))
        flag = random.uniform(0.5, 1.5)  # gamma: > 1 darkens, < 1 brightens
        label = round(flag, 2)
        (filepath, tempfilename) = os.path.split(inputtxt)
        (filename, extension) = os.path.splitext(tempfilename)
        outputiamge = os.path.join(outputiamge, filename + "_" + str(label) + ".png")
        outputtxt = os.path.join(outputtxt, filename + "_" + str(label) + extension)
        # Apply the sampled gamma so the value in the file name matches the image
        ima_gamma = exposure.adjust_gamma(img, flag)
        shutil.copyfile(inputtxt, outputtxt)  # boxes do not move, so copy the labels
        cv.imwrite(outputiamge, ima_gamma)

Adding Gaussian Noise

Before Gaussian noise is added, the pixel values must first be normalized (divided by 255).
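The normalize, add noise, clip, rescale sequence can be sketched on a plain list of pixel values. This is a standard-library illustration with a hypothetical helper name (`add_gaussian_noise`), not the array-based function used in this post.

```python
import random


def add_gaussian_noise(pixels, mean=0.0, var=0.01, seed=None):
    """Add Gaussian noise to 8-bit pixel values (illustrative sketch)."""
    rng = random.Random(seed)
    sigma = var ** 0.5  # standard deviation from variance
    noisy = []
    for p in pixels:
        value = p / 255 + rng.gauss(mean, sigma)  # normalize, then add noise
        value = min(max(value, 0.0), 1.0)         # clip back into [0, 1]
        noisy.append(round(value * 255))          # rescale to 0..255
    return noisy


print(add_gaussian_noise([0, 64, 128, 255], seed=0))
```

Clipping to [0, 1] before rescaling matters: a negative value multiplied by 255 does not fit an unsigned 8-bit pixel.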

    # Add Gaussian noise
    def gasuss_noise(image, inputtxt, outputiamge, outputtxt, mean=0, var=0.01):
        '''
        mean : mean of the noise
        var : variance of the noise
        '''
        image = np.array(image / 255, dtype=float)  # normalize to [0, 1]
        noise = np.random.normal(mean, var ** 0.5, image.shape)
        out = image + noise
        # The image is normalized to [0, 1], so clip to that range; negative
        # values would not survive the uint8 conversion below
        out = np.clip(out, 0.0, 1.0)
        out = np.uint8(out * 255)
        (filepath, tempfilename) = os.path.split(inputtxt)
        (filename, extension) = os.path.splitext(tempfilename)
        outputiamge = os.path.join(outputiamge, filename + "_gasunoise_" + str(mean) + "_" + str(var) + ".png")
        outputtxt = os.path.join(outputtxt, filename + "_gasunoise_" + str(mean) + "_" + str(var) + extension)
        shutil.copyfile(inputtxt, outputtxt)  # boxes do not move, so copy the labels
        cv.imwrite(outputiamge, out)

Adjusting Contrast

    # Adjust contrast
    def ContrastAlgorithm(rgb_img, inputtxt, outputiamge, outputtxt):
        img_shape = rgb_img.shape
        temp_imag = np.zeros(img_shape, dtype=np.uint8)
        for num in range(0, 3):
            # Enhance contrast by histogram normalization (a linear stretch)
            in_image = rgb_img[:, :, num]
            # Minimum and maximum pixel values of the input channel
            Imax = np.max(in_image)
            Imin = np.min(in_image)
            # Minimum and maximum output gray levels
            Omin, Omax = 0, 255
            if Imax == Imin:
                # Constant channel: nothing to stretch, and this avoids dividing by zero
                temp_imag[:, :, num] = in_image
                continue
            # Compute the coefficients a and b
            a = float(Omax - Omin) / (float(Imax) - float(Imin))
            b = Omin - a * float(Imin)
            # Linear transform of the channel
            out_image = a * in_image + b
            # Round and clip before converting back to uint8
            out_image = np.clip(np.round(out_image), Omin, Omax).astype(np.uint8)
            temp_imag[:, :, num] = out_image
        (filepath, tempfilename) = os.path.split(inputtxt)
        (filename, extension) = os.path.splitext(tempfilename)
        outputiamge = os.path.join(outputiamge, filename + "_contrastAlgorithm" + ".png")
        outputtxt = os.path.join(outputtxt, filename + "_contrastAlgorithm" + extension)
        shutil.copyfile(inputtxt, outputtxt)  # boxes do not move, so copy the labels
        cv.imwrite(outputiamge, temp_imag)
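The linear stretch used above maps the input range [Imin, Imax] onto [Omin, Omax] with out = a * in + b, where a = (Omax - Omin) / (Imax - Imin) and b = Omin - a * Imin. A small standard-library sketch of the same arithmetic on a list of values (the helper name `stretch` is hypothetical):

```python
def stretch(values, out_min=0, out_max=255):
    """Linearly map [min(values), max(values)] onto [out_min, out_max]."""
    in_min, in_max = min(values), max(values)
    if in_max == in_min:
        return list(values)  # constant input: nothing to stretch
    a = (out_max - out_min) / (in_max - in_min)
    b = out_min - a * in_min
    return [round(a * v + b) for v in values]


# A low-contrast channel occupying only 50..150 is spread over the full range
print(stretch([50, 75, 100, 150]))
```

The smallest input always lands on `out_min` and the largest on `out_max`; everything in between keeps its relative order.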

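Rotation

Rotation is essentially an affine transform. The function exposes an angle parameter, so the rotation angles can be customized.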
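The geometry can be sketched without any image library. The code below builds a 2x3 affine matrix that rotates a w × h image about its centre and re-centres it on a canvas large enough to hold the whole rotated image, then applies it to points. The sign convention (positive angle is counter-clockwise, y axis pointing down) is the one OpenCV documents for `cv.getRotationMatrix2D`; the helper names `rotation_matrix` and `apply_affine` are hypothetical.

```python
import math


def rotation_matrix(w, h, angle_deg, scale=1.0):
    """Return (2x3 matrix, new_w, new_h) for rotating a w x h image about its centre."""
    theta = math.radians(angle_deg)
    alpha = scale * math.cos(theta)
    beta = scale * math.sin(theta)
    # Size of the canvas that contains the whole rotated image
    new_w = abs(beta) * h + abs(alpha) * w
    new_h = abs(alpha) * h + abs(beta) * w
    # Translation chosen so the old centre (w/2, h/2) maps to the new centre
    tx = new_w / 2 - (alpha * w / 2 + beta * h / 2)
    ty = new_h / 2 - (-beta * w / 2 + alpha * h / 2)
    return [[alpha, beta, tx], [-beta, alpha, ty]], new_w, new_h


def apply_affine(m, x, y):
    """Apply a 2x3 affine matrix to the point (x, y)."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


m, new_w, new_h = rotation_matrix(400, 200, 30)
for corner in [(0, 0), (400, 0), (400, 200), (0, 200)]:
    print(corner, "->", apply_affine(m, *corner))
```

The same matrix that warps the image is applied to every box corner, which is why rotated-box labels stay aligned with the rotated pixels.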

    # Rotate
    def rotate_img_bbox(img, inputtxt, temp_outputiamge, temp_outputtxt, angle, scale=1):
        nAgree = angle  # list of rotation angles in degrees
        size = img.shape
        w = size[1]
        h = size[0]
        (filepath, tempfilename) = os.path.split(inputtxt)
        (filename, extension) = os.path.splitext(tempfilename)
        for numAngle in range(0, len(nAgree)):
            dRot = nAgree[numAngle] * np.pi / 180
            # Size of the canvas that holds the whole rotated image
            nw = (abs(np.sin(dRot) * h) + abs(np.cos(dRot) * w)) * scale
            nh = (abs(np.cos(dRot) * h) + abs(np.sin(dRot) * w)) * scale
            outputiamge = os.path.join(temp_outputiamge, filename + "_rotate_" + str(nAgree[numAngle]) + ".png")
            outputtxt = os.path.join(temp_outputtxt, filename + "_rotate_" + str(nAgree[numAngle]) + extension)
            rot_mat = cv.getRotationMatrix2D((nw * 0.5, nh * 0.5), nAgree[numAngle], scale)
            # Shift so the old image centre lands on the centre of the new canvas
            rot_move = np.dot(rot_mat, np.array([(nw - w) * 0.5, (nh - h) * 0.5, 0]))
            rot_mat[0, 2] += rot_move[0]
            rot_mat[1, 2] += rot_move[1]
            # Affine transform
            rotat_img = cv.warpAffine(img, rot_mat, (int(math.ceil(nw)), int(math.ceil(nh))), flags=cv.INTER_LANCZOS4)
            cv.imwrite(outputiamge, rotat_img)
            with open(inputtxt) as f, open(outputtxt, 'w') as save_txt:
                for line in f:
                    line = line.split()
                    if len(line) < 10:
                        continue  # skip blank lines and anything that is not a 10-field label
                    category = line[8]
                    dif = line[9]
                    coords = []
                    for i in range(0, 8, 2):
                        # Map each corner through the same matrix used for the image
                        point = np.dot(rot_mat, np.array([float(line[i]), float(line[i + 1]), 1]))
                        # Integer coordinates, as required by the visualization script
                        coords.append(str(int(round(point[0], 3))))
                        coords.append(str(int(round(point[1], 3))))
                    save_txt.write(" ".join(coords) + " " + category + " " + dif + "\n")

Flipping

Flipping is done with cv.flip.
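When the image is mirrored, the box corners have to be mirrored the same way. With `cv.flip`, a flip code of 1 mirrors the image left to right (around the vertical axis) and a flip code of 0 mirrors it top to bottom (around the horizontal axis). The sketch below shows the matching coordinate update; the helper name `flip_corners` is hypothetical, and it follows this post's `w - x` / `h - y` convention (for exact pixel indices the mirror is `w - 1 - x` and `h - 1 - y`).

```python
def flip_corners(corners, w, h, flip_code):
    """Mirror (x, y) corners to match cv.flip(img, flip_code) on a w x h image."""
    if flip_code == 1:   # around the vertical axis: x changes, y stays
        return [(w - x, y) for x, y in corners]
    if flip_code == 0:   # around the horizontal axis: y changes, x stays
        return [(x, h - y) for x, y in corners]
    raise ValueError("this sketch only handles flip codes 0 and 1")


box = [(10, 20), (30, 20), (30, 40), (10, 40)]
print(flip_corners(box, 100, 50, 1))
print(flip_corners(box, 100, 50, 0))
```

Flipping twice with the same code returns the original corners, which is a quick sanity check for any label transform of this kind.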

    # Flip
    def filp_pic_bboxes(img, inputtxt, outputiamge, outputtxt):
        (filepath, tempfilename) = os.path.split(inputtxt)
        (filename, extension) = os.path.splitext(tempfilename)
        output_vert_flip_img = os.path.join(outputiamge, filename + "_vert_flip" + ".png")
        output_vert_flip_txt = os.path.join(outputtxt, filename + "_vert_flip" + extension)
        output_horiz_flip_img = os.path.join(outputiamge, filename + "_horiz_flip" + ".png")
        output_horiz_flip_txt = os.path.join(outputtxt, filename + "_horiz_flip" + extension)
        h, w, _ = img.shape
        # Flip around the vertical axis (flip code 1 mirrors left to right)
        vert_flip_img = cv.flip(img, 1)
        cv.imwrite(output_vert_flip_img, vert_flip_img)
        # Flip around the horizontal axis (flip code 0 mirrors top to bottom)
        horiz_flip_img = cv.flip(img, 0)
        cv.imwrite(output_horiz_flip_img, horiz_flip_img)
        # ---------------------- adjust the bounding boxes ----------------------
        with open(inputtxt) as f, \
                open(output_vert_flip_txt, 'w') as save_vert_txt, \
                open(output_horiz_flip_txt, 'w') as save_horiz_txt:
            for line in f:
                line = line.split()
                if len(line) < 10:
                    continue  # skip blank lines and anything that is not a 10-field label
                category = line[8]
                dif = line[9]
                vert = []
                horiz = []
                for i in range(0, 8, 2):
                    x = float(line[i])
                    y = float(line[i + 1])
                    # Vertical-axis flip changes x only; horizontal-axis flip changes y only
                    vert += [str(int(round(w - x, 3))), str(int(y))]
                    horiz += [str(int(x)), str(int(round(h - y, 3)))]
                save_horiz_txt.write(" ".join(horiz) + " " + category + " " + dif + "\n")
                save_vert_txt.write(" ".join(vert) + " " + category + " " + dif + "\n")

Translation

Translating the image is also, in essence, an affine transform.
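For a shift by (dx, dy) the affine matrix is simply [[1, 0, dx], [0, 1, dy]]. The sketch below applies it to box corners and also computes how far the image can be shifted before any box leaves the frame, based on the smallest rectangle enclosing all boxes. Both helper names (`shift_corners`, `safe_shift_range`) and the idea of limiting the shift this way are assumptions made for illustration, not the post's own implementation.

```python
def shift_corners(corners, dx, dy):
    """Apply the affine matrix [[1, 0, dx], [0, 1, dy]] to each (x, y) corner."""
    m = [[1.0, 0.0, dx], [0.0, 1.0, dy]]
    return [(m[0][0] * x + m[0][1] * y + m[0][2],
             m[1][0] * x + m[1][1] * y + m[1][2]) for x, y in corners]


def safe_shift_range(boxes, w, h):
    """Return ((dx_min, dx_max), (dy_min, dy_max)) that keeps every box inside w x h."""
    xs = [x for box in boxes for x, _ in box]
    ys = [y for box in boxes for _, y in box]
    # The enclosing rectangle of all boxes limits how far the image may move
    return (-min(xs), w - max(xs)), (-min(ys), h - max(ys))


boxes = [[(10, 20), (30, 20), (30, 40), (10, 40)]]
print(safe_shift_range(boxes, 100, 50))
print(shift_corners(boxes[0], 5, -3))
```

On the image side, the same 2x3 matrix (as a float32 NumPy array) can be passed to `cv.warpAffine` together with the output size `(w, h)`.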

    # Shift
    def shift_pic_bboxes(img, inputtxt, outputiamge, outputtxt):
        w = img.shape[1]
        h = img.shape[0]
        x_min = w  # smallest box enclosing all target boxes after cropping
        x_max = 0
        y_min = h
        y_max = 0
        f = open(inputtxt)
        for line in f.readlines():
            line = line.split(" ")
            x1 = float(line[0])
            y1 = float(line[1])
            x2 = float(line[2])
            y2 = float(line[3])
            x3 = float(line[4])
            y3 = float(line[5])
            x4 = float(line[6])
            y4 = float(line[7])
            category = str(line