Download Hadoop (this guide uses the hadoop-2.8.2.tar.gz release).

Extract the archive and move it to /software:

tar -zxvf hadoop-2.8.2.tar.gz

mv hadoop-2.8.2 /software/hadoop

Add the following lines to /etc/profile:

export HADOOP_HOME=/software/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$PATH

export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH

Save the file and reload it: source /etc/profile

Edit hadoop-env.sh with vim $HADOOP_HOME/etc/hadoop/hadoop-env.sh and append this line at the end (adjust the path to wherever your JDK is installed):
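To confirm that the profile took effect in the current shell, a small check such as the one below can help. check_hadoop_env is a hypothetical helper, not part of Hadoop; it only inspects HADOOP_HOME, the bin/hadoop script, and which hadoop comes first on PATH.

```shell
# Hypothetical helper: sanity-check the variables exported in /etc/profile.
check_hadoop_env() {
  # Fail if HADOOP_HOME was not exported.
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set" >&2
    return 1
  fi
  # The launcher script must exist under HADOOP_HOME.
  if [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
    echo "$HADOOP_HOME/bin/hadoop not found" >&2
    return 1
  fi
  # command -v searches PATH, so this confirms the PATH export worked.
  if [ "$(command -v hadoop)" != "$HADOOP_HOME/bin/hadoop" ]; then
    echo "hadoop on PATH is not $HADOOP_HOME/bin/hadoop" >&2
    return 1
  fi
  echo "HADOOP_HOME=$HADOOP_HOME looks good"
}

# Usage: source /etc/profile && check_hadoop_env
```

Running hadoop version afterwards is a quick end-to-end test, since it needs both the PATH entry and a working Java installation.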

export JAVA_HOME=/software/jdk
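Setting JAVA_HOME in hadoop-env.sh matters because the daemons are usually launched over SSH and typically do not inherit the JAVA_HOME of your login shell. If you prefer to script this step instead of using vim, the sketch below appends the line only once, so rerunning it is harmless. set_java_home is a hypothetical helper, and it assumes the JDK directory contains bin/java.

```shell
# Hypothetical helper: append "export JAVA_HOME=<jdk>" to hadoop-env.sh once.
set_java_home() {
  env_file="$1"   # path to hadoop-env.sh
  jdk="$2"        # JDK install directory, e.g. /software/jdk
  # Refuse to write a JAVA_HOME that does not contain a java binary.
  if [ ! -x "$jdk/bin/java" ]; then
    echo "no java executable under $jdk/bin" >&2
    return 1
  fi
  line="export JAVA_HOME=$jdk"
  # -q quiet, -x whole-line match, -F fixed string: append only if missing.
  grep -qxF "$line" "$env_file" || printf '%s\n' "$line" >> "$env_file"
}

# Usage: set_java_home "$HADOOP_HOME/etc/hadoop/hadoop-env.sh" /software/jdk
```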

Edit core-site.xml: vim $HADOOP_HOME/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

Edit hdfs-site.xml (a single-node setup has only one DataNode, so set the replication factor to 1): vim $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
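Once Hadoop is on PATH, hdfs getconf -confKey dfs.replication prints the value Hadoop actually resolves. For a quick look without starting a JVM, the hypothetical site_value helper below reads a property straight from a site file; it assumes the simple layout used in this guide, where each <name> line is followed by its <value> line, and it is not a general XML parser.

```shell
# Hypothetical helper: print the <value> that follows <name>KEY</name>.
site_value() {
  # $1 = site XML file, $2 = property name
  awk -v key="$2" '
    # Remember that we have reached the requested property.
    index($0, "<name>" key "</name>") { found = 1 }
    # Print the first <value> at or after that point, then stop.
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$1"
}

# Usage: site_value "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.replication
```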

Edit mapred-site.xml. Hadoop 2.8.2 ships it only as a template, so copy the template first:

cp $HADOOP_HOME/etc/hadoop/mapred-site.xml.template $HADOOP_HOME/etc/hadoop/mapred-site.xml

vim $HADOOP_HOME/etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Edit yarn-site.xml: vim $HADOOP_HOME/etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

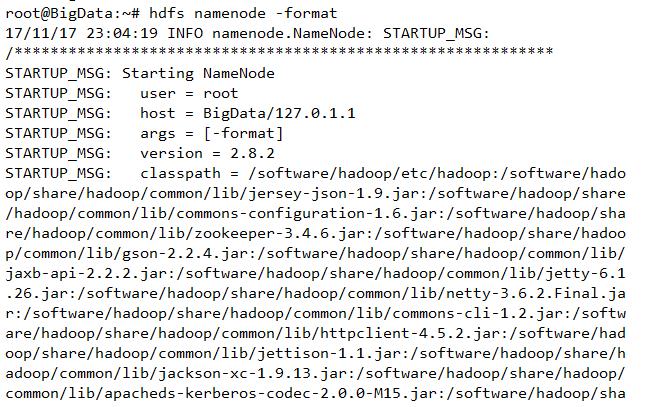
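The four edits above can also be done non-interactively. The sketch below uses two hypothetical helpers, write_site and write_pseudo_conf, that overwrite each file with a single property, so it is only appropriate on a fresh install where these files hold nothing else you need. It writes fs.defaultFS, the Hadoop 2.x name for the deprecated fs.default.name key; Hadoop 2.8.2 accepts either.

```shell
# Hypothetical helper: write a Hadoop site file holding exactly one property.
# The target file is overwritten.
write_site() {
  # $1 = output file, $2 = property name, $3 = property value
  cat > "$1" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>$2</name>
    <value>$3</value>
  </property>
</configuration>
EOF
}

# Write the four files used in this guide into a config directory.
write_pseudo_conf() {
  conf_dir="${1:-$HADOOP_HOME/etc/hadoop}"
  write_site "$conf_dir/core-site.xml"   fs.defaultFS                  hdfs://localhost:9000
  write_site "$conf_dir/hdfs-site.xml"   dfs.replication               1
  write_site "$conf_dir/mapred-site.xml" mapreduce.framework.name      yarn
  write_site "$conf_dir/yarn-site.xml"   yarn.nodemanager.aux-services mapreduce_shuffle
}

# Usage: write_pseudo_conf "$HADOOP_HOME/etc/hadoop"
```

Because mapred-site.xml is written directly, the template copy step becomes unnecessary when using this script.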

Initialize Hadoop by formatting the NameNode:

hdfs namenode -format

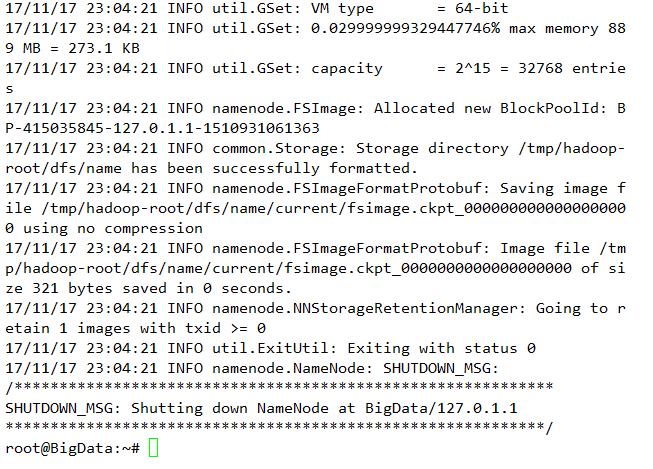
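Formatting should normally happen only once. Reformatting gives the NameNode a new clusterID, and a DataNode that still holds data from the old format typically refuses to start because of the mismatch. The hypothetical needs_format guard below checks for the VERSION file a formatted NameNode directory contains. It assumes the Hadoop 2.x defaults, where dfs.namenode.name.dir resolves to /tmp/hadoop-<user>/dfs/name; on systems that clean /tmp at boot, that directory may disappear and a fresh format is then required.

```shell
# Hypothetical guard: succeed (return 0) when the NameNode is not formatted yet.
needs_format() {
  # $1 = NameNode metadata directory (default assumes hadoop.tmp.dir is unchanged)
  name_dir="${1:-/tmp/hadoop-$USER/dfs/name}"
  # A formatted NameNode directory contains current/VERSION.
  [ ! -f "$name_dir/current/VERSION" ]
}

# Usage: needs_format && hdfs namenode -format
```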

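Start Hadoop. start-all.sh launches each daemon over SSH to localhost, so passwordless SSH to localhost must already work. (In Hadoop 2.x this script is marked deprecated and simply runs start-dfs.sh followed by start-yarn.sh.)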

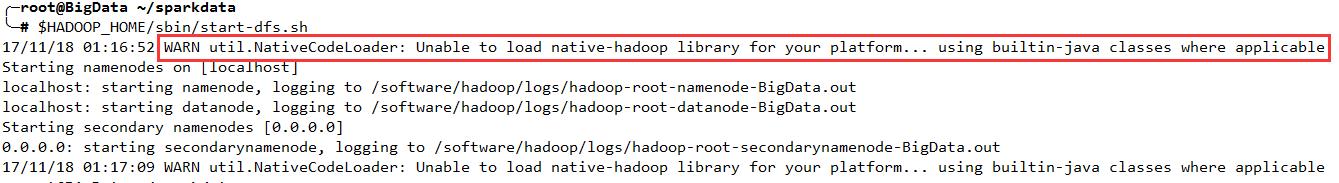

$HADOOP_HOME/sbin/start-all.sh

Run jps to check that the daemons are running:

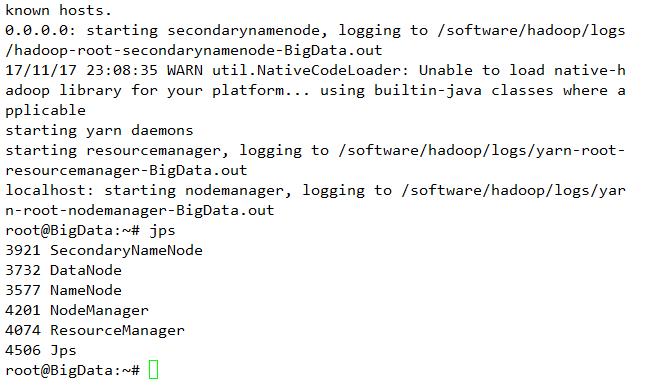
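In this pseudo-distributed setup, jps should list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager (plus Jps itself). The hypothetical check_daemons helper below reads jps output and names any daemon that is missing. It compares the class name column exactly, so NameNode is not mistaken for SecondaryNameNode.

```shell
# Hypothetical helper: read `jps` output on stdin, report missing daemons.
check_daemons() {
  out=$(cat)
  missing=0
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClass>", so match the class name as field 2.
    if ! printf '%s\n' "$out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}

# Usage: jps | check_daemons
```

If a daemon is missing, its log under $HADOOP_HOME/logs usually explains why.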

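Check the web UI by opening the YARN ResourceManager page in a browser: http://localhost:8088

Here the browser runs on the host machine and Hadoop runs in a virtual machine, so use the VM's IP address instead of localhost.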
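Besides the ResourceManager UI on port 8088, Hadoop 2.x serves the HDFS NameNode UI on port 50070 by default. The hypothetical helpers below print both URLs for a given host and, optionally, probe them with curl from the host machine; the IP you pass is your VM's address, and a status of 000 means nothing answered (often a firewall or a daemon that did not start).

```shell
# Hypothetical helper: print the two web UI URLs for a host.
hadoop_ui_urls() {
  host="${1:-localhost}"        # host or IP of the machine running Hadoop
  echo "http://$host:8088"      # YARN ResourceManager web UI
  echo "http://$host:50070"     # HDFS NameNode web UI (Hadoop 2.x default port)
}

# Hypothetical helper: print "<HTTP status> <URL>" for each UI.
probe_hadoop_ui() {
  for url in $(hadoop_ui_urls "$1"); do
    # -m 5 caps each request at 5 seconds; curl reports 000 if no response.
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url" || true)
    echo "$code $url"
  done
}

# Usage: probe_hadoop_ui <VM-IP>
```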

Stop Hadoop:

$HADOOP_HOME/sbin/stop-all.sh

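That completes the installation.

However, a warning appears at startup saying that Hadoop cannot load its native library.

Fix:

Add the following to /etc/profile: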

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"

Save the file and reload it: source /etc/profile

Then add the following to hadoop-env.sh (vim $HADOOP_HOME/etc/hadoop/hadoop-env.sh):

export HADOOP_HOME=/software/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"
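After the change, hadoop checknative -a reports whether the native hadoop library (and optional codecs such as zlib and snappy) actually loaded. If it still fails, the hypothetical check_native_dir helper below is a quick first check that the directory on java.library.path contains libhadoop.so at all; if the file is present but loading still fails, the bundled library may not match your platform or architecture and may need to be rebuilt.

```shell
# Hypothetical helper: confirm that libhadoop.so exists in the native directory.
check_native_dir() {
  dir="${1:-$HADOOP_HOME/lib/native}"
  for f in "$dir"/libhadoop.so*; do
    # If the glob matched nothing, $f is the literal pattern and -e fails.
    if [ -e "$f" ]; then
      echo "found $f"
      return 0
    fi
  done
  echo "no libhadoop.so in $dir" >&2
  return 1
}

# Usage: check_native_dir "$HADOOP_COMMON_LIB_NATIVE_DIR" && hadoop checknative -a
```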