Setting Up a MongoDB 3 Cluster

Posted by 网络终结者

This setup uses three servers. First add the following entries to the hosts file on each server:

10.0.0.231 node1

10.0.0.232 node2

10.0.0.233 node3
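A minimal sketch of adding these entries without creating duplicates on a second run. The helper name `add_hosts_entries` and its file argument are assumptions made for illustration; on a real server the file would presumably be /etc/hosts, edited as root.

```shell
#!/bin/sh
# add_hosts_entries FILE
# Append the three node entries to FILE, skipping any hostname already listed.
# (Hypothetical helper; the article only says to "set hosts".)
add_hosts_entries() (
  file="$1"
  while read -r ip name; do
    # Only append when no existing line already ends with this hostname.
    grep -q "[[:space:]]$name\$" "$file" 2>/dev/null ||
      printf '%s %s\n' "$ip" "$name" >> "$file"
  done <<'EOF'
10.0.0.231 node1
10.0.0.232 node2
10.0.0.233 node3
EOF
)

# Example (as root): add_hosts_entries /etc/hosts
```

Passing the file as an argument makes it easy to try the function on a scratch copy before touching the real hosts file.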

1: Download mongodb-linux-x86_64-rhel70-3.4.6.tgz

Installation directory: /usr/local/mongodb-linux-x86_64-rhel70-3.4.6

Create a symbolic link (run from /usr/local): ln -s mongodb-linux-x86_64-rhel70-3.4.6 mongodb

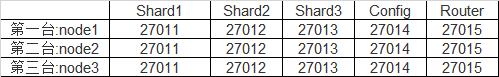

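2: Assign the ports. Each of the three servers runs three shard mongod instances (ports 27011, 27012, and 27013), one config server (port 27014), and one mongos router (port 27015).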

3: Set the environment variables

Do this on each of node1, node2, and node3.

Edit /etc/profile and add:

export MONGODB_HOME=/usr/local/mongodb

export PATH=$MONGODB_HOME/bin:$PATH

Then reload the file so the change takes effect: source /etc/profile

4: Create the data directories

Create them on each of node1, node2, and node3. Every port gets its own logs, pid, and data directory, and the clusting directory holds the configuration files.

mkdir -p /usr/local/mongodb/data/27011/logs /usr/local/mongodb/data/27011/pid /usr/local/mongodb/data/27011/data

mkdir -p /usr/local/mongodb/data/27012/logs /usr/local/mongodb/data/27012/pid /usr/local/mongodb/data/27012/data

mkdir -p /usr/local/mongodb/data/27013/logs /usr/local/mongodb/data/27013/pid /usr/local/mongodb/data/27013/data

mkdir -p /usr/local/mongodb/data/27014/logs /usr/local/mongodb/data/27014/pid /usr/local/mongodb/data/27014/data

mkdir -p /usr/local/mongodb/data/27015/logs /usr/local/mongodb/data/27015/pid /usr/local/mongodb/data/27015/data

mkdir -p /usr/local/mongodb/clusting
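The same directory layout can be produced with a loop instead of six hand-written commands. This is only a sketch: the function name `make_cluster_dirs` and its base-directory argument are assumptions, while the port numbers and subdirectory names come from the commands in this step.

```shell
#!/bin/sh
# make_cluster_dirs BASE
# Create BASE/data/<port>/{logs,pid,data} for every port, plus BASE/clusting.
# (Hypothetical helper; BASE is /usr/local/mongodb in this article.)
make_cluster_dirs() (
  base="$1"
  for port in 27011 27012 27013 27014 27015; do
    for sub in logs pid data; do
      mkdir -p "$base/data/$port/$sub"
    done
  done
  # Directory that will hold the five .conf files.
  mkdir -p "$base/clusting"
)

# Example: make_cluster_dirs /usr/local/mongodb
```

Because `mkdir -p` does not fail on existing directories, the function can be run again safely on a server that is already partly set up.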

5: Create the configuration files

On node1, node2, and node3, in the clusting directory,

create five files: 27011.conf, 27012.conf, 27013.conf, 27014.conf, and 27015.conf.

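6: Fill in each conf file

On node1, node2, and node3, in the clusting directory:

27011.conf, 27012.conf, and 27013.conf (the shard instances) follow this pattern, shown here for 27011.conf on node1:

port=27011

bind_ip=node1

logpath=/usr/local/mongodb/data/27011/logs/l.log

logappend=true

pidfilepath=/usr/local/mongodb/data/27011/pid/l.pid

dbpath=/usr/local/mongodb/data/27011/data

replSet=rs001

fork=true

Note the replSet value for each file: rs001 in 27011.conf, rs002 in 27012.conf, and rs003 in 27013.conf. The port and the port number in each path change to match.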

27014.conf (the config server)

port=27014

bind_ip=node1

logpath=/usr/local/mongodb/data/27014/logs/l.log

logappend=true

pidfilepath=/usr/local/mongodb/data/27014/pid/l.pid

dbpath=/usr/local/mongodb/data/27014/data

replSet=configReplSet

fork=true

configsvr=true

Note the replSet value: configReplSet

27015.conf (the mongos router)

port=27015

logpath=/usr/local/mongodb/data/27015/logs/l.log

pidfilepath=/usr/local/mongodb/data/27015/pid/l.pid

configdb=configReplSet/node1:27014,node2:27014,node3:27014

fork=true

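Adjust each file for the server and instance it belongs to (for example, bind_ip becomes node2 on node2):

node1: 27011.conf 27012.conf 27013.conf 27014.conf 27015.conf

node2: 27011.conf 27012.conf 27013.conf 27014.conf 27015.conf

node3: 27011.conf 27012.conf 27013.conf 27014.conf 27015.conf

That makes 15 configuration files in total.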
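Since the 15 files differ only in host name, port, and replica set name, they can be generated rather than edited by hand. The following is a sketch under that assumption; `write_mongod_conf` and `write_all_conf` are hypothetical helper names, and the settings written are exactly the ones listed in this step.

```shell
#!/bin/sh
# write_mongod_conf DIR HOST PORT REPLSET [configsvr]
# Write DIR/PORT.conf for one mongod instance (hypothetical helper).
write_mongod_conf() (
  dir="$1"; host="$2"; port="$3"; replset="$4"; role="${5:-}"
  data="/usr/local/mongodb/data/$port"
  {
    echo "port=$port"
    echo "bind_ip=$host"
    echo "logpath=$data/logs/l.log"
    echo "logappend=true"
    echo "pidfilepath=$data/pid/l.pid"
    echo "dbpath=$data/data"
    echo "replSet=$replset"
    echo "fork=true"
    # Only the config server instance carries this extra line.
    if [ "$role" = "configsvr" ]; then
      echo "configsvr=true"
    fi
  } > "$dir/$port.conf"
)

# write_all_conf DIR HOST
# Write all five conf files for the server named HOST (hypothetical helper).
write_all_conf() (
  dir="$1"; host="$2"
  write_mongod_conf "$dir" "$host" 27011 rs001
  write_mongod_conf "$dir" "$host" 27012 rs002
  write_mongod_conf "$dir" "$host" 27013 rs003
  write_mongod_conf "$dir" "$host" 27014 configReplSet configsvr
  # mongos router: no dbpath or replSet, only the config server address.
  {
    echo "port=27015"
    echo "logpath=/usr/local/mongodb/data/27015/logs/l.log"
    echo "pidfilepath=/usr/local/mongodb/data/27015/pid/l.pid"
    echo "configdb=configReplSet/node1:27014,node2:27014,node3:27014"
    echo "fork=true"
  } > "$dir/27015.conf"
)

# Example, on node2: write_all_conf /usr/local/mongodb/clusting node2
```

Generating the files from one template keeps the replSet names and ports in step with each other, which is where hand-copied files most easily drift.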

7: Start the services, beginning with the shard instances

On node1, node2, and node3:

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/clusting/27011.conf

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/clusting/27012.conf

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/clusting/27013.conf

Then, on node1,

log in with the mongo shell and write the replica set configuration:

/usr/local/mongodb/bin/mongo --port 27011 --host node1

Initialize the replica set:

cfg={ _id:"rs001", members:[ {_id:0,host:'node1:27011',priority:2}, {_id:1,host:'node2:27011',priority:1},{_id:2,host:'node3:27011',arbiterOnly:true}] };

rs.initiate(cfg)

// Check the status

rs.status();

/usr/local/mongodb/bin/mongo --port 27012 --host node1

Initialize the replica set:

cfg={ _id:"rs002", members:[ {_id:0,host:'node1:27012',priority:2}, {_id:1,host:'node2:27012',priority:1},{_id:2,host:'node3:27012',arbiterOnly:true}] };

rs.initiate(cfg)

// Check the status

rs.status();

/usr/local/mongodb/bin/mongo --port 27013 --host node1

Initialize the replica set:

cfg={ _id:"rs003", members:[ {_id:0,host:'node1:27013',priority:2}, {_id:1,host:'node2:27013',priority:1},{_id:2,host:'node3:27013',arbiterOnly:true}] };

rs.initiate(cfg)

// Check the status

rs.status();
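The three shard replica sets are initialized with the same document apart from the set name and the port, so the statement can be produced from those two values. This is a sketch only: `rs_initiate_js` is a hypothetical helper name, and the member layout (node1 with priority 2, node2 with priority 1, node3 as arbiter) is copied from the configurations in this step.

```shell
#!/bin/sh
# rs_initiate_js REPLSET PORT
# Print the rs.initiate(...) statement for one shard replica set.
# (Hypothetical helper; member roles follow the article's layout.)
rs_initiate_js() {
  printf 'rs.initiate({_id:"%s",members:[{_id:0,host:"node1:%s",priority:2},{_id:1,host:"node2:%s",priority:1},{_id:2,host:"node3:%s",arbiterOnly:true}]})\n' \
    "$1" "$2" "$2" "$2"
}

# Example: feed the statement to the mongo shell on node1.
# /usr/local/mongodb/bin/mongo --host node1 --port 27011 --eval "$(rs_initiate_js rs001 27011)"
```

Printing the statement first, before passing it to `mongo --eval`, gives a chance to check the set name and port pairing by eye.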

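8: Start the config server service

On node1, node2, and node3:

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/clusting/27014.conf

Then, on node1,

log in with the mongo shell and write the replica set configuration:

/usr/local/mongodb/bin/mongo --port 27014 --host node1

Initialize the replica set. A config server replica set cannot contain arbiters, so node3 is a regular data-bearing member here, and the configuration sets configsvr:true:

cfg={ _id:"configReplSet", configsvr:true, members:[ {_id:0,host:'node1:27014',priority:2}, {_id:1,host:'node2:27014',priority:1},{_id:2,host:'node3:27014'}] };

rs.initiate(cfg)

// Check the status

rs.status();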

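9: Start the Router service

On node1, node2, and node3 (mongos uses 27015.conf):

/usr/local/mongodb/bin/mongos -f /usr/local/mongodb/clusting/27015.conf

Then, on node1,

log in with the mongo shell and add the shard information through the Router service, running the commands in order. Each shard is named after its own replica set:

/usr/local/mongodb/bin/mongo --port 27015 --host node1

sh.addShard("rs001/node1:27011,node2:27011,node3:27011")

sh.addShard("rs002/node1:27012,node2:27012,node3:27012")

sh.addShard("rs003/node1:27013,node2:27013,node3:27013")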

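Run sh.enableSharding("transport") to enable sharding for the transport database.

Run sh.shardCollection( "transport.kuaixin", {"_id": "hashed" } ) to set the distribution rule for that collection, here a hashed shard key on _id.

Run sh.shardCollection( "transport.lixin", {"_id": "hashed" } ) to set the same distribution rule for the second collection.

At this point the MongoDB cluster is set up.

Run sh.status() to check the result. The arbiter nodes do not appear in the output, because an arbiter only votes in elections and does not store a copy of the data.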
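The router-side commands of step 9 can be collected into one generated script, which also keeps each shard's replica set name paired with its port (rs001 with 27011, rs002 with 27012, rs003 with 27013). This is a sketch under those assumptions; `sharding_js` is a hypothetical helper name, and the database and collection names are the ones used in this article.

```shell
#!/bin/sh
# sharding_js
# Print the mongo shell statements that register the three shards and
# shard the two collections (hypothetical helper).
sharding_js() (
  i=1
  for port in 27011 27012 27013; do
    # Shard i is backed by replica set rs00i on the matching port.
    printf 'sh.addShard("rs00%s/node1:%s,node2:%s,node3:%s")\n' "$i" "$port" "$port" "$port"
    i=$((i + 1))
  done
  printf 'sh.enableSharding("transport")\n'
  for coll in kuaixin lixin; do
    printf 'sh.shardCollection("transport.%s", {"_id": "hashed"})\n' "$coll"
  done
  printf 'sh.status()\n'
)

# Example: review the output, then pipe it to the router on node1.
# sharding_js | /usr/local/mongodb/bin/mongo --host node1 --port 27015
```

Reviewing the printed statements before piping them into the shell is a cheap guard against copy-paste slips in the shard names.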
