Redis Exercises (10): Redis Cluster Node Maintenance


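In "Redis Exercises (9)" we used the bundled redis-trib.rb tool to spin up a 6-node master/replica cluster in seconds, read through the servers' responses, and tried out automatic failover.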

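This post picks up where the last one left off and covers the following.

Adding a new node to the 6-node cluster

Removing the newly added nodes

Migrating between clusters (deferred; not covered in this post)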

1. Adding a new node

    # Clean up the environment
    [create-cluster]# ./create-cluster clean
    [create-cluster]# ls
    create-cluster  README

    # Start the nodes (6 by default)
    [create-cluster]# ./create-cluster start
    Starting 30001
    Starting 30002
    Starting 30003
    Starting 30004
    Starting 30005
    Starting 30006

    # Create the cluster
    [create-cluster]# ./create-cluster create
    >>> Creating cluster
    >>> Performing hash slots allocation on 6 nodes...
    Using 3 masters:
    127.0.0.1:30001
    127.0.0.1:30002
    127.0.0.1:30003
    Adding replica 127.0.0.1:30004 to 127.0.0.1:30001
    Adding replica 127.0.0.1:30005 to 127.0.0.1:30002
    Adding replica 127.0.0.1:30006 to 127.0.0.1:30003
    M: 3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001
       slots:0-5460 (5461 slots) master
    M: 06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002
       slots:5461-10922 (5462 slots) master
    M: 498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003
       slots:10923-16383 (5461 slots) master
    S: fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004
       replicates 3220b1b57d652ab82bbe56750636e57d0a6480c2
    S: 08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005
       replicates 06e3347954cd77a8c61cea80673cd3e537ad3cd7
    S: 0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006
       replicates 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    Can I set the above configuration? (type 'yes' to accept): yes
    >>> Nodes configuration updated
    >>> Assign a different config epoch to each node
    >>> Sending CLUSTER MEET messages to join the cluster
    Waiting for the cluster to join.
    >>> Performing Cluster Check (using node 127.0.0.1:30001)
    M: 3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001
       slots:0-5460 (5461 slots) master
       1 additional replica(s)
    M: 06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002
       slots:5461-10922 (5462 slots) master
       1 additional replica(s)
    S: 0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006
       slots: (0 slots) slave
       replicates 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    S: 08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005
       slots: (0 slots) slave
       replicates 06e3347954cd77a8c61cea80673cd3e537ad3cd7
    S: fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004
       slots: (0 slots) slave
       replicates 3220b1b57d652ab82bbe56750636e57d0a6480c2
    M: 498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003
       slots:10923-16383 (5461 slots) master
       1 additional replica(s)
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.
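The create log assigns 0-5460, 5461-10922 and 10923-16383 to the three masters. As a rough illustration of where such ranges come from, here is a minimal sketch that splits the 16384 slots evenly and reproduces the ranges in the log. The function name `split_slots` and the rounding scheme are assumptions made for illustration; this is not the tool's own code.

```python
TOTAL_SLOTS = 16384  # Redis Cluster always has 16384 hash slots


def split_slots(master_count: int) -> list[tuple[int, int]]:
    """Return one inclusive (first, last) slot range per master.

    Hypothetical helper: an even split with rounding, with the last
    master taking whatever remains up to slot 16383.
    """
    if master_count <= 0:
        raise ValueError("master_count must be positive")
    per_master = TOTAL_SLOTS / master_count
    ranges = []
    first = 0
    cursor = 0.0
    for index in range(master_count):
        last = round(cursor + per_master - 1)
        # The final master always ends at the last slot.
        if index == master_count - 1 or last > TOTAL_SLOTS - 1:
            last = TOTAL_SLOTS - 1
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


if __name__ == "__main__":
    for first, last in split_slots(3):
        print(f"slots:{first}-{last} ({last - first + 1} slots)")
```

With three masters the middle node ends up with one slot more than the other two, which matches the 5461 / 5462 / 5461 split in the log.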

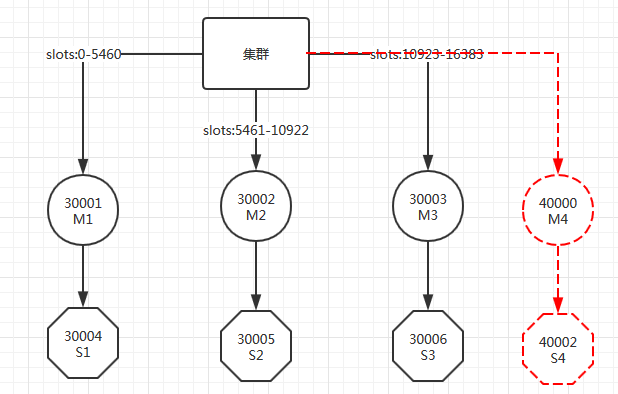

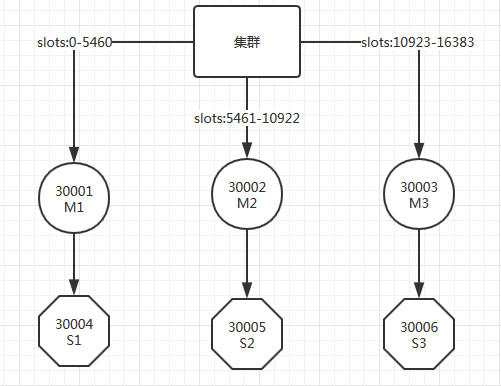

Image 1: the cluster before the node is added

Image 2: the expected cluster after the node is added

    # 1. Start a new instance (port 40000)
    [create-cluster]# ../../src/redis-server --port 40000 --cluster-enabled yes --cluster-config-file nodes-40000.conf --cluster-node-timeout 5000 --appendonly yes --appendfilename appendonly-40000.aof --daemonize yes
    # Confirm that it is running
    [create-cluster]# ps -ef |grep redis

    # 2. Add the new node to the cluster (the trailing 30001 could just as well be 30002)
    ./redis-trib.rb add-node 127.0.0.1:40000 127.0.0.1:30001

    # 3. Confirm that it joined (the new node is in the cluster but owns no hash slots yet)
    127.0.0.1:30001> cluster nodes
    06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002 master - 0 1473854215560 2 connected 5461-10922
    0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006 slave 498ca3472b917cd1a8e1f14cbb67b54327bacae7 0 1473854215560 6 connected
    08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005 slave 06e3347954cd77a8c61cea80673cd3e537ad3cd7 0 1473854215659 5 connected
    3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001 myself,master - 0 0 1 connected 0-5460
    529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40000 master - 0 1473854215659 0 connected
    fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004 slave 3220b1b57d652ab82bbe56750636e57d0a6480c2 0 1473854215559 4 connected
    498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473854215560 3 connected 10923-16383
    # At this point the new node owns no slots, so no keys are routed to it and it cannot serve writes yet

    # 4. Assign hash slots to the new node
    # (The check output below already lists 40000 with 3724 slots, so it appears to have been captured on a later run.)
    [src]# ./redis-trib.rb reshard 127.0.0.1:30001
    >>> Performing Cluster Check (using node 127.0.0.1:30001)
    M: 3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001
       slots:0-5460 (5461 slots) master
       1 additional replica(s)
    M: 06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002
       slots:5461-10922 (5462 slots) master
       1 additional replica(s)
    S: 0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006
       slots: (0 slots) slave
       replicates 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    S: 08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005
       slots: (0 slots) slave
       replicates 06e3347954cd77a8c61cea80673cd3e537ad3cd7
    M: 529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40000
       slots:10923-14646 (3724 slots) master
       0 additional replica(s)
    S: fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004
       slots: (0 slots) slave
       replicates 3220b1b57d652ab82bbe56750636e57d0a6480c2
    M: 498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003
       slots:14647-16383 (1737 slots) master
       1 additional replica(s)
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.
    How many slots do you want to move (from 1 to 16384)? 3000    (number of slots to move)
    What is the receiving node ID? 529d12d3fd0e48b09ca71c8408b72baaf7954d11    (node ID of 40000)
    Please enter all the source node IDs.
      Type 'all' to use all the nodes as source nodes for the hash slots.
      Type 'done' once you entered all the source nodes IDs.
    Source node #1:498ca3472b917cd1a8e1f14cbb67b54327bacae7    (node ID of 30003)
    Source node #2:done
    ....
    Moving slot 16376 from 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    Moving slot 16377 from 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    Moving slot 16378 from 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    Moving slot 16379 from 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    Moving slot 16380 from 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    Moving slot 16381 from 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    Moving slot 16382 from 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    Moving slot 16383 from 498ca3472b917cd1a8e1f14cbb67b54327bacae7

    # Hash slot distribution afterwards
    127.0.0.1:30001> cluster nodes
    06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002 master - 0 1473856106534 2 connected 5461-10922
    0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006 slave 498ca3472b917cd1a8e1f14cbb67b54327bacae7 0 1473856106534 6 connected
    08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005 slave 06e3347954cd77a8c61cea80673cd3e537ad3cd7 0 1473856106534 5 connected
    3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001 myself,master - 0 0 1 connected 0-5460
    529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40000 master - 0 1473856106635 7 connected 10923-14646    (3724 slots in total, not the 3000 requested)
    fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004 slave 3220b1b57d652ab82bbe56750636e57d0a6480c2 0 1473856106535 4 connected
    498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473856106534 3 connected 14647-16383

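Note: my goal was to move 3000 slots from M3 (30003) to M4 (40000), but M4 ended up with 3724 slots (10923-14646), leaving M3 with 1737 (14647-16383). The cluster check in the reshard transcript already lists 40000 with those 3724 slots, which suggests the reshard was run more than once.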
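Counting slots by eye is error-prone, so a small script helps to double-check the totals. The following is a minimal sketch that tallies slots per node from `cluster nodes` text. The function name `slots_per_node` is made up for this example, the sample lines are taken from the transcript, and the parsing assumes the field layout shown there (slot ranges start at the ninth field).

```python
def slots_per_node(cluster_nodes_output: str) -> dict[str, int]:
    """Map each node address to the number of hash slots it owns."""
    counts = {}
    for line in cluster_nodes_output.strip().splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # not a node line (for example a prompt)
        address = fields[1].split("@")[0]  # drop a bus-port suffix if present
        total = 0
        for item in fields[8:]:
            if item.startswith("["):
                continue  # slot currently migrating or importing
            first, _, last = item.partition("-")
            total += int(last or first) - int(first) + 1
        counts[address] = total
    return counts


SAMPLE = """\
3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001 myself,master - 0 0 1 connected 0-5460
529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40000 master - 0 1473856106635 7 connected 10923-14646
fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004 slave 3220b1b57d652ab82bbe56750636e57d0a6480c2 0 1473856106535 4 connected
498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473856106534 3 connected 14647-16383
"""

if __name__ == "__main__":
    for address, count in slots_per_node(SAMPLE).items():
        print(address, count)
```

For the sample above, 40000 comes out at 3724 slots and 30003 at 1737, which together make up the 5461 slots that 30003 held originally.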

Verify the result: requests are redirected as expected, so the newly added node is working.

    127.0.0.1:30001> set k1 "1"
    -> Redirected to slot [12706] located at 127.0.0.1:40000
    OK
    127.0.0.1:40000> set k2 "1"
    -> Redirected to slot [449] located at 127.0.0.1:30001
    OK
    127.0.0.1:30001> get k1
    -> Redirected to slot [12706] located at 127.0.0.1:40000
    "1"
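The slot numbers in the redirects come from the key itself: Redis Cluster maps a key to a slot with CRC16 of the key modulo 16384, hashing only the part between `{` and `}` when a non-empty hash tag is present. Below is a minimal sketch of that mapping; the helper names `crc16_xmodem` and `key_slot` are chosen for illustration only.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Return the hash slot (0-16383) a key belongs to."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty tag between the first '{' and the next '}' counts.
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


if __name__ == "__main__":
    for key in ("k1", "k2"):
        print(key, key_slot(key))
```

`k1` lands in slot 12706, which fell inside the 10923-14646 range owned by 40000 at that point, and `k2` lands in slot 449, owned by 30001, which is exactly what the redirects in the session show.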

Add a replica, S4, for the newly added master M4

    # Start the replica node (40010)
    [create-cluster]# ../../src/redis-server --port 40010 --cluster-enabled yes --cluster-config-file nodes-40010.conf --cluster-node-timeout 5000 --appendonly yes --appendfilename appendonly-40010.aof --daemonize yes --pidfile /var/run/redis_40010.pid

    # Add the replica to the cluster
    [create-cluster]# ../../src/redis-trib.rb add-node --slave --master-id 529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40010 127.0.0.1:30001
    >>> Adding node 127.0.0.1:40010 to cluster 127.0.0.1:30001
    >>> Performing Cluster Check (using node 127.0.0.1:30001)
    S: 3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001
       slots: (0 slots) slave
       replicates fb2d0f156daec5e148c9b5a462e185b43e177e4e
    M: fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004
       slots:0-5460 (5461 slots) master
       1 additional replica(s)
    M: 498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003
       slots:14647-16383 (1737 slots) master
       1 additional replica(s)
    M: 529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40000
       slots:10923-14646 (3724 slots) master
       0 additional replica(s)
    S: 0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006
       slots: (0 slots) slave
       replicates 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    S: 08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005
       slots: (0 slots) slave
       replicates 06e3347954cd77a8c61cea80673cd3e537ad3cd7
    M: 06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002
       slots:5461-10922 (5462 slots) master
       1 additional replica(s)
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.
    >>> Send CLUSTER MEET to node 127.0.0.1:40010 to make it join the cluster.
    Waiting for the cluster to join.
    >>> Configure node as replica of 127.0.0.1:40000.
    [OK] New node added correctly.

    127.0.0.1:30001> cluster nodes
    fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004 master - 0 1473858554505 8 connected 0-5460
    498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473858554505 3 connected 14647-16383
    3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001 myself,slave fb2d0f156daec5e148c9b5a462e185b43e177e4e 0 0 1 connected
    4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64 127.0.0.1:40010 slave 529d12d3fd0e48b09ca71c8408b72baaf7954d11 0 1473858554101 7 connected
    529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40000 master - 0 1473858554505 7 connected 10923-14646
    0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006 slave 498ca3472b917cd1a8e1f14cbb67b54327bacae7 0 1473858554506 6 connected
    08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005 slave 06e3347954cd77a8c61cea80673cd3e537ad3cd7 0 1473858554505 5 connected
    06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002 master - 0 1473858554506 2 connected 5461-10922

Ignore the role of node 30001 in this output: during the experiment I accidentally killed the 30001 process, which triggered a failover to 30004.

Now try out failover on the new master/replica pair (40000 and 40010) with node 40000 down.

    127.0.0.1:30001> cluster nodes
    fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004 master - 0 1473858841896 8 connected 0-5460
    498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473858841897 3 connected 14647-16383
    3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001 myself,slave fb2d0f156daec5e148c9b5a462e185b43e177e4e 0 0 1 connected
    4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64 127.0.0.1:40010 master - 0 1473858842503 9 connected 10923-14646
    529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40000 master,fail - 1473858838865 1473858837856 7 disconnected
    0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006 slave 498ca3472b917cd1a8e1f14cbb67b54327bacae7 0 1473858841897 6 connected
    08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005 slave 06e3347954cd77a8c61cea80673cd3e537ad3cd7 0 1473858841897 5 connected
    06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002 master - 0 1473858841897 2 connected 5461-10922
    127.0.0.1:30001>

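The test shows the switchover works: 40010 has been promoted to master and now owns slots 10923-14646, while 40000 is flagged as failed.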
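After a failover like this, two things are worth checking in the `cluster nodes` output: which nodes carry the `fail` flag, and which healthy masters are left without a replica. The sketch below is one way to do that; `cluster_health` is a name invented for this example, and the sample is a subset of the lines from the transcript.

```python
def cluster_health(cluster_nodes_output: str) -> tuple[list[str], list[str]]:
    """Return (failed node addresses, healthy masters without a replica)."""
    nodes = []
    for line in cluster_nodes_output.strip().splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # skip prompts and blank lines
        nodes.append({
            "id": fields[0],
            "address": fields[1].split("@")[0],
            "flags": set(fields[2].split(",")),
            "master_id": fields[3],
        })
    failed = [n["address"] for n in nodes if "fail" in n["flags"]]
    # IDs of masters that still have at least one healthy replica.
    covered = {
        n["master_id"] for n in nodes
        if "slave" in n["flags"] and "fail" not in n["flags"]
    }
    unprotected = [
        n["address"] for n in nodes
        if "master" in n["flags"]
        and "fail" not in n["flags"]
        and n["id"] not in covered
    ]
    return failed, unprotected


SAMPLE = """\
498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473858841897 3 connected 14647-16383
4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64 127.0.0.1:40010 master - 0 1473858842503 9 connected 10923-14646
529d12d3fd0e48b09ca71c8408b72baaf7954d11 127.0.0.1:40000 master,fail - 1473858838865 1473858837856 7 disconnected
0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006 slave 498ca3472b917cd1a8e1f14cbb67b54327bacae7 0 1473858841897 6 connected
"""

if __name__ == "__main__":
    failed, unprotected = cluster_health(SAMPLE)
    print("failed:", failed)
    print("masters without replica:", unprotected)
```

On this sample the sketch reports 40000 as failed and 40010 as a master with no replica, so the pair has no redundancy until 40000 comes back or another replica is added.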

2. Removing nodes

    # Removing a node that is not running fails with an error.
    # Remove the replica (port 40000)
    [create-cluster]# ../../src/redis-trib.rb del-node 127.0.0.1:30001 529d12d3fd0e48b09ca71c8408b72baaf7954d11
    >>> Removing node 529d12d3fd0e48b09ca71c8408b72baaf7954d11 from cluster 127.0.0.1:30001
    [ERR] No such node ID 529d12d3fd0e48b09ca71c8408b72baaf7954d11

    # Start node 40000 (command omitted)
    [create-cluster]# ps -ef |grep redis
    ...
    root 3636 1 0 21:08 ? 00:00:01 ../../src/redis-server *:40010 [cluster]
    root 3709 1 0 21:17 ? 00:00:00 ../../src/redis-server *:40000 [cluster]

    # Delete it again
    [create-cluster]# ../../src/redis-trib.rb del-node 127.0.0.1:30001 529d12d3fd0e48b09ca71c8408b72baaf7954d11
    >>> Removing node 529d12d3fd0e48b09ca71c8408b72baaf7954d11 from cluster 127.0.0.1:30001
    >>> Sending CLUSTER FORGET messages to the cluster...
    >>> SHUTDOWN the node.
    [create-cluster]# ps -ef |grep 40000
    root 3724 2539 0 21:18 pts/1 00:00:00 grep 40000
    # The process on port 40000 has been shut down
    [create-cluster]# ps -ef |grep redis
    ...
    root 3636 1 0 21:08 ? 00:00:01 ../../src/redis-server *:40010 [cluster]

    # Node 40000 was removed successfully
    127.0.0.1:30001> cluster nodes
    fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004 master - 0 1473859078007 8 connected 0-5460
    498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473859078007 3 connected 14647-16383
    3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001 myself,slave fb2d0f156daec5e148c9b5a462e185b43e177e4e 0 0 1 connected
    4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64 127.0.0.1:40010 master - 0 1473859078007 9 connected 10923-14646
    0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006 slave 498ca3472b917cd1a8e1f14cbb67b54327bacae7 0 1473859078006 6 connected
    08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005 slave 06e3347954cd77a8c61cea80673cd3e537ad3cd7 0 1473859078006 5 connected
    06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002 master - 0 1473859078007 2 connected 5461-10922

    ######################################
    ### Next, remove master node 40010
    # Try deleting 40010 directly (rejected: the node still holds slots and must be resharded first)
    # (The node ID pasted in this command is 30003's, while the error refers to 40010, whose ID is 4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64.)
    [create-cluster]# ../../src/redis-trib.rb del-node 127.0.0.1:30001 498ca3472b917cd1a8e1f14cbb67b54327bacae7
    >>> Removing node 498ca3472b917cd1a8e1f14cbb67b54327bacae7 from cluster 127.0.0.1:30001
    [ERR] Node 127.0.0.1:40010 is not empty! Reshard data away and try again.

    # Run reshard to move the slots from 40010 to 30003
    ../../src/redis-trib.rb reshard --from 4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64 --to 498ca3472b917cd1a8e1f14cbb67b54327bacae7 --slots 3000 127.0.0.1:30001
    # If the target node is a replica, the reshard is rejected with:
    # *** The specified node is not known or not a master, please retry.

    # Repeat the migration to node 30003 (I ran it twice, since 40010 held 3724 slots)
    ../../src/redis-trib.rb reshard --from 4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64 --to 498ca3472b917cd1a8e1f14cbb67b54327bacae7 --slots 3000 127.0.0.1:30001

    # Check whether 40010 still owns any hash slots
    127.0.0.1:30001> cluster nodes
    fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004 master - 0 1473860273927 8 connected 0-5460
    498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473860274026 10 connected 10923-16383
    3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001 myself,slave fb2d0f156daec5e148c9b5a462e185b43e177e4e 0 0 1 connected
    4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64 127.0.0.1:40010 master - 0 1473860274126 9 connected
    0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006 slave 498ca3472b917cd1a8e1f14cbb67b54327bacae7 0 1473860273927 10 connected
    08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005 slave 06e3347954cd77a8c61cea80673cd3e537ad3cd7 0 1473860273927 5 connected
    06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002 master - 0 1473860273927 2 connected 5461-10922

    # Delete the node (this time it succeeds)
    [create-cluster]# ../../src/redis-trib.rb del-node 127.0.0.1:30001 4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64
    >>> Removing node 4e1dd54fcae4a7f92d78b6a6add09b5cd1538c64 from cluster 127.0.0.1:30001
    >>> Sending CLUSTER FORGET messages to the cluster...
    >>> SHUTDOWN the node.

    # Confirm that node 40010 is gone from the cluster
    127.0.0.1:30001> cluster nodes
    fb2d0f156daec5e148c9b5a462e185b43e177e4e 127.0.0.1:30004 master - 0 1473860314240 8 connected 0-5460
    498ca3472b917cd1a8e1f14cbb67b54327bacae7 127.0.0.1:30003 master - 0 1473860314341 10 connected 10923-16383
    3220b1b57d652ab82bbe56750636e57d0a6480c2 127.0.0.1:30001 myself,slave fb2d0f156daec5e148c9b5a462e185b43e177e4e 0 0 1 connected
    0717c88dad90923befe94d8e1536720bccb0d3b5 127.0.0.1:30006 slave 498ca3472b917cd1a8e1f14cbb67b54327bacae7 0 1473860314240 10 connected
    08a60e2151bf760a14a5fd27dd2025023a4826e8 127.0.0.1:30005 slave 06e3347954cd77a8c61cea80673cd3e537ad3cd7 0 1473860314240 5 connected
    06e3347954cd77a8c61cea80673cd3e537ad3cd7 127.0.0.1:30002 master - 0 1473860314240 2 connected 5461-10922

    # Confirm that the data that lived on 40010 now belongs to node 30003
    127.0.0.1:30001> get k1
    (error) MOVED 12706 127.0.0.1:30003
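The last command returns a raw `MOVED` error instead of the value, which is what a client sees when it does not follow cluster redirects (redis-cli only follows them when started with `-c`). As a sketch of what a client has to do with such a reply, the hypothetical helper `parse_moved` below extracts the slot and the node that now owns it.

```python
from typing import Optional


def parse_moved(error_message: str) -> Optional[tuple[int, str, int]]:
    """Parse 'MOVED <slot> <host>:<port>' into (slot, host, port).

    Returns None when the message is not a MOVED redirect.
    """
    parts = error_message.split()
    if len(parts) != 3 or parts[0] != "MOVED":
        return None
    host, _, port = parts[2].rpartition(":")
    if not host or not port.isdigit() or not parts[1].isdigit():
        return None
    return int(parts[1]), host, int(port)


if __name__ == "__main__":
    redirect = parse_moved("MOVED 12706 127.0.0.1:30003")
    if redirect is not None:
        slot, host, port = redirect
        print(f"slot {slot} is now served by {host}:{port}; retry the command there")
```

Here the redirect points slot 12706 at 30003, which confirms that the slots once held by 40010 were handed back during the reshard.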

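Summary: when adding or removing cluster nodes, most of the work lies in migrating hash slots. Any change in membership affects how slots are routed to nodes. A node that still owns hash slots cannot be removed, because removing it would leave part of the slot space unmapped, and Redis does not allow that state.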
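The rule in the summary can be expressed as a small pre-removal check. The sketch below is only arithmetic: it says whether a node may be removed and how many reshard runs of a given size it takes to empty it. The names `can_remove` and `reshard_batches` are assumptions made for this example, and the log does not show how redis-trib itself reacts when asked to move more slots than the source node still holds.

```python
def can_remove(slots_owned: int) -> bool:
    """A node may be removed only once it owns no hash slots."""
    return slots_owned == 0


def reshard_batches(slots_owned: int, batch_size: int) -> list[int]:
    """Split the slots a node owns into reshard runs of at most batch_size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    batches = []
    remaining = slots_owned
    while remaining > 0:
        step = min(batch_size, remaining)
        batches.append(step)
        remaining -= step
    return batches


if __name__ == "__main__":
    owned = 3724  # slots 10923-14646 held by 40010 before removal
    print("removable now:", can_remove(owned))
    print("reshard runs needed:", reshard_batches(owned, 3000))
```

With 3724 slots and 3000 slots per run, two runs are needed (3000, then the remaining 724), which is consistent with the reshard command having been executed twice before the delete succeeded.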

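Working through these exercises did deepen my understanding of Redis Cluster. Time is limited, though, so this post does not cover migration between clusters.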

This article comes from the "简单" blog; please keep this attribution: http://dba10g.blog.51cto.com/764602/1852860
