Big Data Basics Q&A, Part 2

Posted by 中道学友

What is Spark?

=============

Apache Spark is a fast and general-purpose cluster computing system. It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs.

Apache Spark provides programmers with an application programming interface centered on a data structure called the resilient distributed dataset (RDD), a read-only multiset of data items distributed over a cluster of machines, that is maintained in a fault-tolerant way.

It was developed in response to limitations in the MapReduce cluster computing paradigm, which forces a particular linear dataflow structure on distributed programs:

MapReduce programs read input data from disk, map a function across the data, reduce the results of the map, and store reduction results on disk. Spark's RDDs function as a working set for distributed programs that offers a (deliberately) restricted form of distributed shared memory.
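The linear map-then-reduce dataflow, and the idea of a reusable in-memory working set, can be sketched in plain Python. This is only a single-process illustration and does not use the Spark or Hadoop APIs; the function names `map_phase` and `reduce_phase` and the sample lines are made up for the example.

```python
from collections import Counter
from functools import reduce


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every input line."""
    return [(word.lower(), 1) for line in lines for word in line.split()]


def reduce_phase(pairs):
    """Reduce: sum the counts emitted for each distinct word."""
    def add(acc, pair):
        word, n = pair
        acc[word] += n
        return acc
    return reduce(add, pairs, Counter())


# Hypothetical input; a MapReduce job would read this from disk.
lines = ["spark keeps data in memory", "mapreduce writes data to disk"]

# The intermediate result plays the role of a working set: it is computed
# once, kept in memory, and reused by more than one downstream computation
# instead of being written to disk and re-read by a second job.
pairs = map_phase(lines)
word_counts = reduce_phase(pairs)
total_words = sum(n for _, n in pairs)
```

In a strict MapReduce pipeline, the second computation (`total_words`) would typically be a separate job that reads its input from disk again; keeping `pairs` in memory is the part that loosely mirrors what an RDD offers.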

What is KAFKA?

==============

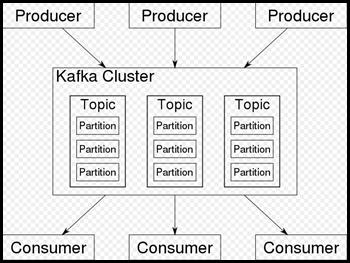

Apache Kafka is an open-source stream processing platform developed by the Apache Software Foundation and written in Scala and Java. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. Its storage layer is essentially a "massively scalable pub/sub message queue architected as a distributed transaction log," which makes it highly valuable for enterprise infrastructures that process streaming data. Additionally, Kafka connects to external systems (for data import/export) via Kafka Connect and provides Kafka Streams, a Java stream processing library.

Kafka stores messages that come from arbitrarily many processes called "producers". The data is divided into "partitions" within "topics". Within a partition, messages are indexed and stored together with a timestamp. Other processes called "consumers" can read messages from partitions. Kafka runs on a cluster of one or more servers, and the partitions can be distributed across cluster nodes.
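The relationship between producers, topics, partitions, and consumers can be illustrated with a toy in-memory model. This is a sketch under simplifying assumptions, not the Kafka client API: the class `ToyLog`, its methods, and the choice of hashing the key with CRC32 to pick a partition are all hypothetical and exist only for this example.

```python
import time
from collections import defaultdict
from zlib import crc32


class ToyLog:
    """Toy model of topics split into append-only partitions (not Kafka)."""

    def __init__(self, partitions_per_topic=2):
        # topic name -> list of partitions; each partition is an ordered list
        self.topics = defaultdict(
            lambda: [[] for _ in range(partitions_per_topic)]
        )

    def produce(self, topic, key, value):
        """Append a message; return (partition index, position in partition)."""
        partitions = self.topics[topic]
        # Assumption for this sketch: the same key always maps to the same
        # partition, chosen by a stable hash of the key.
        index = crc32(key.encode("utf-8")) % len(partitions)
        partitions[index].append((time.time(), key, value))
        return index, len(partitions[index]) - 1

    def consume(self, topic, partition, start):
        """Return all messages in one partition from position `start` on."""
        return self.topics[topic][partition][start:]


log = ToyLog()
p1, o1 = log.produce("clicks", "user-1", "home")
p2, o2 = log.produce("clicks", "user-1", "cart")
records = log.consume("clicks", p1, 0)
```

Each stored record is a `(timestamp, key, value)` tuple, and a consumer reads a partition starting from an index of its choosing, which is why two consumers can read the same partition independently.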

What is Splunk?

==============

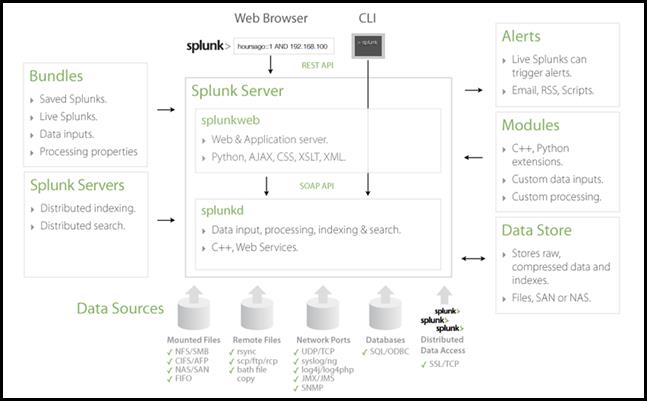

What do you do when you need information about the state of all devices in your data center? Looking at all the logfiles would be the right answer if it were possible in any practical amount of time. This is where Splunk comes in.

Splunk started out as a kind of “Google for Logfiles”. It does a lot more today but log processing is still at the product’s core. It stores all your logs and provides very fast search capabilities roughly in the same way Google does for the internet.

- splunkd is a distributed C/C++ server that accesses, processes and indexes streaming IT data and also handles search requests.

- splunkweb is a Python-based application server providing the Splunk Web user interface.

- Splunk's Data Store manages the original raw data in compressed format as well as the indexes into the data. Data can be deleted or archived based on retention period or maximum data store size.

- Splunk Servers can communicate with one another via Splunk-2-Splunk, a TCP-based protocol, to forward data from one server to another and to distribute searches across multiple servers.

- Bundles are files that contain configuration settings, including user accounts, Splunks, Live Splunks, Data Inputs and Processing Properties, so that specific Splunk environments can be created easily.

- Modules are files that add new functionality to Splunk by adding to or modifying existing processors and pipelines.
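The idea of keeping raw log data alongside an index into it, so that searches do not have to scan every line, can be sketched with a small inverted index. This is a generic illustration and says nothing about how Splunk is actually implemented; the class `ToyLogIndex`, its tokenization rule, and the sample log lines are assumptions made for the example.

```python
import re
from collections import defaultdict


class ToyLogIndex:
    """Keeps raw log lines plus an inverted index from token to line ids."""

    def __init__(self):
        self.events = []                  # raw log lines, in arrival order
        self.postings = defaultdict(set)  # token -> ids of lines containing it

    def add(self, line):
        event_id = len(self.events)
        self.events.append(line)
        # Assumed tokenization: lowercase runs of letters and digits.
        for token in re.findall(r"[a-z0-9]+", line.lower()):
            self.postings[token].add(event_id)

    def search(self, *terms):
        """Return raw lines that contain every term, in arrival order."""
        if not terms:
            return []
        ids = set.intersection(
            *(self.postings.get(term.lower(), set()) for term in terms)
        )
        return [self.events[i] for i in sorted(ids)]


index = ToyLogIndex()
index.add("ERROR disk full on host-a")
index.add("INFO backup done on host-a")
index.add("ERROR timeout on host-b")
hits = index.search("error", "host")
```

A search intersects the small sets of line ids for each term and only then touches the raw data, which is the basic reason an index makes lookups fast compared with reading every logfile.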

What is SAS?

============

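SAS (Statistical Analysis System) is a commercial analytics software suite from SAS Institute, used for data management, statistical analysis, and business intelligence, including analytics on big data.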

What is Storm?

============

Apache Storm, in simple terms, is a distributed framework for real-time processing of big data, in the same way that Apache Hadoop is a distributed framework for batch processing.

Why Storm was needed:

First of all, we should understand why Storm was needed when we already had Hadoop. Hadoop is well designed for processing huge amounts of data in a distributed fashion on commodity hardware, but the way it processes data does not suit real-time use cases. MapReduce (MR) jobs are disk intensive: every time a job runs, it fetches data from disk, processes it in memory in the mapper and reducer, and then writes the result back to disk, at which point the job is finished. For the next batch of data, a new job is created and the whole cycle repeats.

In real-time processing, however, data arrives continuously, so you have to keep reading data, processing it, and writing the output without stopping. And of course, all of this has to be done in parallel, quickly, and in a distributed fashion with no data loss. This is where Storm comes in.
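The difference between running a job per batch and processing records as they arrive can be sketched with Python generators. This is a single-process illustration only: it is not distributed, gives no data-loss guarantees, and does not use the Storm API. The function names and the sample sentences are hypothetical.

```python
from collections import Counter


def sentence_source(sentences):
    """Stand-in for an unbounded feed; a real source would never finish."""
    for sentence in sentences:
        yield sentence


def split_words(stream):
    """Processing step 1: break each incoming sentence into words."""
    for sentence in stream:
        for word in sentence.split():
            yield word


def running_count(stream):
    """Processing step 2: emit an updated count as soon as a word arrives."""
    counts = Counter()
    for word in stream:
        counts[word] += 1
        yield word, counts[word]


# The stages are chained; each record flows through all of them immediately,
# and no stage waits for the whole input to be available.
feed = sentence_source(["storm processes streams", "streams never end"])
updates = list(running_count(split_words(feed)))
```

Here `list(...)` is used only so the finite sample can be inspected; with a truly continuous feed the consumer would iterate over `running_count(...)` and act on each update as it is produced.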
