Big Data Learning: Setting Up Hadoop in Pseudo-Distributed Mode

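Note: we assume a Linux system on which nothing has been configured yet. The commands used below (service, chkconfig, /etc/sysconfig/network) are those of a CentOS/RHEL 6-style distribution and are run as root. The steps that follow build the pseudo-distributed setup.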

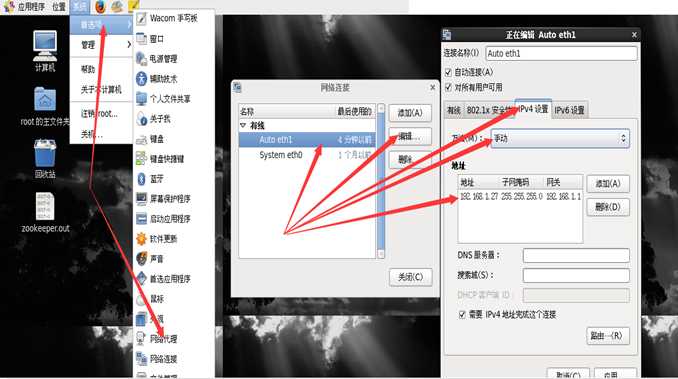

1. Set the IP address

After setting the address, restart the network service so the change takes effect: service network restart

Verify: ifconfig

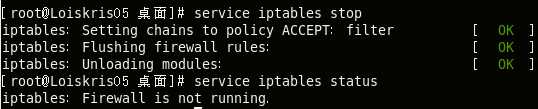

2. Stop the firewall

Run: service iptables stop

Verify: service iptables status

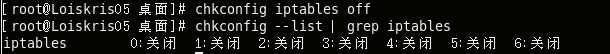

3. Disable the firewall at boot

Run: chkconfig iptables off

Verify: chkconfig --list | grep iptables

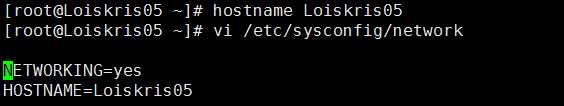

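4. Set the hostname

Run: (1) hostname Loiskris05 (sets the name for the current session)

(2) vi /etc/sysconfig/network and set HOSTNAME=Loiskris05 so the name survives a reboot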

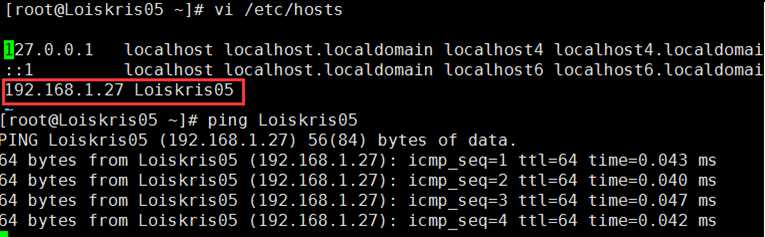

5. Bind the IP address to the hostname

Run: vi /etc/hosts

Append a line of the form "IP hostname" at the end (for example: 192.168.1.27 Loiskris05)

Verify: ping Loiskris05

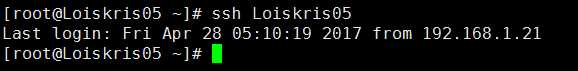
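
The /etc/hosts edit can also be scripted. The following is a minimal sketch, not part of the original procedure: add_host_entry is a hypothetical helper name, and the IP address and hostname are the example values from this step. It appends the mapping only when no uncommented line already lists the hostname, so it is safe to run more than once.

```shell
# add_host_entry FILE IP NAME  (hypothetical helper, for illustration only)
# Appends "IP NAME" to FILE unless an uncommented line already lists NAME
# as one of its hostnames (any field after the address).
add_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    if awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file" 2>/dev/null; then
        return 0    # mapping already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example (as root): add_host_entry /etc/hosts 192.168.1.27 Loiskris05
```

The example call is left commented out so that sourcing the snippet does not modify /etc/hosts by accident.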

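6. Set up passwordless SSH login

Run: (1) ssh-keygen -t rsa (press Enter at each prompt to accept the defaults and an empty passphrase)

(2) cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys (>> appends to the file instead of overwriting it)

Verify: ssh Loiskris05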

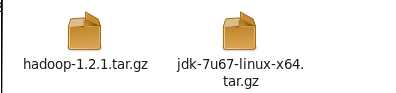
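
If ssh still asks for a password after these two commands, the usual cause is file permissions: sshd in its default strict mode ignores an authorized_keys file that other users can write to. The sketch below wraps the two commands in a rerunnable function and tightens the permissions. The function name setup_local_ssh_key and its optional directory argument are assumptions made for illustration; -q, -N and -f are standard ssh-keygen options.

```shell
# setup_local_ssh_key [SSH_DIR]  (hypothetical helper; SSH_DIR defaults to ~/.ssh)
# Creates an RSA key pair with an empty passphrase if none exists, then makes
# sure the public key is listed exactly once in authorized_keys.
setup_local_ssh_key() {
    ssh_dir=${1:-"$HOME/.ssh"}
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
    if [ ! -f "$ssh_dir/id_rsa" ]; then
        # -N "" sets an empty passphrase, -f names the key file, -q silences output
        ssh-keygen -q -t rsa -N "" -f "$ssh_dir/id_rsa" || return 1
    fi
    touch "$ssh_dir/authorized_keys"
    # -x matches the whole line, -F treats the key as a fixed string
    if ! grep -qxF -- "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys"; then
        cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
    fi
    chmod 600 "$ssh_dir/authorized_keys"
}

# Example: setup_local_ssh_key && ssh Loiskris05
```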

Next, install the software. Have the following two installation packages ready:

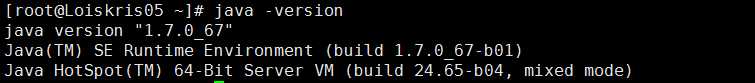

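7. Install the JDK

Run: (1) cd /usr (the two packages are assumed to have been copied here)

(2) chmod u+x jdk-6u24-linux-i586.bin

(3) ./jdk-6u24-linux-i586.bin

(4) mv jdk1.6.0_24 jdk (the installer unpacks into a directory named jdk1.6.0_24)

(5) vi /etc/profile and add the following lines:

export JAVA_HOME=/usr/jdk

export PATH=.:$JAVA_HOME/bin:$PATH

(6) source /etc/profile

Verify: java -version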
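
The two export lines can also be added to /etc/profile without opening an editor. This is a small sketch under the assumption that appending at the end of the file is acceptable; append_line_once is a hypothetical helper name. It skips a line that is already present, so repeating the step does not pile up duplicate exports.

```shell
# append_line_once FILE LINE  (hypothetical helper, for illustration only)
# Appends LINE to FILE unless FILE already contains exactly that line.
append_line_once() {
    # -x: whole-line match, -F: fixed string (no regex), -q: quiet
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example (as root). Single quotes keep $JAVA_HOME and $PATH unexpanded,
# so they are written literally and evaluated when the profile is sourced:
#   append_line_once /etc/profile 'export JAVA_HOME=/usr/jdk'
#   append_line_once /etc/profile 'export PATH=.:$JAVA_HOME/bin:$PATH'
#   . /etc/profile
```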

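8. Install Hadoop

Run (still in /usr): (1) tar -zxvf hadoop-1.1.2.tar.gz

(2) mv hadoop-1.1.2 hadoop

(3) vi /etc/profile so that it contains the following (JAVA_HOME is already there from step 7; the PATH line replaces the earlier one):

export JAVA_HOME=/usr/jdk

export HADOOP_HOME=/usr/hadoop

export PATH=.:$HADOOP_HOME/bin:$JAVA_HOME/bin:$PATH

(4) source /etc/profile

(5) Edit four configuration files in the conf directory (/usr/hadoop/conf): hadoop-env.sh, core-site.xml, hdfs-site.xml and mapred-site.xml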

1. hadoop-env.sh (JAVA_HOME must point at the JDK directory from step 7)

export JAVA_HOME=/usr/jdk

2. core-site.xml

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://Loiskris05:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/Loiskris05/tmp</value>
  </property>
</configuration>

3. hdfs-site.xml

<configuration>
  <!-- Number of replicas kept for each block -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <!-- Permission checking (disabled here) -->
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
</configuration>

4. mapred-site.xml

<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>Loiskris05:9001</value>
  </property>
</configuration>
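
A stray space inside a <value> element, for instance between the tag and a host:port string, is easy to introduce when copying these files, and Hadoop may treat it as part of the value. The sketch below is a quick grep-based check and not a real XML parser: it only inspects <value> tags whose text starts or ends on the same line as the tag. The function name check_no_padded_values is hypothetical.

```shell
# check_no_padded_values FILE...  (hypothetical helper, for illustration only)
# Prints every line where whitespace directly follows <value> or directly
# precedes </value>. Returns 1 if any such line exists, 0 otherwise.
# Unreadable files are reported by grep on stderr and do not count as a match.
check_no_padded_values() {
    if grep -nE '<value>[[:space:]]|[[:space:]]</value>' "$@"; then
        echo "whitespace found inside a <value> element" >&2
        return 1
    fi
    return 0
}

# Example:
#   cd /usr/hadoop/conf
#   check_no_padded_values core-site.xml hdfs-site.xml mapred-site.xml
```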

(6) Format the NameNode: hadoop namenode -format

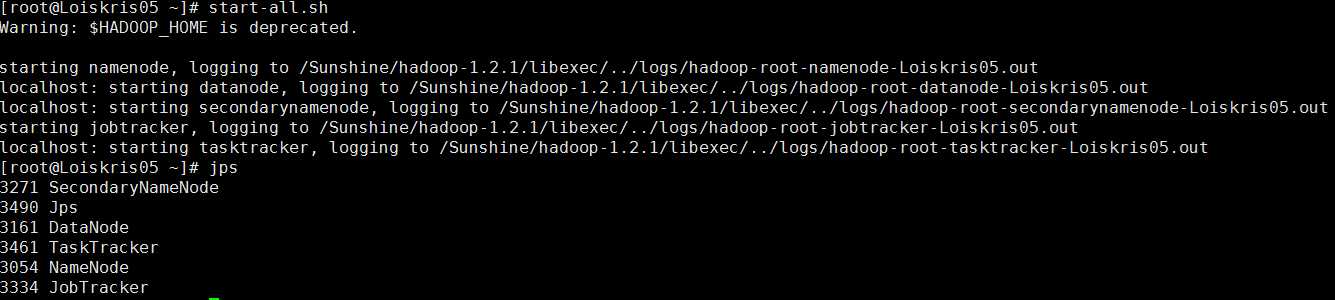

(7) Start all daemons: start-all.sh

Verify: (1) Run jps. You should see five new Java processes: NameNode, SecondaryNameNode, DataNode, JobTracker and TaskTracker.

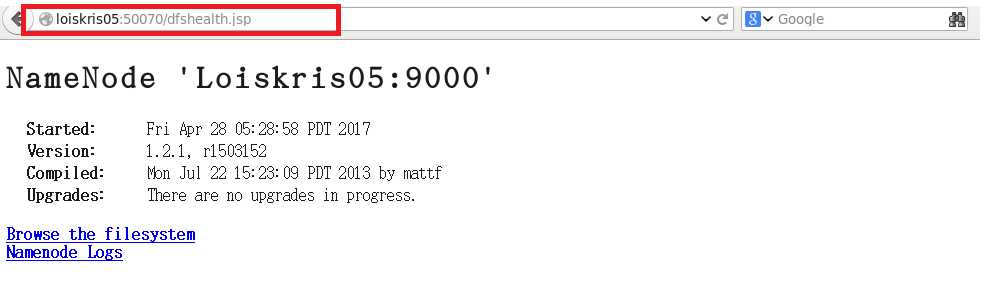
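
Checking the jps listing by eye can be replaced with a short filter. This sketch assumes the usual jps output of one "pid name" pair per line; missing_daemons is a hypothetical helper name. It prints every expected daemon that is absent, so empty output means all five are running.

```shell
# missing_daemons  (hypothetical helper, for illustration only)
# Reads `jps` output on stdin and prints each of the five expected
# Hadoop 1.x daemons that does not appear in it.
missing_daemons() {
    jps_output=$(cat)
    for d in NameNode SecondaryNameNode DataNode JobTracker TaskTracker; do
        # Compare the whole second field, so "NameNode" does not
        # match the line for "SecondaryNameNode".
        printf '%s\n' "$jps_output" |
            awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' ||
            echo "$d"
    done
}

# Example: jps | missing_daemons
```

If a daemon is reported missing, its log file under the logs directory of the Hadoop installation is the first place to look.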

(2) Open the NameNode web interface in a browser: http://Loiskris05:50070/

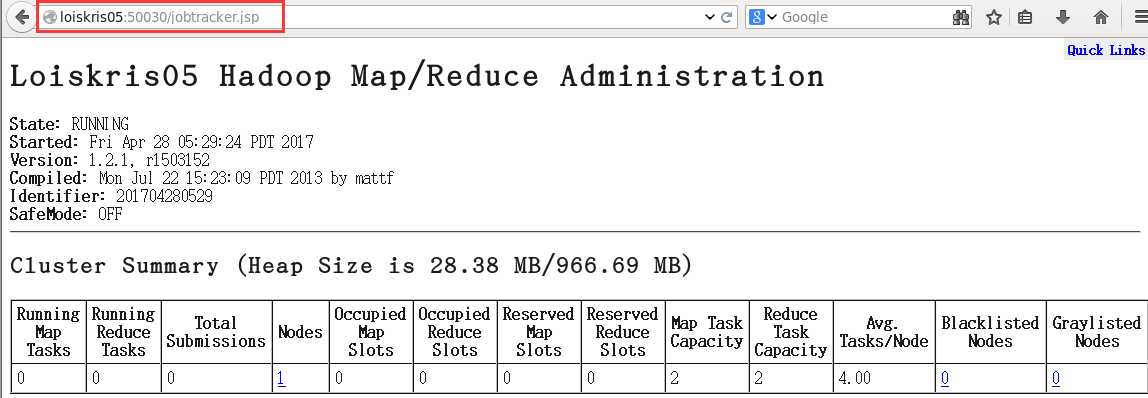

Then open the JobTracker web interface: http://Loiskris05:50030

If none of these checks shows an error, the pseudo-distributed setup is complete.
