Hadoop 2.x Introduction and Source Code Compilation

Posted llguanli


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It introduces Hadoop 2.x and how to compile it from source, and we hope it is a useful reference.

- Hadoop Common: The common utilities that support the other Hadoop modules.

- Hadoop Distributed File System (HDFS™): A distributed file system that provides high-throughput access to application data.

- Hadoop YARN: A framework for job scheduling and cluster resource management.

- Hadoop MapReduce: A YARN-based system for parallel processing of large data sets.

YARN's responsibilities:

- Allocating resources to applications deployed on YARN

- Managing resources

- Job/application scheduling

3. Skills

- Cloud computing: Hadoop 2.x

- Service bus: SOA/OSB, Dubbo

- Full-text search: Lucene, Solr, Nutch

1) 64-bit Linux operating system: CentOS 6.4, running in a VMware virtual machine

2) The virtual machine must have Internet access

Extract the source archive: tar -zxvf hadoop-2.2.0-src.tar.gz

Then change into the extracted directory and read BUILDING.txt with more BUILDING.txt (press Space to page down). Its contents are as follows:

Requirements:

* Unix System

* JDK 1.6+

* Maven 3.0 or later

* Findbugs 1.3.9 (if running findbugs)

* ProtocolBuffer 2.5.0

* CMake 2.6 or newer (if compiling native code)

* Internet connection for first build (to fetch all Maven and Hadoop dependencies)

----------------------------------------------------------------------------------

Maven main modules:

hadoop (Main Hadoop project)

- hadoop-project (Parent POM for all Hadoop Maven modules. All plugins & dependencies versions are defined here.)

- hadoop-project-dist (Parent POM for modules that generate distributions.)

- hadoop-annotations (Generates the Hadoop doclet used to generate the Java docs)

- hadoop-assemblies (Maven assemblies used by the different modules)

- hadoop-common-project (Hadoop Common)

- hadoop-hdfs-project (Hadoop HDFS)

- hadoop-mapreduce-project (Hadoop MapReduce)

- hadoop-tools (Hadoop tools like Streaming, Distcp, etc.)

- hadoop-dist (Hadoop distribution assembler)

----------------------------------------------------------------------------------
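Before starting a long build, the tools from the Requirements list above can be checked in one go. The following is a minimal bash sketch, assuming GNU coreutils (for sort -V); the helper names version_ge, check_tool and check_all are illustrative and not part of Hadoop.

```bash
#!/usr/bin/env bash
# Sketch: check the build prerequisites from BUILDING.txt before running Maven.
# Helper names are hypothetical; minimum versions come from the Requirements list.

# version_ge A B: succeed if version A >= version B (GNU sort -V ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_tool NAME: report whether a command is available on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found:   $1"
  else
    echo "MISSING: $1"
    return 1
  fi
}

# check_all: check every tool the native build needs; non-zero if any is missing.
check_all() {
  local tool status=0
  for tool in java mvn protoc cmake; do
    check_tool "$tool" || status=1
  done
  return "$status"
}
```

After sourcing the file, check_all prints one line per tool and returns non-zero if any is missing; version_ge can then compare a reported version against a minimum, for example version_ge 3.0.5 3.0 for Maven.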

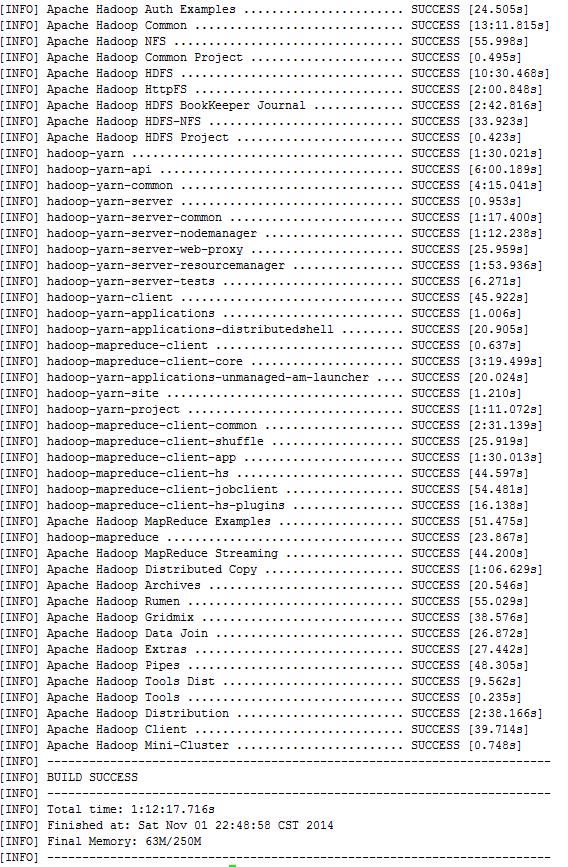

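After the build completes, you can inspect the compiled native library; the file command reports it as a 64-bit shared object: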

libhadoop.so.1.0.0: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, not stripped

[root@centos native]# pwd

/opt/hadoop-2.2.0-src/hadoop-dist/target/hadoop-2.2.0/lib/native

[root@centos native]#
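To repeat the check shown above for every shared library in the native directory, a small bash sketch can wrap file. It assumes the directory layout shown above; is_64bit_elf and check_native_dir are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: confirm the compiled native libraries are 64-bit ELF objects.
# is_64bit_elf takes the text printed by `file`, so it can be tested offline.

# is_64bit_elf "FILE_OUTPUT": succeed if `file` reported a 64-bit ELF object.
is_64bit_elf() {
  case "$1" in
    *"ELF 64-bit"*) return 0 ;;
    *) return 1 ;;
  esac
}

# check_native_dir DIR: run `file -L` (follows symlinks) on each *.so* in DIR.
check_native_dir() {
  local dir=$1 lib status=0
  for lib in "$dir"/*.so*; do
    # An unmatched glob stays literal, so a missing file means "nothing found".
    [ -e "$lib" ] || { echo "no .so files in $dir"; return 1; }
    if is_64bit_elf "$(file -L "$lib")"; then
      echo "64-bit:     $lib"
    else
      echo "NOT 64-bit: $lib"
      status=1
    fi
  done
  return "$status"
}
```

Usage: check_native_dir /opt/hadoop-2.2.0-src/hadoop-dist/target/hadoop-2.2.0/lib/native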

Install the required Linux system packages

- yum install autoconf automake libtool cmake

- yum install ncurses-devel

- yum install openssl-devel

- yum install lzo-devel zlib-devel gcc gcc-c++

Install Maven

- Download: apache-maven-3.0.5-bin.tar.gz

- Extract: tar -zxvf apache-maven-3.0.5-bin.tar.gz

- Set the environment variables: open /etc/profile and add

  - export MAVEN_HOME=/opt/apache-maven-3.0.5

  - export PATH=$PATH:$MAVEN_HOME/bin

- Apply the changes: source /etc/profile or . /etc/profile

- Verify: mvn -v
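Re-running the /etc/profile edit above appends duplicate lines. A minimal sketch that adds each export only once, assuming bash and GNU grep; append_once and setup_maven_env are hypothetical helpers, and the Maven path is the one from the export lines above.

```bash
#!/usr/bin/env bash
# Sketch: idempotently add the Maven exports to a profile file.

# append_once FILE LINE: append LINE unless FILE already has an identical line.
append_once() {
  local file=$1 line=$2
  # -x whole-line, -F fixed string; a missing file makes grep fail, so we append.
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# setup_maven_env [PROFILE]: defaults to /etc/profile (needs root to write it).
setup_maven_env() {
  local profile=${1:-/etc/profile}
  # Single quotes keep $PATH and $MAVEN_HOME literal in the written file.
  append_once "$profile" 'export MAVEN_HOME=/opt/apache-maven-3.0.5'
  append_once "$profile" 'export PATH=$PATH:$MAVEN_HOME/bin'
}
```

Usage, as root: setup_maven_env && source /etc/profile && mvn -v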

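Install ProtocolBuffer 2.5.0

- Extract: tar -zxvf protobuf-2.5.0.tar.gz

- Enter the extracted directory and run the configure script: ./configure

- Build, test, and install: make && make check && make install

- Verify: protoc --version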
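BUILDING.txt lists ProtocolBuffer 2.5.0, and the Hadoop 2.2.0 build checks for that protoc version rather than accepting any newer one, so an exact match is a safer test than a minimum version. protoc --version prints a line such as libprotoc 2.5.0; the sketch below assumes that format, and protoc_version_ok and check_protoc are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: verify that the installed protoc is exactly the version Hadoop expects.

REQUIRED_PROTOC=2.5.0

# protoc_version_ok "OUTPUT": compare the last word of OUTPUT to the required version.
protoc_version_ok() {
  [ "${1##* }" = "$REQUIRED_PROTOC" ]
}

# check_protoc: run protoc and report whether its version matches.
check_protoc() {
  local out
  out=$(protoc --version 2>/dev/null) || { echo "protoc not found"; return 1; }
  if protoc_version_ok "$out"; then
    echo "OK: $out"
  else
    echo "need libprotoc $REQUIRED_PROTOC, found: $out"
    return 1
  fi
}
```

If check_protoc reports "protoc not found" right after make install, the shared library cache may need refreshing (for example with ldconfig) before protoc can start.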

Install FindBugs

- Extract: tar -zxvf findbugs.tar.gz

- Set the environment variables:

  - export FINDBUGS_HOME=/opt/findbugs-3.0.0

  - export PATH=$PATH:$FINDBUGS_HOME/bin

- Verify: findbugs -version

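If the Java installation is broken, java -version can fail with errors such as:

Error: could not find libjava.so

Error: could not find Java 2 Runtime Environment.

With a correctly installed JDK, the version checks succeed:

java version "1.7.0_71"

Java(TM) SE Runtime Environment (build 1.7.0_71-b14)

Java HotSpot(TM) 64-Bit Server VM (build 24.71-b01, mixed mode)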

[root@centos ~]# javac -version

javac 1.7.0_71

[root@centos ~]#
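The launcher errors above often point to a broken or mismatched Java installation, and the same setup problem can surface later in the Maven build as a JAVA_HOME error. A minimal bash sketch that checks whether JAVA_HOME looks like a JDK; it only tests for executable bin/java and bin/javac, and java_home_ok and check_java_home are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check JAVA_HOME before building Hadoop.

# java_home_ok DIR: succeed if DIR is non-empty and has executable java and javac.
java_home_ok() {
  [ -n "$1" ] && [ -x "$1/bin/java" ] && [ -x "$1/bin/javac" ]
}

# check_java_home: report the current JAVA_HOME and print its java version.
check_java_home() {
  if java_home_ok "${JAVA_HOME:-}"; then
    echo "JAVA_HOME=$JAVA_HOME"
    "$JAVA_HOME/bin/java" -version
  else
    echo "JAVA_HOME is unset or does not point to a JDK: '${JAVA_HOME:-}'"
    return 1
  fi
}
```

A JRE-only directory fails this check because it has no javac, which the build needs.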

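Hadoop 2.2.0 has a known build failure in the hadoop-auth module (HADOOP-10110): its pom.xml lacks a test-scoped jetty-util dependency. Add jetty-util next to the existing jetty dependency in hadoop-common-project/hadoop-auth/pom.xml:

<dependency>
  <groupId>org.mortbay.jetty</groupId>
  <artifactId>jetty-util</artifactId>
  <scope>test</scope>
</dependency>
<dependency>
  <groupId>org.mortbay.jetty</groupId>
  <artifactId>jetty</artifactId>
  <scope>test</scope>
</dependency>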
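Before hand-editing the POM, it can help to check whether jetty-util is already declared, for example when the source tree was patched earlier. A minimal sketch; the default POM path is an assumption about the 2.2.0 source layout, and has_jetty_util is a hypothetical helper that only does a plain-text grep, not an XML-aware check.

```bash
#!/usr/bin/env bash
# Sketch: detect whether a pom.xml already declares the jetty-util artifact.

# Assumed location of the hadoop-auth POM; override by exporting POM.
POM=${POM:-/opt/hadoop-2.2.0-src/hadoop-common-project/hadoop-auth/pom.xml}

# has_jetty_util FILE: succeed if FILE contains the jetty-util artifactId line.
has_jetty_util() {
  grep -q '<artifactId>jetty-util</artifactId>' "$1"
}
```

Usage: has_jetty_util "$POM" && echo "already present" || echo "add the jetty-util dependency"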

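Go to the Hadoop source directory /opt/hadoop-2.2.0-src and run the build. The options from BUILDING.txt are listed below; the parts in square brackets are optional: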

Building distributions:

Create binary distribution without native code and without documentation:

$ mvn package -Pdist -DskipTests -Dtar

Create binary distribution with native code and with documentation:

$ mvn package -Pdist,native,docs -DskipTests -Dtar

Create source distribution:

$ mvn package -Psrc -DskipTests

Create source and binary distributions with native code and documentation:

$ mvn -e -X package -Pdist,native[,docs,src] -DskipTests -Dtar

Create a local staging version of the website (in /tmp/hadoop-site)

$ mvn clean site; mvn site:stage -DstagingDirectory=/tmp/hadoop-site
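The bracketed part of the combined command means docs and src are optional profiles on top of dist,native. A hedged sketch that only prints the resulting command line and leaves out the -e -X debug flags; dist_build_cmd is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: build the distribution command with optional extra Maven profiles.

# dist_build_cmd [docs] [src]: print the mvn command; rejects unknown profiles.
dist_build_cmd() {
  local profiles=dist,native p
  for p in "$@"; do
    case "$p" in
      docs|src) profiles="$profiles,$p" ;;
      *) echo "unknown profile: $p" >&2; return 1 ;;
    esac
  done
  echo "mvn package -P$profiles -DskipTests -Dtar"
}
```

Printing the command first makes it easy to review before running it from /opt/hadoop-2.2.0-src; dist_build_cmd with no arguments gives the dist,native build used in the notes at the end.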

- Go to the Maven configuration directory /opt/modules/apache-maven-3.0.5/conf and edit settings.xml

* Modify the <mirrors> section:

<mirror>
  <id>nexus-osc</id>
  <mirrorOf>*</mirrorOf>
  <name>Nexus osc</name>
  <url>http://maven.oschina.net/content/groups/public/</url>
</mirror>

* Modify the <profiles> section:

<profile>
  <id>jdk-1.6</id>
  <activation>
    <jdk>1.6</jdk>
  </activation>
  <repositories>
    <repository>
      <id>nexus</id>
      <name>local private nexus</name>
      <url>http://maven.oschina.net/content/groups/public/</url>
      <releases>
        <enabled>true</enabled>
      </releases>
      <snapshots>
        <enabled>false</enabled>
      </snapshots>
    </repository>
  </repositories>
  <pluginRepositories>
    <pluginRepository>
      <id>nexus</id>
      <name>local private nexus</name>
      <url>http://maven.oschina.net/content/groups/public/</url>
      <releases>
        <enabled>true</enabled>
      </releases>
      <snapshots>
        <enabled>false</enabled>
      </snapshots>
    </pluginRepository>
  </pluginRepositories>
</profile>
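After editing settings.xml, the mirror URL can be read back to confirm the change landed in the mirrors section. A minimal sed-based sketch, assuming each url element sits on its own line as in the snippet above (it is not a real XML parser); mirror_urls is a hypothetical helper. Maven's own view of the merged settings can also be printed with mvn help:effective-settings.

```bash
#!/usr/bin/env bash
# Sketch: list the <url> values that appear inside <mirror>...</mirror> blocks.

# mirror_urls FILE: the address range limits matching to mirror blocks, so
# repository URLs inside <profiles> are ignored. ':' is the s/// delimiter.
mirror_urls() {
  sed -n '/<mirror>/,/<\/mirror>/s:.*<url>\(.*\)</url>.*:\1:p' "$1"
}
```

Usage: mirror_urls /opt/modules/apache-maven-3.0.5/conf/settings.xml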

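- Copy the configuration

Copy this settings file into the user's home directory so that Maven uses this configuration for every build.

* Check whether the home directory /home/hadoop contains a .m2 directory; if it does not, create it: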

$ cd /home/hadoop

$ mkdir .m2

* Copy the file:

$ cp /opt/modules/apache-maven-3.0.5/conf/settings.xml ~/.m2/
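The copy steps above can be combined into one helper that creates .m2 if needed and keeps a backup of an existing settings.xml. Passing the home directory explicitly avoids a subtle mismatch: when the commands run as root, ~ points to /root rather than /home/hadoop. install_user_settings and the .bak suffix are illustrative choices.

```bash
#!/usr/bin/env bash
# Sketch: install a Maven settings.xml into a user's ~/.m2 directory.

# install_user_settings SRC [HOME_DIR]: copy SRC to HOME_DIR/.m2/settings.xml.
install_user_settings() {
  local src=$1 home=${2:-$HOME}
  local dest="$home/.m2/settings.xml"
  [ -f "$src" ] || { echo "no such file: $src" >&2; return 1; }
  mkdir -p "$home/.m2" || return 1
  # Keep the previous settings, if any, so the change is easy to undo.
  if [ -f "$dest" ]; then
    cp "$dest" "$dest.bak"
  fi
  cp "$src" "$dest"
}
```

Usage: install_user_settings /opt/modules/apache-maven-3.0.5/conf/settings.xml /home/hadoop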

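Edit /etc/resolv.conf (vi /etc/resolv.conf) and add public DNS servers so the virtual machine can resolve hostnames when downloading dependencies: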

nameserver 8.8.8.8

nameserver 8.8.4.4
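To confirm that the nameserver lines were saved, the configured servers can be listed. A minimal awk sketch; nameservers is a hypothetical helper, and because it only reads the file, a real lookup (for example getent hosts maven.oschina.net) remains the better end-to-end test.

```bash
#!/usr/bin/env bash
# Sketch: print the address from every "nameserver" line of a resolv.conf file.

# nameservers [FILE]: defaults to /etc/resolv.conf; comments and other keys are skipped.
nameservers() {
  awk '$1 == "nameserver" { print $2 }' "${1:-/etc/resolv.conf}"
}
```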

Importing projects to eclipse

When you import the project to eclipse, install hadoop-maven-plugins at first.

$ cd hadoop-maven-plugins

$ mvn install

Then, generate eclipse project files.

$ mvn eclipse:eclipse -DskipTests

At last, import to eclipse by specifying the root directory of the project via

[File] > [Import] > [Existing Projects into Workspace].

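Notes:

If the build reports any JDK or JRE problem, such as "JAVA_HOME environment variable is not set.", make sure JAVA_HOME is set and points to the JDK installation directory.

If the build fails with "hadoop-hdfs/target/findbugsXml.xml does not exist", remove docs from the profile list and resume the build from the hadoop-pipes module: mvn package -Pdist,native -DskipTests -Dtar -rf :hadoop-pipes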