[卷积神经网络]-FOR CNN OUTPUT CALCULATE

Posted Jucway

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了[卷积神经网络]-FOR CNN OUTPUT CALCULATE相关的知识,希望对你有一定的参考价值。

文章目录

卷积计算

In a convolutional neural network, there are 3 main parameters that need to be tweaked to modify the behavior of a convolutional layer.

CNN 中有三个主要的参数需要调整和修改

These parameters are filter size, stride and zero padding.

分别为卷积核大小,卷积操作的步长以及填充大小

The size of the output feature map generated depends on the above 3 important parameters.

Size of the filters play an important role in finding the key features. It is difficult to select an optimal size of the filter.

每一层输出的特征图都取决于这三个主要的参数,同时在选择最优的和大小的时候是比较困难的;

It all depends on the application. A larger size kernel can overlook at the features and could skip the essential details in the images whereas a smaller size kernel could provide more information leading to more confusion. Thus there is a need to determine the most suitable size of the kernel/filter .

在进行卷积操作的时候,对于卷积核大小的选择是需要思考的,太小,可以得到更多细节信息

Methods like Gaussian pyramids(set of different sized kernels) can be used to test the efficiency of the feature extraction and appropriate size of kernel is determined. Added to the filter size, it is very important to understand and decide the size of stride and the padding.

Stride actually controls the number of steps that you move the filter over the input image. When the stride is 1, we move the filter one pixel at a time. When we set the stride to 2 or 3 (uncommon), we move the filter 2 or 3 pixels at a time depending on the stride. The value of the stride also controls the size of the output volume generated by the convolutional layer. Bigger the stride, smaller the output volume size. For example if the input image is 7×7

and stride is 1, the output volume will be 5×5. On the other hand if we increase the stride to be 2, the output volume reduces to 3×3.

Stride is normally set in a way so that the output volume is an integer and not a fraction .

Next important parameter is the zero padding. Zero padding refers to padding the input volume with zeros around the border. The zero padding also allows us to control the spatial size of the output volume. If we do not add any zero padding and we use a stride of 1, the spatial size of the output volume is reduced. However, with the first few convolutional layers in the network we would want to preserve the spatial size and make sure that the input volume is equal to the output volume. This is where the zero padding is helpful. In the 7×7

input image example, if we use a stride of 1 and a zero padding of 1, then the output volume is also equal to 7×7.

The formula to be used to measure the padding value to get the spatial size of the input and output volume to be the same with stride 1 is

K−12

where K is the filter size.

Finally, the formula to calculate the output size is equal to

O=W−K+2PS+1

where O is the output height/length, W is the input height/length, K is the filter size, P is the padding, and S is the stride.

For example, if we take S=1, P=2 with W=200 and K=5 and using 40 filters, then the output size will 200×200×40

using the above formula.

On the other hand if we use S=1,P=1

, then the output size would be 198×198×40.

I know it is a lengthy answer but just wanted to clarify how filter size, stride and padding work to control the output size in a convolutional layer

激活函数

Here is how to look at it.

You get a patch of size k×k

centered at (i,j)

from a feature map, do a dot product with the kernel weights to compute the result at that position and then move to the next position and repeat.

Note. That describes a cross-correlation. For a convolution we just flip the weights before the dot product which makes no difference at all.

So how is that non-linear?

The dot product is linear and all you are doing is scanning it over an input feature map at different locations. So we can assume the result of that scanning is also linear.

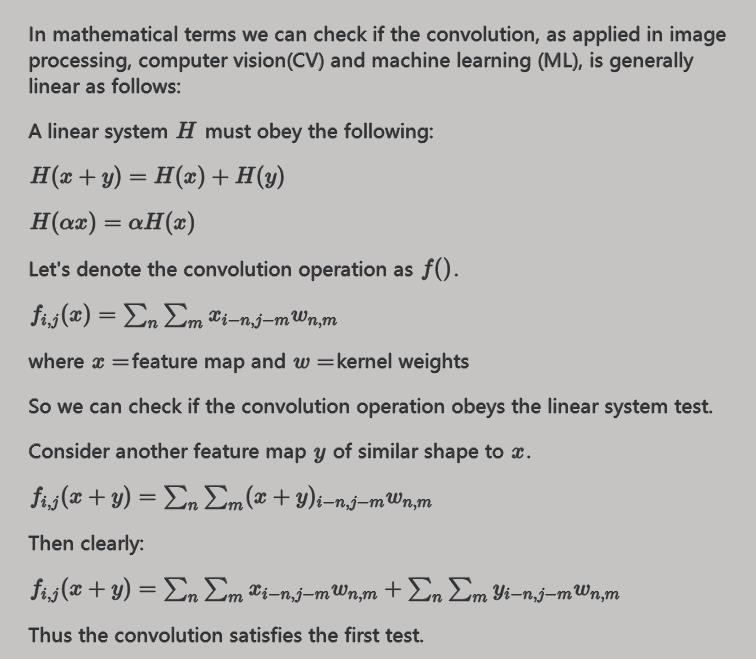

In mathematical terms we can check if the convolution, as applied in image processing, computer vision(CV) and machine learning (ML), is generally linear as follows:

A linear system H

must obey the following:

H(x+y)=H(x)+H(y)

H(αx)=αH(x)

Let’s denote the convolution operation as f()

.

fi,j(x)=∑n∑mxi−n,j−mwn,m

where x=

feature map and w=

kernel weights

So we can check if the convolution operation obeys the linear system test.

Consider another feature map y

of similar shape to x

.

fi,j(x+y)=∑n∑m(x+y)i−n,j−mwn,m

Then clearly:

fi,j(x+y)=∑n∑mxi−n,j−mwn,m+∑n∑myi−n,j−mwn,m

Thus the convolution satisfies the first test.

Also

fi,j(αx)=∑n∑mαxi−n,j−mwn,m

Which can easily be reduced to:

fi,j(αx)=α∑n∑mxi−n,j−mwn,m

Which proves that the convolution operation in convolutional neural networks (CNN) is itself linear.

Thus without non-linear activation functions the whole multi-layer(deep) CNN would collapse into a single equivalent convolutional layer.

Those activation functions play a critical role of introducing non-linearity in CNNs.

Hope this helps.

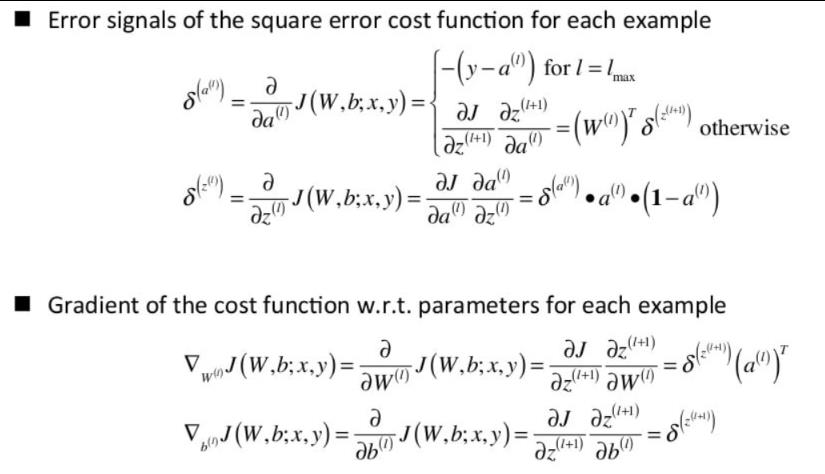

Backward gradient

pytorch and tensorflow difference

I use PyTorch at home and TensorFlow at work. The other way around would be also great, which kinda gives you a hint.

There are two “general use cases”. Training and inference. Each of them has its own challenges, but if you have only training (students and researchers) or mostly inference and implementation (developers), you start focusing on different things.

TensorFlow is built around a concept of Static Computational Graph (SCG). That means, first you define everything that is going to happen inside your framework, then you run it. It has two great upsides:

When a model becomes obscenely huge, it’s easier to understand it, because everything is basically a giant function that never changes.

It’s always easier to optimize a static computational graph, because it allows all kinds of tricks: preallocating buffers, fusing layers, precompiling the functions.

If this is what matters most for you, then your choice is probably TensorFlow.

A network written in PyTorch is a Dynamic Computational Graph (DCG). It allows you to do any crazy thing you want to do.

Dynamic data structures inside the network. You can have any number of inputs at any given point of training in PyTorch. Lists? Stacks? No problem.

Networks are modular. This is my favorite feature, actually. Each part is implemented separately, and you can debug it separately, unlike a monolithic TF construction.

TensorFlow has very wide support for parallel and distributed training. If you have 100 GPU…well, if you have 100 GPU, stop wasting time here and go check your networks, something must be already finished.

I like many interesting ways to optimize different processes in TF, from parallel training with queues to almost-built-in weight quantization[1] . It’s a great choice for our team and works marvelously in production.

PyTorch allows to write a lot of things very quickly without visible losses in performance during training. It’s hard to overestimate the importance of this, especially when you have strict deadlines and a lot of ideas to validate.

Try a few things with both and see the difference:

Skip some layers

Remove a few layers from a pretrained model

Mix activation functions / change them

There’s no compelling reason to do everything in one framework and miss out on a great deal of opportunities and convenient features. Different research groups will keep publishing code written in both, because there’s a place for each paradigm.

反向传播

以上是关于[卷积神经网络]-FOR CNN OUTPUT CALCULATE的主要内容,如果未能解决你的问题,请参考以下文章