Building a K8S Environment from Scratch

Posted 大象无形,大音希声


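There are plenty of books about K8S on the market, but few articles on how to actually set up a cluster. Drawing on my own experience, I'll walk through building a K8S cluster on Alibaba Cloud CentOS servers.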

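Prerequisites

An Alibaba Cloud account, or two VMs of your own: one acts as the K8S Master server and the other as the K8S Worker node.

I created two pay-as-you-go instances on Alibaba Cloud.

Master server: k8sMaster 172.24.137.71

Worker server: k8sWorker 172.24.137.72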

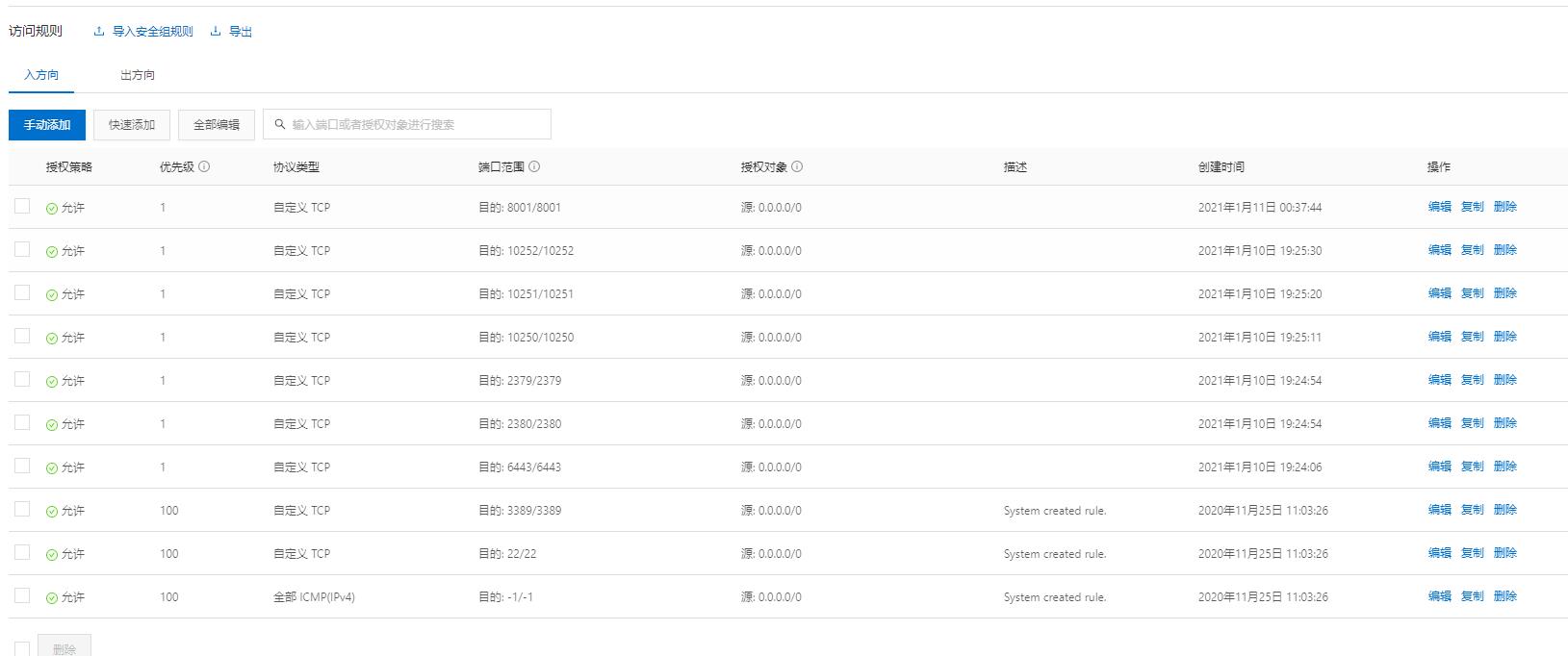

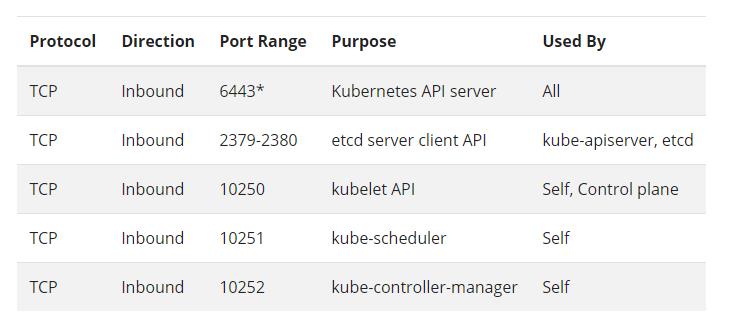

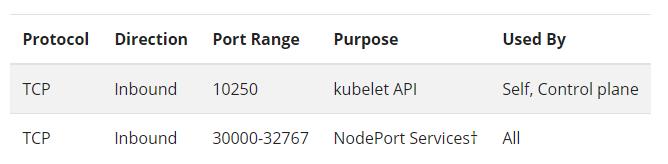

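Because the Master and Worker nodes must reach each other over the network, the ports below need to be opened in line with K8S's firewall requirements.

Create two security groups:

Add the Master node to the following security group

Add the Worker node to the following security group

For details, see the K8S firewall documentation.

Firewall settings to open on the Master node:

Firewall settings to open on the Worker node: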
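As a rough guide to what these rules need to cover, the sketch below lists the inbound TCP ports that the upstream Kubernetes documentation named for kubeadm clusters around v1.20, and probes a single port using bash's /dev/tcp. The port list is an assumption drawn from that documentation rather than from the security groups used in this article, and the function names `required_ports` and `port_open` are illustrative.

```shell
#!/usr/bin/env bash
# Inbound TCP ports per node role, as listed in the upstream Kubernetes docs
# around v1.20 (assumption: not taken from this article's security groups).
required_ports() {
  case "$1" in
    # API server, etcd, kubelet, kube-scheduler, kube-controller-manager
    master) echo "6443 2379-2380 10250 10251 10252" ;;
    # kubelet, NodePort services
    worker) echo "10250 30000-32767" ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Return 0 if a TCP connection to host ($1) on port ($2) succeeds within 2 seconds.
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}
```

For example, running `port_open 172.24.137.71 6443 && echo reachable` on the Worker checks whether the Master's API server port is reachable once the cluster is up.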

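1. Install Docker

First, install Docker on both the Master and Worker servers. To speed up installation, point the Docker package repository at the Alibaba Cloud mirror in China. Downloading from the overseas default repository can take an hour or more, whereas with http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo the download and installation can finish within minutes.

1.1 Switch the repository to the Alibaba Cloud mirror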

sudo yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

1.2 Install Docker

sudo yum install -y yum-utils device-mapper-persistent-data lvm2
sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum list docker-ce --showduplicates | sort -r
sudo yum makecache fast
yum install docker-ce-18.06.3.ce-3.el7 docker-ce-cli-18.06.3.ce-3.el7 containerd.io

After installation, run:

systemctl enable docker
systemctl start docker

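1.3 Point Docker at Alibaba Cloud's own Docker Hub mirror (optional)

Once Docker is installed, it is advisable to switch its registry to a mirror inside China. Alibaba Cloud's image accelerator is recommended and is free with an Alibaba Cloud account: Alibaba Cloud -> Container Registry -> Image Center -> Image Accelerator.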

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://xxxxx.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

Here, xxxxx stands for the Docker Hub registry mirror assigned to your own Alibaba Cloud account.

Then run:

sudo systemctl daemon-reload
sudo systemctl restart docker

2. Install K8S

The installation steps are identical on the Master and Worker machines.

2.1 Switch the K8S repository to the Alibaba Cloud mirror

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kube*
EOF

2.2 Install the K8S packages

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable kubelet && systemctl start kubelet

2.3 Common network configuration for K8S

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system
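On many systems the net.bridge.* keys exist only after the br_netfilter kernel module has been loaded, so it can be worth confirming that the two settings actually took effect. The sketch below is one way to check. The function name `bridge_sysctls_ok` is illustrative, and its optional argument (a sysctl root, defaulting to /proc/sys) is there only so the check can be tried against a scratch directory.

```shell
#!/usr/bin/env bash
# Succeed only if both bridge sysctls are readable and set to 1.
# $1 (optional): sysctl root directory, defaults to /proc/sys.
bridge_sysctls_ok() {
  local root="${1:-/proc/sys}" key file
  for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    file="$root/net/bridge/$key"
    if [ ! -r "$file" ]; then
      echo "missing: $file (is br_netfilter loaded?)" >&2
      return 1
    fi
    if [ "$(cat "$file")" != "1" ]; then
      echo "not enabled: $file" >&2
      return 1
    fi
  done
  return 0
}
```

A typical sequence would be `modprobe br_netfilter`, then `sysctl --system`, then `bridge_sysctls_ok`.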

3. Configure the Master node and install Dashboard

3.1 Initialize the Master node

kubeadm config print init-defaults > kubeadm-init.yaml

Change two settings in the template file:

Change advertiseAddress: 1.2.3.4 to this machine's address

Change imageRepository: k8s.gcr.io to imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
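If you would rather script these two edits than make them by hand, a sed-based sketch along the following lines should work with GNU sed. The function name `patch_kubeadm_init` is illustrative, and the patterns assume the template still has the layout printed by `kubeadm config print init-defaults`.

```shell
#!/usr/bin/env bash
# Apply the two edits to a kubeadm init template in place (GNU sed).
# $1: path to the template, $2: the address this machine should advertise.
patch_kubeadm_init() {
  local file="$1" addr="$2"
  local repo="registry.cn-hangzhou.aliyuncs.com/google_containers"
  sed -i \
    -e "s|^\([[:space:]]*advertiseAddress:\).*|\1 ${addr}|" \
    -e "s|^\(imageRepository:\).*|\1 ${repo}|" \
    "$file"
}
```

For the Master in this article the call would be `patch_kubeadm_init kubeadm-init.yaml 172.24.137.71`.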

[root@k8sMaster ~]# cat kubeadm-init.yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.24.137.71
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8smaster
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}

3.2 Pull the Docker images that the K8S Master node depends on

kubeadm config images pull --config kubeadm-init.yaml

3.3 Run the initialization

kubeadm init --config kubeadm-init.yaml

Save the last two lines of the output: kubeadm join … is the command a Worker node has to run in order to join the cluster.

Its format looks like this:

kubeadm join 172.24.137.71:6443 --token 6s1u3g.rns2x03czqjux1x1 \
    --discovery-token-ca-cert-hash sha256:da3ffd7e60b1ad55f2a95ea6375f15deb21d09299eb0b803b9f7fb8a4ad0a356

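3.4 Configure the kubectl command

kubectl relies on the /etc/kubernetes/admin.conf file. To set it up, create a .kube directory under the home directory of the currently logged-in user (kubectl reads its configuration from this folder by default), then copy the file into it:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

kubectl commands can now be run: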

[root@k8sMaster ~]# kubectl
kubectl controls the Kubernetes cluster manager.

 Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):
  create        Create a resource from a file or from stdin.
  expose        Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service
  run           Run a particular image on the cluster
  set           Set specific features on objects

Basic Commands (Intermediate):
  explain       Documentation of resources
  get           Display one or many resources
  edit          Edit a resource on the server
  delete        Delete resources by filenames, stdin, resources and names, or by resources and label selector

Deploy Commands:
  rollout       Manage the rollout of a resource
  scale         Set a new size for a Deployment, ReplicaSet or Replication Controller
  autoscale     Auto-scale a Deployment, ReplicaSet, or ReplicationController

Cluster Management Commands:
  certificate   Modify certificate resources.
  cluster-info  Display cluster info
  top           Display Resource (CPU/Memory/Storage) usage.
  cordon        Mark node as unschedulable
  uncordon      Mark node as schedulable
  drain         Drain node in preparation for maintenance
  taint         Update the taints on one or more nodes

Troubleshooting and Debugging Commands:
  describe      Show details of a specific resource or group of resources
  logs          Print the logs for a container in a pod
  attach        Attach to a running container
  exec          Execute a command in a container
  port-forward  Forward one or more local ports to a pod
  proxy         Run a proxy to the Kubernetes API server
  cp            Copy files and directories to and from containers.
  auth          Inspect authorization
  debug         Create debugging sessions for troubleshooting workloads and nodes

Advanced Commands:
  diff          Diff live version against would-be applied version
  apply         Apply a configuration to a resource by filename or stdin
  patch         Update field(s) of a resource
  replace       Replace a resource by filename or stdin
  wait          Experimental: Wait for a specific condition on one or many resources.
  kustomize     Build a kustomization target from a directory or a remote url.

Settings Commands:
  label         Update the labels on a resource
  annotate      Update the annotations on a resource
  completion    Output shell completion code for the specified shell (bash or zsh)

Other Commands:
  api-resources Print the supported API resources on the server
  api-versions  Print the supported API versions on the server, in the form of "group/version"
  config        Modify kubeconfig files
  plugin        Provides utilities for interacting with plugins.
  version       Print the client and server version information

Usage:
  kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.
Use "kubectl options" for a list of global command-line options (applies to all commands).

3.5 Configure networking on the Master node

wget https://docs.projectcalico.org/v3.8/manifests/calico.yaml
kubectl apply -f calico.yaml

3.6 Install Dashboard

3.6.1 Install Dashboard

The commands to install Dashboard are as follows:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta4/aio/deploy/recommended.yaml
kubectl apply -f recommended.yaml
kubectl get pods --all-namespaces | grep dashboard
kubernetes-dashboard dashboard-metrics-scraper-7445d59dfd-pvr5q 1/1 Running 3 6d23h
kubernetes-dashboard kubernetes-dashboard-5d6fdccd5-l5bk7 1/1 Running 3 6d23h
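The pods can take a little while to reach the Running state. As a small convenience, the sketch below reads the output of `kubectl get pods --all-namespaces` on stdin and succeeds only when at least one dashboard pod is listed and all of them are Running. The function name `dashboard_pods_running` is illustrative, and the check assumes the column order shown above (namespace, name, ready, status).

```shell
#!/usr/bin/env bash
# Read `kubectl get pods --all-namespaces` output on stdin.
# Exit 0 only if at least one dashboard pod is listed and every
# dashboard pod has STATUS (4th column) equal to Running.
dashboard_pods_running() {
  awk '/dashboard/ { seen++; if ($4 != "Running") bad++ }
       END { if (seen > 0 && bad == 0) exit 0; exit 1 }'
}
```

It would be used as `kubectl get pods --all-namespaces | dashboard_pods_running && echo "dashboard is up"`.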

3.6.2 Create a Dashboard user

Create a user for logging in to Dashboard. Create a file named dashboard-adminuser.yaml with the following content:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

Then run the following command:

kubectl apply -f dashboard-adminuser.yaml

3.6.3 Generate the Dashboard certificate

grep 'client-certificate-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.crt
grep 'client-key-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.key
openssl pkcs12 -export -clcerts -inkey kubecfg.key -in kubecfg.crt -out kubecfg.p12 -name "kubernetes-client"

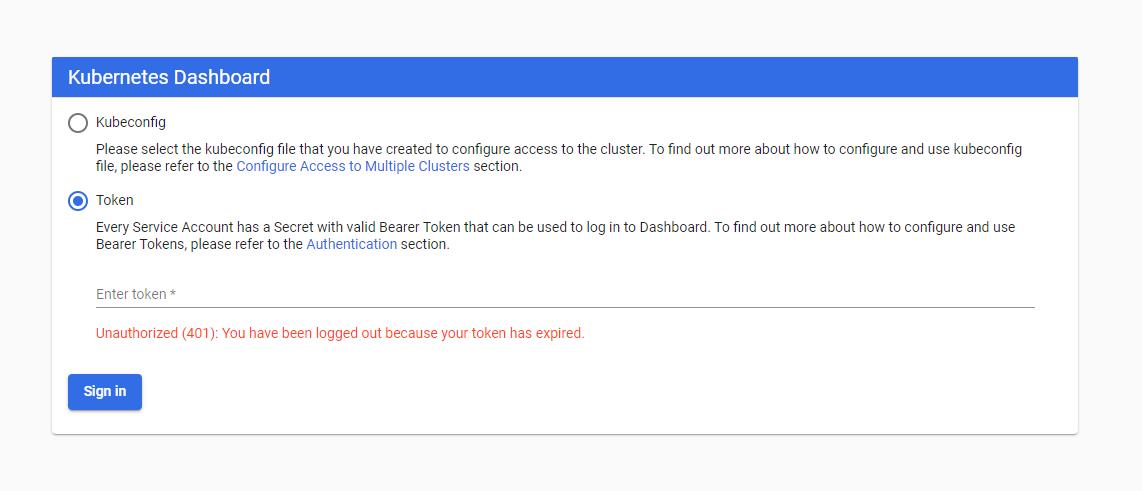

3.6.4 Log in to Dashboard

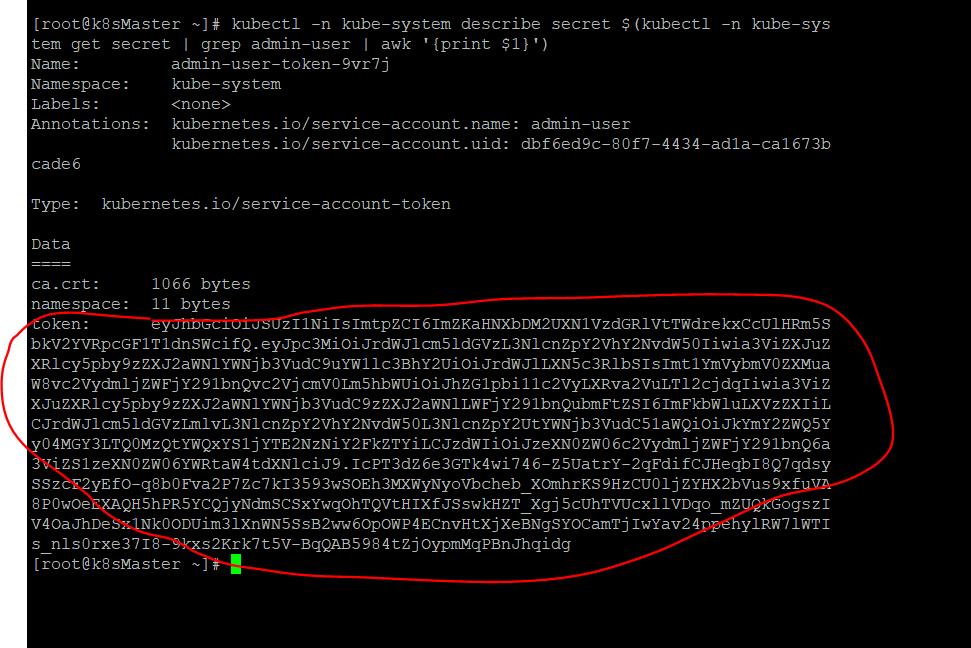

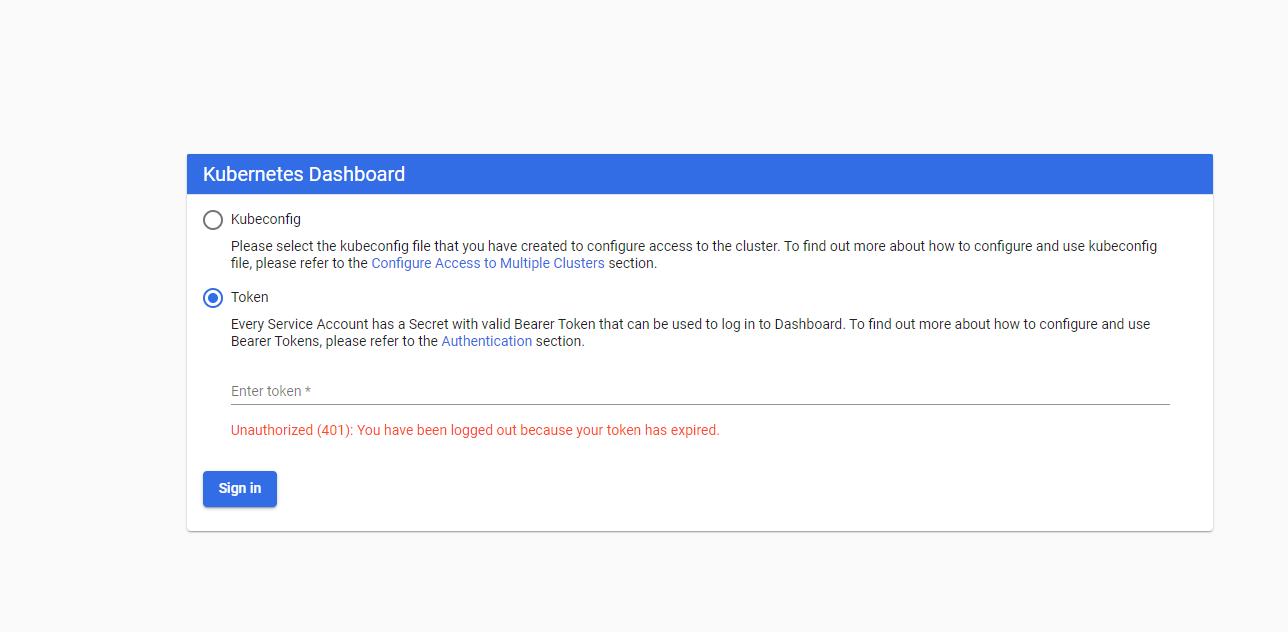

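When you open Dashboard, you first land on the authentication page shown here.

We usually use the second method, which is to obtain a Token. The command is:

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Copy the Token value from the output, taking care not to include the leading spaces.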
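To avoid picking up stray whitespace when copying by hand, the token can also be extracted with awk. The sketch below assumes the usual `kubectl describe secret` layout, in which the token appears on a line starting with `token:`. The function name `extract_token` is illustrative.

```shell
#!/usr/bin/env bash
# Read `kubectl describe secret` output on stdin and print only the
# token value, without the "token:" label or surrounding whitespace.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

Piping the describe command above into `extract_token` prints just the token, ready to paste into the login page.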

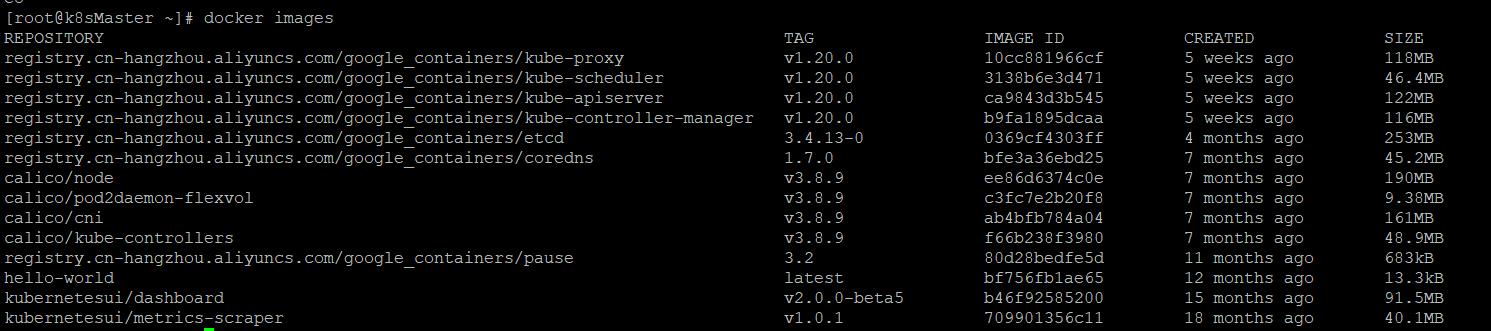

At this point, the full Docker list is as follows:

4. Configure the Worker node and join it to the Master node

Joining a Worker node is straightforward: just run the “kubeadm join” command mentioned above.

kubeadm join 172.24.137.71:6443 --token 6s1u3g.rns2x03czqjux1x1 \
    --discovery-token-ca-cert-hash sha256:da3ffd7e60b1ad55f2a95ea6375f15deb21d09299eb0b803b9f7fb8a4ad0a356

5. Common problems

5.1 kubectl fails on the Worker node

Running kubectl on a Worker (slave) node of the K8S cluster fails with: The connection to the server localhost:8080 was refused - did you specify the right host or port?

mkdir -p /root/.kube
scp root@172.24.137.71:/root/.kube/* /root/.kube
# or, alternatively:
# cp -i /etc/kubernetes/admin.kubeconfig /root/.kube/config
kubectl get nodes

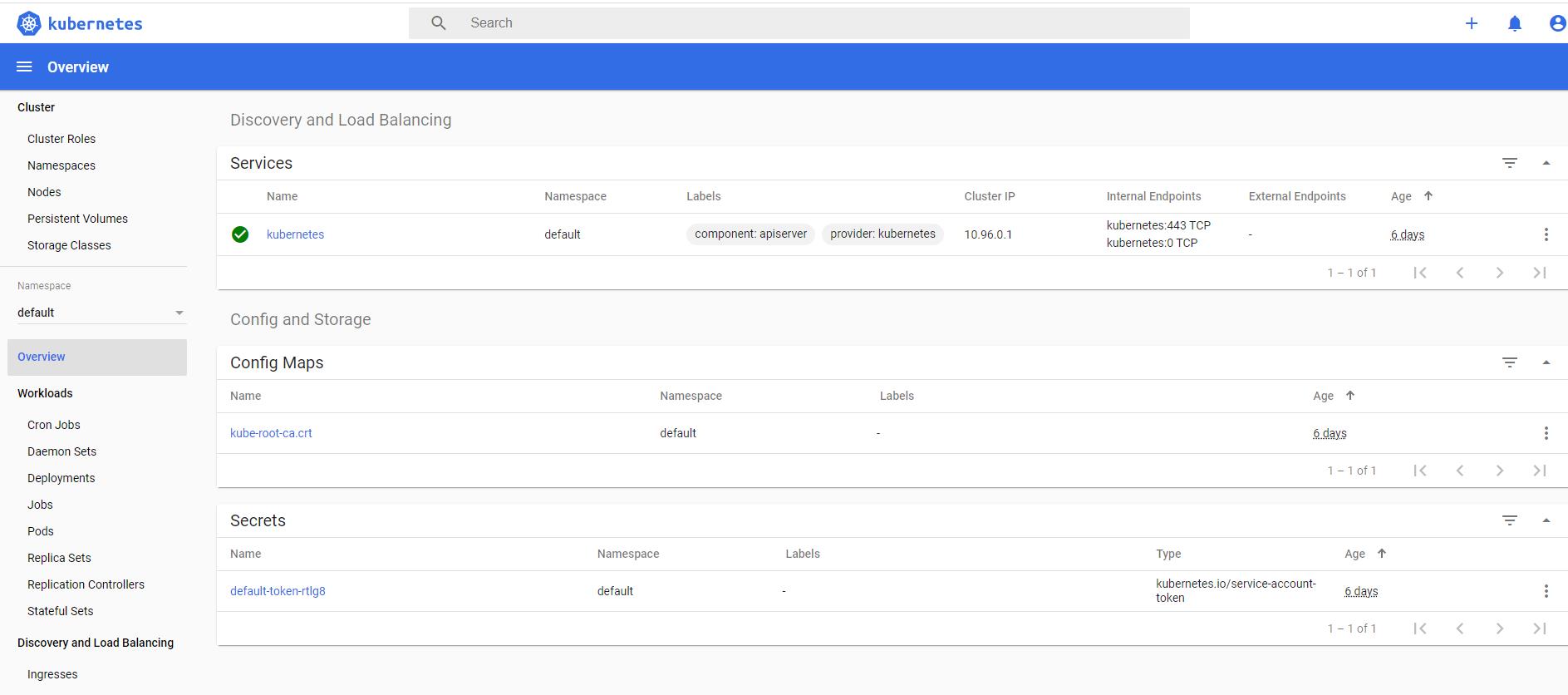

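5.2 The Dashboard login page cannot be reached over the public network

Over the public network, Dashboard fails to open the login page.

Solution:

Run the following command on the Master node. Note that it binds cluster-admin to system:anonymous, which gives unauthenticated requests full control of the cluster, so it is only suitable for a throwaway test environment.

kubectl create clusterrolebinding test:anonymous --clusterrole=cluster-admin --user=system:anonymous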

5.3 Joining the Master node hangs at “preflight”

When a Worker node tries to join the Master node, the command hangs at the “preflight” stage:

kubeadm join 172.24.137.71:6443 --token 6s1u3g.rns2x03czqjux1x1 \
    --discovery-token-ca-cert-hash sha256:da3ffd7e60b1ad55f2a95ea6375f15deb21d09299eb0b803b9f7fb8a4ad0a356

Fix: the cause is that the Token generated by the Master has expired.

Log in to the Master server.

5.3.1 The Token generated the first time has expired

kubeadm token list            # list tokens; on first login the list should be empty
kubeadm token create --ttl 0  # never expires
kubeadm token create          # valid for one day

5.3.2 Look up the CA Token; the CA Token normally does not expire

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
46f6cf1d84d0eadb4f6e7f05b908e5572025886d9f134db27f92b98e1c3dd3ed   # token

5.3.3 Regenerate the command for the Worker to join the Master node

kubeadm join 172.24.137.71:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:da3ffd7e60b1ad55f2a95ea6375f15deb21d09299eb0b803b9f7fb8a4ad0a356
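Since the join command is just the API endpoint, a bootstrap token, and the CA certificate hash glued together, it is easy to assemble with a mistyped value. The sketch below builds the command from those three parts and rejects values that do not have the expected shape (a token of the form 6 characters, a dot, then 16 characters, and a 64-character hex hash). The function name `build_join_command` is illustrative. kubeadm can also print a ready-made command itself via `kubeadm token create --print-join-command`.

```shell
#!/usr/bin/env bash
# Assemble a `kubeadm join` command from its three parts, rejecting
# values that do not have the expected shape.
# $1: API endpoint (host:port), $2: bootstrap token, $3: CA cert hash (hex, no prefix).
build_join_command() {
  local endpoint="$1" token="$2" hash="$3"
  if [[ ! "$token" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "token must look like abcdef.0123456789abcdef" >&2
    return 1
  fi
  if [[ ! "$hash" =~ ^[0-9a-f]{64}$ ]]; then
    echo "CA hash must be 64 lowercase hex characters" >&2
    return 1
  fi
  echo "kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${hash}"
}
```

On the Master, the token would come from `kubeadm token create` and the hash from the openssl pipeline shown in this section.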

