Understanding Android Tunnel Mode in Depth

Posted by zhanghui_cuc


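What is Tunnel mode?

Put simply, Tunnel mode is an offload playback mode for video: decoding, A/V sync, and rendering move from the AP (Application Processor) to the DSP, and the Android framework may not need to take part at all during playback. The result is better performance at lower power, which makes it especially well suited to playing UHD, HDR, high-bitrate, and high-frame-rate content on TV devices.

Tunnel mode for audio has existed since KitKat, where it saves power during music playback.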

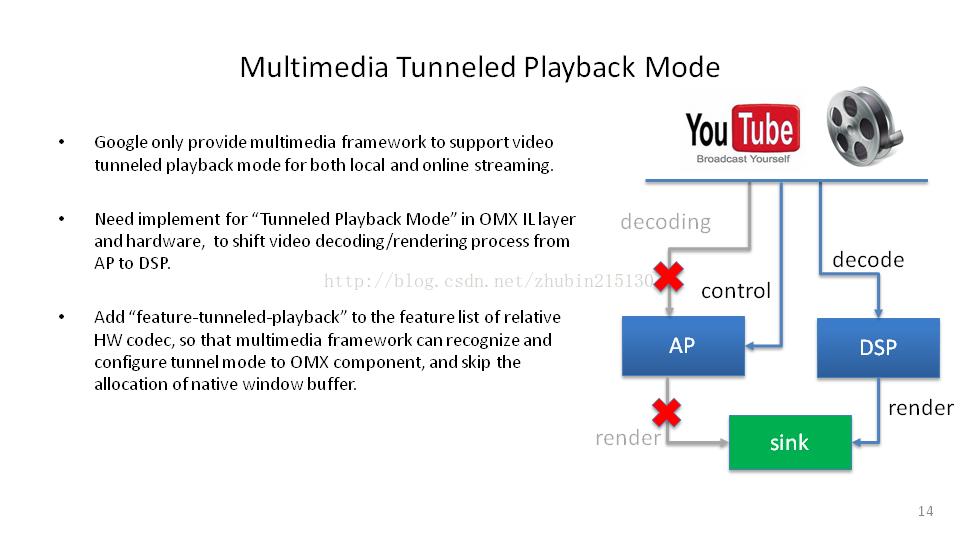

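Google provides the framework side of Tunnel mode for both local and online playback, but the hardware layer beneath OMX IL has to supply the concrete implementation that moves video decode and render work from the AP to the DSP.

This includes adding the tunneled playback feature ("tunneled-playback") to the feature list of the relevant hardware decoders, so that the multimedia framework can recognize and configure tunnel mode for the OMX components, and then skipping work that the multimedia framework would otherwise do before rendering, such as native window buffer allocation.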

Tunnel Mode in MediaCodec

The part of MediaCodecInfo.CodecCapabilities that relates to tunneled playback:

/**

* <b>video or audio decoder only</b>: codec supports tunneled playback.

*/

public static final String FEATURE_TunneledPlayback = "tunneled-playback";

ExoPlayer wraps MediaCodecInfo.CodecCapabilities in DecoderInfo, and obtains the DecoderInfo in the maybeInitCodec function of com/google/android/exoplayer/MediaCodecTrackRenderer.java:

    // Get the decoder information
    DecoderInfo decoderInfo = null;
    try {
        decoderInfo = getDecoderInfo(mediaCodecSelector, mimeType, requiresSecureDecoder);
    } catch (DecoderQueryException e) {
        notifyAndThrowDecoderInitError(new DecoderInitializationException(format, e,
                requiresSecureDecoder, DecoderInitializationException.DECODER_QUERY_ERROR));
    }

Alternatively, the following code lists every codec on the device together with its support for the tunneled-playback feature:

    int numCodecs = MediaCodecList.getCodecCount();
    for (int i = 0; i < numCodecs; i++) {
        MediaCodecInfo codecInfo = MediaCodecList.getCodecInfoAt(i);
        String name = codecInfo.getName();
        Log.i(TAG, "Examining " + (codecInfo.isEncoder() ? "encoder" : "decoder") + ": " + name);
        // Query the feature once per MIME type the codec supports
        for (String type : codecInfo.getSupportedTypes()) {
            boolean tp = codecInfo.getCapabilitiesForType(type).isFeatureSupported(MediaCodecInfo.CodecCapabilities.FEATURE_TunneledPlayback);
            Log.i(TAG, "supports tunneled playback: " + tp);
        }
    }

A codec's features can also be read directly from media_codecs.xml, for example:

<MediaCodec name="OMX.qcom.video.decoder.avc" type="video/avc">

<Quirk name="requires-allocate-on-input-ports"/>

<Quirk name="requires-allocate-on-output-ports"/>

<Limit name="size" min="64x64" max="4096x2160"/>

<Limit name="alignment" value="2x2"/>

<Limit name="block-size" value="16x16"/>

<Limit name="blocks-per-second" min="1" max="1958400"/>

<Limit name="bitrate" range="1-100000000"/>

<Limit name="frame-rate" range="1-240"/>

<Limit name="vt-low-latency" value="1"/>

<Limit name="vt-max-macroblock-processing-rate" value="972000"/>

<Limit name="vt-max-level" value="52"/>

<Limit name="vt-max-instances" value="16"/>

<Feature name="adaptive-playback"/>

<Limit name="concurrent-instances" max="16"/>

</MediaCodec>

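On the MTK and Qualcomm phones that were checked, no codec supports the tunneled-playback feature, and that includes OMX.qcom.video.decoder.hevc and the others.

On TVs built on MStar and Amlogic chips, however, codecs that support tunneled playback do show up: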

<MediaCodec name="OMX.MS.AVC.Decoder" type="video/avc">

<Limit name="concurrent-instances" max="32"/>

<Quirk name="requires-allocate-on-input-ports"/>

<Quirk name="requires-allocate-on-output-ports"/>

<Quirk name="requires-loaded-to-idle-after-allocation"/>

<Limit name="size" min="64x64" max="3840x2160"/>

<Limit name="alignment" value="2x2"/>

<Limit name="block-size" value="16x16"/>

<Limit name="blocks-per-second" min="1" max="972000"/>

<Feature name="adaptive-playback"/>

<Feature name="tunneled-playback"/> <!-- here it is -->

</MediaCodec>
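The manual inspection of media_codecs.xml described above can also be automated. The following is a minimal sketch in plain Java (JDK XML parser only, no Android dependency) that lists the codecs declaring a given feature. The class name TunneledCodecScanner and the method codecsWithFeature are hypothetical names introduced for this illustration, and the sketch assumes the XML passed in is well-formed with a single root element.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper, not part of Android: scans media_codecs.xml style markup.
final class TunneledCodecScanner {
    /** Returns the name of every MediaCodec element that declares the given Feature. */
    static List<String> codecsWithFeature(String xml, String feature) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("not well-formed XML", e);
        }
        List<String> names = new ArrayList<>();
        NodeList codecs = doc.getElementsByTagName("MediaCodec");
        for (int i = 0; i < codecs.getLength(); i++) {
            Element codec = (Element) codecs.item(i);
            NodeList features = codec.getElementsByTagName("Feature");
            for (int j = 0; j < features.getLength(); j++) {
                Element f = (Element) features.item(j);
                if (feature.equals(f.getAttribute("name"))) {
                    names.add(codec.getAttribute("name"));
                    break; // one match per codec is enough
                }
            }
        }
        return names;
    }
}
```

Calling codecsWithFeature(xml, "tunneled-playback") on a file that contains the two entries shown above would report only OMX.MS.AVC.Decoder, since the Qualcomm entry declares adaptive-playback alone.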

Now go down into the framework and look at the corresponding implementation at the OMX layer.

/frameworks/av/media/libstagefright/ACodec.cpp

    status_t ACodec::configureTunneledVideoPlayback(
            int32_t audioHwSync, const sp<ANativeWindow> &nativeWindow) {
        native_handle_t* sidebandHandle;

        status_t err = mOMX->configureVideoTunnelMode(
                mNode, kPortIndexOutput, OMX_TRUE, audioHwSync, &sidebandHandle);
        if (err != OK) {
            ALOGE("configureVideoTunnelMode failed! (err %d).", err);
            return err;
        }
        ….....
    }

The code above in turn calls:

    status_t OMXNodeInstance::configureVideoTunnelMode(
            OMX_U32 portIndex, OMX_BOOL tunneled, OMX_U32 audioHwSync,
            native_handle_t **sidebandHandle) {
        …..
        ConfigureVideoTunnelModeParams tunnelParams;
        InitOMXParams(&tunnelParams);
        tunnelParams.nPortIndex = portIndex;
        tunnelParams.bTunneled = tunneled;
        tunnelParams.nAudioHwSync = audioHwSync;
        err = OMX_SetParameter(mHandle, index, &tunnelParams);
        …....
        err = OMX_GetParameter(mHandle, index, &tunnelParams);
        …....
        if (sidebandHandle) {
            *sidebandHandle = (native_handle_t*)tunnelParams.pSidebandWindow;
        }
        .......
    }

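/frameworks/native/include/media/hardware/HardwareAPI.h

This header defines the struct that describes the VideoTunnelMode parameters; a pointer to it is passed to OMX_SetParameter and OMX_GetParameter.

bTunneled indicates whether the video decoder operates in tunneled mode.

If it is true, the video decoder outputs decoded frames directly to the sink. In that case nAudioHwSync corresponds to the audio session configured through KEY_AUDIO_SESSION_ID, that is, it identifies the audio stream the current video stream must be synchronized with (hardware synchronization).

If it is false, the decoder does not operate in tunneled mode and nAudioHwSync is ignored.

pSidebandWindow points to the sideband window allocated by the codec.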

// A pointer to this struct is passed to OMX_SetParameter or OMX_GetParameter

// when the extension index for the

// 'OMX.google.android.index.configureVideoTunnelMode' extension is given.

// If the extension is supported then tunneled playback mode should be supported

// by the codec. If bTunneled is set to OMX_TRUE then the video decoder should

// operate in "tunneled" mode and output its decoded frames directly to the

// sink. In this case nAudioHwSync is the HW SYNC ID of the audio HAL Output

// stream to sync the video with. If bTunneled is set to OMX_FALSE, "tunneled"

// mode should be disabled and nAudioHwSync should be ignored.

// OMX_GetParameter is used to query tunneling configuration. bTunneled should

// return whether decoder is operating in tunneled mode, and if it is,

// pSidebandWindow should contain the codec allocated sideband window handle.

    struct ConfigureVideoTunnelModeParams {
        OMX_U32 nSize;              // IN
        OMX_VERSIONTYPE nVersion;   // IN
        OMX_U32 nPortIndex;         // IN
        OMX_BOOL bTunneled;         // IN/OUT
        OMX_U32 nAudioHwSync;       // IN
        OMX_PTR pSidebandWindow;    // OUT
    };

......
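To make the set/get contract in the header comment concrete, here is a small plain-Java model of it. This is only a sketch: TunnelModeModel, Params, and FakeDecoder are hypothetical names, the output port number 1 is an assumption made for the example, and a bare Object stands in for the native sideband window handle. It is not how any real OMX component is implemented.

```java
// Hypothetical model of the ConfigureVideoTunnelModeParams contract; not Android code.
final class TunnelModeModel {
    static final int OUTPUT_PORT = 1; // assumed port numbering for this sketch

    /** Mirrors the struct fields, minus nSize and nVersion. */
    static final class Params {
        int portIndex;         // IN
        boolean tunneled;      // IN/OUT
        int audioHwSync;       // IN
        Object sidebandWindow; // OUT
    }

    /** Toy decoder that follows the rules stated in the header comment. */
    static final class FakeDecoder {
        private boolean tunneled;
        private int audioHwSync;
        private Object sidebandWindow;

        /** Models OMX_SetParameter; returns false for a bad port index. */
        boolean setParameter(Params p) {
            if (p.portIndex != OUTPUT_PORT) return false;
            tunneled = p.tunneled;
            if (tunneled) {
                audioHwSync = p.audioHwSync;   // audio stream to sync the video with
                sidebandWindow = new Object(); // stand-in for the codec-allocated handle
            } else {
                audioHwSync = 0;               // nAudioHwSync is ignored when not tunneled
                sidebandWindow = null;
            }
            return true;
        }

        /** Models OMX_GetParameter; fills the OUT fields of p. */
        boolean getParameter(Params p) {
            if (p.portIndex != OUTPUT_PORT) return false;
            p.tunneled = tunneled;
            p.sidebandWindow = tunneled ? sidebandWindow : null;
            return true;
        }

        int syncedAudioStream() {
            return audioHwSync;
        }
    }
}
```

The point of the model is the asymmetry between the fields: nAudioHwSync only flows in and only matters while bTunneled is true, whereas pSidebandWindow only flows out and is meaningful only after tunneled mode has been enabled.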

At the lower layer you will also see:

    OMX_ERRORTYPE MS_OMX_VideoDecodeGetParameter(
            OMX_IN OMX_HANDLETYPE hComponent,
            OMX_IN OMX_INDEXTYPE nParamIndex,
            OMX_INOUT OMX_PTR ComponentParameterStructure) {
        …..
        switch (nParamIndex) {
        case OMX_IndexParamConfigureVideoTunnelMode: {
            ConfigureVideoTunnelModeParams *tunnelParams = (ConfigureVideoTunnelModeParams *)ComponentParameterStructure;
            if (tunnelParams->nPortIndex != OUTPUT_PORT_INDEX) {
                ret = OMX_ErrorBadPortIndex; // note this check: only the output port is valid
                goto EXIT;
            }
            MS_VDEC_HANDLE *handle = (MS_VDEC_HANDLE *)pMSComponent->hCodecHandle;
            tunnelParams->bTunneled = handle->bSideBand;
            tunnelParams->pSidebandWindow = handle->pSidebandWindow;
            break;
        }
        …..
        }
    }

Tunnel Mode in the display path

Start with ACodec: if the OMX component supports tunneled playback, ACodec skips the allocation of output buffers from the native window.

    status_t ACodec::configureOutputBuffersFromNativeWindow(
            OMX_U32 *bufferCount, OMX_U32 *bufferSize,
            OMX_U32 *minUndequeuedBuffers) {
        …...
        // Exits here for tunneled video playback codecs -- i.e. skips native window
        // buffer allocation step as this is managed by the tunneled OMX component
        // itself and explicitly sets def.nBufferCountActual to 0.
        if (mTunneled) {
            ALOGV("Tunneled Playback: skipping native window buffer allocation.");
            def.nBufferCountActual = 0;
            err = mOMX->setParameter(
                    mNode, OMX_IndexParamPortDefinition, &def, sizeof(def));

            *minUndequeuedBuffers = 0;
            *bufferCount = 0;
            *bufferSize = 0;
            return err;
        }
        …...
    }

A key concept in Tunnel Mode is the sideband stream.

In /frameworks/native/services/surfaceflinger/DisplayHardware/HWComposer.cpp we can see:

    virtual void setSidebandStream(const sp<NativeHandle>& stream) {
        ALOG_ASSERT(stream->handle() != NULL);
        getLayer()->compositionType = HWC_SIDEBAND;
        getLayer()->sidebandStream = stream->handle();
    }

HWC_SIDEBAND is defined in

/hardware/libhardware/include/hardware/hwcomposer_defs.h

/* this layer's contents are taken from a sideband buffer stream.

* Added in HWC_DEVICE_API_VERSION_1_4. */

HWC_SIDEBAND = 4,

compositionType and getLayer()->sidebandStream are defined in /hardware/libhardware/include/hardware/hwcomposer.h

How is it displayed? When HWC_SIDEBAND is specified, the Layer does not handle the drawing.

In /frameworks/native/services/surfaceflinger/SurfaceFlinger.cpp

    bool SurfaceFlinger::doComposeSurfaces(const sp<const DisplayDevice>& hw, const Region& dirty) {
        ......
        /* and then, render the layers targeted at the framebuffer */
        switch (cur->getCompositionType()) {
            case HWC_CURSOR_OVERLAY:
            case HWC_OVERLAY: {
                const Layer::State& state(layer->getDrawingState());
                if ((cur->getHints() & HWC_HINT_CLEAR_FB)
                        && i
                        && layer->isOpaque(state) && (state.alpha == 0xFF)
                        && hasGlesComposition) {
                    // never clear the very first layer since we're
                    // guaranteed the FB is already cleared
                    layer->clearWithOpenGL(hw, clip);
                }
                break;
            }
            case HWC_FRAMEBUFFER: { // the regular drawing path
                layer->draw(hw, clip);
                break;
            }
        }
        ......
    }
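The dispatch above can be modeled in a few lines of plain Java to show why a sideband layer is never drawn by SurfaceFlinger itself. This is a sketch only: CompositionSketch, its CompositionType enum, and its Layer class are hypothetical stand-ins introduced here, and the behavior is reduced to "which layers get a draw call".

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the composition-type switch; not SurfaceFlinger code.
final class CompositionSketch {
    enum CompositionType { FRAMEBUFFER, OVERLAY, CURSOR_OVERLAY, SIDEBAND }

    static final class Layer {
        final String name;
        final CompositionType type;

        Layer(String name, CompositionType type) {
            this.name = name;
            this.type = type;
        }
    }

    /** Returns the names of the layers that would be drawn through the regular GLES path. */
    static List<String> layersDrawnByGles(List<Layer> layers) {
        List<String> drawn = new ArrayList<>();
        for (Layer layer : layers) {
            switch (layer.type) {
                case FRAMEBUFFER:
                    drawn.add(layer.name); // corresponds to layer->draw(hw, clip)
                    break;
                case OVERLAY:
                case CURSOR_OVERLAY:
                    // composed by the hardware composer; no draw call here
                    break;
                case SIDEBAND:
                    // contents come from the sideband stream; nothing to draw here
                    break;
            }
        }
        return drawn;
    }
}
```

With a UI layer of type FRAMEBUFFER and a video layer of type SIDEBAND, only the UI layer ends up in the returned list, which matches the statement that the Layer does not handle display for sideband content.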

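The key points, in summary:

- The OMX component must advertise support for tunneled playback

- In Tunnel Mode, A/V sync is performed by hardware

- In Tunnel Mode, the decoder hands decoded data straight to the display hardware through a sideband stream

- In Tunnel Mode, decoding, A/V sync, and rendering are defined entirely by the chip vendor, and the Android framework layer does not take part at any point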

How to use Tunnel Mode

See the implementation in CTS:

cts/tests/tests/media/src/android/media/cts/MediaCodecTunneledPlayer.java

    // setup tunneled video codec if needed
    if (isVideo && mTunneled) {
        format.setFeatureEnabled(MediaCodecInfo.CodecCapabilities.FEATURE_TunneledPlayback,
                true);
        MediaCodecList mcl = new MediaCodecList(MediaCodecList.ALL_CODECS);
        String codecName = mcl.findDecoderForFormat(format);
        if (codecName == null) {
            Log.e(TAG,"addTrack - Could not find Tunneled playback codec for "+mime+
                    " format!");
            return false;
        }

        codec = MediaCodec.createByCodecName(codecName);
        if (codec == null) {
            Log.e(TAG, "addTrack - Could not create Tunneled playback codec "+
                    codecName+"!");
            return false;
        }

        if (mAudioTrackState != null) {
            format.setInteger(MediaFormat.KEY_AUDIO_SESSION_ID, mAudioSessionId);
        }
    }
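The selection step in the CTS snippet (enable the feature on the format, ask for a matching decoder, and bail out when the result is null) can be illustrated without the Android SDK. The sketch below is a deliberately simplified model: TunneledCodecPicker, CodecEntry, and findDecoder are hypothetical names, and the matching rule (same MIME type plus all required features) is an assumption that stands in for the framework's real, more detailed format matching.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical model of "find a decoder that supports a required feature".
final class TunneledCodecPicker {
    /** Simplified stand-in for one entry of the device's codec list. */
    static final class CodecEntry {
        final String name;
        final String mime;
        final boolean encoder;
        final Set<String> features;

        CodecEntry(String name, String mime, boolean encoder, String... features) {
            this.name = name;
            this.mime = mime;
            this.encoder = encoder;
            this.features = new HashSet<>(Arrays.asList(features));
        }
    }

    /** Returns the first decoder for mime that has every required feature, or null. */
    static String findDecoder(List<CodecEntry> codecs, String mime, String... required) {
        for (CodecEntry c : codecs) {
            if (!c.encoder
                    && c.mime.equalsIgnoreCase(mime)
                    && c.features.containsAll(Arrays.asList(required))) {
                return c.name;
            }
        }
        return null; // the caller must handle this, as addTrack does above
    }
}
```

On a codec list that only contains a decoder with adaptive-playback, asking for tunneled-playback returns null, which is the situation reported earlier for the phones; on a list with a decoder that declares both features, its name is returned.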

/cts/tests/tests/media/src/android/media/cts/CodecState.java

    public void start() {
        mCodec.start();
        mCodecInputBuffers = mCodec.getInputBuffers();
        if (!mTunneled || mIsAudio) {
            mCodecOutputBuffers = mCodec.getOutputBuffers();
        }
        .....
    }

    public void flush() {
        mAvailableInputBufferIndices.clear();
        if (!mTunneled || mIsAudio) {
            mAvailableOutputBufferIndices.clear();
            mAvailableOutputBufferInfos.clear();
        }
        ....
        mCodec.flush();
        ......
    }

    public void doSomeWork() {
        ......
        while (feedInputBuffer()) {
        }
        if (mIsAudio || !mTunneled) {
            MediaCodec.BufferInfo info = new MediaCodec.BufferInfo();
            int indexOutput = mCodec.dequeueOutputBuffer(info, 0 /* timeoutUs */);
            if (indexOutput == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                mOutputFormat = mCodec.getOutputFormat();
                onOutputFormatChanged();
            } else if (indexOutput == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED) {
                mCodecOutputBuffers = mCodec.getOutputBuffers();
            } else if (indexOutput != MediaCodec.INFO_TRY_AGAIN_LATER) {
                mAvailableOutputBufferIndices.add(indexOutput);
                mAvailableOutputBufferInfos.add(info);
            }
        }
        .......
    }

    .......

    private boolean feedInputBuffer() throws MediaCodec.CryptoException, IllegalStateException {
        ......
        if (mTunneled && !mIsAudio) {
            if (mSampleBaseTimeUs == -1) {
                mSampleBaseTimeUs = sampleTime;
            }
            sampleTime -= mSampleBaseTimeUs;
            // FIX-ME: in tunneled mode we currently use input buffer time
            // as video presentation time. This is not accurate and should be fixed
            mPresentationTimeUs = sampleTime;
        }
        ......
    }
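The timestamp handling in feedInputBuffer is easy to isolate: the first video sample's time becomes the base, and every sample time is then reported relative to that base. Below is a minimal standalone sketch of just that rebasing step. TunneledTimestampRebaser is a hypothetical class written for this illustration; the -1 sentinel follows the CTS code above.

```java
// Hypothetical standalone version of the sample-time rebasing in feedInputBuffer().
final class TunneledTimestampRebaser {
    private long baseTimeUs = -1; // -1 means "no sample seen yet", as in the CTS code

    /** Returns sampleTimeUs relative to the first sample that was passed in. */
    long rebase(long sampleTimeUs) {
        if (baseTimeUs == -1) {
            baseTimeUs = sampleTimeUs;
        }
        return sampleTimeUs - baseTimeUs;
    }
}
```

For a stream whose first sample sits at 5,000,000 us, the first call returns 0 and a sample at 5,033,366 us returns 33,366. As the FIX-ME comment in the CTS code notes, using the input buffer time as the presentation time is an approximation.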

/cts/tests/tests/media/src/android/media/cts/DecoderTest.java

/**

* Test tunneled video playback mode if supported

*/

public void testTunneledVideoPlayback() throws Exception

if (!isVideoFeatureSupported(MediaFormat.MIMETYPE_VIDEO_AVC,

CodecCapabilities.FEATURE_TunneledPlayback