CUDA中的几种并行计算模型

Posted ithiker

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了CUDA中的几种并行计算模型相关的知识,希望对你有一定的参考价值。

Background

CUDA编程中由于thread space和input space通常是不同的:

- Thread Space最多可以是5D,2D Grid + 3D Block

- Input Space通常是1D,有时也有2D的矩阵等

这就导致了我们需要将inputs映射到threads中去,比如我在《CUDA中线程与数据的对应关系》中所写的,主要就是考虑线程数和数据数下的映射关系。

如果我们有一个1D的Input array, 采用不同的thread space, thread index和input index的对应关系如下:

- 1 block, N threads(1D): 对应的线程索引threadIdx.x

- 1 block, M X N threads(2D): 对应的线程索引blockIdx.y * blockDim.x + threadIdx.x

- N block(1D), M threads(1D): 对应的线程索引blockIdx.x * gridDim.x + threadIdx.x

这里只列举部分情况。

CUDA中的并行计算模型是基于上面的对应关系的,常见的并行计算模型有如下几种:

- Map

- Gather

- Scatter

- Transpose

- Reduce

- Scan

下面逐个介绍这几种模型。

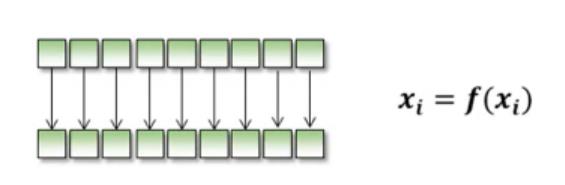

Map

Map就是将某个function作用到输入队列的每一个元素,然后用结果更新该队列:

比如,我们要将某个队列中的每个元素都加10,我们可以写类似这样的代码:

#include <curand.h>

#include <cuda.h>

#include <iostream>

#include <stdio.h>

__global__ void addTen(float* d, int count)

int threadsPerBlock = blockDim.x * blockDim.y * blockDim.z;

int threadPosInBlock = threadIdx.x + blockDim.x * threadIdx.y + blockDim.x * blockDim.y * threadIdx.z;

int blockPosInGrid = blockIdx.x + gridDim.x * blockIdx.y; // grid is always 2D

int threadIndex = blockPosInGrid * threadsPerBlock + threadPosInBlock;

if (threadIndex < count)

d[threadIndex] += 10;

int main()

curandGenerator_t gen;

curandCreateGenerator(&gen, CURAND_RNG_PSEUDO_MTGP32);

curandSetPseudoRandomGeneratorSeed(gen, time(0));

const int count = 123456;

const int size = count * sizeof(float);

float *d;

float h[count];

cudaMalloc(&d, size);

curandGenerateUniform(gen, d, count);

dim3 block(8, 8, 8);

dim3 grid(16, 16);

addTen<<<grid, block>>>(d, count);

cudaMemcpy(h, d, size, cudaMemcpyDeviceToHost);

cudaFree(d);

for (int i = 0; i < 100; ++i)

std::cout << h[i] <<std::endl;

getchar();

return 0;

上面,将会产生0-1之间的随机均匀分布的数字,CUDA代码的作用是在该array中的每个数加10。注意这里用到了CUDA的curand库,因此编译时需要link到curand,如下:

nvcc -o Map Map.cu -lcurand

Gather

Gather “gathers” information from other parts of memory. Scatter “scatters” information to other parts of memory.[1]

Gather就是从内存已有的信息中提取信息,例如由给定array中的某些元素生成一个元素:

比如一般的stencil问题:

如果radius=3, 每个元素是相邻的7个元素之和,实现代码如下:

#include <thrust/device_vector.h>

#define RADIUS 3

#define BLOCKSIZE 32

/*******************/

/* iDivUp FUNCTION */

/*******************/

int iDivUp(int a, int b) return ((a % b) != 0) ? (a / b + 1) : (a / b);

/********************/

/* CUDA ERROR CHECK */

/********************/

#define gpuErrchk(ans) gpuAssert((ans), __FILE__, __LINE__);

inline void gpuAssert(cudaError_t code, const char *file, int line, bool abort=true)

if (code != cudaSuccess)

fprintf(stderr,"GPUassert: %s %s %d\\n", cudaGetErrorString(code), file, line);

if (abort) exit(code);

/**********/

/* KERNEL */

/**********/

__global__ void moving_average(unsigned int *in, unsigned int *out, int N)

__shared__ unsigned int temp[BLOCKSIZE + 2 * RADIUS];

int gindexx = threadIdx.x + blockIdx.x * blockDim.x;

int lindexx = threadIdx.x + RADIUS;

// --- Read input elements into shared memory

temp[lindexx] = (gindexx < N)? in[gindexx] : 0;

if (threadIdx.x < RADIUS)

temp[threadIdx.x] = (((gindexx - RADIUS) >= 0)&&(gindexx <= N)) ? in[gindexx - RADIUS] : 0;

temp[threadIdx.x + (RADIUS + BLOCKSIZE)] = ((gindexx + BLOCKSIZE) < N)? in[gindexx + BLOCKSIZE] : 0;

__syncthreads();

// --- Apply the stencil

unsigned int result = 0;

for (int offset = -RADIUS ; offset <= RADIUS ; offset++)

result += temp[lindexx + offset];

// --- Store the result

out[gindexx] = result;

/********/

/* MAIN */

/********/

int main()

const int N = 55 + 2 * RADIUS;

const unsigned int constant = 4;

thrust::device_vector<unsigned int> d_in(N, constant);

thrust::device_vector<unsigned int> d_out(N);

moving_average<<<iDivUp(N, BLOCKSIZE), BLOCKSIZE>>>(thrust::raw_pointer_cast(d_in.data()), thrust::raw_pointer_cast(d_out.data()), N);

gpuErrchk(cudaPeekAtLastError());

gpuErrchk(cudaDeviceSynchronize());

thrust::host_vector<unsigned int> h_out = d_out;

for (int i=0; i<N; i++)

printf("Element i = %i; h_out = %i\\n", i, h_out[i]);

return 0;

Scatter

Scatter正好和Gather相反,这里有一个Scatter的比较好的解释:

When each thread needs to write its output in a different or multiple places we call this the scatter operation.

当需要把一个元素写到不同的位置或者写到多个位置时,就是scatter.

参考[3], 这里有一个用thrust实现的scatter的例子:

#include <thrust/scatter.h>

#include <thrust/device_vector.h>

#include <thrust/execution_policy.h>

#include <vector>

#include <iostream>

int main()

int values[10] = 1, 0, 1, 0, 1, 0, 1, 0, 1, 0;

thrust::device_vector<int> d_values(values, values + 10);

// scatter all even indices into the first half of the range, and odd indices vice versa

int map[10] = 0, 5, 1, 6, 2, 7, 3, 8, 4, 9;

thrust::device_vector<int> d_map(map, map + 10);

thrust::device_vector<int> d_output(10);

thrust::scatter(thrust::device, d_values.begin(), d_values.end(), d_map.begin(), d_output.begin());

std::vector<int> result(d_output.size());

thrust::copy(d_output.begin(), d_output.end(), result.begin());

for(auto& e : result)

std::cout << e << std::endl;

Thurst 是CUDA的原生库,编译时不需要做额外的链接,如下即可:

nvcc -std=c++11 -o Scatter Scatter.cu

Transpose

Transpose通常涉及到矩阵的转置。

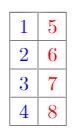

比如,我们有一个图片,它的pixel值信息是按行排列存储的:

那么,我们把它放到CUDA的内存中,它的排布是这样的:

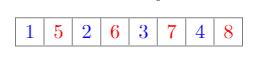

但是,通常,我们使用图片时,可能采用按列顺序读取的方式,比如先第一列1,5;然后第二列2,6,…,最后4,8

那么,根据内存读取优化原则,相邻读取的元素在内存中相邻排布才有最好的性能,那么,我们实际希望的GPU内存排布是:

Transpose是一种特殊的scatter, 实现这个需求只需要把上面的代码稍微修改即可:

#include <thrust/scatter.h>

#include <thrust/device_vector.h>

#include <thrust/execution_policy.h>

#include <vector>

#include <iostream>

int main()

int values[8] = 1, 2, 3, 4, 5, 6, 7, 8;

thrust::device_vector<int> d_values(values, values + 8);

// scatter first half to the dest even index and second half to the dest odd indices

int map[8] = 0, 2, 4, 6, 1, 3, 5, 7;

thrust::device_vector<int> d_map(map, map + 8);

thrust::device_vector<int> d_output(8);

thrust::scatter(thrust::device, d_values.begin(), d_values.end(), d_map.begin(), d_output.begin());

std::vector<int> result(d_output.size());

thrust::copy(d_output.begin(), d_output.end(), result.begin());

for(auto& e : result)

std::cout << e << std::endl;

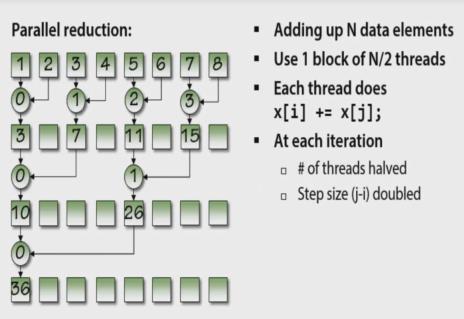

Reduce

Reduce就是一种分而治之(divide and conquer)的方法,比如下面的求和:

在CUDA中可以采用如下的并行算法:

初始时,thread number是data size的一半,每个迭代,一个线程负责两个数的相加;每过一个迭代,data size进一步减半,因而需要使用的线程也减半。

对应的核代码为:

#include <cuda.h>

#include <iostream>

#include <stdio.h>

__global__ void sum(int* d)

int tid = threadIdx.x;

for (int threadCount = blockDim.x, stepSize = 1; threadCount > 0; threadCount /= 2, stepSize *= 2)

if (tid < threadCount)

int lhsIndex = tid * stepSize * 2;

int rhsIndex = lhsIndex + stepSize;

d[lhsIndex] += d[rhsIndex];

int main()

const int count = 8;

const int size = count * sizeof(int);

int *d;

int h[count] = 1, 2, 3, 4, 5, 6, 7, 8;

cudaMalloc(&d, size);

cudaMemcpy(d, h, size, cudaMemcpyHostToDevice);

sum<<<1, count / 2>>>(d);

cudaMemcpy(h, d, size, cudaMemcpyDeviceToHost);

cudaFree(d);

for (int i = 0; i < count; ++i)

std::cout << h[i] <<std::endl;

getchar();

return 0;

程序输出为:

36

2

7

4

26

6

15

8

第一个数为我们想要的sum, 对比上图和程序,我们发现thread 0干的活最多,因为tid最小为0,threadCount不断减半,所以最后结果写到了d[0]处。

Scan

当输出队列的第n个元素有输入队列的前n个元素决定时,称这种计算模式为scan.

当f操作为求和时:

| input | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|

| output | 1 | 3 | 6 | 10 | 15 |

这里,我们仍然采用1维的grid, 采用N-1个线程,index为0的线程仍然干最多的活:

#include <cuda.h>

#include <iostream>

#include <stdio.h>

__global__ void scanSum(int* d)

int tid = threadIdx.x;

for (int threadCount = blockDim.x, stepSize = 1; threadCount > 0; stepSize *= 2)

if (tid < threadCount)

d[tid + stepSize] += d[tid];

threadCount -= stepSize;

int main()

const int count = 5;

const int size = count * sizeof(int);

int *d;

int h[count] = 1, 2, 3, 4, 5;

cudaMalloc(&d, size);

cudaMemcpy(d, h, size, cudaMemcpyHostToDevice);

scanSum<<<1, count - 1>>>(d);

cudaMemcpy(h, d, size, cudaMemcpyDeviceToHost);

cudaFree(d);

for (int i = 0; i < count; ++i)

std::cout << h[i] <<std::endl;

getchar();

return 0;

Reference:

- https://developer.nvidia.com/gpugems/gpugems2/part-iv-general-purpose-computation-gpus-primer/chapter-31-mapping-computational

- https://developer.nvidia.com/gpugems/gpugems3/part-vi-gpu-computing/chapter-39-parallel-prefix-sum-scan-cuda

- https://blog.csdn.net/seamanj/article/details/82976687

- https://thrust.github.io/doc/group__scattering_gac1b33a02dd67cf9c8b3b3aa05c881a37.html

- https://docs.nvidia.com/cuda/thrust/index.html

以上是关于CUDA中的几种并行计算模型的主要内容,如果未能解决你的问题,请参考以下文章