OpenCV Traffic Light Recognition and Contour Detection: A C++ OpenCV Case Implementation

Posted by 猿力猪


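Preface

This article implements traffic light recognition for driving scenes. It covers two things: how contour detection works in OpenCV, and the concrete steps for recognizing traffic lights with OpenCV.

I. Principles of Contour Detection

What is contour detection?

- Contour detection extracts the boundary curves of objects in an image. Current methods fall into two classes: one detects the target contour with traditional edge detection operators, and the other builds usable mathematical models derived from the human visual system.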

Contour extraction function: findContours

📍Function prototype:

    void findContours(InputOutputArray image, OutputArrayOfArrays contours,
                      OutputArray hierarchy, int mode,
                      int method, Point offset = Point());

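📍Parameters:

1️⃣ image: a single-channel 8-bit image. It may be a grayscale image (non-zero pixels are treated as foreground), but a binary image is more common, usually one produced by edge detection operators such as Canny or Laplacian (followed by thresholding where the result is not already binary).

2️⃣ contours: declared as "vector<vector<Point>> contours", a vector of vectors. Each element is a vector of consecutive Point values, and each such point set is one contour. contours has as many elements as there are contours.

3️⃣ hierarchy: also a vector, usually declared as "vector<Vec4i> hierarchy". Each element holds 4 ints: the indices of the next contour, the previous contour, the first child contour, and the parent contour (-1 when there is none). Its elements correspond one-to-one with the elements of contours, so both vectors have the same size.

4️⃣ int mode: the contour retrieval mode, for example RETR_EXTERNAL (outermost contours only), RETR_LIST (all contours, no hierarchy), RETR_CCOMP (all contours in a two-level hierarchy, used in the case below), or RETR_TREE (all contours with the full nesting hierarchy).

5️⃣ int method: the contour approximation method. The names below are the legacy C constants; the C++ API spells them without the CV_ prefix, for example cv::CHAIN_APPROX_NONE.

Value 1: CV_CHAIN_APPROX_NONE stores every consecutive point on the object boundary in the contours vector

Value 2: CV_CHAIN_APPROX_SIMPLE stores only the corner points of each contour; the points on the straight segments between two corners are not kept

Values 3 and 4: CV_CHAIN_APPROX_TC89_L1 and CV_CHAIN_APPROX_TC89_KCOS use the Teh-Chin chain approximation algorithm

6️⃣ Point offset: an offset added to every detected contour point, so that all contour coordinates are shifted relative to the corresponding points of the original image. Its components may be negative. This is useful when the contours are extracted from a region of interest and need to be expressed in the coordinates of the full image.

Source of the parameter details: findContours函数参数详解 (-牧野-'s blog, CSDN)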
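To make the difference between CHAIN_APPROX_NONE and CHAIN_APPROX_SIMPLE more concrete, here is a minimal sketch in plain C++ with no OpenCV calls. The `Pt` struct and the `keepCorners` and `rectangleBoundary` functions are hypothetical names invented for this illustration. The sketch drops every point of a closed contour whose incoming and outgoing step directions are the same, which is roughly the effect CHAIN_APPROX_SIMPLE has on horizontal, vertical, and diagonal runs. It is not OpenCV's actual implementation.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point, so the sketch needs no OpenCV.
struct Pt { int x; int y; };

// Sign of v: -1, 0 or 1. Neighbouring contour pixels differ by at most 1
// per axis, so the sign of the difference is the step direction.
static int sgn(int v) { return (v > 0) - (v < 0); }

// Keep only the points of a closed contour where the step direction changes.
// Input: every boundary pixel in order (what CHAIN_APPROX_NONE would store).
std::vector<Pt> keepCorners(const std::vector<Pt>& contour) {
    const std::size_t n = contour.size();
    if (n < 3) return contour;  // nothing to compress
    std::vector<Pt> corners;
    for (std::size_t i = 0; i < n; ++i) {
        const Pt& prev = contour[(i + n - 1) % n];  // closed contour: wrap around
        const Pt& cur = contour[i];
        const Pt& next = contour[(i + 1) % n];
        const int inX = sgn(cur.x - prev.x), inY = sgn(cur.y - prev.y);
        const int outX = sgn(next.x - cur.x), outY = sgn(next.y - cur.y);
        if (inX != outX || inY != outY) corners.push_back(cur);
    }
    return corners;
}

// Helper for the usage example: all boundary pixels of a w x h rectangle
// (w, h >= 2) with its top-left pixel at (0, 0), listed in clockwise order.
std::vector<Pt> rectangleBoundary(int w, int h) {
    std::vector<Pt> pts;
    for (int x = 0; x < w; ++x) pts.push_back({x, 0});           // top edge
    for (int y = 1; y < h; ++y) pts.push_back({w - 1, y});       // right edge
    for (int x = w - 2; x >= 0; --x) pts.push_back({x, h - 1});  // bottom edge
    for (int y = h - 2; y >= 1; --y) pts.push_back({0, y});      // left edge
    return pts;
}
```

For a 5 x 4 rectangle, `rectangleBoundary(5, 4)` lists 14 boundary pixels, while `keepCorners` reduces them to the 4 corner points. That is the storage saving the SIMPLE method is meant to give for shapes with long straight edges.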

二、案例实现

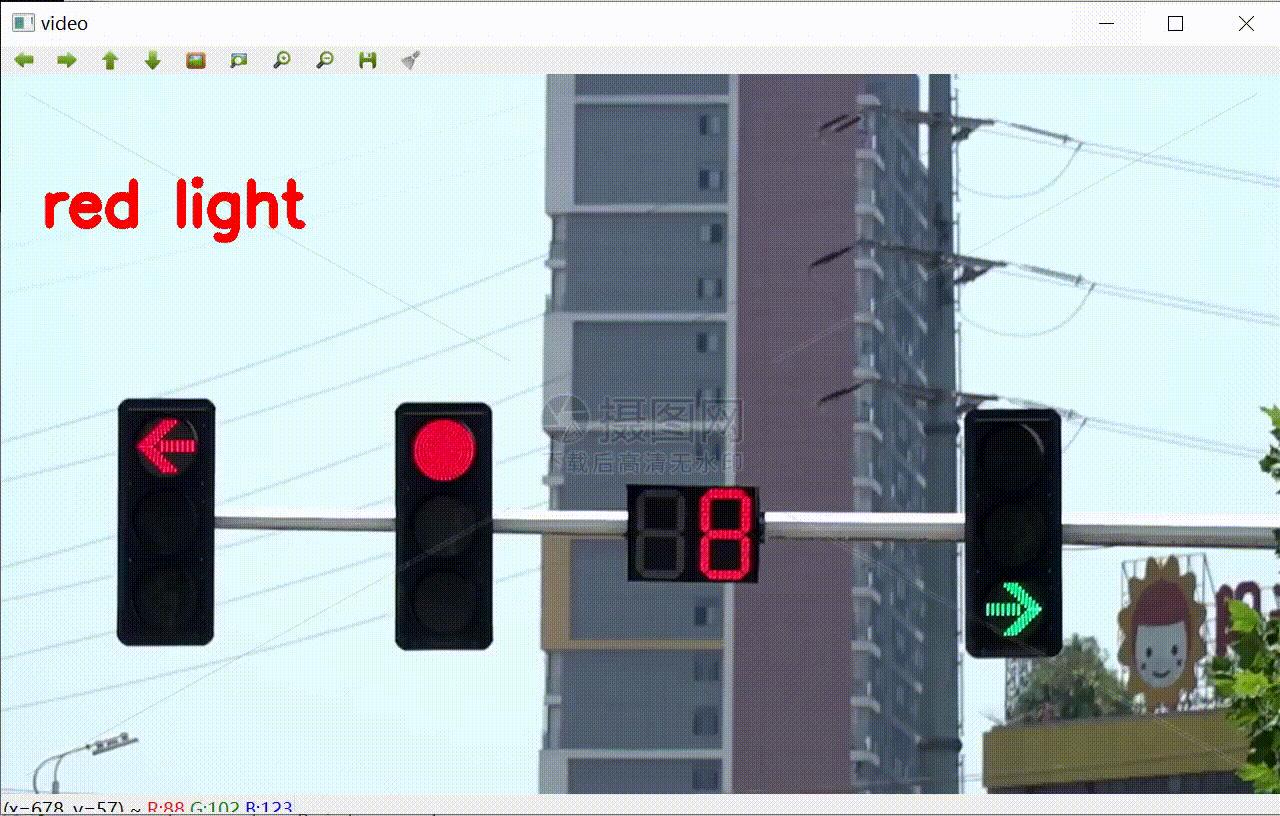

- 这是本案例所用到的素材,如下图所示:

PS:视频的效果比较好,如果方便的话可以自行外出拍摄取材

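Step 1: Initial setup

- Prepare what we need: create the required variables, set the brightness parameters, and specify the path of the video to load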

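    int redCount = 0;    // summed bounding-box area of the red regions in a frame
    int greenCount = 0;  // summed bounding-box area of the green regions in a frame
    Mat frame;
    Mat img;
    Mat imgYCrCb;
    Mat imgGreen;
    Mat imgRed;
    // Brightness parameters: img = a * frame + b
    double a = 0.3;
    double b = (1 - a) * 125;
    VideoCapture capture("C:/Users/86177/Desktop/image/123.mp4"); // path of the input video
    if (!capture.isOpened())
    {
        cout << "Start device failed!" << endl; // the video could not be opened
        return -1;
    }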
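The two brightness parameters feed a linear per-channel transform, dst = a * src + b, which is what `convertTo` applies with its scale and shift arguments. The sketch below shows the effect on a single 8-bit value in plain C++. The function name `adjustBrightness` is hypothetical, and the rounding of exact halves may differ from OpenCV's own saturating conversion.

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical per-pixel version of the linear transform dst = a * src + b,
// rounded to the nearest integer and clamped to the 8-bit range [0, 255].
std::uint8_t adjustBrightness(std::uint8_t src, double a, double b) {
    const double v = a * src + b;
    long r = std::lround(v);
    if (r < 0) r = 0;      // clamp below
    if (r > 255) r = 255;  // clamp above
    return static_cast<std::uint8_t>(r);
}
```

With a = 0.3 and b = (1 - a) * 125 = 87.5, the value 125 maps to itself, 255 maps to 164, and 0 maps to about 87.5. In other words, the contrast is compressed to 30% around the value 125, which tones down very bright areas before the colour thresholds are applied.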

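Step 2: Frame processing

- Adjust the brightness of the video, split the frame into its three YCrCb components, and separate red from green so that features of both colours can be extracted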

    // Frame processing
    while (1)
    {
        capture >> frame;
        if (frame.empty()) // end of the video or a failed read
            break;
        // Adjust brightness: img = a * frame + b (rtype -1 keeps the source type)
        frame.convertTo(img, -1, a, b);
        // Convert to the YCrCb colour space
        cvtColor(img, imgYCrCb, COLOR_BGR2YCrCb);
        imgRed.create(imgYCrCb.rows, imgYCrCb.cols, CV_8UC1);
        imgGreen.create(imgYCrCb.rows, imgYCrCb.cols, CV_8UC1);
        // Split YCrCb into its three planes: planes[0] = Y, planes[1] = Cr, planes[2] = Cb
        vector<Mat> planes;
        split(imgYCrCb, planes);
        // Walk over the Cr plane and build the red and green masks
        MatIterator_<uchar> it_Cr = planes[1].begin<uchar>(),
                            it_Cr_end = planes[1].end<uchar>();
        MatIterator_<uchar> it_Red = imgRed.begin<uchar>();
        MatIterator_<uchar> it_Green = imgGreen.begin<uchar>();
        for (; it_Cr != it_Cr_end; ++it_Cr, ++it_Red, ++it_Green)
        {
            // RED: Cr > 145 (Cr is 8-bit, so no upper bound is needed)
            if (*it_Cr > 145)
                *it_Red = 255;
            else
                *it_Red = 0;
            // GREEN: 95 < Cr < 110
            if (*it_Cr > 95 && *it_Cr < 110)
                *it_Green = 255;
            else
                *it_Green = 0;
        }
        // (the loop body continues in Steps 3 and 4)
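To see why thresholds on the Cr channel alone can separate the two colours, the following plain C++ sketch computes Cr for a single BGR pixel and applies the thresholds used in this step. It uses the floating-point form of the 8-bit BGR to YCrCb conversion, Y = 0.299 R + 0.587 G + 0.114 B and Cr = (R - Y) * 0.713 + 128. The names `crFromBgr`, `classifyCr`, and `LightColor` are hypothetical, and OpenCV's fixed-point arithmetic may differ from this sketch by one level.

```cpp
#include <cmath>

enum class LightColor { None, Red, Green };

// Cr channel of an 8-bit BGR pixel, rounded and clamped to [0, 255].
int crFromBgr(int b, int g, int r) {
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;  // luma
    long cr = std::lround((r - y) * 0.713 + 128.0);      // red difference, centred at 128
    if (cr < 0) cr = 0;
    if (cr > 255) cr = 255;
    return static_cast<int>(cr);
}

// Thresholds taken from this step: Cr > 145 is red, 95 < Cr < 110 is green.
LightColor classifyCr(int cr) {
    if (cr > 145) return LightColor::Red;
    if (cr > 95 && cr < 110) return LightColor::Green;
    return LightColor::None;
}
```

After the brightness adjustment of Step 1, a fully saturated channel (255) becomes 164 and an empty channel (0) becomes about 88. A saturated red pixel is then roughly B = 88, G = 88, R = 164, which gives Cr of about 166 and is classified as red. A saturated green pixel, B = 88, G = 164, R = 88, gives Cr of about 96 and falls into the green band. Any grey pixel has Cr = 128 and matches neither mask.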

Step 3: Dilation and erosion

- Remove stray noise and strengthen the traffic light features we need

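    // Dilation and erosion
    // 15x15 rectangular kernel: merges nearby mask pixels into solid blobs
    Mat dilateKernel = getStructuringElement(MORPH_RECT, Size(15, 15));
    // 1x1 kernel: leaves the mask unchanged; enlarge it to actually erode
    Mat erodeKernel = getStructuringElement(MORPH_RECT, Size(1, 1));
    dilate(imgRed, imgRed, dilateKernel, Point(-1, -1));
    erode(imgRed, imgRed, erodeKernel, Point(-1, -1));
    dilate(imgGreen, imgGreen, dilateKernel, Point(-1, -1));
    erode(imgGreen, imgGreen, erodeKernel, Point(-1, -1));
    redCount = processImgR(imgRed);
    greenCount = processImgG(imgGreen);
    cout << "red:" << redCount << "; " << "green:" << greenCount << endl;

Step 4: Displaying the traffic light status

- Draw the recognition result on the frame so that the effect is easy to check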

    if (redCount == 0 && greenCount == 0)
        cv::putText(frame, "lights out", Point(40, 150), cv::FONT_HERSHEY_SIMPLEX, 2, cv::Scalar(255, 255, 255), 8, 8, 0);
    else if (redCount > greenCount)
        cv::putText(frame, "red light", Point(40, 150), cv::FONT_HERSHEY_SIMPLEX, 2, cv::Scalar(0, 0, 255), 8, 8, 0);
    else
        cv::putText(frame, "green light", Point(40, 150), cv::FONT_HERSHEY_SIMPLEX, 2, cv::Scalar(0, 255, 0), 8, 8, 0);
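The decision itself does not depend on OpenCV, so it can be pulled out into a small function that is easy to check on its own. The sketch below is one possible way to do that. The name `lightLabel` is hypothetical, and the parameters are called areas because the two "count" variables actually hold summed bounding-box areas.

```cpp
#include <string>

// Hypothetical helper mirroring the if / else-if / else chain of this step.
std::string lightLabel(int redArea, int greenArea) {
    if (redArea == 0 && greenArea == 0) return "lights out";
    if (redArea > greenArea) return "red light";
    return "green light";  // also reached when both areas are equal and non-zero
}
```

Note the tie-breaking behaviour: when both areas are equal and non-zero, the chain falls through to "green light". If red should win ties for safety, the second comparison would need to be `>=`.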

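Step 5: Contour extraction

- Extract contour features for the red light and the green light separately to make the recognition more reliable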

    int processImgR(Mat src)
    {
        Mat tmp;
        vector<vector<Point>> contours;
        vector<Vec4i> hierarchy;
        vector<Point> hull;
        int area = 0;

        src.copyTo(tmp);
        // Extract contours
        findContours(tmp, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

        if (contours.empty())
        {
            // Nothing detected: restart tracking at the next detection
            isFirstDetectedR = true;
            return 0;
        }

        // Determine the regions to track: bounding box of each contour's convex hull
        vector<Rect> trackBox(contours.size());
        for (size_t i = 0; i < contours.size(); i++)
        {
            convexHull(contours[i], hull, true);
            trackBox[i] = boundingRect(hull);
        }

        if (isFirstDetectedR)
        {
            // First detection: only remember the boxes, report no area yet
            isFirstDetectedR = false;
        }
        else
        {
            // Count only boxes that overlap a box from the previous frame
            for (size_t i = 0; i < trackBox.size(); i++)
            {
                for (int j = 0; j < lastTrackNumR; j++)
                {
                    if (isIntersected(trackBox[i], lastTrackBoxR[j]))
                    {
                        area += trackBox[i].area();
                        break;
                    }
                }
            }
        }

        // Remember the current boxes for the next frame
        delete[] lastTrackBoxR;
        lastTrackBoxR = new Rect[trackBox.size()];
        for (size_t i = 0; i < trackBox.size(); i++)
            lastTrackBoxR[i] = trackBox[i];
        lastTrackNumR = (int)trackBox.size();

        return area;
    }
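The bounding box of a contour depends only on its extreme coordinates, and the extreme points of a point set always lie on its convex hull. Boxing the hull therefore gives the same rectangle as boxing the whole contour. The plain C++ sketch below shows the box computation. `Pt`, `Box`, and `boundingBox` are hypothetical stand-ins, and the inclusive-pixel convention (width = maxX - minX + 1) is an assumption about how integer points are boxed.

```cpp
#include <algorithm>
#include <vector>

struct Pt { int x; int y; };                         // stand-in for cv::Point
struct Box { int x; int y; int width; int height; }; // stand-in for cv::Rect

// Smallest upright box that contains every point in pts.
// Inclusive pixel convention: a single point yields a 1 x 1 box.
Box boundingBox(const std::vector<Pt>& pts) {
    if (pts.empty()) return {0, 0, 0, 0};
    int minX = pts[0].x, maxX = pts[0].x;
    int minY = pts[0].y, maxY = pts[0].y;
    for (const Pt& p : pts) {
        minX = std::min(minX, p.x);
        maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y);
        maxY = std::max(maxY, p.y);
    }
    return {minX, minY, maxX - minX + 1, maxY - minY + 1};
}
```

For the points (2,3), (7,3), (7,9), (2,9) and the interior point (4,5), the box is x = 2, y = 3, width = 6, height = 7, with or without the interior point.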

    int processImgG(Mat src)
    {
        Mat tmp;
        vector<vector<Point>> contours;
        vector<Vec4i> hierarchy;
        vector<Point> hull;
        int area = 0;

        src.copyTo(tmp);
        // Extract contours
        findContours(tmp, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

        if (contours.empty())
        {
            // Nothing detected: restart tracking at the next detection
            isFirstDetectedG = true;
            return 0;
        }

        // Determine the regions to track: bounding box of each contour's convex hull
        vector<Rect> trackBox(contours.size());
        for (size_t i = 0; i < contours.size(); i++)
        {
            convexHull(contours[i], hull, true);
            trackBox[i] = boundingRect(hull);
        }

        if (isFirstDetectedG)
        {
            // First detection: only remember the boxes, report no area yet
            isFirstDetectedG = false;
        }
        else
        {
            // Count only boxes that overlap a box from the previous frame
            for (size_t i = 0; i < trackBox.size(); i++)
            {
                for (int j = 0; j < lastTrackNumG; j++)
                {
                    if (isIntersected(trackBox[i], lastTrackBoxG[j]))
                    {
                        area += trackBox[i].area();
                        break;
                    }
                }
            }
        }

        // Remember the current boxes for the next frame
        delete[] lastTrackBoxG;
        lastTrackBoxG = new Rect[trackBox.size()];
        for (size_t i = 0; i < trackBox.size(); i++)
            lastTrackBoxG[i] = trackBox[i];
        lastTrackNumG = (int)trackBox.size();

        return area;
    }
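The red and green functions differ only in which global state they touch. As a sketch of an alternative design, not the code used in this article, the frame-to-frame logic can be wrapped in a small struct that owns its state in a `std::vector`, so no manual `new` or `delete[]` is needed and one instance per colour is enough. `Box`, `overlaps`, and `Tracker` are hypothetical names, and `Box` stands in for `cv::Rect` so the example compiles without OpenCV.

```cpp
#include <algorithm>
#include <vector>

struct Box { int x; int y; int width; int height; };  // stand-in for cv::Rect

// True when the two boxes share a region of positive area.
bool overlaps(const Box& a, const Box& b) {
    return std::max(a.x, b.x) < std::min(a.x + a.width, b.x + b.width) &&
           std::max(a.y, b.y) < std::min(a.y + a.height, b.y + b.height);
}

// Tracking state for one colour, carried from frame to frame.
struct Tracker {
    std::vector<Box> previous;  // boxes seen in the last frame
    bool hasPrevious = false;   // false until a frame with detections is seen

    // Returns the summed area of the boxes in `current` that overlap at least
    // one box of the previous frame, then stores `current` for the next call.
    int update(const std::vector<Box>& current) {
        if (current.empty()) {  // nothing detected: restart tracking
            previous.clear();
            hasPrevious = false;
            return 0;
        }
        int area = 0;
        if (hasPrevious) {
            for (const Box& c : current) {
                const bool seenBefore = std::any_of(
                    previous.begin(), previous.end(),
                    [&c](const Box& p) { return overlaps(c, p); });
                if (seenBefore) area += c.width * c.height;
            }
        }
        previous = current;
        hasPrevious = true;
        return area;
    }
};
```

The first frame with detections always returns 0, because there is nothing to compare against yet. A blob must persist across two consecutive frames before its area is counted, which filters out single-frame flicker.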

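Step 6: Checking whether two rectangles intersect

    // Check whether two rectangular regions overlap
    bool isIntersected(Rect r1, Rect r2)
    {
        // Corners of the candidate overlap region
        int minX = max(r1.x, r2.x);
        int minY = max(r1.y, r2.y);
        int maxX = min(r1.x + r1.width, r2.x + r2.width);
        int maxY = min(r1.y + r1.height, r2.y + r2.height);
        // The rectangles overlap only if that region has positive width and height
        return minX < maxX && minY < maxY;
    }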
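The same corner arithmetic also yields the size of the overlap, which makes the edge cases easy to see. The sketch below uses a hypothetical `Box` struct in place of `cv::Rect`, and `overlapArea` is likewise an illustrative name.

```cpp
#include <algorithm>

struct Box { int x; int y; int width; int height; };  // stand-in for cv::Rect

// Area shared by two boxes; 0 when they are disjoint or only touch at an edge.
int overlapArea(const Box& a, const Box& b) {
    const int left = std::max(a.x, b.x);
    const int top = std::max(a.y, b.y);
    const int right = std::min(a.x + a.width, b.x + b.width);
    const int bottom = std::min(a.y + a.height, b.y + b.height);
    if (left >= right || top >= bottom) return 0;  // no region of positive size
    return (right - left) * (bottom - top);
}
```

Two 10 x 10 boxes at (0,0) and (5,5) share a 5 x 5 region, so the area is 25. A box at (10,0) only touches the first one along an edge and gives 0, which matches the strict `<` comparisons in `isIntersected`. With `cv::Rect` itself, the intersection can also be obtained with the `&` operator, so `(r1 & r2).area() > 0` should express the same test.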

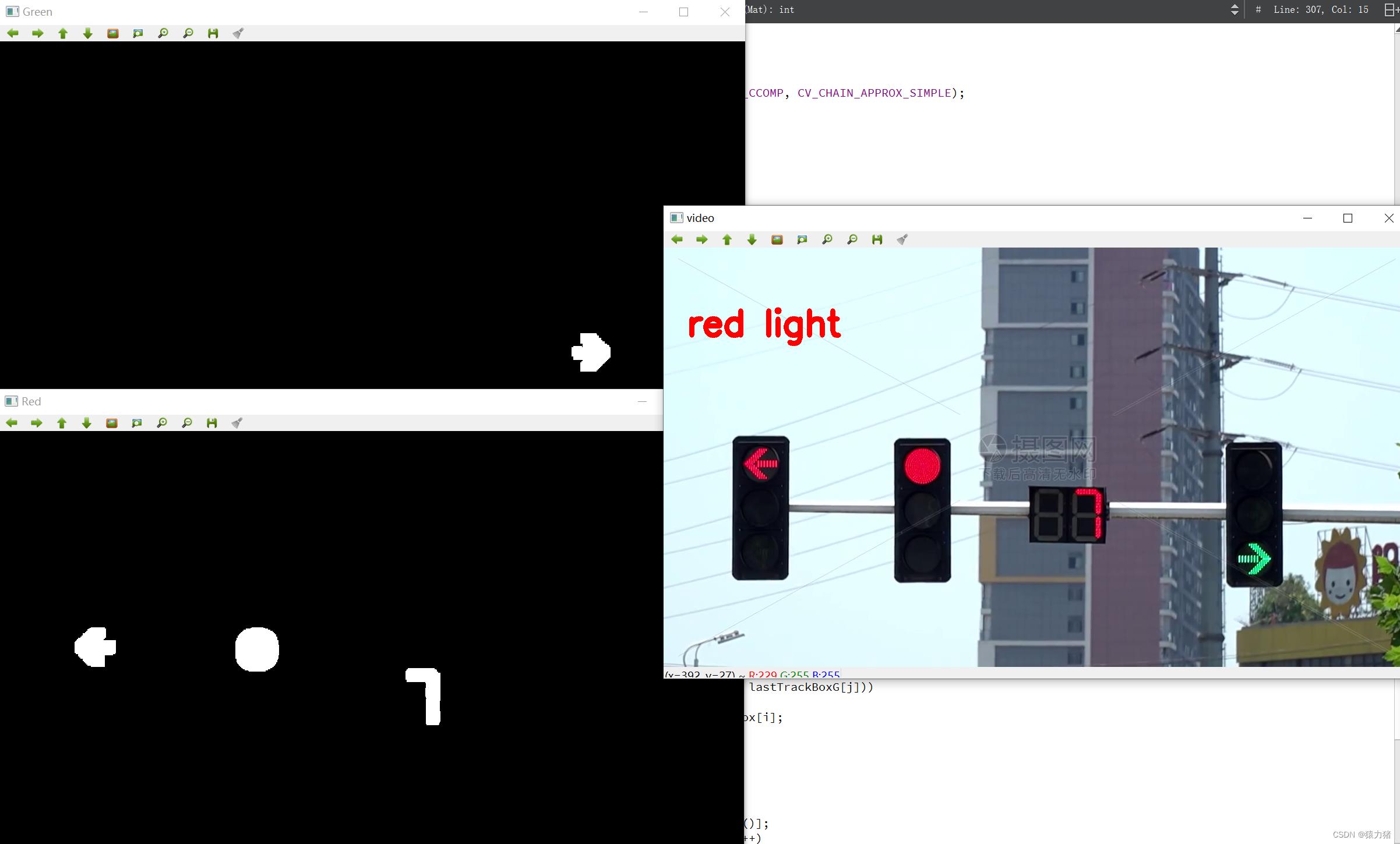

🚀案例效果

💡完整代码

    #include "opencv2/opencv.hpp"
    #include "opencv2/imgproc.hpp"
    #include <iostream>

    using namespace std;
    using namespace cv;

    // Function headers
    int processImgR(Mat);
    int processImgG(Mat);
    bool isIntersected(Rect, Rect);

    // Global variables: tracking state carried from one frame to the next
    bool isFirstDetectedR = true;
    bool isFirstDetectedG = true;
    Rect* lastTrackBoxR = NULL;
    Rect* lastTrackBoxG = NULL;
    int lastTrackNumR = 0;
    int lastTrackNumG = 0;

    // Main function
    int main()
    {
        int redCount = 0;    // summed bounding-box area of the red regions in a frame
        int greenCount = 0;  // summed bounding-box area of the green regions in a frame
        Mat frame;
        Mat img;
        Mat imgYCrCb;
        Mat imgGreen;
        Mat imgRed;
        // Brightness parameters: img = a * frame + b
        double a = 0.3;
        double b = (1 - a) * 125;
        VideoCapture capture("C:/Users/86177/Desktop/image/123.mp4"); // path of the input video
        if (!capture.isOpened())
        {
            cout << "Start device failed!" << endl; // the video could not be opened
            return -1;
        }

        // Kernels for dilation and erosion
        // 15x15 rectangular kernel: merges nearby mask pixels into solid blobs
        Mat dilateKernel = getStructuringElement(MORPH_RECT, Size(15, 15));
        // 1x1 kernel: leaves the mask unchanged; enlarge it to actually erode
        Mat erodeKernel = getStructuringElement(MORPH_RECT, Size(1, 1));

        // Frame processing
        while (1)
        {
            capture >> frame;
            if (frame.empty()) // end of the video or a failed read
                break;
            // Adjust brightness: img = a * frame + b (rtype -1 keeps the source type)
            frame.convertTo(img, -1, a, b);
            // Convert to the YCrCb colour space
            cvtColor(img, imgYCrCb, COLOR_BGR2YCrCb);
            imgRed.create(imgYCrCb.rows, imgYCrCb.cols, CV_8UC1);
            imgGreen.create(imgYCrCb.rows, imgYCrCb.cols, CV_8UC1);
            // Split YCrCb into its three planes: planes[0] = Y, planes[1] = Cr, planes[2] = Cb
            vector<Mat> planes;
            split(imgYCrCb, planes);
            // Walk over the Cr plane and build the red and green masks
            MatIterator_<uchar> it_Cr = planes[1].begin<uchar>(),
                                it_Cr_end = planes[1].end<uchar>();
            MatIterator_<uchar> it_Red = imgRed.begin<uchar>();
            MatIterator_<uchar> it_Green = imgGreen.begin<uchar>();
            for (; it_Cr != it_Cr_end; ++it_Cr, ++it_Red, ++it_Green)
            {
                // RED: Cr > 145 (Cr is 8-bit, so no upper bound is needed)
                if (*it_Cr > 145)
                    *it_Red = 255;
                else
                    *it_Red = 0;
                // GREEN: 95 < Cr < 110
                if (*it_Cr > 95 && *it_Cr < 110)
                    *it_Green = 255;
                else
                    *it_Green = 0;
            }

            // Dilation and erosion
            dilate(imgRed, imgRed, dilateKernel, Point(-1, -1));
            erode(imgRed, imgRed, erodeKernel, Point(-1, -1));
            dilate(imgGreen, imgGreen, dilateKernel, Point(-1, -1));
            erode(imgGreen, imgGreen, erodeKernel, Point(-1, -1));

            redCount = processImgR(imgRed);
            greenCount = processImgG(imgGreen);
            cout << "red:" << redCount << "; " << "green:" << greenCount << endl;

            // Draw the recognition result on the frame
            if (redCount == 0 && greenCount == 0)
                cv::putText(frame, "lights out", Point(40, 150), cv::FONT_HERSHEY_SIMPLEX, 2, cv::Scalar(255, 255, 255), 8, 8, 0);
            else if (redCount > greenCount)
                cv::putText(frame, "red light", Point(40, 150), cv::FONT_HERSHEY_SIMPLEX, 2, cv::Scalar(0, 0, 255), 8, 8, 0);
            else
                cv::putText(frame, "green light", Point(40, 150), cv::FONT_HERSHEY_SIMPLEX, 2, cv::Scalar(0, 255, 0), 8, 8, 0);

            imshow("video", frame);
            imshow("Red", imgRed);
            imshow("Green", imgGreen);

            // Handle keyboard input: press 'q' to quit
            if (waitKey(20) == 'q')
                break;
        }
        return 0;
    }

    int processImgR(Mat src)
    {
        Mat tmp;
        vector<vector<Point>> contours;
        vector<Vec4i> hierarchy;
        vector<Point> hull;
        int area = 0;

        src.copyTo(tmp);
        // Extract contours
        findContours(tmp, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

        if (contours.empty())
        {
            // Nothing detected: restart tracking at the next detection
            isFirstDetectedR = true;
            return 0;
        }

        // Determine the regions to track: bounding box of each contour's convex hull
        vector<Rect> trackBox(contours.size());
        for (size_t i = 0; i < contours.size(); i++)
        {
            convexHull(contours[i], hull, true);
            trackBox[i] = boundingRect(hull);
        }

        if (isFirstDetectedR)
        {
            // First detection: only remember the boxes, report no area yet
            isFirstDetectedR = false;
        }
        else
        {
            // Count only boxes that overlap a box from the previous frame
            for (size_t i = 0; i < trackBox.size(); i++)
            {
                for (int j = 0; j < lastTrackNumR; j++)
                {
                    if (isIntersected(trackBox[i], lastTrackBoxR[j]))
                    {
                        area += trackBox[i].area();
                        break;
                    }
                }
            }
        }

        // Remember the current boxes for the next frame
        delete[] lastTrackBoxR;
        lastTrackBoxR = new Rect[trackBox.size()];
        for (size_t i = 0; i < trackBox.size(); i++)
            lastTrackBoxR[i] = trackBox[i];
        lastTrackNumR = (int)trackBox.size();

        return area;
    }

    int processImgG(Mat src)
    {
        Mat tmp;
        vector<vector<Point>> contours;
        vector<Vec4i> hierarchy;
        vector<Point> hull;
        int area = 0;

        src.copyTo(tmp);
        // Extract contours
        findContours(tmp, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

        if (contours.empty())
        {
            // Nothing detected: restart tracking at the next detection
            isFirstDetectedG = true;
            return 0;
        }

        // Determine the regions to track: bounding box of each contour's convex hull
        vector<Rect> trackBox(contours.size());
        for (size_t i = 0; i < contours.size(); i++)
        {
            convexHull(contours[i], hull, true);
            trackBox[i] = boundingRect(hull);
        }

        if (isFirstDetectedG)
        {
            // First detection: only remember the boxes, report no area yet
            isFirstDetectedG = false;
        }
        else
        {
            // Count only boxes that overlap a box from the previous frame
            for (size_t i = 0; i < trackBox.size(); i++)
            {
                for (int j = 0; j < lastTrackNumG; j++)
                {
                    if (isIntersected(trackBox[i], lastTrackBoxG[j]))
                    {
                        area += trackBox[i].area();
                        break;
                    }
                }
            }
        }

        // Remember the current boxes for the next frame
        delete[] lastTrackBoxG;
        lastTrackBoxG = new Rect[trackBox.size()];
        for (size_t i = 0; i < trackBox.size(); i++)
            lastTrackBoxG[i] = trackBox[i];
        lastTrackNumG = (int)trackBox.size();

        return area;
    }

    // Check whether two rectangular regions overlap
    bool isIntersected(Rect r1, Rect r2)
    {
        // Corners of the candidate overlap region
        int minX = max(r1.x, r2.x);
        int minY = max(r1.y, r2.y);
        int maxX = min(r1.x + r1.width, r2.x + r2.width);
        int maxY = min(r1.y + r1.height, r2.y + r2.height);
        // The rectangles overlap only if that region has positive width and height
        return minX < maxX && minY < maxY;
    }

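III. Summary

- This article explained how contour detection works in OpenCV and walked through the concrete steps of recognizing traffic lights with OpenCV

- OpenCV offers many more recognition-related library functions. I will keep exploring them and build more interesting cases based on everyday life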

