07 HDFS Cluster Setup

Posted 蓝风9

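Preface

I've recently had a run of environment-setup tasks, so I'm writing the steps down.

The HDFS cluster has three nodes: 192.168.110.150, 192.168.110.151, 192.168.110.152

150 is master, 151 is slave01, and 152 is slave02

Passwordless SSH trust ("trusted shell") is set up between all three machines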
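The hostname mapping and SSH trust described above could be set up roughly as follows. This is a sketch, not the author's actual commands: it assumes root logins, OpenSSH's `ssh-keygen` and `ssh-copy-id`, and that the names master, slave01, and slave02 are resolved through /etc/hosts. The helper names `hosts_entries` and `setup_ssh_trust` are made up for illustration.

```shell
#!/usr/bin/env bash
# Print the /etc/hosts lines for the three nodes described above.
# hosts_entries is a hypothetical helper name, not part of Hadoop.
hosts_entries() {
  cat <<'EOF'
192.168.110.150 master
192.168.110.151 slave01
192.168.110.152 slave02
EOF
}

# Set up key-based SSH from the current node to every node (assumed workflow).
# Defined but not called here, because ssh-copy-id prompts for passwords.
setup_ssh_trust() {
  # Generate a key pair only if none exists yet.
  [ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
  for host in master slave01 slave02; do
    ssh-copy-id "root@${host}"
  done
}

hosts_entries
```

The printed lines would be appended to /etc/hosts on each node, and `setup_ssh_trust` would be run on master. The loop includes master itself because start-dfs.sh reaches every daemon host, including the local one, over SSH.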

HDFS cluster setup

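1. Base environment preparation

Install the JDK on 192.168.110.150, 192.168.110.151, and 192.168.110.152, and upload the Hadoop installation package to each

The installation package comes from Apache Hadoop (the transcripts in this post use hadoop-2.10.1)

2. HDFS configuration

On master, update the configuration files listed here, then scp the distribution to slave01 and slave02

Update hdfs-site.xml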

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:50090</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

Update core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/var/hadoop</value>

</property>

</configuration>

Update hadoop-env.sh

# The java implementation to use.

export JAVA_HOME=/usr/local/ProgramFiles/jdk1.8.0_291
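A wrong JAVA_HOME is a common reason the daemons fail to start, so it can be worth checking the path before going further. A minimal sketch, assuming the JDK path from the line above; `check_java_home` is a hypothetical helper, not something Hadoop ships.

```shell
#!/usr/bin/env bash
# Succeed if the given directory looks like a usable JDK home,
# i.e. it contains an executable bin/java.
# check_java_home is a hypothetical helper, not part of Hadoop.
check_java_home() {
  [ -n "$1" ] && [ -x "$1/bin/java" ]
}

# Path taken from the hadoop-env.sh line above.
if check_java_home "/usr/local/ProgramFiles/jdk1.8.0_291"; then
  echo "JAVA_HOME looks valid"
else
  echo "JAVA_HOME does not contain an executable bin/java" >&2
fi
```

The same check would need to pass on slave01 and slave02, since the DataNodes read the same hadoop-env.sh after the distribution is copied over.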

Edit the slaves file

root@master:/usr/local/ProgramFiles/hadoop-2.10.1# cat etc/hadoop/slaves

slave01

slave02
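With the configuration files and the slaves file in place, the distribution still has to be copied to the two slaves. The post only says "scp", so the loop here is an assumed way of doing it: it presumes the same install path on every node, root SSH access, and that the parent directory already exists on the slaves. By default it only prints the commands.

```shell
#!/usr/bin/env bash
# Copy the configured Hadoop directory from master to each slave.
# With DRY_RUN=1 (the default here) the scp commands are only printed.
HADOOP_DIR="/usr/local/ProgramFiles/hadoop-2.10.1"
SLAVES="slave01 slave02"

distribute_hadoop() {
  for host in $SLAVES; do
    # Destination is the parent directory of HADOOP_DIR on the slave.
    dest="root@${host}:${HADOOP_DIR%/*}/"
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "scp -r $HADOOP_DIR $dest"
    else
      scp -r "$HADOOP_DIR" "$dest"
    fi
  done
}

distribute_hadoop
```

Setting `DRY_RUN=0` before calling `distribute_hadoop` would perform the actual copy.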

3. Start the cluster

Run the start-dfs script on the master node to start the HDFS cluster
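One step this post does not show: on a brand-new install, the NameNode metadata directory has to be formatted once before the first start. The sketch here only checks whether that has happened and prints advice. It assumes dfs.namenode.name.dir is left at its default, which resolves to /var/hadoop/dfs/name given the hadoop.tmp.dir value in core-site.xml; `needs_format` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# With hadoop.tmp.dir=/var/hadoop and no explicit dfs.namenode.name.dir,
# the NameNode metadata defaults to /var/hadoop/dfs/name (assumption: default kept).
NAME_DIR="${NAME_DIR:-/var/hadoop/dfs/name}"

# Succeeds when the directory has not been formatted yet
# (a formatted NameNode directory contains current/VERSION).
# needs_format is a hypothetical helper, not a Hadoop command.
needs_format() {
  [ ! -f "$1/current/VERSION" ]
}

if needs_format "$NAME_DIR"; then
  echo "NameNode not formatted yet; run once on master: ./bin/hdfs namenode -format"
else
  echo "NameNode already formatted; do not format again"
fi
```

Formatting is a one-time operation: running it again on a cluster that already holds data discards the existing namespace metadata.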

root@master:/usr/local/ProgramFiles/hadoop-2.10.1# ./sbin/start-dfs.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/ProgramFiles/hadoop-2.10.1/logs/hadoop-root-namenode-master.out

slave01: starting datanode, logging to /usr/local/ProgramFiles/hadoop-2.10.1/logs/hadoop-root-datanode-slave01.out

slave02: starting datanode, logging to /usr/local/ProgramFiles/hadoop-2.10.1/logs/hadoop-root-datanode-slave02.out

Starting secondary namenodes [master]

master: starting secondarynamenode, logging to /usr/local/ProgramFiles/hadoop-2.10.1/logs/hadoop-root-secondarynamenode-master.out
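The log lines above only say the daemons were launched, not that they stayed up. One way to confirm is to look for the expected JVM names in `jps` output. The sketch here uses a made-up sample in place of real `jps` output so it can run anywhere; `has_daemon` and `sample_jps_output` are hypothetical helpers and the PIDs are invented.

```shell
#!/usr/bin/env bash
# Check that a daemon name appears in jps-style output read from stdin.
# jps prints one "<pid> <MainClass>" line per running JVM.
has_daemon() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Sample in the shape jps would print on master (PIDs are made up).
sample_jps_output() {
  printf '%s\n' "2101 NameNode" "2335 SecondaryNameNode" "2490 Jps"
}

for daemon in NameNode SecondaryNameNode; do
  if sample_jps_output | has_daemon "$daemon"; then
    echo "$daemon is running"
  else
    echo "$daemon is missing"
  fi
done
```

On the real cluster this would be `jps | has_daemon NameNode` on master and `ssh slave01 jps | has_daemon DataNode` for each slave. `hdfs dfsadmin -report` gives a complementary view by listing the live DataNodes as seen by the NameNode.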

Test the cluster

Testing with hadoop fs

root@master:/usr/local/ProgramFiles/hadoop-2.10.1# hadoop fs -ls /

root@master:/usr/local/ProgramFiles/hadoop-2.10.1# hadoop fs -mkdir /2022-05-21

root@master:/usr/local/ProgramFiles/hadoop-2.10.1# hadoop fs -ls /

Found 1 items

drwxr-xr-x - root supergroup 0 2022-05-21 01:19 /2022-05-21

root@master:/usr/local/ProgramFiles/hadoop-2.10.1# hadoop fs -put README.txt /2022-05-21/upload.txt

root@master:/usr/local/ProgramFiles/hadoop-2.10.1# hadoop fs -ls /2022-05-21

Found 1 items

-rw-r--r-- 2 root supergroup 1366 2022-05-21 01:20 /2022-05-21/upload.txt
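The second column of the file listing is the replication factor, and the 2 shown for upload.txt matches the dfs.replication value set in hdfs-site.xml. A small sketch of pulling that column out of a listing line; `replication_of` is a hypothetical helper, and the sample line is copied from the output above.

```shell
#!/usr/bin/env bash
# Extract the replication factor (second column) from one line of
# `hadoop fs -ls` output. Directories show "-" in that column.
replication_of() {
  printf '%s\n' "$1" | awk '{ print $2 }'
}

line='-rw-r--r-- 2 root supergroup 1366 2022-05-21 01:20 /2022-05-21/upload.txt'
echo "replication: $(replication_of "$line")"
```

On a live cluster, `hadoop fs -stat %r /2022-05-21/upload.txt` should report the same value without any parsing.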

root@master:/usr/local/ProgramFiles/hadoop-2.10.1# hadoop fs -cat /2022-05-21/upload.txt

For the latest information about Hadoop, please visit our website at:

http://hadoop.apache.org/core/

and our wiki, at:

http://wiki.apache.org/hadoop/

This distribution includes cryptographic software. The country in

which you currently reside may have restrictions on the import,

possession, use, and/or re-export to another country, of

encryption software. BEFORE using any encryption software, please

check your country's laws, regulations and policies concerning the

import, possession, or use, and re-export of encryption software, to

see if this is permitted. See <http://www.wassenaar.org/> for more

information.

The U.S. Government Department of Commerce, Bureau of Industry and

Security (BIS), has classified this software as Export Commodity

Control Number (ECCN) 5D002.C.1, which includes information security

software using or performing cryptographic functions with asymmetric

algorithms. The form and manner of this Apache Software Foundation

distribution makes it eligible for export under the License Exception

ENC Technology Software Unrestricted (TSU) exception (see the BIS

Export Administration Regulations, Section 740.13) for both object

code and source code.

The following provides more details on the included cryptographic

software:

Hadoop Core uses the SSL libraries from the Jetty project written

by mortbay.org.

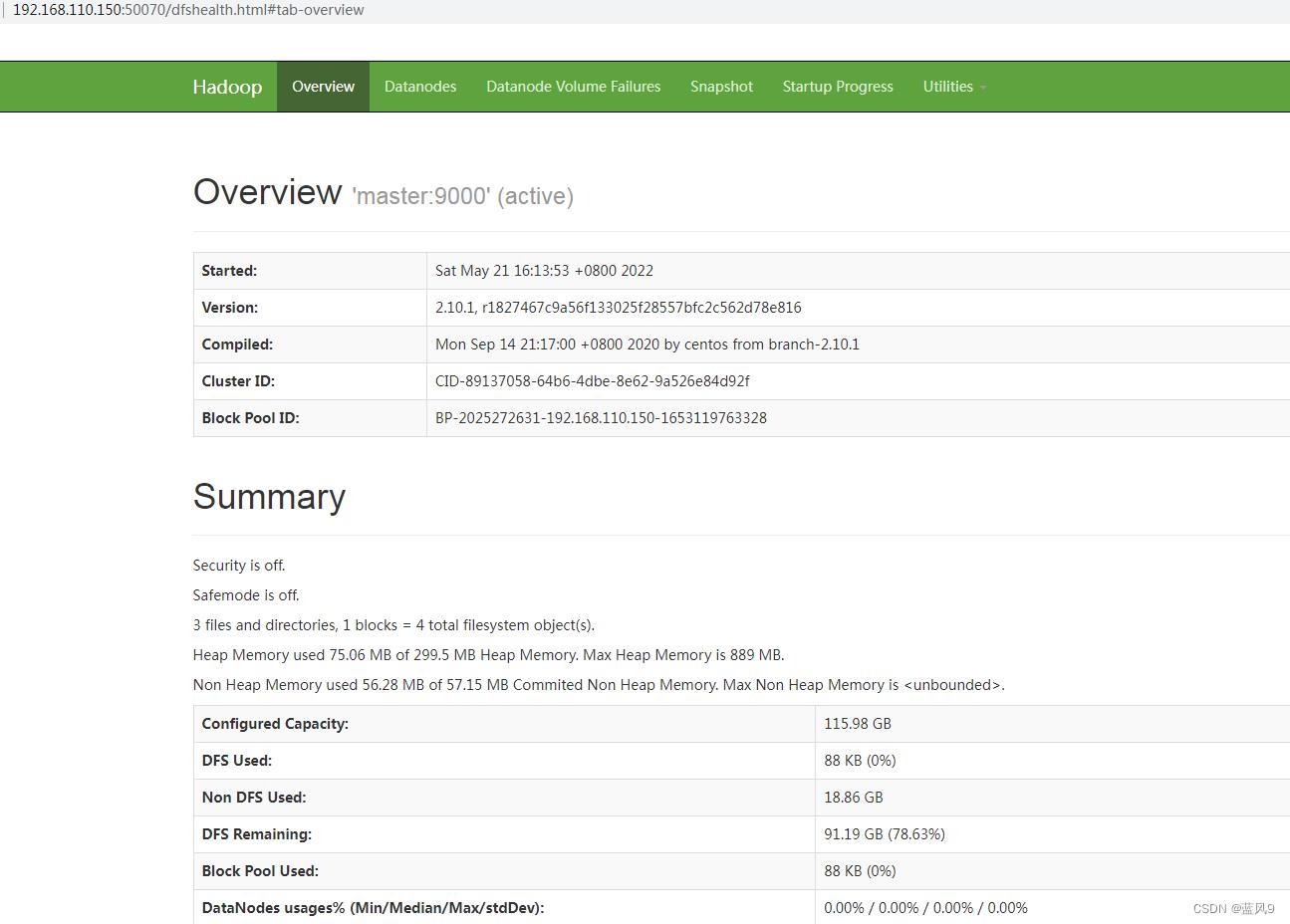

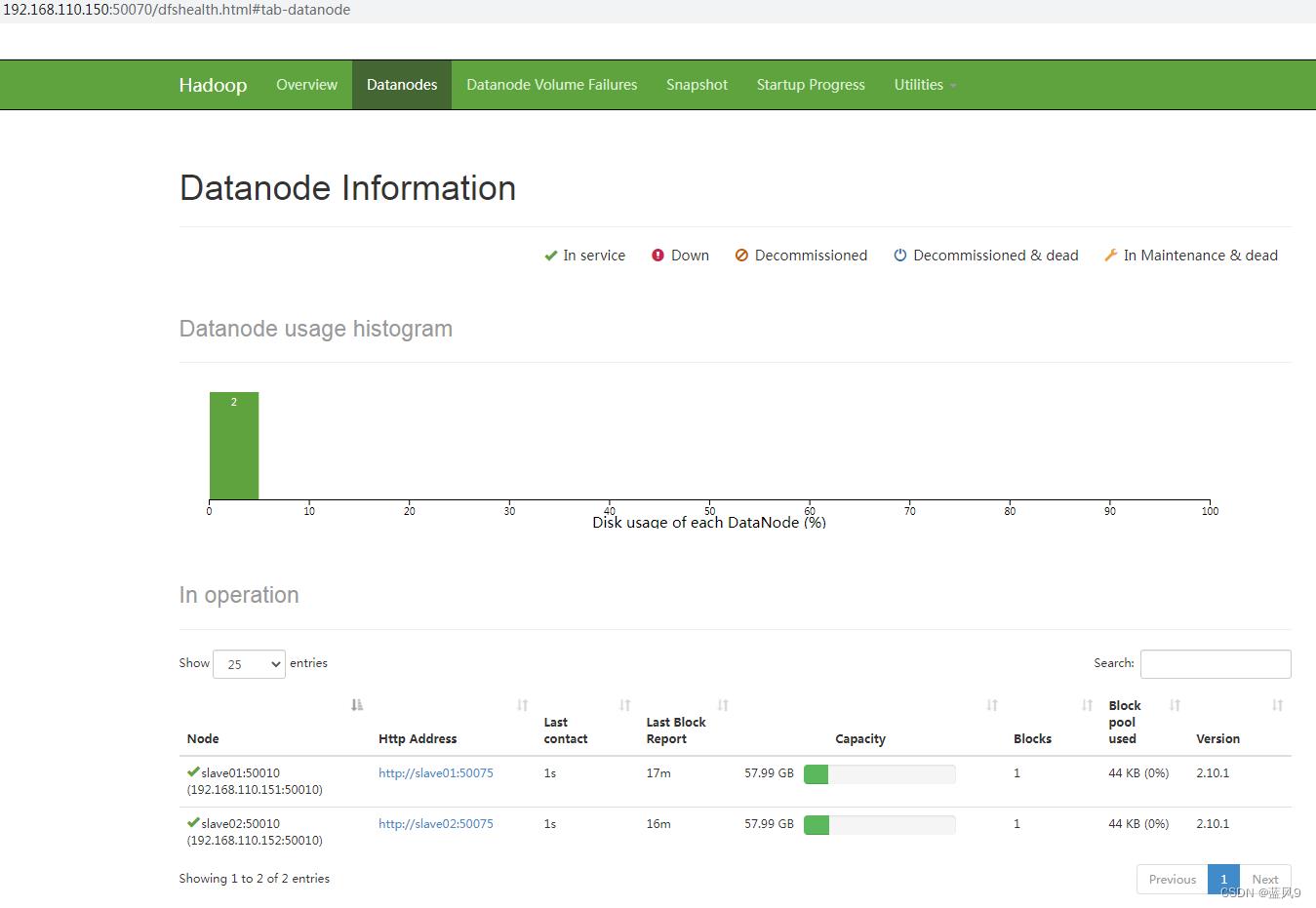

The NameNode web UI (see the screenshots above)
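The NameNode web UI can also be reached from the command line. The post does not set dfs.namenode.http-address, so this sketch assumes the Hadoop 2.x default port 50070; `namenode_ui_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Build the NameNode web UI URL. 50070 is the Hadoop 2.x default HTTP port
# (dfs.namenode.http-address); the post does not override it.
# namenode_ui_url is a hypothetical helper, not part of Hadoop.
namenode_ui_url() {
  echo "http://${1:-master}:${2:-50070}/"
}

namenode_ui_url

# To probe it from a machine that resolves "master":
#   curl -s -o /dev/null -w '%{http_code}\n' "$(namenode_ui_url)"
```

The SecondaryNameNode UI would be at port 50090 on master, as configured in hdfs-site.xml, so `namenode_ui_url master 50090` builds that address.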

End