01 Kafka Cluster Setup

Posted by 蓝风9


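Preface

// This is probably my first goal for this year

Lately I have had a series of environment-setup tasks to deal with, so I am recording them here.

Three Kafka nodes: 192.168.110.150, 192.168.110.151, 192.168.110.152

150 is master, 151 is slave01, and 152 is slave02.

Passwordless SSH (trusted shell) is configured between the three machines.

Single-node Kafka setup with Docker

Create docker-compose.yml as follows, then run docker-compose up -d to start Kafka; it is then ready to use.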

    version: '3.2'
    services:
      zookeeper:
        image: wurstmeister/zookeeper
        container_name: zookeeper
        ports:
          - "2181:2181"
        restart: always
      kafka:
        image: wurstmeister/kafka:2.12-2.3.0
        ports:
          - "9092:9092"
        container_name: kafka
        environment:
          - KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181
          - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://$IP:9092
          - KAFKA_LISTENERS=PLAINTEXT://:9092
          - KAFKA_ADVERTISED_HOST_NAME=192.168.235.250
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
        restart: always
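The compose file refers to `$IP`, which Compose fills in from the shell environment or from a `.env` file in the same directory as docker-compose.yml. A minimal sketch of writing that file, assuming the address to advertise is the one already used for KAFKA_ADVERTISED_HOST_NAME; the helper name `write_env_file` and the `COMPOSE_DIR` / `HOST_IP` variables are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write the .env file that docker-compose reads for variable substitution.
# write_env_file, COMPOSE_DIR and HOST_IP are hypothetical names; the default
# address is an assumption taken from KAFKA_ADVERTISED_HOST_NAME above.
write_env_file() {
  local dir="$1" ip="$2"
  printf 'IP=%s\n' "$ip" > "$dir/.env"
}

COMPOSE_DIR="${COMPOSE_DIR:-.}"
HOST_IP="${HOST_IP:-192.168.235.250}"
write_env_file "$COMPOSE_DIR" "$HOST_IP"

# Afterwards, from the same directory:
#   docker-compose up -d
```

Exporting `IP` in the shell before running docker-compose should work just as well; the `.env` file only saves retyping it.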

Kafka cluster setup

Three Kafka nodes: 192.168.110.150, 192.168.110.151, 192.168.110.152

Single ZooKeeper node: 192.168.110.250

1. Prepare the base environment

Install ZooKeeper on 192.168.110.250:

    root@ubuntu:~/HelloDocker/zookeeper# docker ps
    CONTAINER ID   IMAGE       COMMAND                  CREATED        STATUS             PORTS                                                                 NAMES
    7e2781f9a2aa   zookeeper   "/docker-entrypoint.…"   7 months ago   Up About an hour   2888/tcp, 0.0.0.0:2181->2181/tcp, 3888/tcp, 0.0.0.0:18080->8080/tcp   zk

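Install the JDK on 192.168.110.150, 192.168.110.151, and 192.168.110.152, and upload the Kafka installation package to each of them.

The installation package comes from the Apache Kafka project.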

    root@ubuntu:/usr/local/ProgramFiles# ll
    total 69916
    drwxr-xr-x  3 root  root      4096 May 13 19:48 ./
    drwxr-xr-x 11 root  root      4096 Jan  5 05:53 ../
    drwxr-xr-x  8 10143 10143     4096 Apr  7  2021 jdk1.8.0_291/
    -rw-r--r--  1 root  root  71578705 May 13 19:48 kafka_2.12-2.8.1.tgz
    root@ubuntu:/usr/local/ProgramFiles# java -version
    java version "1.8.0_291"
    Java(TM) SE Runtime Environment (build 1.8.0_291-b10)
    Java HotSpot(TM) 64-Bit Server VM (build 25.291-b10, mixed mode)
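The listing shows only the archive, while the later steps work inside an unpacked Kafka directory. A minimal sketch of the unpacking step, assuming the archive stays in /usr/local/ProgramFiles as listed; `unpack_kafka`, `ARCHIVE`, and `DEST` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: unpack the Kafka archive next to the JDK.
# unpack_kafka, ARCHIVE and DEST are hypothetical names; the default paths
# are taken from the directory listing above.
unpack_kafka() {
  local archive="$1" dest="$2"
  tar -xzf "$archive" -C "$dest"
}

ARCHIVE="${ARCHIVE:-/usr/local/ProgramFiles/kafka_2.12-2.8.1.tgz}"
DEST="${DEST:-/usr/local/ProgramFiles}"

if [ -f "$ARCHIVE" ]; then
  unpack_kafka "$ARCHIVE" "$DEST"   # creates $DEST/kafka_2.12-2.8.1/
else
  echo "archive not found: $ARCHIVE" >&2
fi
```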

2. Adjust the Kafka configuration

Log in to master, slave01, and slave02 in turn.

On each machine, go to the Kafka directory and edit config/server.properties. The settings on master:

    # The id of the broker. This must be set to a unique integer for each broker.
    broker.id=0

    # The address the socket server listens on. It will get the value returned from
    # java.net.InetAddress.getCanonicalHostName() if not configured.
    #   FORMAT:
    #     listeners = listener_name://host_name:port
    #   EXAMPLE:
    #     listeners = PLAINTEXT://your.host.name:9092
    listeners=PLAINTEXT://192.168.110.150:9092

    # Zookeeper connection string (see zookeeper docs for details).
    # This is a comma separated host:port pairs, each corresponding to a zk
    # server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".
    # You can also append an optional chroot string to the urls to specify the
    # root directory for all kafka znodes.
    zookeeper.connect=192.168.110.250:2181
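Only broker.id and listeners differ from node to node, while zookeeper.connect is the same everywhere. A sketch of applying the three values with sed instead of editing by hand, assuming GNU sed and a stock server.properties that still contains a `broker.id=` line, a `zookeeper.connect=` line, and a `listeners=` line (commented out or not); `configure_broker` and `CONF` are hypothetical names, not part of Kafka.

```shell
#!/usr/bin/env bash
# Sketch: set the three per-node values in server.properties.
# configure_broker and CONF are hypothetical names, not part of Kafka.
# Assumes GNU sed (for -i without a suffix argument).
configure_broker() {
  local file="$1" id="$2" ip="$3" zk="$4"
  sed -i \
    -e "s|^broker\.id=.*|broker.id=${id}|" \
    -e "s|^#\{0,1\}listeners=.*|listeners=PLAINTEXT://${ip}:9092|" \
    -e "s|^zookeeper\.connect=.*|zookeeper.connect=${zk}|" \
    "$file"
}

CONF="${CONF:-config/server.properties}"
if [ -f "$CONF" ]; then
  # Values for master; use 1 / 192.168.110.151 on slave01
  # and 2 / 192.168.110.152 on slave02.
  configure_broker "$CONF" 0 192.168.110.150 192.168.110.250:2181
fi
```

The listeners pattern only matches a line that starts with `listeners=` or `#listeners=`, so the commented `#advertised.listeners=` line is left alone.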

On slave01:

    broker.id=1
    listeners=PLAINTEXT://192.168.110.151:9092
    zookeeper.connect=192.168.110.250:2181

On slave02:

    broker.id=2
    listeners=PLAINTEXT://192.168.110.152:9092
    zookeeper.connect=192.168.110.250:2181

3. Start the Kafka cluster

On master, slave01, and slave02, start kafka-server:

    ./bin/kafka-server-start.sh config/server.properties

Testing the cluster
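Before running the tests, it can help to confirm that each broker is actually accepting connections on port 9092. A minimal sketch using bash's /dev/tcp redirection; `port_open` is a hypothetical helper, and the one-second timeout is an arbitrary assumption.

```shell
#!/usr/bin/env bash
# Sketch: check that each broker accepts TCP connections on port 9092.
# port_open is a hypothetical helper; the 1-second timeout is an arbitrary choice.
port_open() {
  local host="$1" port="$2"
  # /dev/tcp is a bash feature, so the check runs in an explicit bash child.
  timeout 1 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

for host in 192.168.110.150 192.168.110.151 192.168.110.152; do
  if port_open "$host" 9092; then
    echo "${host}:9092 is reachable"
  else
    echo "${host}:9092 is NOT reachable"
  fi
done
```

A reachable port only shows that a process is listening there; whether the broker has registered with ZooKeeper is a separate check.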

Testing with the Kafka scripts

    root@master:/usr/local/ProgramFiles/kafka_2.12-2.8.1# ./bin/kafka-topics.sh --create --zookeeper 192.168.110.250:2181 --topic test --partitions 3 --replication-factor 1
    Created topic test.
    root@master:/usr/local/ProgramFiles/kafka_2.12-2.8.1# ./bin/kafka-console-producer.sh --broker-list 192.168.110.150:9092,192.168.110.151:9092,192.168.110.152:9092 --topic test
    >^[[Dsdfsdfsdf
    >HelloWoerld
    >root@master:/usr/local/ProgramFiles/kafka_2.12-2.8.1# ./bin/kafka-console-consumer.sh --bootstrap-server 192.168.110.150:9092,192.168.110.151:9092,192.168.110.152:9092 --topic test --from-beginning
    HelloWoerld
    sdfsdfsdf
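In Kafka 2.8 the --zookeeper option of kafka-topics.sh and the --broker-list option of kafka-console-producer.sh still work but are deprecated in favor of --bootstrap-server. A sketch of the equivalent invocations, assuming the same brokers and install path as in the session above; the `run` wrapper, the `DRY_RUN` variable, and the two function names are hypothetical conveniences, and by default the commands are only printed rather than executed.

```shell
#!/usr/bin/env bash
# Sketch: --bootstrap-server variants of the commands used above.
# run, DRY_RUN, describe_topic and start_producer are hypothetical names.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/ProgramFiles/kafka_2.12-2.8.1}"
BROKERS="192.168.110.150:9092,192.168.110.151:9092,192.168.110.152:9092"

# With DRY_RUN=1 (the default here) a command is printed instead of executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Show partitions, leaders and replicas of a topic.
describe_topic() {
  run "$KAFKA_HOME/bin/kafka-topics.sh" --bootstrap-server "$BROKERS" --describe --topic "$1"
}

# Open an interactive console producer for a topic.
start_producer() {
  run "$KAFKA_HOME/bin/kafka-console-producer.sh" --bootstrap-server "$BROKERS" --topic "$1"
}

describe_topic test
start_producer test
```

Setting DRY_RUN=0 runs the commands for real. Since the topic has three partitions and ordering is only guaranteed within a partition, the consumer may print messages in a different order than they were typed, which is likely why HelloWoerld appears first above.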

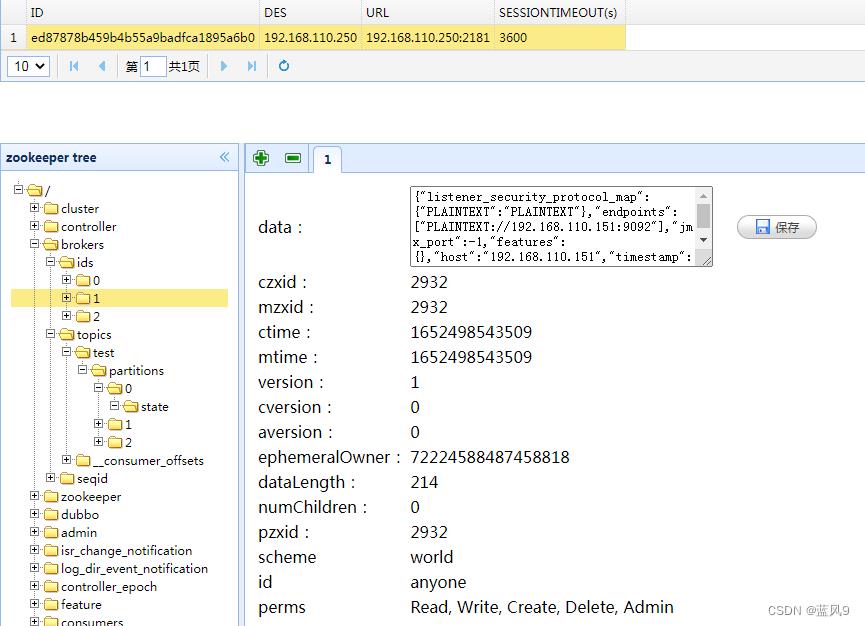

Checking the metadata in ZooKeeper
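One way to look at what the brokers registered is the zookeeper-shell.sh script that ships with Kafka. A sketch, assuming the ZooKeeper address and install path used earlier; the `zk` wrapper and `DRY_RUN` variable are hypothetical conveniences, and by default the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: inspect Kafka's znodes with the zookeeper-shell.sh bundled with Kafka.
# zk and DRY_RUN are hypothetical names; the address comes from the setup above.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/ProgramFiles/kafka_2.12-2.8.1}"
ZK="192.168.110.250:2181"

# With DRY_RUN=1 (the default here) the command line is printed, not executed.
zk() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$KAFKA_HOME/bin/zookeeper-shell.sh $ZK $*"
  else
    "$KAFKA_HOME/bin/zookeeper-shell.sh" "$ZK" "$@"
  fi
}

zk ls /brokers/ids       # ids of the brokers currently registered
zk get /brokers/ids/0    # registration data of the broker with id 0
zk ls /brokers/topics    # names of the topics known to the cluster
```

If all three brokers joined, the first command should list the ids configured in server.properties.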

Testing with Offset Explorer

End
